Neutron-Linux-Bridge-Plugin

- Git Branch: https://github.com/openstack/neutron/tree/master/neutron/plugins/ml2/drivers/linuxbridge

- Created: Dec 24 2011

Update: This is now merged.

Neutron L2 Linux Bridge Plugin


Abstract

The proposal is to implement a Neutron (formerly Quantum) L2 plugin that configures Linux Bridges to realize Neutron's Network, Port, and Attachment abstractions. Each network would map to an independent VLAN managed by the plugin. On each host, a VLAN sub-interface would be created for the network, and a Linux Bridge would be created with that sub-interface enslaved to it. The VIFs (VM interfaces) on that host that belong to the network would then be plugged into that bridge. To a certain extent, this effort achieves the goal of a Basic VLAN Plugin (as discussed at the Essex Summit) for systems that support Linux Bridge.
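To make the mapping between the abstractions concrete, the following is a minimal in-memory sketch, not the plugin's actual data model: a network carries its VLAN ID, a port belongs to a network, and the attachment is the UUID of the VIF plugged into the port. The class and function names (`Network`, `Port`, `plug_interface`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Network:
    # A Neutron network; the plugin maps each network to its own VLAN.
    net_id: str
    vlan_id: int


@dataclass
class Port:
    # A port on a network; `attachment` holds the plugged VIF's UUID, if any.
    port_id: str
    net_id: str
    attachment: Optional[str] = None


def plug_interface(ports: Dict[str, Port], port_id: str, vif_uuid: str) -> None:
    """Record the VIF-to-port association (the attachment) for one port."""
    port = ports[port_id]
    if port.attachment is not None:
        raise ValueError(f"port {port_id} already has attachment {port.attachment}")
    port.attachment = vif_uuid


# Usage: one network on VLAN 100 with a single port, then plug a VIF into it.
net = Network(net_id="net-a", vlan_id=100)
ports = {"port-1": Port(port_id="port-1", net_id=net.net_id)}
plug_interface(ports, "port-1", "0123456789abcdef")
```

A real plugin would persist these records in its database; the sketch keeps them in a dictionary only to show the relationships.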

Requirements

Support for Linux Bridge (the `brctl` utility, provided by the bridge-utils package).

Design

The plugin manages VLAN allocation. The actual network artifacts (VLAN sub-interfaces and bridges) are created by an agent (daemon) running on each host where the Neutron network has to be realized. This agent-based approach is similar to the one employed by the OpenVSwitch plugin.
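The blueprint does not say how the plugin chooses a VLAN for a new network. One plausible approach, sketched below under that assumption, is a pool over a configured ID range that hands out the lowest free ID and takes it back when the network is deleted. `VlanPool`, `vlan_min`, and `vlan_max` are hypothetical names, and the state is kept in memory rather than in the plugin's database.

```python
from typing import Dict, Set


class VlanPool:
    """Hands out one VLAN ID per network from a configured range (in memory)."""

    def __init__(self, vlan_min: int, vlan_max: int) -> None:
        # 802.1Q usable VLAN IDs are 1..4094 (0 and 4095 are reserved).
        if not 1 <= vlan_min <= vlan_max <= 4094:
            raise ValueError("VLAN IDs must lie in 1..4094")
        self._free: Set[int] = set(range(vlan_min, vlan_max + 1))
        self._by_net: Dict[str, int] = {}

    def allocate(self, net_id: str) -> int:
        # Idempotent: a network that already has a VLAN keeps it.
        if net_id in self._by_net:
            return self._by_net[net_id]
        if not self._free:
            raise RuntimeError("VLAN range exhausted")
        vlan_id = min(self._free)  # deterministic choice: lowest free ID
        self._free.remove(vlan_id)
        self._by_net[net_id] = vlan_id
        return vlan_id

    def release(self, net_id: str) -> None:
        # Return the network's VLAN ID to the pool when the network is deleted.
        self._free.add(self._by_net.pop(net_id))


# Usage: a two-ID range is enough to show allocation, exhaustion, and reuse.
pool = VlanPool(100, 101)
first = pool.allocate("net-a")
second = pool.allocate("net-b")
```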

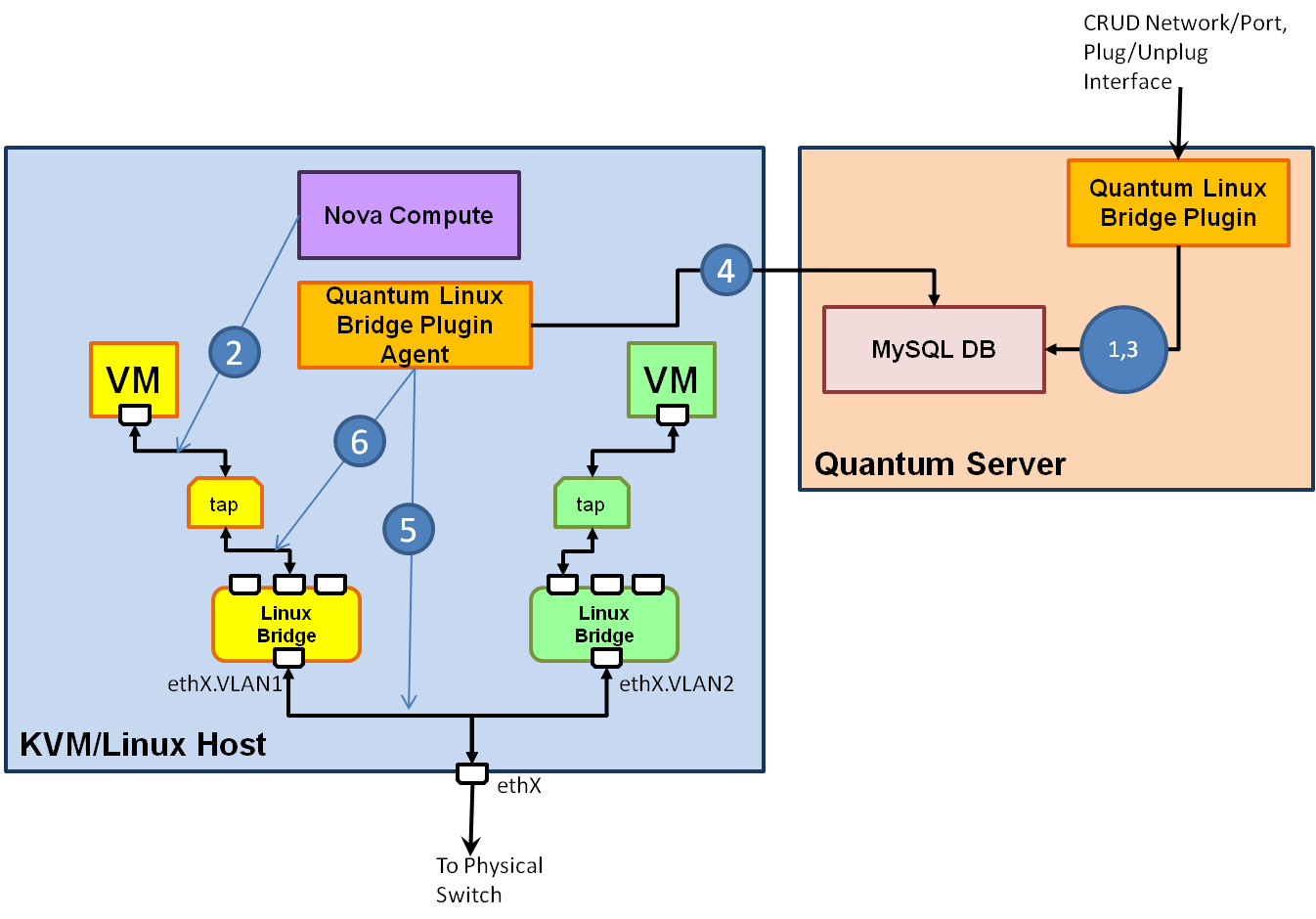

The diagram above illustrates how the plugin and the agent work together when creating networks and ports and when plugging a VIF into a Neutron port.

- The tenant requests the creation of a Neutron network and a port on that network. The plugin creates a network resource and assigns a VLAN to this network. It then creates a Port resource and associates it with this network.

- The tenant requests the instantiation of a VM. Nova-compute invokes the Linux Bridge VIF driver provided with this plugin (which is different from the Linux Bridge VIF driver that comes packaged with Nova), and this driver creates a tap device. Nova-compute then instantiates the VM so that the VM's VIF is associated with the tap device.

- The tenant requests that the above VIF be plugged into the Neutron port created earlier. The plugin records the association between the VIF and the port in the DB.

- The agent daemon on each host in the network picks up the VIF-port association created in the previous step.

- If a tap device corresponding to that VIF exists on that host, the agent creates the VLAN sub-interface and the Linux Bridge on that host, if they do not already exist.

- Note: By convention, the tap device is named using the first 11 characters of the VIF UUID, so the agent can derive the tap device name from the VIF UUID.

- The agent then enslaves the tap device to the Linux Bridge. The VM is now on the Neutron network.
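The agent's per-host decision for one VIF-port binding can be sketched as a pure function that returns the commands to run, which keeps it easy to check. This is an illustration under stated assumptions, not the agent's actual code: the physical NIC `eth1`, the `tap` prefix in front of the 11 UUID characters, and the `brq` bridge prefix are all assumed names, and the commands use standard `ip link` (iproute2) and `brctl` syntax.

```python
from typing import List, Set

PHYS_INTERFACE = "eth1"  # assumption: host NIC that carries the tagged VLAN traffic
TAP_PREFIX = "tap"       # assumption: prefix placed before the 11 UUID characters
BRIDGE_PREFIX = "brq"    # hypothetical bridge-naming convention


def tap_name(vif_uuid: str) -> str:
    # Tap device name derived from the first 11 characters of the VIF UUID.
    return TAP_PREFIX + vif_uuid[:11]


def bridge_name(net_id: str) -> str:
    return BRIDGE_PREFIX + net_id[:11]


def commands_for_binding(net_id: str, vlan_id: int, vif_uuid: str,
                         local_links: Set[str],
                         bridge_members: Set[str]) -> List[List[str]]:
    """Commands one host's agent would run for a single VIF-to-port binding.

    local_links: names of all network devices present on this host.
    bridge_members: devices already enslaved to this network's bridge.
    Returns [] when the VIF's tap device is not on this host.
    """
    tap = tap_name(vif_uuid)
    if tap not in local_links:
        return []  # the VIF lives on another host; nothing to do here
    vlan_dev = f"{PHYS_INTERFACE}.{vlan_id}"
    bridge = bridge_name(net_id)
    cmds: List[List[str]] = []
    if vlan_dev not in local_links:  # VLAN sub-interface, created once per host
        cmds.append(["ip", "link", "add", "link", PHYS_INTERFACE, "name", vlan_dev,
                     "type", "vlan", "id", str(vlan_id)])
        cmds.append(["ip", "link", "set", "dev", vlan_dev, "up"])
    if bridge not in local_links:  # may already exist, e.g. from the gateway driver
        cmds.append(["brctl", "addbr", bridge])
        cmds.append(["ip", "link", "set", "dev", bridge, "up"])
    if vlan_dev not in bridge_members:
        cmds.append(["brctl", "addif", bridge, vlan_dev])
    if tap not in bridge_members:
        cmds.append(["brctl", "addif", bridge, tap])
    return cmds
```

Because every step checks current state first, running the function again after its commands have been applied yields an empty list, which suits an agent that re-polls bindings periodically.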

Integration with Nova

- A nova-compute VIF driver is provided. It is very similar to the one used by the OpenVSwitch plugin and is required on every compute node.

- An extension to the Linux network driver (linux_net.py) is also required in order to plug the gateway. Like the VIF driver, this driver creates a tap device, in this case for the Gateway interface. It also performs some additional processing for the Gateway IP, as explained below. This linux network/gateway driver is required on every nova-network host.
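Both drivers start by creating a tap device whose name the agent can later derive from the interface UUID. The sketch below shows that step as a list of standard `ip tuntap` / `ip link` commands; it assumes the name is `tap` plus the first 11 UUID characters, and `tap_device_name` / `tap_plug_commands` are hypothetical helpers rather than the drivers' real methods. The optional MAC reflects the Gateway section, where the gateway tap receives a MAC address provided by Nova; this sketch simply leaves it unset otherwise.

```python
from typing import List, Optional

IFNAMSIZ = 16  # Linux interface-name buffer size, including the trailing NUL


def tap_device_name(vif_uuid: str) -> str:
    # Assumed convention: "tap" + first 11 characters of the UUID (14 chars).
    name = "tap" + vif_uuid[:11]
    if len(name) >= IFNAMSIZ:  # names may hold at most 15 visible characters
        raise ValueError(f"interface name too long: {name}")
    return name


def tap_plug_commands(vif_uuid: str, mac: Optional[str] = None) -> List[List[str]]:
    """Commands a Nova-side driver could run to create and bring up a tap device."""
    dev = tap_device_name(vif_uuid)
    cmds = [["ip", "tuntap", "add", "dev", dev, "mode", "tap"]]
    if mac is not None:  # e.g. the gateway tap, which carries a Nova-provided MAC
        cmds.append(["ip", "link", "set", "dev", dev, "address", mac])
    cmds.append(["ip", "link", "set", "dev", dev, "up"])
    return cmds
```

Keeping the prefix plus 11 characters at 14 characters leaves the name within the kernel's 15-character limit.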

Handling the Gateway Interface

Creating and initializing the Gateway interface involves some complexity. The following operations are performed:

- The aforementioned Gateway driver first creates a tap device and associates a MAC address provided by Nova with this device. This happens in the "plug" hook of the driver and executes within Nova's process space. Once this plug method exits, the QuantumManager associates a Gateway IP address with this tap device. However, since no VM plugs into this tap device, the tap device will not respond to this IP. To overcome this, the plug method also creates the bridge for this network on this host right away and associates the same Gateway IP address with the bridge. The bridge then responds to the Gateway IP.

- Note: The bridge is also assigned the same MAC address as the Gateway tap device. Although the Gateway tap device does not itself respond to the Gateway IP, it is still required: for the bridge to work correctly, at least one device on the bridge should have the same MAC address as the bridge. The Gateway tap device satisfies this requirement.

- The QuantumManager (the network manager within Nova that interfaces with Neutron) plugs the gateway tap device created earlier into a port on the network. This results in a logical binding between the gateway tap device's interface ID and the Neutron port/network (handled by the LinuxBridge plugin). This step executes in the Neutron server's process space.

- The agent running on the relevant host picks up the logical binding and the presence of the gateway tap device. It enslaves the gateway tap device to the relevant bridge (note that in this case the bridge was already created by the linux network/gateway driver). This step executes in the agent's process space.

- The gateway initialization is done in the "initialize_gateway" hook of the linux network driver (linux_net.py). This, among other things, involves associating the DHCP IP address with the Gateway tap device and sending a gratuitous ARP for the gateway IP. This step executes in nova's process space.
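The Nova-side parts of the sequence above can be sketched as two command lists, one for the "plug" hook and one for the gratuitous ARP in "initialize_gateway". This is an illustration under several assumptions, not the driver's code: the `tap` and `brq` naming prefixes are assumed, the function names are hypothetical, the VLAN sub-interface is left to the agent, the DHCP-address step of "initialize_gateway" is omitted, and the gratuitous ARP is assumed to go out on the bridge because the bridge is the device that answers for the gateway IP. The `arping` flags are those of the iputils implementation.

```python
from typing import List

TAP_PREFIX = "tap"     # assumed tap-naming prefix before the 11 UUID characters
BRIDGE_PREFIX = "brq"  # hypothetical bridge-naming prefix


def gateway_plug_commands(net_id: str, gw_iface_id: str, gw_mac: str,
                          gw_cidr: str) -> List[List[str]]:
    """Commands for the gateway driver's plug hook (runs in Nova's process)."""
    tap = TAP_PREFIX + gw_iface_id[:11]
    bridge = BRIDGE_PREFIX + net_id[:11]
    return [
        # 1. Gateway tap device carrying the MAC address supplied by Nova.
        ["ip", "tuntap", "add", "dev", tap, "mode", "tap"],
        ["ip", "link", "set", "dev", tap, "address", gw_mac],
        ["ip", "link", "set", "dev", tap, "up"],
        # 2. Create the network's bridge right away with the same MAC; once the
        #    agent enslaves the tap, a bridge member shares the bridge's MAC.
        ["brctl", "addbr", bridge],
        ["ip", "link", "set", "dev", bridge, "address", gw_mac],
        # 3. Put the gateway IP on the bridge, since nothing answers on the tap.
        ["ip", "addr", "add", gw_cidr, "dev", bridge],
        ["ip", "link", "set", "dev", bridge, "up"],
    ]


def gateway_init_commands(net_id: str, gw_ip: str) -> List[List[str]]:
    """Gratuitous ARP for the gateway IP (initialize_gateway hook, Nova's process)."""
    bridge = BRIDGE_PREFIX + net_id[:11]
    # iputils arping: -U sends an unsolicited (gratuitous) ARP, -c 1 sends one packet.
    return [["arping", "-U", "-I", bridge, "-c", "1", gw_ip]]


# Usage with illustrative values (network UUID, interface UUID, MAC, gateway CIDR).
plug = gateway_plug_commands("5f1e7b2c-aaaa-bbbb", "0123456789abcdef",
                             "fa:16:3e:00:00:01", "10.0.0.1/24")
init = gateway_init_commands("5f1e7b2c-aaaa-bbbb", "10.0.0.1")
```

Between these two lists, the agent step described above enslaves the gateway tap to the already-existing bridge.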

(Contact: Sumit Naiksatam, Salvatore Orlando)