NetworkServiceDiablo

Network Service Proposal for Diablo

Overview

This proposal is the result of discussions among several community members who had independently submitted blueprints for networking capabilities in OpenStack. Those proposals included:

(List proposals here)

This page describes the resulting target services to be delivered in experimental form in the Diablo timeframe.

Network Services Model

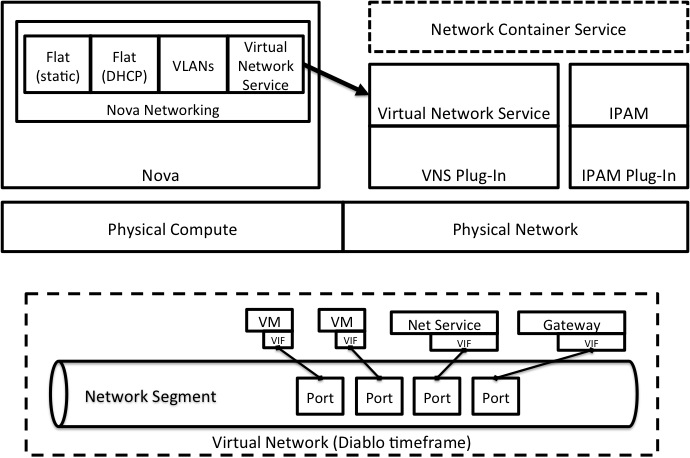

The diagram above describes two things:

- The software services architecture, and the relationship of the Virtual Network Service and IPAM Service to Nova

- The logical network architecture elements used to describe a virtual network topology in the Diablo timeframe

Network Services Actors

Nova Refactoring and Responsibilities

It is proposed that Nova networking be refactored in two ways:

- Combine networking capabilities in a more contained model, but do not remove support for existing options

- Add support for the Virtual Network Service to the existing flat (static), flat (DHCP), and VLAN options

Virtual Networking Service

(This project needs a name. "Quantum" was proposed.)

The Virtual Networking Service will have the following responsibilities for Diablo:

- Provide API for managing L2 network abstraction (“virtual networks”?)

- Provide authentication, verification, etc.

- Provide error handling

- Decompose request (if necessary)

- Dispatch requests to appropriate plug-in(s)

- Resource consumption look-up service

- Plug-in capability directory

Virtual Networking Service Plug-in

Plug-ins to the VNS will have the following responsibilities for Diablo:

- Receive and verify requests from L2 Service

- Map logical abstractions to actual physical/virtual service implementation/configuration

- Advertise capabilities to L2 Service
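The split of responsibilities between the service and its plug-ins can be sketched as follows. This is only an illustration under stated assumptions: the class names, the `capabilities()` and `create_network()` methods, and the VLAN numbering are all hypothetical and do not come from the proposal.

```python
from abc import ABC, abstractmethod


class VnsPlugin(ABC):
    """Hypothetical plug-in contract; these names are not from the proposal."""

    @abstractmethod
    def capabilities(self):
        """Advertise what this plug-in can implement, e.g. {"vlan"}."""

    @abstractmethod
    def create_network(self, tenant_id, name):
        """Verify the request and map it onto a concrete configuration."""


class VlanPlugin(VnsPlugin):
    """Toy plug-in that implements each logical network as one VLAN."""

    def __init__(self, first_vlan=100):
        self._next_vlan = first_vlan

    def capabilities(self):
        return {"vlan"}

    def create_network(self, tenant_id, name):
        # Plug-in side verification of the request.
        if not tenant_id or not name:
            raise ValueError("tenant_id and name are required")
        vlan_id = self._next_vlan
        self._next_vlan += 1
        return {"tenant": tenant_id, "name": name, "vlan": vlan_id}


class VirtualNetworkService:
    """Keeps a capability directory and dispatches requests to plug-ins."""

    def __init__(self):
        self._directory = {}  # capability name -> plug-in

    def register(self, plugin):
        for capability in plugin.capabilities():
            self._directory[capability] = plugin

    def create_network(self, tenant_id, name, capability):
        plugin = self._directory.get(capability)
        if plugin is None:
            raise LookupError(f"no plug-in advertises {capability!r}")
        return plugin.create_network(tenant_id, name)


service = VirtualNetworkService()
service.register(VlanPlugin())
network = service.create_network("tenant-a", "web-tier", "vlan")
print(network)  # {'tenant': 'tenant-a', 'name': 'web-tier', 'vlan': 100}
```

Authentication and request decomposition are left out of the sketch; in this layout they would sit in `VirtualNetworkService` before the dispatch step.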

IPAM Service

- today: Nova just hands out ip addresses from predefined blocks

- network block subdivision, carving up large blocks into smaller subnets

- DHCP (should provide at least dnsmasq equivalent and plug-in support)

- Consumers of IPAM service - OpenStack services (nova, LBaaS, Layer 2, etc.)

- IPv4 and IPv6

- multi-tenant (overlapping ip ranges)

- public address space

- private (overlapping) address space

- must be able to associate IPs to tenant/project and to network segments

- should store IP address, default gateway, subnet, and DHCP options (DNS server, NTP, etc.)

- policy - allocation - rules for how a block is used (reserve certain addresses for certain purposes, etc)

- Issue: how do we handle multiple IP subnets on the same L2 segment?

- policies and priorities?

- Issue: static IPs?

- nova reserves, then holds?

- whether the consumer gets to specify the IP to acquire

- Needs to support floating IPs

- IPAM service is a repository, not an "actor" (it stores info and answers queries; it doesn't actively push it out)
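Several of the items above (block subdivision, reserved addresses, overlapping tenant ranges, and consumer-specified static IPs) can be illustrated with Python's standard `ipaddress` module. This is a minimal sketch, not a proposed design: the `SubnetAllocator` class, the "first host is the gateway" policy, and the tenant names are assumptions made for the example. It materializes the host list, so it only suits small IPv4 subnets.

```python
import ipaddress


class SubnetAllocator:
    """Hypothetical per-tenant pool for one subnet (illustration only)."""

    def __init__(self, subnet, reserved=1):
        hosts = list(subnet.hosts())
        self.subnet = subnet
        # Assumed policy: the first usable address is the default gateway,
        # and the first `reserved` addresses are never handed out.
        self.gateway = hosts[0]
        self._free = hosts[reserved:]
        self.allocated = set()

    def allocate(self, requested=None):
        if requested is None:
            if not self._free:
                raise RuntimeError("subnet exhausted")
            address = self._free.pop(0)
        else:
            # The consumer asks for a specific (static) address.
            address = ipaddress.ip_address(requested)
            if address not in self._free:
                raise ValueError(
                    f"{address} is reserved, in use, or outside {self.subnet}")
            self._free.remove(address)
        self.allocated.add(address)
        return address


# Carve one large block into /24 subnets.
block = ipaddress.ip_network("10.0.0.0/16")
subnets = list(block.subnets(new_prefix=24))

# Two tenants use the same private range without conflict because
# each tenant has its own pool.
pools = {
    "tenant-a": SubnetAllocator(subnets[0]),
    "tenant-b": SubnetAllocator(subnets[0]),
}
first_a = pools["tenant-a"].allocate()
static_b = pools["tenant-b"].allocate(requested="10.0.0.2")
print(len(subnets), pools["tenant-a"].gateway, first_a, static_b)
# 256 10.0.0.1 10.0.0.2 10.0.0.2
```

Keeping pools keyed by tenant matches the "repository" role described above: the allocator only records and answers, and leaves pushing configuration (for example to DHCP) to a plug-in.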

IPAM Service Plug-in

- needs to support plug-ins for DHCP functionality

- need to understand whether there are requirements for plug-ins for broader IP services

Container Service

The container service (if targeted in Diablo timeframe) will:

- Provide an API for managing a container abstraction

- Container represents a logical group of resources to be managed as a unit

- Initial focus to be on networks and net services

- Provide catalog of container “flavors”

- Provide way to define container “flavors”

- Provide way to instantiate instance of container “flavor”

- Provide way to build container definition dynamically

- Provide mechanism for modifying container instance at run-time.

- Decompose requests and communicate with other network services as required.

Virtual Network Components

Network Segment

The central element of the virtual network topology for the Diablo timeframe is the network segment:

- Represents a single Layer 2 network subnet

- Provides access via “Ports”

- Any device connected to a “port” can communicate with any other device on the segment

Virtual Interface (VIF)

The VIF represents an interface (like a virtual NIC) from a device connecting to the network (e.g., a VM, network service, or gateway).

Port

A port represents a connection into the network segment. Ports can be individually configured, and can be connected to any valid VIF. There is, however, a 1:1 relationship between a VIF and a Port when that VIF is "attached" to the network segment.
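The relationship between segments, ports, and VIFs can be written down as a small data model. The sketch below is an assumption-laden illustration: the class and method names (`NetworkSegment`, `attach`, `peers`) are invented for the example, and the only rule it enforces is the 1:1 VIF-to-port attachment described above.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Port:
    port_id: str
    vif_id: Optional[str] = None  # None means nothing is attached


@dataclass
class NetworkSegment:
    """Hypothetical model of one L2 segment and its ports."""
    name: str
    ports: Dict[str, Port] = field(default_factory=dict)

    def create_port(self, port_id):
        self.ports[port_id] = Port(port_id)
        return self.ports[port_id]

    def attach(self, port_id, vif_id):
        port = self.ports[port_id]
        # Enforce the 1:1 relationship in both directions.
        if port.vif_id is not None:
            raise ValueError(f"port {port_id} already has a VIF attached")
        if any(p.vif_id == vif_id for p in self.ports.values()):
            raise ValueError(f"VIF {vif_id} is already attached to this segment")
        port.vif_id = vif_id

    def detach(self, port_id):
        self.ports[port_id].vif_id = None

    def peers(self, vif_id):
        """Every other attached VIF; all of them are reachable on the segment."""
        return sorted(p.vif_id for p in self.ports.values()
                      if p.vif_id is not None and p.vif_id != vif_id)


segment = NetworkSegment("web-tier")
segment.create_port("p1")
segment.create_port("p2")
segment.attach("p1", "vm1-eth0")
segment.attach("p2", "vm2-eth0")
print(segment.peers("vm1-eth0"))  # ['vm2-eth0']
```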

Other items proposed for discussion (captured by Dan W)

L2 Service Discussion Notes (Tuesday 4/26/2011)

Tenant/Logical API

Basic abstractions:

- Interfaces (exposed by interface services, e.g., nova, firewall, load balancer, etc.)

- Networks (exposed by the L2 service)

- Ports, each of which is associated with a Network (exposed by the L2 service)

Notes:

- Tenants can call the L2 service to "attach" an Interface to a Port (likely a "PUT" operation on the Port object). The semantics of this attachment is a "virtual cable" between an interface device in the interface service (e.g., a VM NIC) and a port on a "logical network".

- It was mentioned that a service like nova could potentially act as a client to this API as well, for example, automatically creating and attaching a VM's public NIC to a shared public network.

- The concept of "flavors" for an L2 network was left open. This may be more appropriate for a higher-level container service like donabe, but some people expressed concern over how a provider could then work with a plugin that has multiple ways of implementing an L2 segment (e.g., VLAN vs. overlay).

The Network Edge

- Must be able to describe the connection point between a switch managed by the L2 network service and an interface service.

- This can be thought of as a tuple of (interface-id, interface-location), where interface-id is a logical interface identifier used in the Tenant/Logical API, while interface-location is the description of the interface's current location that can be understood by both the compute service and the L2 network service (i.e., Josh's notion of a shared namespace).

- The format of the interface-location information will differ based on the technology used for the interface service (XenServer vs. KVM vs. VMware ESX vs. VMware with vDS).

- A deployment of OpenStack must make sure that it has an L2 network service that understands all of the formats that will be used by the interface services; otherwise the L2 service will not actually be able to connect all interfaces. There should be a mechanism/handshake to check this at setup.

- The exact mechanism for communicating this info is still undefined. Some mentioned an explicit directory for the mapping, others an admin API to support queries.
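One way to picture the edge tuple and the setup-time handshake is the sketch below. It is purely illustrative: the notes leave the actual mechanism undefined, so the `EdgeBinding` type, the extra `location_format` tag, the format names, and the location strings are all assumptions made for this example.

```python
from typing import NamedTuple


class EdgeBinding(NamedTuple):
    """(interface-id, interface-location), plus an assumed format tag."""
    interface_id: str        # logical ID used in the Tenant/Logical API
    location_format: str     # hypothetical tag, e.g. "kvm" or "xenserver"
    interface_location: str  # opaque string in that format


def unsupported_formats(interface_service_formats, l2_service_formats):
    """Setup-time check: formats the L2 service cannot interpret."""
    return sorted(set(interface_service_formats) - set(l2_service_formats))


# Made-up location strings; real formats would be defined per technology.
bindings = [
    EdgeBinding("vif-1", "kvm", "host-12:tap3"),
    EdgeBinding("vif-2", "xenserver", "host-40:vif7.0"),
]
missing = unsupported_formats(
    {b.location_format for b in bindings},
    {"kvm"},  # formats this hypothetical L2 plugin understands
)
print(missing)  # ['xenserver']
```

A non-empty result at setup would signal that some interfaces could never be connected by the deployed L2 service.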

Plugin Interface

- The L2 Network plugin interface will map to the Tenant/Logical API and any Admin APIs.

- What exists "above" the plugin would be code to implement the API parsing, perform basic validation, enforce API limits as required by the service provider, and authorize the request using Keystone.

- If there is a desire to utilize multiple plugins, one option is a "meta-plugin" that dispatches calls to the appropriate plugin based on some defined policy.

- There is nothing to stop a plugin from potentially talking to multiple types of devices (e.g., a plugin that talks to Cisco physical switches and to Open vSwitch). In this sense, a plugin is different from a "driver", for which there is usually a one-to-one correspondence between a driver and the "type" of thing it talks to. (Note: this discussion happened after some people headed to lunch, so we may not have full consensus.)
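The "meta-plugin" option can be sketched as an object that exposes the same call as an ordinary plugin and delegates according to a policy function. Everything named here (`StubPlugin`, `MetaPlugin`, the `by_tenant` policy, and the tenant naming rule) is hypothetical and only meant to show the shape of the idea.

```python
class StubPlugin:
    """Stand-in for a real plugin; only records which backend was used."""

    def __init__(self, label):
        self.label = label

    def create_network(self, tenant_id, name):
        return f"{self.label}:{tenant_id}/{name}"


class MetaPlugin:
    """Looks like a plugin to the layer above, but only dispatches."""

    def __init__(self, plugins, policy):
        self._plugins = dict(plugins)  # backend name -> plugin
        self._policy = policy          # callable returning a backend name

    def create_network(self, tenant_id, name):
        target = self._policy(tenant_id, name)
        return self._plugins[target].create_network(tenant_id, name)


def by_tenant(tenant_id, name):
    # Assumed policy: lab tenants get overlays, everyone else gets VLANs.
    return "overlay" if tenant_id.startswith("lab-") else "vlan"


meta = MetaPlugin(
    {"vlan": StubPlugin("vlan"), "overlay": StubPlugin("overlay")},
    by_tenant,
)
print(meta.create_network("lab-7", "test-net"))  # overlay:lab-7/test-net
print(meta.create_network("acme", "web-tier"))   # vlan:acme/web-tier
```

Because the meta-plugin has the same interface as the plugins it wraps, the API layer above would not need to know whether one or several plugins are in use.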

Network & Scheduling

- Did not explore in detail, but there seems to be a need to let the L2 plugin define a notion of a "zone" within which it can create L2 networks. For example, if VLANs were being used, this might map to a data center "pod" that is a single L2 domain.

- Other reasons to have networking inform scheduling were also raised, for example, the desire to be able to meet the bandwidth guarantee of a host.

- This topic likely deserves its own dedicated discussion with the appropriate folks in nova.

Other

- Tentative name for the L2 service is still "quantum". The Network Container concept is "donabe".

- Discussed whether L2 bridging was part of the L2 service or part of a higher-level service that would "plug" into an L2 network. A final decision was not reached, as there were fairly strong opinions on both sides. We should strive to finalize this before the end of the summit.