Manila/design/manila-mitaka-data-replication

Contents

- 1 Manila Disaster Recovery / Data Replication Design

- 2 FAQs

- 3 Unanswered Questions

- 4 Examples

- 5 Implementation Progress

- 6 Older Design Pages

Manila Disaster Recovery / Data Replication Design

Introduction

Replicated shares will be implemented in Manila without adding any new services or any new drivers. The code for creating replicated shares and for adding/removing replicas and other replication-related operations will all go into the existing share drivers. The most significant change required to allow this will be that share drivers will be responsible for communicating with ALL storage controllers necessary to achieve any replication tasks, even if that involves sending commands to other storage controllers in other Availability Zones (or AZs).

While we can't know how every storage controller works and how each vendor will implement replication, this approach should give driver writers all the flexibility needed to implement what they need with minimal added complexity in the Manila core. There are already examples of drivers reading config sections for other drivers when an operation requires communicating with two backends. When the Manila Share Manager requests an operation on a backend, it is expected to pass along all the necessary backend details for share replicas that exist in other AZs.

Intra-AZ replication is supported, but ideally AZs should be treated as failure domains, so this feature is most useful in inter-AZ replication use cases.

Supported Replication Types

There are 3 styles of Data Replication that we would like to support in the long run:

- writable - Amazon EFS-style synchronously replicated shares where all replicas are writable. Promotion is not supported and not needed.

- readable - Mirror-style replication with a primary (writable) copy and one or more secondary (read-only) copies which can become writable after a promotion of the secondary.

- dr (for Disaster Recovery) - Generalized replication with secondary copies that are inaccessible until one of the secondary copies is promoted.

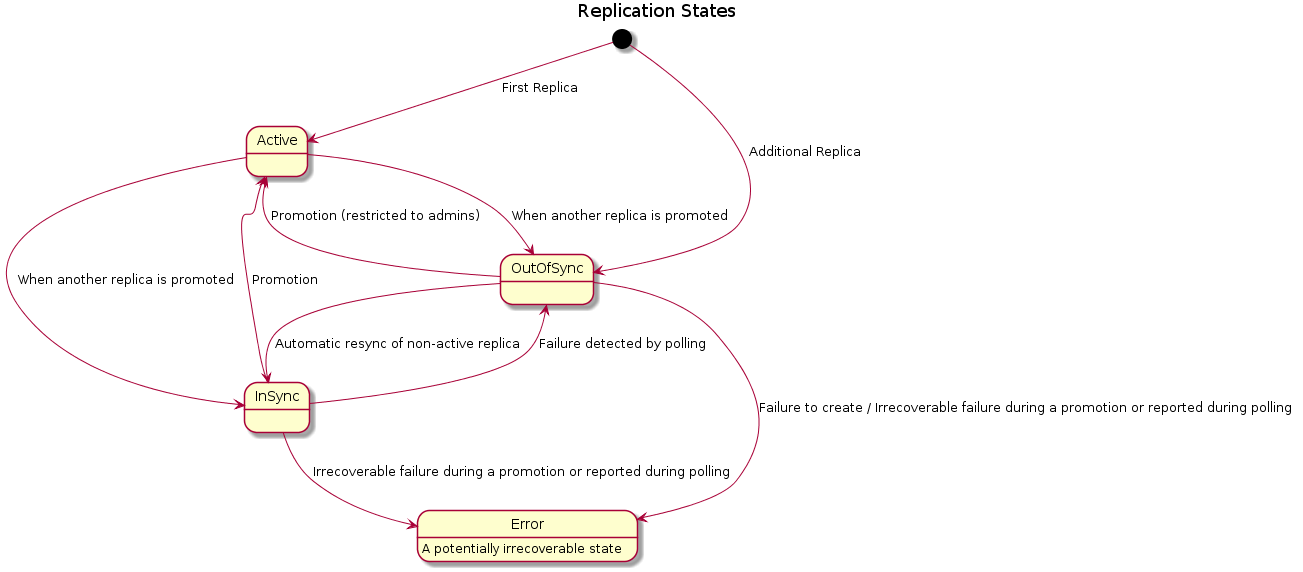
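The three styles can be captured as a small enumeration. This is a sketch only: `ReplicationType`, `supports_promotion` and `secondary_access` are hypothetical names, and only the string values ("writable", "readable", "dr") come from this design.

```python
from enum import Enum
from typing import Optional


class ReplicationType(str, Enum):
    """The three replication styles (class name is hypothetical)."""
    WRITABLE = "writable"  # every replica is active and writable
    READABLE = "readable"  # one writable primary, read-only secondaries
    DR = "dr"              # secondaries inaccessible until promoted


def supports_promotion(replication_type: str) -> bool:
    """Promotion only makes sense when there is a single active replica."""
    return ReplicationType(replication_type) is not ReplicationType.WRITABLE


def secondary_access(replication_type: str) -> Optional[str]:
    """Client access to additional replicas: 'rw', 'ro' or None (none)."""
    return {
        ReplicationType.WRITABLE: "rw",
        ReplicationType.READABLE: "ro",
        ReplicationType.DR: None,
    }[ReplicationType(replication_type)]
```

Passing an unknown style raises `ValueError` from the enum constructor, which mirrors the rule that `replication_type` must be one of the three styles.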

Replica States

Each replica has a replica_state which has 4 possible values:

- active - All writable replicas are active.

- in_sync - Passive replica which is up to date with the active replica, and can be promoted to active.

- out_of_sync - Passive replica which has gone out of date, or new replica that is not yet up to date.

- error - Either the scheduler failed to schedule this replica or some irrecoverable damage occurred during promotion of a non-active replica. Drivers may also set the replica_state to error (through the periodic replica_state update) if irrecoverable damage to the replica is discovered at any point during its existence.

In addition, replication_change is a new transient status (not a replica_state) triggered by a change of the active replica. Access to the share is cut off while in this state.

Promotion

For readable and dr styles, we refer to the task of switching a non-active replica with the active replica as promotion. For the writable style of replication, promotion does not make sense since all replicas are active (writable) at all times. Promotion of replicas with replica_state set to out_of_sync or error may be unsupported by the backend. However, we would like to allow the action as an administrator feature, and backends may honor such an attempt if possible.

When multiple replicas exist, multiple replication relationships between shares may need to be redefined on the backend. If the driver fails at this stage, the replicas may be left in an inconsistent state. The manager will set the replica_state and status of all replica instances to error. Recovery from this state requires administrator intervention.

User Workflows

- Administrators can create a share type with extra-spec replication_type, specifying the style of replication the backend supports.

- Users can use the share type to create a new share that allows/supports replication.

- A replicated share always starts out with one replica instance, the share itself. This should not be confused with the share already having a replica. The user can verify whether the share has any replicas by requesting the details of the share.

Creating a replica

- POST to /share-replicas

The user has to specify the name/ID of the share to be replicated, an availability zone for the replica to exist in and, optionally, a share_network_id. According to the existing design, replicas of a share cannot exist in the same availability zone as the share itself.

- A newly created share_replica starts out in out_of_sync state and might transition to in_sync state when the driver reports this state.

Listing and showing replicas

- GET from /share-replicas

User can list all replicas and verify their status and replica_state.

- GET from /share-replicas?share_id=<share_id>

User can list replicas for a particular share.

- GET from /share-replicas/<id>

User can view details of a replica.
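The listing calls above, together with the filtering described under System Workflows (replica_state in {in_sync, active, out_of_sync, error}, optional share_id, limits and offsets), can be sketched over plain dicts. `list_replicas` is a hypothetical helper standing in for the real database query.

```python
from typing import Iterable, List, Optional

# replica_state values that mark a share instance as a replica
REPLICA_STATES = {"active", "in_sync", "out_of_sync", "error"}


def list_replicas(instances: Iterable[dict], share_id: Optional[str] = None,
                  limit: Optional[int] = None, offset: int = 0) -> List[dict]:
    """Filter share instances the way GET /share-replicas is described."""
    replicas = [
        inst for inst in instances
        if inst.get("replica_state") in REPLICA_STATES
        and (share_id is None or inst["share_id"] == share_id)
    ]
    # Apply offset first, then limit, as a paginated list call would.
    end = None if limit is None else offset + limit
    return replicas[offset:end]
```

Instances of non-replicated shares have no replica_state, so they never show up in the replica list.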

Promoting a non-active replica

- POST to /share-replicas/<id>/action with body {'promote': None}

For replication types that permit promotion, the user can promote a replica with in_sync replica_state to active replica_state by initiating the promote_replica call.

- Only administrators can attempt promoting replicas with replica_state error or out_of_sync.
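The promotion rules above can be expressed as a single check. This is a minimal sketch under the stated rules; `check_promotion` and `PromotionNotAllowed` are hypothetical names, not Manila's API.

```python
class PromotionNotAllowed(Exception):
    """Raised when a promotion request must be rejected (hypothetical)."""


USER_PROMOTABLE = {"in_sync"}
ADMIN_ONLY_PROMOTABLE = {"out_of_sync", "error"}


def check_promotion(replication_type: str, replica_state: str,
                    is_admin: bool) -> bool:
    """Return True to attempt a promotion, False if it is a no-op."""
    if replication_type == "writable":
        raise PromotionNotAllowed("writable replicas are always active")
    if replica_state == "active":
        return False  # already active: nothing to do
    if replica_state in USER_PROMOTABLE:
        return True
    if replica_state in ADMIN_ONLY_PROMOTABLE:
        if is_admin:
            return True  # allowed, but the backend may still refuse
        raise PromotionNotAllowed(
            f"promoting a {replica_state!r} replica requires an administrator")
    raise PromotionNotAllowed(f"unknown replica_state {replica_state!r}")
```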

Deleting Replicas

- DELETE to /share-replicas/<id>

The user can delete a replica. The last active replica cannot be deleted using this delete_replica call.
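The restriction on deleting the last active replica can be sketched as follows; `check_delete` and `ReplicaDeleteNotAllowed` are hypothetical names. With writable replication every replica is active, so any one of them may be deleted as long as another active replica remains.

```python
class ReplicaDeleteNotAllowed(Exception):
    """Raised when a replica delete request must be rejected (hypothetical)."""


def check_delete(replica: dict, all_replicas: list) -> None:
    """Reject deleting the last replica whose replica_state is 'active'."""
    if replica["replica_state"] != "active":
        return  # non-active replicas can always be deleted
    other_active = [r for r in all_replicas
                    if r["id"] != replica["id"]
                    and r["replica_state"] == "active"]
    if not other_active:
        raise ReplicaDeleteNotAllowed("cannot delete the last active replica")
```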

System Workflows

- Creating a share that supports replication

- Same process as creating a new share.

- The replication_type extra-spec from the share type is copied over to the share's data in the DB.

- Scheduler uses the replication_type extra-spec to filter available hosts and schedule the share on an appropriate backend that supports that specific style of replication_type.

- Creating a replica:

- Create a share instance on the database.

- Update the share instance replica_state to out_of_sync.

- Cast a call to the scheduler to find an appropriate host to schedule the replica on.

- In the scheduler, find weighted host for the replica.

- If a host cannot be chosen, update the replica's status and replica_state in the database to error and throw an exception.

- Cast the create_share_replica call to the driver based on the weighted host selection.

- Prior to invoking the driver's call, collate information about the existing active replica instance, the existing share access rules and the replica's share_server, and pass these to the driver when invoking create_replica.

- The driver may return export_locations and replica_state. If they are returned, the database is updated with these values.

- If the replication_type is writable, the driver MUST return the replica_state set to active.

- All the access rules for the new replica are set to active state in the database.

- If the driver throws an exception, update the status and replica_state of the replica to error.

- Listing/showing shares:

- For shares that support replication, the result of listing/showing the share will have two new fields: replication_type, denoting the replication style supported, and has_replicas, denoting whether the share has replicas.

- For replicated shares, the primary instance for the share must be preferred among those replicas that have a status replication_change or among those that have a replica_state set to active.

- Listing replicas

- For listing, grab share instances from the database that have a replica_state among {in_sync, active, out_of_sync, error}.

- If share_id is provided, grab only share instances that belong to that share and have a replica_state among {in_sync, active, out_of_sync, error}.

- Limits and offsets must be respected on the list calls.

- Promoting a non-active replica:

- If replica is already active, do nothing.

- Update the status of the replica being promoted to replication_change.

- Grab all available replicas and invoke the appropriate driver's promote_replica call, passing the available replicas and the replica being promoted.

- If the driver throws an exception at this stage, there is a good chance that the replicas have been altered on the backend. Loop through the replicas and set their replica_state to error, leaving their status unchanged. Also set the status of the replica that failed to promote back to available, as it was before this operation. The backend may choose to update the actual replica_state during the replica monitoring call.

- The driver may return an updated list of replicas. Update the export_locations and replica_states in the database.

- The status of the replica that was promoted should return to available from replication_change.

- Periodic replica update:

- The share manager implements a looping call with default interval of 5 minutes to query from each driver and each backend the replica_state of all non-active replicas that are associated with them.

- The driver is allowed to set the replica_state to in_sync, out_of_sync, and in exceptional cases, error.

- If the driver sets the replica_state of a replica to error, it is assumed that some irrecoverable damage has occurred to the replica instance. The status of the replica instance must be set to error as well.

- Deleting a share replica

- If the replica had no host, it is simply removed from the database.

- The status of the replica is set to deleting on the database and the appropriate driver method is called to delete the replica.

- If the driver fails to delete the replica, the status of the replica is updated to error_deleting on the database.

- If the driver returns without errors, the replica instance is removed from the database.
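The create, promote and delete workflows above can be condensed into a synchronous, in-memory sketch. Everything here is hypothetical: `ReplicaManagerSketch` is not Manila's share manager, a plain dict stands in for the database, RPC casts and the scheduler are skipped, access-rule bookkeeping is omitted, and `context`/`share_server` are passed as `None`. For brevity it hands every replica of the share to the driver on promotion, and it assumes a successful create ends with status available.

```python
class ReplicaManagerSketch:
    """In-memory sketch of the replica workflows (hypothetical class).

    `db` maps replica id -> replica dict; `driver` exposes create_replica,
    promote_replica and delete_replica with the Driver API signatures.
    """

    def __init__(self, db, driver):
        self.db = db
        self.driver = driver

    def _replicas_of(self, share_id):
        return [r for r in self.db.values() if r["share_id"] == share_id]

    def _active_of(self, share_id):
        return next(r for r in self._replicas_of(share_id)
                    if r["replica_state"] == "active")

    def create_replica(self, replica_id, access_rules):
        new = self.db[replica_id]
        active = self._active_of(new["share_id"])
        try:
            result = self.driver.create_replica(None, active, new, access_rules)
        except Exception:
            new.update(status="error", replica_state="error")
            return
        export_locations, replica_state = result or (None, None)
        # Only values the driver actually returned are written back.
        if export_locations is not None:
            new["export_locations"] = export_locations
        if replica_state is not None:
            new["replica_state"] = replica_state
        new["status"] = "available"  # assumption: success ends in 'available'

    def promote_replica(self, replica_id, access_rules):
        replica = self.db[replica_id]
        if replica["replica_state"] == "active":
            return  # already active: nothing to do
        replica["status"] = "replication_change"
        replicas = self._replicas_of(replica["share_id"])
        try:
            updated = self.driver.promote_replica(
                None, replicas, replica, access_rules)
        except Exception:
            # Backend state is unknown: flag every replica_state as error,
            # leave statuses alone, restore the promoted one and re-raise.
            for r in replicas:
                r["replica_state"] = "error"
            replica["status"] = "available"
            raise
        for upd in updated or []:
            row = self.db[upd["id"]]
            for key in ("export_locations", "replica_state"):
                if key in upd:
                    row[key] = upd[key]
        replica["status"] = "available"

    def delete_replica(self, replica_id):
        replica = self.db[replica_id]
        if not replica.get("host"):
            del self.db[replica_id]  # never scheduled: only a DB record exists
            return
        replica["status"] = "deleting"
        active = self._active_of(replica["share_id"])
        try:
            self.driver.delete_replica(None, active, replica)
        except Exception:
            replica["status"] = "error_deleting"
            return
        del self.db[replica_id]
```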

Scheduler Impact

- The host_manager must update the replication_type capability from the backend. The backends must report this capability at the host/pool level.

- The filter_scheduler must then match the replication_type capability with the capability that the replication share_type demands.

- The replication_type must be one of the styles mentioned above.
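The capability matching above can be sketched as a plain function over host dicts. This is not Manila's filter scheduler: `filter_hosts` is hypothetical and the layout of the host records (a `capabilities` dict per host/pool) is assumed for illustration.

```python
from typing import Iterable, List

VALID_REPLICATION_TYPES = {"writable", "readable", "dr"}


def filter_hosts(hosts: Iterable[dict], extra_specs: dict) -> List[dict]:
    """Keep hosts/pools whose reported replication_type matches the type."""
    wanted = extra_specs.get("replication_type")
    if wanted is None:
        return list(hosts)  # non-replicated share type: no constraint
    if wanted not in VALID_REPLICATION_TYPES:
        raise ValueError(f"unknown replication_type {wanted!r}")
    return [h for h in hosts
            if h.get("capabilities", {}).get("replication_type") == wanted]
```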

DB Impact

- Share Export Locations:

Preferred export locations will only be from the instances with replica_state set to active.

def export_locations(self):
    # TODO(gouthamr): Return AZ specific export locations for replicated
    # shares
    # NOTE(gouthamr): For a replicated share, export locations of the
    # 'active' instances are taken, if 'available'.
    all_export_locations = []
    select_instances = list(filter(
        lambda x: x['replica_state'] == constants.REPLICA_STATE_ACTIVE,
        self.instances)) or self.instances
    for instance in select_instances:
        if instance['status'] == constants.STATUS_AVAILABLE:
            for export_location in instance.export_locations:
                all_export_locations.append(export_location['path'])
    return all_export_locations

- Share Instance:

Preferred instance will be an instance with status set to replication_change or any instance with status set to available and replica_state set to active.

def instance(self):
    # NOTE(gouthamr): The order of preference: status 'replication_change',
    # followed by 'available' and 'creating'. If replicated share and
    # not undergoing a 'replication_change', only 'active' instances are
    # preferred.
    result = None
    if len(self.instances) > 0:
        order = [constants.STATUS_REPLICATION_CHANGE,
                 constants.STATUS_AVAILABLE, constants.STATUS_CREATING]
        other_statuses = ([x['status'] for x in self.instances
                           if x['status'] not in order])
        order.extend(other_statuses)
        sorted_instances = sorted(
            self.instances, key=lambda x: order.index(x['status']))
        select_instances = sorted_instances
        if (select_instances[0]['status'] !=
                constants.STATUS_REPLICATION_CHANGE):
            select_instances = (
                list(filter(lambda x: x['replica_state'] ==
                            constants.REPLICA_STATE_ACTIVE,
                            sorted_instances)) or sorted_instances
            )
        result = select_instances[0]
    return result

- New field on Share:

replication_type = Column(String(255), nullable=True)

- New field on ShareInstance

replica_state = Column(String(255), nullable=True)
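The instance-selection rule above can be exercised outside SQLAlchemy with plain dicts. In this sketch `FakeShare` is a hypothetical stand-in for the Share model, and the `constants` namespace only mimics the constant names used above; its values are assumptions for the demo.

```python
from types import SimpleNamespace

# Stand-in for the constants module used above (assumed values).
constants = SimpleNamespace(
    REPLICA_STATE_ACTIVE="active",
    STATUS_AVAILABLE="available",
    STATUS_CREATING="creating",
    STATUS_REPLICATION_CHANGE="replication_change",
)


class FakeShare:
    """Hypothetical stand-in for the Share model, holding plain dicts."""

    def __init__(self, instances):
        self.instances = instances

    @property
    def instance(self):
        # Same preference order as the model code, minus the ORM.
        if not self.instances:
            return None
        order = [constants.STATUS_REPLICATION_CHANGE,
                 constants.STATUS_AVAILABLE, constants.STATUS_CREATING]
        order.extend([x["status"] for x in self.instances
                      if x["status"] not in order])
        sorted_instances = sorted(
            self.instances, key=lambda x: order.index(x["status"]))
        if sorted_instances[0]["status"] != constants.STATUS_REPLICATION_CHANGE:
            active = [x for x in sorted_instances
                      if x["replica_state"] == constants.REPLICA_STATE_ACTIVE]
            sorted_instances = active or sorted_instances
        return sorted_instances[0]
```

Outside a promotion the active replica wins; during a promotion the instance in replication_change is preferred, so callers see the replica that is about to become active.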

API Design

GET /share-replicas/

GET /share-replicas?share_id=<share_id>

GET /share-replicas/<replica_id>

POST /share-replicas

Body:

    {
        'share_replica': {
            'availability_zone': <availability_zone_id>,
            'share_id': <share_id>,
            'share_network_id': <share_network_id>
        }
    }
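A client could build this request body as follows. `build_create_replica_body` is a hypothetical helper; it omits share_network_id when none is given, since the user workflow marks that field as optional.

```python
import json
from typing import Optional


def build_create_replica_body(share_id: str, availability_zone: str,
                              share_network_id: Optional[str] = None) -> str:
    """Serialize the 'share_replica' request body shown above."""
    replica = {"share_id": share_id, "availability_zone": availability_zone}
    if share_network_id is not None:
        replica["share_network_id"] = share_network_id  # optional field
    return json.dumps({"share_replica": replica})
```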

POST /share-replicas/<replica-id>/action

Body:

{'os-promote_replica': null}

DELETE /share-replicas/<replica-id>

Policies

policy.json - All replication group policies should default to the default policy

"share_replica:get_all": "rule:default",
"share_replica:show": "rule:default",
"share_replica:create": "rule:default",
"share_replica:delete": "rule:default",
"share_replica:promote": "rule:default"

Driver API

Only a single replication driver method will be called at a time for a given share, since these calls are locked by share ID. Drivers should avoid raising errors to the manager from any of these methods. If the driver hits an issue, it should attempt to return model updates with error states that represent the actual state of the resources. A driver raising an exception will result in all replicas being set to the error status with their replica_state unchanged.

def create_replica(self, context, active_replica, new_replica,
                   access_rules, share_server=None):
    """Replicate the active replica to a new replica on this backend.

    :param context: Current context
    :param active_replica: A current active replica instance dictionary.
        EXAMPLE:
        .. code::

            {
            'id': 'd487b88d-e428-4230-a465-a800c2cce5f8',
            'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
            'deleted': False,
            'host': 'openstack2@cmodeSSVMNFS1',
            'status': 'available',
            'scheduled_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'launched_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'terminated_at': None,
            'replica_state': 'active',
            'availability_zone_id': 'e2c2db5c-cb2f-4697-9966-c06fb200cb80',
            'export_locations': [
                <models.ShareInstanceExportLocations>._as_dict()
            ],
            'share_network_id': '4ccd5318-65f1-11e5-9d70-feff819cdc9f',
            'share_server_id': '4ce78e7b-0ef6-4730-ac2a-fd2defefbd05',
            'share_server': <models.ShareServer>._as_dict() or None,
            }

    :param new_replica: The share replica dictionary.
        EXAMPLE:
        .. code::

            {
            'id': 'e82ff8b6-65f0-11e5-9d70-feff819cdc9f',
            'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
            'deleted': False,
            'host': 'openstack2@cmodeSSVMNFS2',
            'status': 'available',
            'scheduled_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'launched_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'terminated_at': None,
            'replica_state': 'out_of_sync',
            'availability_zone_id': 'f6e146d0-65f0-11e5-9d70-feff819cdc9f',
            'export_locations': [
                models.ShareInstanceExportLocations._as_dict()
            ],
            'share_network_id': '4ccd5318-65f1-11e5-9d70-feff819cdc9f',
            'share_server_id': 'e6155221-ea00-49ef-abf9-9f89b7dd900a',
            'share_server': <models.ShareServer>._as_dict() or None,
            }

    :param access_rules: A list of access rules that other instances of
        the share already obey.
        EXAMPLE:
        .. code::

            [ {
            'id': 'f0875f6f-766b-4865-8b41-cccb4cdf1676',
            'deleted': False,
            'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
            'access_type': 'ip',
            'access_to': '172.16.20.1',
            'access_level': 'rw',
            }]

    :param share_server: <models.ShareServer>._as_dict() or None,
        Share server of the replica being created.
    :return: (export_locations, replica_state)
        export_locations is a list of paths and replica_state is one of
        active, in_sync, out_of_sync or error.
        A backend supporting 'writable' type replication should return
        'active' as the replica_state.
        Export locations should be in the same format as returned by a
        share_create. This list may be empty or None.
        EXAMPLE:
        .. code::

            [{'id': 'uuid', 'export_locations': ['export_path']}]
    """
    raise NotImplementedError()

def delete_replica(self, context, active_replica, replica,
                   share_server=None):
    """Delete a replica.

    :param context: Current context
    :param active_replica: A current active replica instance dictionary.
        EXAMPLE:
        .. code::

            {
            'id': 'd487b88d-e428-4230-a465-a800c2cce5f8',
            'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
            'deleted': False,
            'host': 'openstack2@cmodeSSVMNFS1',
            'status': 'available',
            'scheduled_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'launched_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'terminated_at': None,
            'replica_state': 'active',
            'availability_zone_id': 'e2c2db5c-cb2f-4697-9966-c06fb200cb80',
            'export_locations': [
                models.ShareInstanceExportLocations._as_dict()
            ],
            'share_network_id': '4ccd5318-65f1-11e5-9d70-feff819cdc9f',
            'share_server_id': '4ce78e7b-0ef6-4730-ac2a-fd2defefbd05',
            'share_server': <models.ShareServer>._as_dict() or None,
            }

    :param replica: Dictionary of the share replica being deleted.
        EXAMPLE:
        .. code::

            {
            'id': 'e82ff8b6-65f0-11e5-9d70-feff819cdc9f',
            'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
            'deleted': False,
            'host': 'openstack2@cmodeSSVMNFS2',
            'status': 'available',
            'scheduled_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'launched_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'terminated_at': None,
            'replica_state': 'in_sync',
            'availability_zone_id': 'f6e146d0-65f0-11e5-9d70-feff819cdc9f',
            'export_locations': [
                models.ShareInstanceExportLocations._as_dict()
            ],
            'share_network_id': '4ccd5318-65f1-11e5-9d70-feff819cdc9f',
            'share_server_id': '53099868-65f1-11e5-9d70-feff819cdc9f',
            'share_server': <models.ShareServer>._as_dict() or None,
            }

    :param share_server: <models.ShareServer>._as_dict() or None,
        Share server of the replica to be deleted.
    :return: None.
    """
    raise NotImplementedError()

def promote_replica(self, context, replica_list, replica, access_rules,
                    share_server=None):
    """Promote a replica to 'active' replica state.

    :param context: Current context
    :param replica_list: List of all replicas for a particular share.
        This list also contains the replica to be promoted. The 'active'
        replica will have its 'replica_state' attr set to 'active'.
        EXAMPLE:
        .. code::

            [
                {
                'id': 'd487b88d-e428-4230-a465-a800c2cce5f8',
                'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
                'replica_state': 'in_sync',
                    ...
                'share_server_id': '4ce78e7b-0ef6-4730-ac2a-fd2defefbd05',
                'share_server': <models.ShareServer>._as_dict() or None,
                },
                {
                'id': '10e49c3e-aca9-483b-8c2d-1c337b38d6af',
                'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
                'replica_state': 'active',
                    ...
                'share_server_id': 'f63629b3-e126-4448-bec2-03f788f76094',
                'share_server': <models.ShareServer>._as_dict() or None,
                },
                {
                'id': 'e82ff8b6-65f0-11e5-9d70-feff819cdc9f',
                'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
                'replica_state': 'in_sync',
                    ...
                'share_server_id': '07574742-67ea-4dfd-9844-9fbd8ada3d87',
                'share_server': <models.ShareServer>._as_dict() or None,
                },
                ...
            ]

    :param replica: Dictionary of the replica to be promoted.
        EXAMPLE:
        .. code::

            {
            'id': 'e82ff8b6-65f0-11e5-9d70-feff819cdc9f',
            'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
            'deleted': False,
            'host': 'openstack2@cmodeSSVMNFS2',
            'status': 'available',
            'scheduled_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'launched_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'terminated_at': None,
            'replica_state': 'in_sync',
            'availability_zone_id': 'f6e146d0-65f0-11e5-9d70-feff819cdc9f',
            'export_locations': [
                models.ShareInstanceExportLocations._as_dict()
            ],
            'share_network_id': '4ccd5318-65f1-11e5-9d70-feff819cdc9f',
            'share_server_id': '07574742-67ea-4dfd-9844-9fbd8ada3d87',
            'share_server': <models.ShareServer>._as_dict() or None,
            }

    :param access_rules: A list of access rules that other instances of
        the share already obey.
        EXAMPLE:
        .. code::

            [ {
            'id': 'f0875f6f-766b-4865-8b41-cccb4cdf1676',
            'deleted': False,
            'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
            'access_type': 'ip',
            'access_to': '172.16.20.1',
            'access_level': 'rw',
            }]

    :param share_server: <models.ShareServer>._as_dict() or None,
        Share server of the replica to be promoted.
    :return: updated_replica_list or None
        The driver can return the updated list as in the request
        parameter. Changes that will be updated to the Database are:
        'export_locations' and 'replica_state'.
    :raises: Exception
        This can be any exception derived from BaseException. This is
        re-raised by the manager after some necessary cleanup. If the
        driver raises an exception during promotion, it is assumed
        that all of the replicas of the share are in an inconsistent
        state. Recovery is only possible through the periodic update
        call and/or administrator intervention to correct the 'status'
        of the affected replicas if they become healthy again.
    """
    raise NotImplementedError()

def update_replica_state(self, context, replica, share_server=None):
    """Update the replica_state of a replica.

    Drivers should fix replication relationships that were broken if
    possible inside this method.

    :param context: Current context
    :param replica: Dictionary of the replica being updated.
        EXAMPLE:
        .. code::

            {
            'id': 'd487b88d-e428-4230-a465-a800c2cce5f8',
            'share_id': 'f0e4bb5e-65f0-11e5-9d70-feff819cdc9f',
            'deleted': False,
            'host': 'openstack2@cmodeSSVMNFS1',
            'status': 'available',
            'scheduled_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'launched_at': datetime.datetime(2015, 8, 10, 0, 5, 58),
            'terminated_at': None,
            'replica_state': 'active',
            'availability_zone_id': 'e2c2db5c-cb2f-4697-9966-c06fb200cb80',
            'export_locations': [
                models.ShareInstanceExportLocations._as_dict()
            ],
            'share_network_id': '4ccd5318-65f1-11e5-9d70-feff819cdc9f',
            'share_server_id': '4ce78e7b-0ef6-4730-ac2a-fd2defefbd05',
            }

    :param share_server: <models.ShareServer>._as_dict() or None
    :return: replica_state
        replica_state - a str value denoting the replica_state that the
        replica can have. Valid values are 'in_sync' and 'out_of_sync'
        or None (to leave the current replica_state unchanged).
    """
    raise NotImplementedError()
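To make the expected return values concrete, here is a toy in-memory driver implementing the four methods above. It is purely hypothetical: `InMemoryReplicationDriver` has no Manila base class, stores share contents in a dict, ignores access rules and share servers, and uses an invented export path format. It behaves like a 'readable' backend: after a promotion the old active copy is reported out_of_sync until the next periodic update resyncs it.

```python
import copy


class InMemoryReplicationDriver:
    """Hypothetical toy driver; illustrates return values only."""

    def __init__(self):
        self.contents = {}   # replica id -> {file name: bytes}
        self.active_id = {}  # share id -> id of the active replica

    def _export(self, replica):
        return [f"192.0.2.10:/shares/{replica['id']}"]  # invented format

    def create_replica(self, context, active_replica, new_replica,
                       access_rules, share_server=None):
        self.active_id[new_replica["share_id"]] = active_replica["id"]
        source = self.contents.setdefault(active_replica["id"], {})
        self.contents[new_replica["id"]] = copy.deepcopy(source)
        return self._export(new_replica), "in_sync"

    def delete_replica(self, context, active_replica, replica,
                       share_server=None):
        self.contents.pop(replica["id"], None)

    def promote_replica(self, context, replica_list, replica, access_rules,
                        share_server=None):
        self.active_id[replica["share_id"]] = replica["id"]
        updated = []
        for r in replica_list:
            state = "active" if r["id"] == replica["id"] else "out_of_sync"
            updated.append(dict(r, replica_state=state,
                                export_locations=self._export(r)))
        return updated

    def update_replica_state(self, context, replica, share_server=None):
        active = self.active_id.get(replica["share_id"])
        if active is None or active == replica["id"]:
            return None  # leave the current replica_state unchanged
        # "Fix" the relationship by resyncing from the active copy.
        self.contents[replica["id"]] = copy.deepcopy(
            self.contents.get(active, {}))
        return "in_sync"
```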

Manila Client

- share-replica-create: Create a replica.

- share-replica-delete: Remove one or more share replicas.

- share-replica-list: List share replicas.

- share-replica-promote: Promote replica to active replica.

- share-replica-show: Show details about a replica.

Configuration Options

- replica_state_update_interval: This value, specified in seconds, determines how often the share manager polls for the health (replica_state) of each replica instance. The default polling interval is 5 minutes (300 seconds).
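The polling behaviour described under "Periodic replica update" can be sketched with the standard library. `poll_replica_states` and `run_periodically` are hypothetical helpers, not the manager's looping call; `interval` plays the role of replica_state_update_interval, and ignoring driver errors or unknown values is an assumption of this sketch.

```python
import threading

# replica_state values the driver may report during the periodic update
ALLOWED_FROM_DRIVER = {"in_sync", "out_of_sync", "error"}


def poll_replica_states(replicas, driver):
    """One polling pass over the non-active replicas (hypothetical)."""
    for replica in replicas:
        if replica["replica_state"] == "active":
            continue  # only non-active replicas are polled
        try:
            new_state = driver.update_replica_state(None, replica)
        except Exception:
            continue  # assumption: a failed poll leaves the replica alone
        if new_state not in ALLOWED_FROM_DRIVER:
            continue  # None means "unchanged"; unknown values are ignored
        replica["replica_state"] = new_state
        if new_state == "error":
            replica["status"] = "error"  # irrecoverable damage reported


def run_periodically(replicas, driver, interval=300, stop_event=None):
    """Poll every `interval` seconds until `stop_event` is set."""
    if stop_event is None:
        stop_event = threading.Event()
    while not stop_event.is_set():
        poll_replica_states(replicas, driver)
        stop_event.wait(interval)  # returns early once the event is set
```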

First-Party Driver

DR will require first-party driver support before it can be promoted from an experimental feature, for two main reasons:

- A feature is not truly open if only a single vendor can develop it due to it only being supported by a proprietary driver.

- In order to ensure the quality of a feature, it must be testable in upstream gate.

FAQs

- How do we deal with Network issues in multi-SVM replication?

If we choose to make replication a single-SVM-only feature, the share-network API doesn't need to change. In order to support replication with share networks, we also need to modify the share-network create API to allow creating share networks with a table of AZ-to-subnet mappings. This approach allows us to keep a single share network per share (with its associated security service) while allowing the tenant to specify enough information that each share instance can be attached to the appropriate network in each AZ. Multi-AZ share networks would also be useful for non-replicated use cases.

- Do we support pool-level replication?

Some vendors have suggested that certain backends can mirror groups of shares or whole pools more efficiently than individual shares. This design only addresses the mirroring of individual shares. In the future, we may allow replication of groups of shares, but only if those groups are contained within a single tenant and defined by the tenant.

- From where to where do we allow replication? Is it intra-cloud or inter-cloud? Do we allow replication to something that's not managed by Manila?

Intra-cloud. Replicating to something outside of Manila allows a bit more freedom, but with significantly less value, because there's practically nothing we can do to automate the failover/failback portion of a disaster. For use cases involving replication outside of Manila, we would need to involve other tools with more breadth/scope to manage the process.

- Who configures the replication? The admin? The end user? The manila scheduler?

The end user. In the original design we presumed that the actual replication relationships should be hidden from the end user, but this doesn't match well with the concept of AZs that we are adding to Manila. If the users need to have control over which AZ the primary copy of their data lives in, then they also need to control where the other copies live. This means that the administrator's job is to ensure that any replicated share type can be replicated from any AZ to any other AZ.

- Is replication supported only intra-region or inter-region as well? How about intra-AZ vs inter-AZ?

This design is for intra-region only. Inter-region replication is clearly a feature we want, but it would look pretty different from intra-region replication; this design does not cover that. Intra-AZ replication is supported, however inter-AZ replication is recommended as AZs should be perceived as failure domains.

Unanswered Questions

- Is there no way to achieve non-disruptive failover?

(bswartz) I would love to find out that our initial intuition here is wrong, because it would change a lot of aspects of the design. It's worth spending time to brainstorm and research possibilities in this area. So far the most promising ideas involve:

- Using VirtFS to mediate filesystem access and achieving non-disruptive failover that way

- Using some kind of agent inside the guests to mediate file access

- Can a replica have a different share_type?

There is a valid use case where a user would want to create a share replica on a backend with different capabilities than the one the original share resides on. For instance, replicas might need to be on a less expensive backend. In that case, can the replica have a different share_type altogether?

Currently, we inherit the share_type of the share and believe that replication has to be on symmetric terms, where both backends have similar capabilities.

- Can we allow the driver to restrict replication support between available backends?

Backends may support replication to other compatible backends only. Hence, they must report some sort of information to the scheduler so that, when creating a replica for an existing share, the scheduler can use that information to schedule the creation of the replica. What information should this be?

(gouthamr) We're investigating including 'driver_class_name' in a ReplicationFilter, as well as the possibility of a backend-reported configuration option, 'replication_partners'.

- How are access rules persisted across replicas/share instances?

- Do all replicas have the same access rules applied? (gouthamr): This is currently being pursued.

- Should access rules be applied only to "active" replicas?

- How does migration affect replicated shares?

- (gouthamr) At the Tokyo summit, it was decided that we currently disallow migration of a share that has replicas. To migrate a share with replicas, the replication relationships must be broken and then re-established after the migration is complete.


- Do we need an API to initiate a sync? (resync or force-update)

This is a great idea, and one certain use case is during planned failovers. (gouthamr)

- How do we recover from promotion failures?

(gouthamr) Currently, if some exception occurs at the driver level, the replicas are rendered useless. Should we provide escape hatches to avoid complete loss in case these are recoverable exceptions? The administrator has the ability to 'reset-state' and affect the 'status' of any replica. Currently, there exists no API to 'reset-replica-state' for a replica.

Examples

These examples assume that the replicas exist in different AZs.

Writable replication example

- Administrator sets up backends in AZs b1 and b2 that have capability replication_type=writable

- Administrator creates a new share_type called foo

- Administrator sets replication_type=writable extra spec on share type foo

- User creates new share of type foo in AZ b1

- Share is created with replication_type=writable, and 1 active replica in AZ b1

- User grants access on share to client1 in AZ b1, obtains the export location of the replica, mounts the share on a client, and starts to write data

- User adds a new replica of the share in AZ b2

- A second replica is created in AZ b2 which initially has state out_of_sync

- Shortly afterwards, the replica state changes to active (after the replica finishes syncing with the original copy)

- The user grants access on the share to client2 in AZ b2, obtains the export location of the new replica, mounts the share, and sees the same data that client1 wrote

- Client2 writes some data to the share, which is immediately visible to client1

Readable replication example

- Administrator sets up backends in AZs b1 and b2 that have capability replication_type=readable

- Administrator creates a new share_type called bar

- Administrator sets replication_type=readable extra spec on share type bar

- User creates new share of type bar in AZ b1

- Share is created with replication_type=readable, and 1 active replica in AZ b1

- User grants access on share to client1 in AZ b1, obtains the export location of the replica, mounts the share on a client, and starts to write data

- User adds a new replica of the share in AZ b2

- A second replica is created in AZ b2 which initially has state out_of_sync

- Shortly afterwards, the replica state changes to in_sync (after the replica finishes syncing with the original copy)

- The user grants access on the share to client2 in AZ b2, obtains the export location of the new replica, mounts the share, and sees the same data that client1 wrote

- Client2 cannot write data to the share but continues to see updates

Failover/failback example

(Continued from above)

- An outage occurs in AZ b1

- Administrator sends out a bulletin about the outage to his users "the power transformer in b1 turned to slag, it will be 12 hours before it can be replaced, please bear with us, yada yada"

- User notices that his application on client1 is no longer running, and investigates

- User finds out that client1 is gone, and reads a bulletin from the admin explaining why

- User notes that the b1 replica of his share is still active and the b2 replica is in_sync, while the state of the share is available

- User calls set active replica to AZ b2 on his share

- The share goes to state replication_change and access to the share is briefly lost on client2

- The state of replica b2 changes to active and the state of replica b1 changes to out_of_sync after Manila fails to contact the original primary. The state of the share changes back to available, and access is restored on client2

- User starts his application on client2, and the application recovers from an apparent crash, with a consistent copy of the application's data

- User application is back up and running, disaster is averted

- Eventually maintenance on the b1 AZ is completed, and all of the equipment is reactivated

- Administrator sends out a bulletin to his users about the outage ending "we replaced the transformer with a better one, everything is back online, thanks for your understanding, yada yada"

- Manila notices the out_of_sync replica is reachable and initiates a resync, bringing the b1 replica in_sync within a short time

- After some time, the user reads the bulletin and observes that his share is being replicated again. He decides to intentionally move back to b1.

- User gracefully shuts down his application, and flushes I/O

- User calls set active replica to AZ b1 on his share

- The share goes to state replication_change and no disruption is observed because the application is shut down

- The state of replica b1 changes to active and the state of replica b2 changes to in_sync after Manila reverses the replication again. The state of the share changes back to available

- User starts his application on client1, and the application starts cleanly, having been shut down gracefully

Implementation Progress

Core Work

- Blueprint for Core API/Scheduler implementation

- Blueprint for Client implementation

Ex Driver Implementation

Blueprint for cDOT Driver Implementation

Older Design Pages

- Replication Use Cases: https://wiki.openstack.org/wiki/Manila/Replication_Use_Cases

- Design Notes: https://wiki.openstack.org/wiki/Manila/Replication_Design_Notes