WholeHostAllocation

Latest revision as of 22:03, 30 October 2013

- Launchpad Entry: NovaSpec:whole-host-allocation

- Created: 6th May 2013

- Contributors: Phil Day, HP Cloud Services


Summary

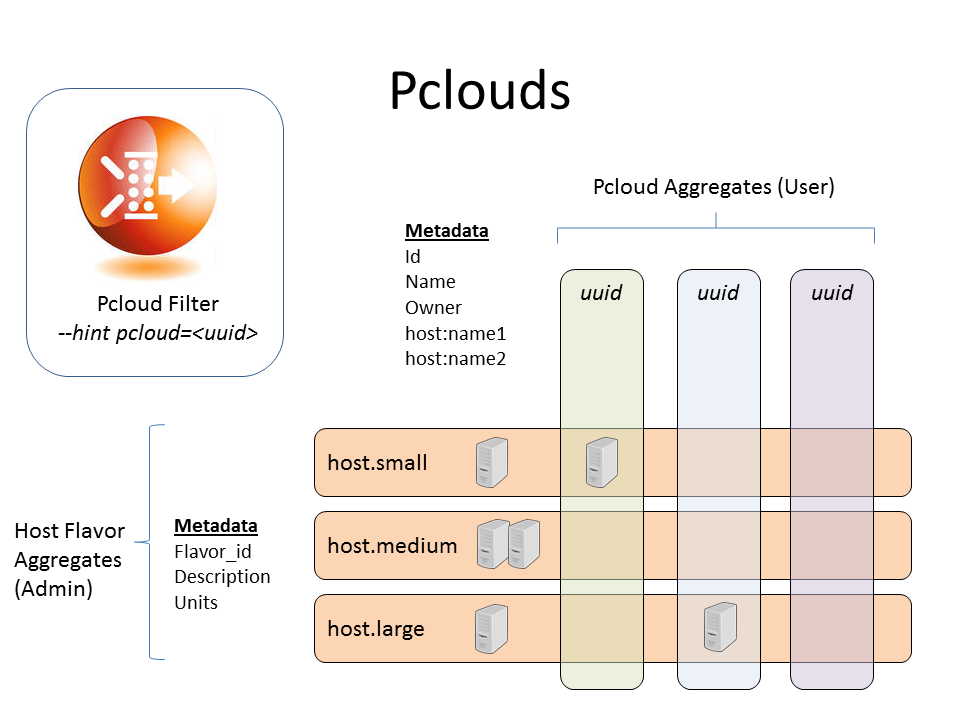

Pclouds (PrivateClouds) allow a tenant to allocate all of the capacity of a host for their exclusive use. The host remains part of the Nova configuration, i.e. this is different from bare metal provisioning in that the tenant does not get access to the host OS, just a dedicated pool of compute capacity. This gives the tenant guaranteed isolation for their instances, at the cost of paying for a whole host.

The Pcloud mechanism provides an abstraction between the user and the underlying physical servers. A user can uniquely name and control servers in the Pcloud, but never learns the actual host names and cannot perform admin commands on those servers.

The initial implementation provides all of the operations needed to create and manage a pool of servers and to schedule instances to them. Future extensions could add operations such as specific scheduling policies, control over which flavors and images can be used, etc.

Pclouds are implemented as an abstraction layer on top of Host Aggregates. Each host flavor pool and each Pcloud is an aggregate, and the properties of host flavors and Pclouds are stored as aggregate metadata. Hosts in a host flavor pool are not available for general scheduling. Hosts are allocated to a Pcloud by adding them to the Pcloud aggregate (they may also be members of other types of aggregate, such as an AZ). All manipulation of aggregates, including host allocation, is performed by the Pcloud API layer: users never have direct access to the aggregates, the aggregate metadata, or the hosts. (Admins, of course, can still see and manipulate the aggregates.)

Supported Operations

The Cloud Administrator can:

- Define the types of server that can be allocated (host-flavors)

- Add or remove physical servers from a host-flavor pool

- See details of a host flavor pool

A User can:

- Create a Pcloud

- See the list of available host flavors

- Allocate hosts of a particular host-flavor and availability zone to their Pcloud

- Enable or disable hosts in their Pcloud

- Authorize other tenants to be able to schedule to their Pcloud

- Set the RAM and CPU allocation ratios used by the scheduler within their Pcloud

- See the list of hosts and instances running in their Pcloud

A tenant that has been authorized to use a Pcloud can:

- Schedule an instance to run in the Pcloud. Scheduling is controlled by an additional filter, so all other filters (availability zones, affinity, etc.) still apply

Host Flavors

Host flavors define the types of hosts that users can allocate to their Pclouds (cf. flavors for instances). Because physical hosts have fixed characteristics, a host flavor is defined by just three properties:

- A unique identifier

- A description (which can include details such as the number of CPUs, amount of memory, etc.)

- A Units value which is used to control the quota cost of a host

Host Flavors are implemented as aggregates with the name "Pcloud:host_flavor:<id>", and have the following metadata values:

| Key | Value | Notes |
|---|---|---|
| pcloud:type | 'host_flavor_pool' | Identifies this as a host flavor aggregate |
| pcloud:flavor_id | flavor_id | |
| pcloud:description | description | A description of the host type |
| pcloud:units | units | The number of quota units consumed by a host of this type |
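
As an illustration, the sketch below builds the aggregate for one host flavor pool using the naming convention and metadata keys from the table above. It assumes a simplified in-memory `Aggregate` class in place of Nova's real aggregate objects, and `make_host_flavor_pool` is a hypothetical helper rather than part of the Pcloud API.

```python
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Simplified, hypothetical stand-in for a Nova host aggregate."""
    name: str
    hosts: set = field(default_factory=set)
    metadata: dict = field(default_factory=dict)


def make_host_flavor_pool(flavor_id, description, units):
    """Return an empty aggregate representing one host flavor pool."""
    return Aggregate(
        name="Pcloud:host_flavor:%s" % flavor_id,
        metadata={
            "pcloud:type": "host_flavor_pool",
            "pcloud:flavor_id": flavor_id,
            "pcloud:description": description,
            # Aggregate metadata values are strings, so units is stored as text.
            "pcloud:units": str(units),
        },
    )


# Example values only.
pool = make_host_flavor_pool("large", "16 CPUs, 128 GB RAM", 4)
print(pool.name)  # Pcloud:host_flavor:large
```

The pool starts with no hosts; an administrator then adds physical servers to it.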

A host must be empty and its compute service disabled before it can be added to a host flavor pool. Adding it to the host flavor pool enables the compute service (the Pcloud scheduler filter prevents anything from being scheduled to it until it is allocated to a Pcloud). Hosts remain in this aggregate even when they are allocated to a Pcloud; membership of the host flavor aggregate defines the host flavor of the host, so there is no need to keep a separate mapping of hosts to host types.
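
The admission rules and the "membership defines the flavor" idea can be sketched as follows. This is a minimal model under stated assumptions: `ComputeHost` is a hypothetical record of a host's instance count and service state, and `add_host_to_flavor_pool` and `host_flavor_of` are illustrative helpers, not Nova or Pcloud API calls.

```python
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Simplified, hypothetical stand-in for a Nova host aggregate."""
    name: str
    hosts: set = field(default_factory=set)
    metadata: dict = field(default_factory=dict)


@dataclass
class ComputeHost:
    """Hypothetical view of a compute host's state."""
    name: str
    instance_count: int = 0
    service_enabled: bool = True


def add_host_to_flavor_pool(pool, host):
    # Only empty hosts with a disabled compute service may join a pool.
    if host.instance_count:
        raise ValueError("host %s is not empty" % host.name)
    if host.service_enabled:
        raise ValueError("compute service on %s must be disabled" % host.name)
    pool.hosts.add(host.name)
    # Enable the service; the Pcloud filter keeps the host out of
    # general scheduling until it is allocated to a Pcloud.
    host.service_enabled = True


def host_flavor_of(hostname, aggregates):
    """Derive a host's flavor purely from host flavor pool membership."""
    for agg in aggregates:
        if (agg.metadata.get("pcloud:type") == "host_flavor_pool"
                and hostname in agg.hosts):
            return agg.metadata["pcloud:flavor_id"]
    return None


pool = Aggregate("Pcloud:host_flavor:large",
                 metadata={"pcloud:type": "host_flavor_pool",
                           "pcloud:flavor_id": "large"})
host = ComputeHost("compute-01", service_enabled=False)
add_host_to_flavor_pool(pool, host)
print(host_flavor_of("compute-01", [pool]))  # large
```

Because the lookup scans pool membership, no separate host-to-flavor table has to be kept in sync.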

Pclouds

A Pcloud is in effect a user-defined and user-managed aggregate. However, the Pclouds API provides an abstraction layer that controls ownership, provides authorization semantics, and encapsulates system properties such as host names. On creation a Pcloud is assigned a UUID, which forms part of the aggregate name and serves as the Pcloud ID that users pass to the API.

Pclouds are implemented as aggregates with the name "Pcloud:<uuid>", and have the following metadata values on creation:

| Key | Value | Notes |
|---|---|---|
| pcloud:type | 'pcloud' | Identifies this as a pcloud aggregate |
| pcloud:id | uuid | The generated uuid for this Pcloud |
| pcloud:name | name | The user assigned name for this Pcloud |
| pcloud:owner | tenant_id | The tenant_id that created the Pcloud. Used for authorisation and billing |
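
A minimal sketch of Pcloud creation, assuming the same simplified in-memory `Aggregate` class used for illustration (not Nova's real object model); `create_pcloud` is a hypothetical helper. It also writes the owner's authorization record, since the owner is authorized automatically at creation time (see Tenant Operations).

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Simplified, hypothetical stand-in for a Nova host aggregate."""
    name: str
    hosts: set = field(default_factory=set)
    metadata: dict = field(default_factory=dict)


def create_pcloud(name, owner_tenant_id):
    """Create the aggregate backing a new Pcloud."""
    pcloud_id = str(uuid.uuid4())
    metadata = {
        "pcloud:type": "pcloud",
        "pcloud:id": pcloud_id,
        "pcloud:name": name,
        "pcloud:owner": owner_tenant_id,
        # The owner is also authorized to schedule to the Pcloud at
        # creation time (see Tenant Operations).
        "pcloud:%s" % owner_tenant_id: "pcloud-tenant",
    }
    return Aggregate(name="Pcloud:%s" % pcloud_id, metadata=metadata)


pc = create_pcloud("web-tier", "tenant-a")
print(pc.metadata["pcloud:name"])  # web-tier
```

Users only ever see the generated UUID and their chosen name; the aggregate itself stays hidden behind the API.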

Host Operations

A user adds a host by specifying a name (which must be unique within the Pcloud), the required host flavor and, optionally, an availability zone. The Pcloud API finds a free host in the appropriate host flavor aggregate and adds it to the Pcloud aggregate. Because this is a simple allocation mechanism there is no need to call the scheduler: either there is a free host in the host flavor aggregate or there isn't.

When the host is added to the Pcloud aggregate, the following metadata values are also added:

| Key | Value | Notes |
|---|---|---|
| pcloud:host:<name> | hypervisor_hostname | Provides the mapping from the user's name to the real hostname |
| pcloud:host_state:<hostname> | disabled | Controls whether a host can be used for scheduling. Hosts are always disabled when added |
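
The allocation step can be sketched as a set difference: a host is free if it is in the requested host flavor pool but in no Pcloud aggregate. This is an illustrative model only; `allocate_host` is hypothetical, and the optional availability zone is assumed to be passed in as the set of hosts in that AZ.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Simplified, hypothetical stand-in for a Nova host aggregate."""
    name: str
    hosts: set = field(default_factory=set)
    metadata: dict = field(default_factory=dict)


def allocate_host(pcloud, pool, aggregates, user_name, az_hosts=None):
    """Move a free host from a host flavor pool into a Pcloud."""
    name_key = "pcloud:host:%s" % user_name
    if name_key in pcloud.metadata:
        raise ValueError("name %r is already used in this Pcloud" % user_name)
    # A host is free if it is in the pool but in no Pcloud aggregate.
    allocated = set()
    for agg in aggregates:
        if agg.metadata.get("pcloud:type") == "pcloud":
            allocated |= agg.hosts
    free = pool.hosts - allocated
    if az_hosts is not None:
        free &= az_hosts  # optional availability zone restriction
    if not free:
        raise LookupError("no free host of the requested flavor")
    hostname = random.choice(sorted(free))
    pcloud.hosts.add(hostname)
    pcloud.metadata[name_key] = hostname
    # New hosts always start disabled for scheduling.
    pcloud.metadata["pcloud:host_state:%s" % hostname] = "disabled"
    return hostname


pool = Aggregate("Pcloud:host_flavor:large", {"h1", "h2"},
                 {"pcloud:type": "host_flavor_pool"})
other = Aggregate("Pcloud:other", {"h1"}, {"pcloud:type": "pcloud"})
mine = Aggregate("Pcloud:mine", set(), {"pcloud:type": "pcloud"})
print(allocate_host(mine, pool, [pool, other, mine], "web1"))  # h2
```

No scheduler call is involved: the outcome is simply whether the free set is empty.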

Because the allocation occurs in the API server, there is a risk of a race condition when two allocation requests are processed at the same time. The Pcloud API protects against this in two ways:

- Hosts are selected randomly from the list of available hosts, so concurrent requests are unlikely to pick the same host.

- After the host has been added to the Pcloud aggregate, a further check counts how many Pcloud aggregates the host is a member of. If it is a member of more than one, it is removed from the Pcloud and the allocation is retried (up to a configurable number of attempts). In this way, if more than one Pcloud allocates the same host, one or all of them release it and retry.
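
The claim-then-verify pattern above might look like the following sketch. It models only the race check (not the name and state metadata), and `try_claim`, `allocate_with_retry`, and the `max_attempts` parameter are hypothetical names standing in for the real code and its configuration option. The `aggregates` list is assumed to include the requesting Pcloud itself.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Simplified, hypothetical stand-in for a Nova host aggregate."""
    name: str
    hosts: set = field(default_factory=set)
    metadata: dict = field(default_factory=dict)


def pcloud_aggregates(aggregates):
    return [a for a in aggregates if a.metadata.get("pcloud:type") == "pcloud"]


def try_claim(pcloud, hostname, aggregates):
    """Add hostname to pcloud, then back off if another Pcloud also holds it."""
    pcloud.hosts.add(hostname)
    owners = [a for a in pcloud_aggregates(aggregates) if hostname in a.hosts]
    if len(owners) > 1:
        # Lost (or tied) the race: release the host so the caller can retry.
        pcloud.hosts.discard(hostname)
        return False
    return True


def allocate_with_retry(pcloud, pool, aggregates, max_attempts=3):
    for _ in range(max_attempts):
        taken = set().union(*(a.hosts for a in pcloud_aggregates(aggregates)))
        free = sorted(pool.hosts - taken)
        if not free:
            break
        # Random selection makes collisions between concurrent requests unlikely.
        hostname = random.choice(free)
        if try_claim(pcloud, hostname, aggregates):
            return hostname
    raise LookupError("could not allocate a host after %d attempts" % max_attempts)


pool = Aggregate("Pcloud:host_flavor:large", {"h1", "h2"},
                 {"pcloud:type": "host_flavor_pool"})
# Simulate a concurrent request that has already claimed h1.
rival = Aggregate("Pcloud:rival", {"h1"}, {"pcloud:type": "pcloud"})
mine = Aggregate("Pcloud:mine", set(), {"pcloud:type": "pcloud"})
aggregates = [pool, rival, mine]
print(try_claim(mine, "h1", aggregates))  # False
print(allocate_with_retry(mine, pool, aggregates))  # h2
```

This trades strict locking for an optimistic check: in the worst case both racers release the host and pick again, which the retry limit bounds.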

To remove a host from a Pcloud it must be empty (so that it can be used by another Pcloud), and to empty it the Pcloud owner must be able to prevent further instances from being scheduled to it. Pclouds therefore provide a simple enable / disable mechanism for hosts, which is recorded in the aggregate metadata and enforced by the Pcloud filter. The underlying compute service enable / disable is not used, so that it remains available to system administrators.

A host must be both empty and disabled before it can be removed from a Pcloud.

The Pcloud owner can see a list of hosts, their states, and the UUIDs of instances on those hosts.
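
A minimal sketch of the enable / disable, removal, and listing rules, working only on the Pcloud aggregate metadata. It assumes `'enabled'` as the counterpart of the documented `'disabled'` state value and a hypothetical `instances_on` mapping from real hostname to instance UUIDs; all function names are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Simplified, hypothetical stand-in for a Nova host aggregate."""
    name: str
    hosts: set = field(default_factory=set)
    metadata: dict = field(default_factory=dict)


def set_host_state(pcloud, user_name, enabled):
    hostname = pcloud.metadata["pcloud:host:%s" % user_name]
    pcloud.metadata["pcloud:host_state:%s" % hostname] = (
        "enabled" if enabled else "disabled")


def remove_host(pcloud, user_name, instances_on):
    """Release a host; it must be disabled and empty first."""
    key = "pcloud:host:%s" % user_name
    hostname = pcloud.metadata[key]
    state_key = "pcloud:host_state:%s" % hostname
    if pcloud.metadata.get(state_key) != "disabled":
        raise ValueError("host must be disabled before removal")
    if instances_on.get(hostname):
        raise ValueError("host must be empty before removal")
    pcloud.hosts.discard(hostname)
    del pcloud.metadata[key]
    del pcloud.metadata[state_key]


def list_hosts(pcloud, instances_on):
    """Owner's view, keyed by user-assigned names, never real hostnames."""
    prefix = "pcloud:host:"
    result = {}
    for key, hostname in pcloud.metadata.items():
        if key.startswith(prefix):
            result[key[len(prefix):]] = {
                "state": pcloud.metadata["pcloud:host_state:%s" % hostname],
                "instances": list(instances_on.get(hostname, [])),
            }
    return result


pc = Aggregate("Pcloud:p", {"h2"}, {"pcloud:type": "pcloud",
                                    "pcloud:host:web1": "h2",
                                    "pcloud:host_state:h2": "disabled"})
instances = {"h2": ["uuid-1"]}
set_host_state(pc, "web1", enabled=True)
print(list_hosts(pc, instances))
# {'web1': {'state': 'enabled', 'instances': ['uuid-1']}}
```

Keeping the state in Pcloud metadata, rather than toggling the compute service, leaves the service switch free for administrators.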

Tenant Operations

The owner of a Pcloud can authorize other tenants to be able to schedule to the Pcloud. Authorizing a tenant adds the following record to the aggregate metadata:

| Key | Value | Notes |
|---|---|---|
| pcloud:<tenant_id> | 'pcloud-tenant' | Indicates that <tenant_id> can schedule to this Pcloud |

To avoid being limited by the metadata record size, a separate metadata value is created for each authorized tenant. The owner of a Pcloud is automatically added as an authorized tenant at creation time, but can remove themselves if required.
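
One metadata entry per tenant makes authorization a simple key lookup. The sketch below treats the aggregate metadata as a plain dict; the helper names are hypothetical. Checking the `'pcloud-tenant'` marker value, not just the key, keeps other `pcloud:` keys such as `pcloud:owner` from being mistaken for tenants.

```python
TENANT_MARKER = "pcloud-tenant"


def authorize_tenant(metadata, tenant_id):
    """Record that tenant_id may schedule to this Pcloud."""
    metadata["pcloud:%s" % tenant_id] = TENANT_MARKER


def revoke_tenant(metadata, tenant_id):
    """Remove a tenant's authorization (a no-op if it was never granted)."""
    if metadata.get("pcloud:%s" % tenant_id) == TENANT_MARKER:
        del metadata["pcloud:%s" % tenant_id]


def is_authorized(metadata, tenant_id):
    return metadata.get("pcloud:%s" % tenant_id) == TENANT_MARKER


def authorized_tenants(metadata):
    """List tenants, using the marker value to skip other pcloud: keys."""
    return sorted(key[len("pcloud:"):] for key, value in metadata.items()
                  if value == TENANT_MARKER)


md = {"pcloud:type": "pcloud", "pcloud:owner": "tenant-a",
      "pcloud:tenant-a": TENANT_MARKER}
authorize_tenant(md, "tenant-b")
revoke_tenant(md, "tenant-a")  # the owner may remove themselves
print(authorized_tenants(md))  # ['tenant-b']
```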

Scheduler Configuration

Where a scheduler filter can be configured through aggregate properties, it is possible to provide that configuration on a per-Pcloud basis. Currently the Core Filter and RAM Filter support per-aggregate configuration, so the Pcloud API provides the capability to set the appropriate values on the underlying aggregate.
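
A sketch of what setting those values could amount to, assuming the `cpu_allocation_ratio` and `ram_allocation_ratio` metadata keys read by Nova's AggregateCoreFilter and AggregateRamFilter. The validation rule and the helper name `set_allocation_ratios` are illustrative assumptions, not the documented Pcloud API.

```python
def set_allocation_ratios(metadata, cpu_ratio=None, ram_ratio=None):
    """Store per-Pcloud overcommit ratios on the aggregate metadata."""
    for key, ratio in (("cpu_allocation_ratio", cpu_ratio),
                       ("ram_allocation_ratio", ram_ratio)):
        if ratio is None:
            continue  # leave any existing value untouched
        ratio = float(ratio)
        if ratio <= 0:
            raise ValueError("%s must be positive" % key)
        # Metadata values are strings; the filter is expected to parse them.
        metadata[key] = str(ratio)


md = {"pcloud:type": "pcloud"}
set_allocation_ratios(md, cpu_ratio=4, ram_ratio=1.5)
print(md["cpu_allocation_ratio"], md["ram_allocation_ratio"])  # 4.0 1.5
```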

Using a Pcloud

Scheduling is controlled by the PcloudFilter, which must be configured as part of the filter scheduler.

A user requests that an instance be scheduled to a Pcloud by passing the scheduler hint "pcloud=<pcloud_uuid>".

The Pcloud filter implements the following rules for each host:

- If the user has not specified a Pcloud, a host passes only if it is in neither a Pcloud aggregate nor a host flavor aggregate

- If the user has specified a Pcloud, a host only passes if it is in the specified Pcloud, is Enabled, and the Tenant is authorized to use that Pcloud

- In all other cases the host does not pass
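
The three rules can be expressed as a single predicate. In Nova this logic would sit in the `host_passes(host_state, filter_properties)` method of a `BaseHostFilter` subclass, with the hint taken from `filter_properties['scheduler_hints']`. The standalone sketch below avoids importing Nova, so its function name and arguments are hypothetical. It also assumes `'enabled'` as the enabled state value and uses a single hostname string for both membership and the state key.

```python
from dataclasses import dataclass, field


@dataclass
class Aggregate:
    """Simplified, hypothetical stand-in for a Nova host aggregate."""
    name: str
    hosts: set = field(default_factory=set)
    metadata: dict = field(default_factory=dict)


PCLOUD_TYPES = ("pcloud", "host_flavor_pool")


def pcloud_host_passes(hostname, aggregates, scheduler_hints, tenant_id):
    """Apply the three Pcloud filter rules to a single host."""
    requested = scheduler_hints.get("pcloud")
    host_aggs = [a for a in aggregates if hostname in a.hosts]
    if requested is None:
        # Rule 1: ordinary requests avoid all Pcloud-managed hosts.
        return not any(a.metadata.get("pcloud:type") in PCLOUD_TYPES
                       for a in host_aggs)
    for agg in host_aggs:
        md = agg.metadata
        if md.get("pcloud:type") == "pcloud" and md.get("pcloud:id") == requested:
            # Rule 2: host is in the requested Pcloud; check state and tenant.
            enabled = md.get("pcloud:host_state:%s" % hostname) == "enabled"
            authorized = md.get("pcloud:%s" % tenant_id) == "pcloud-tenant"
            return enabled and authorized
    # Rule 3: everything else is rejected.
    return False


pool = Aggregate("Pcloud:host_flavor:large", {"h1", "h2"},
                 {"pcloud:type": "host_flavor_pool"})
pc = Aggregate("Pcloud:p1", {"h1"},
               {"pcloud:type": "pcloud", "pcloud:id": "p1",
                "pcloud:host_state:h1": "enabled",
                "pcloud:t1": "pcloud-tenant"})
aggs = [pool, pc]
print(pcloud_host_passes("h1", aggs, {"pcloud": "p1"}, "t1"))  # True
print(pcloud_host_passes("h3", aggs, {}, "t1"))  # True
```

Because this is just one more filter, the other configured filters (availability zones, affinity, etc.) still run on whatever hosts it lets through.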

Examples of using Pclouds

For examples using the nova client, see the Pcloud Examples page (WholeHostAllocation-pcloud-example).