Obsolete:ArchitecturalOverview


Revision as of 23:46, 16 February 2013


This page is outdated

The content of this page has not been updated for a very long time. Sections of this page are incorrect when referring to the current release.

Architectural Overview for OpenStack Compute

Live notes for this topic may be taken at: http://etherpad.openstack.org/Architecture and http://etherpad.openstack.org/nova-archdoc

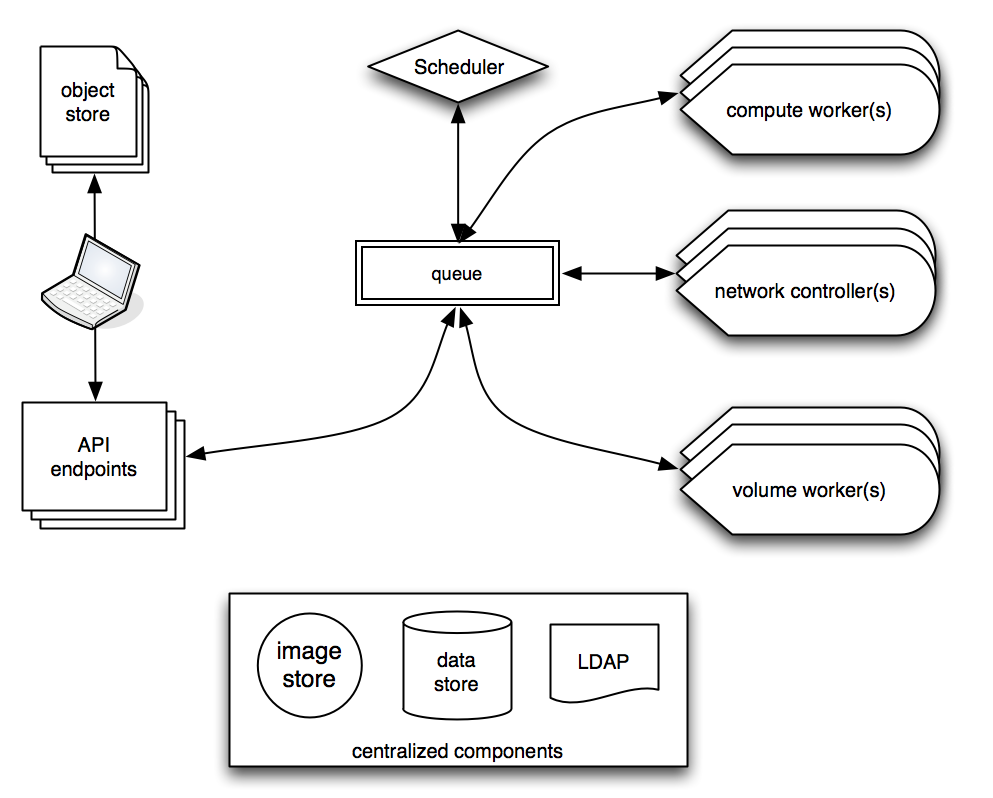

“Small” components, loosely coupled

- Queue based (currently AMQP/RabbitMQ)

- Flexible schema for datastore (currently Redis)

- LDAP (allows for integration with MS Active Directory via translucent proxy)

- Workers & Web hooks (be of the web)

- Asynchronous everything (don't block)

- Components (queue, datastore, http endpoints, ...) should scale independently and allow visibility into internal state (for the pretty charts/operations)

Development goals

- Testing & Continuous Integration

- Fakes (allows development on a laptop)

- Adaptable (the goal is to make integration with an organization's existing resources easier)

Queue

- Each worker/agent listens on a general topic and on a subtopic for its own node. For example, "compute" and "compute:hostname".

- Messages in the queue currently consist of a topic, a method, and arguments, which map to a method in the worker's Python class

- Exposed via method calls:

- rpc.cast sends the message and does not wait for a response

- rpc.call sends the message and waits for the response
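
The topic/subtopic routing and the cast/call distinction can be sketched with an in-memory fake queue, in the spirit of the "Fakes" development goal. This is a minimal sketch, not Nova's rpc module: `FakeQueue`, `ComputeWorker`, `subscribe`, and the message dict layout are hypothetical. The fake hands messages on a topic to its consumers round-robin, whereas the real broker gives each message to whichever consumer takes it first, and `cast` here runs synchronously and simply discards the result instead of returning before the work is done.

```python
import itertools
from collections import defaultdict


class FakeQueue:
    """Hypothetical in-memory stand-in for the AMQP broker."""

    def __init__(self):
        self._consumers = defaultdict(list)  # topic -> workers consuming it
        self._cursor = {}                    # topic -> round-robin iterator

    def subscribe(self, worker, topic, host):
        # Each worker listens on the general topic and on its node subtopic.
        for t in (topic, f"{topic}:{host}"):
            self._consumers[t].append(worker)
            self._cursor[t] = itertools.cycle(self._consumers[t])

    def _dispatch(self, topic, msg):
        # Exactly one consumer of the topic handles the message; the method
        # name and arguments map onto a method of the worker's class.
        worker = next(self._cursor[topic])
        return getattr(worker, msg["method"])(**msg["args"])

    def cast(self, topic, msg):
        """Send without waiting for a result (the result is dropped)."""
        self._dispatch(topic, msg)

    def call(self, topic, msg):
        """Send and wait for the result."""
        return self._dispatch(topic, msg)


class ComputeWorker:
    """Hypothetical compute worker whose methods are invoked by messages."""

    def __init__(self, host):
        self.host = host
        self.instances = []

    def run_instance(self, instance_id):
        self.instances.append(instance_id)

    def get_host(self):
        return self.host


queue = FakeQueue()
node1, node2 = ComputeWorker("node1"), ComputeWorker("node2")
for worker in (node1, node2):
    queue.subscribe(worker, "compute", worker.host)

# General topic: each cast is handled by exactly one of the workers.
queue.cast("compute", {"method": "run_instance", "args": {"instance_id": "i-1"}})
queue.cast("compute", {"method": "run_instance", "args": {"instance_id": "i-2"}})

# Subtopic: addressed to one specific node, and the caller waits for the answer.
print(queue.call("compute:node2", {"method": "get_host", "args": {}}))  # prints node2
```

Because work on the general topic goes to whichever worker picks it up, this queue also acts as the only "scheduler" the design currently has.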

Datastore

- Pre-Austin, data is stored in Redis 2.0 (RC)

- Do the work on write - make reads FAST

- Maintain indexes / lists of common subsets

- Use pools (SETs in Redis) that are drained to hand out IPs, instead of tracking what is allocated
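
The "drain a pool" and "do the work on write" ideas can be sketched as follows. To stay runnable without a Redis server, `FakeDatastore` is a hypothetical dict-and-set stand-in whose `sadd`/`spop`/`smembers`/`hset` methods only mimic the Redis commands of the same names. The key names (`pool:<network>`, `project:<name>:instances`, `instance:<id>`) and the helper functions are assumptions, not Nova's actual schema.

```python
import ipaddress


class FakeDatastore:
    """Hypothetical dict-and-set stand-in for Redis."""

    def __init__(self):
        self.sets = {}    # key -> set of strings, mimicking Redis SETs
        self.hashes = {}  # key -> dict, mimicking Redis HASHes

    def sadd(self, key, *members):
        self.sets.setdefault(key, set()).update(members)

    def spop(self, key):
        # Like Redis SPOP: remove and return an arbitrary member, or None if empty.
        members = self.sets.get(key)
        return members.pop() if members else None

    def smembers(self, key):
        return set(self.sets.get(key, ()))

    def hset(self, key, mapping):
        self.hashes.setdefault(key, {}).update(mapping)


def create_pool(db, network):
    # Done once, up front: every usable host address goes into the pool.
    hosts = ipaddress.ip_network(network).hosts()
    db.sadd(f"pool:{network}", *(str(ip) for ip in hosts))


def allocate_ip(db, network, project, instance_id):
    # Popping from the pool is the allocation; nothing scans an "allocated" list.
    ip = db.spop(f"pool:{network}")
    if ip is None:
        raise RuntimeError(f"no free addresses left in {network}")
    # Do the work on write: update the per-project index now so reads stay cheap.
    db.hset(f"instance:{instance_id}", {"ip": ip, "project": project})
    db.sadd(f"project:{project}:instances", instance_id)
    return ip


def release_ip(db, network, instance_id):
    # Return the address to the pool and drop the instance from the index.
    record = db.hashes.pop(f"instance:{instance_id}")
    db.sets[f"project:{record['project']}:instances"].discard(instance_id)
    db.sadd(f"pool:{network}", record["ip"])


db = FakeDatastore()
create_pool(db, "10.0.0.0/29")  # six usable hosts: 10.0.0.1 to 10.0.0.6
ip1 = allocate_ip(db, "10.0.0.0/29", "alpha", "i-1")
ip2 = allocate_ip(db, "10.0.0.0/29", "alpha", "i-2")
print(len(db.smembers("pool:10.0.0.0/29")))            # prints 4
print(sorted(db.smembers("project:alpha:instances")))  # prints ['i-1', 'i-2']
```

Listing a project's instances is a single set read, because the index was kept up to date when each allocation was written.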

Delta

- There is no scheduler yet (instances are distributed via the queue to the first worker that consumes the message)

- The object store in Nova is a naive stub that would be replaced with Cloud Files in production (a simple object store that mimics Cloud Files might be useful for development)

- Tornado should be phased out in favor of a WSGI-based web framework

Networking

Currently, there are three strategies for networking, implemented by different managers:

- FlatManager -- IP addresses are allocated from a network and injected into the image on launch. All instances are attached to the same manually configured bridge.

- FlatDHCPManager -- IP addresses are allocated from a network, and a single bridge is created for all instances. A DHCP server is started to hand out the addresses.

- VlanManager -- each project gets its own VLAN, bridge, and network. A DHCP server is started for each VLAN, and each instance is bridged into its project's VLAN.
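
VlanManager's per-project isolation can be sketched as an allocator that gives each new project the next VLAN ID, a bridge named after that VLAN, and the next subnet carved from a fixed range. The class names, the `10.0.0.0/16` range, the /24 subnet size, the starting VLAN of 100, and the `br<vlan>` bridge naming are all assumptions for illustration. Under FlatManager or FlatDHCPManager, by contrast, every project would get the same bridge and network.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class ProjectNetwork:
    project: str
    vlan: int
    bridge: str
    cidr: ipaddress.IPv4Network


class VlanAllocator:
    """Hypothetical sketch: one VLAN, bridge and subnet per project."""

    def __init__(self, fixed_range="10.0.0.0/16", subnet_prefix=24, vlan_start=100):
        # Lazily carve the fixed range into equal-sized per-project subnets.
        self._subnets = ipaddress.ip_network(fixed_range).subnets(new_prefix=subnet_prefix)
        self._next_vlan = vlan_start
        self._networks = {}

    def network_for(self, project):
        # A project always gets the same network back once it has one.
        if project not in self._networks:
            try:
                cidr = next(self._subnets)
            except StopIteration:
                raise RuntimeError("fixed range exhausted") from None
            vlan = self._next_vlan
            self._next_vlan += 1
            self._networks[project] = ProjectNetwork(project, vlan, f"br{vlan}", cidr)
        return self._networks[project]


alloc = VlanAllocator()
for name in ("alpha", "beta", "alpha"):
    net = alloc.network_for(name)
    print(net.project, net.vlan, net.bridge, net.cidr)
# alpha 100 br100 10.0.0.0/24
# beta 101 br101 10.0.1.0/24
# alpha 100 br100 10.0.0.0/24
```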

Bridges, VLANs, DHCP servers, and firewall rules are created by the linux_net driver. This layer of abstraction exists so that, at some point, hardware switches etc. can be configured using the same managers.
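
The manager/driver split can be sketched as an abstract driver interface that manager code programs against, with a Linux implementation behind it. This is a hypothetical illustration rather than the actual linux_net code: `NetworkDriver`, `LinuxNetDriver`, `VlanNetworkSetup`, and the `eth0` default are invented names, and the driver only records the iproute2/brctl commands it would run instead of executing them. A driver for a hardware switch would subclass `NetworkDriver` and leave the manager-side code unchanged.

```python
from abc import ABC, abstractmethod


class NetworkDriver(ABC):
    """Hypothetical interface: what a manager needs from the layer below it."""

    @abstractmethod
    def ensure_vlan(self, vlan, interface): ...

    @abstractmethod
    def ensure_bridge(self, bridge, interface): ...


class LinuxNetDriver(NetworkDriver):
    """Dry-run stand-in: records host commands instead of running them."""

    def __init__(self):
        self.commands = []

    def ensure_vlan(self, vlan, interface):
        name = f"vlan{vlan}"
        self.commands.append(["ip", "link", "add", "link", interface,
                              "name", name, "type", "vlan", "id", str(vlan)])
        return name

    def ensure_bridge(self, bridge, interface):
        self.commands.append(["brctl", "addbr", bridge])
        self.commands.append(["brctl", "addif", bridge, interface])
        return bridge


class VlanNetworkSetup:
    """Manager-side logic that only talks to the driver interface."""

    def __init__(self, driver: NetworkDriver, physical_interface="eth0"):
        self.driver = driver
        self.physical_interface = physical_interface

    def setup_project(self, vlan, bridge):
        # Tag a VLAN interface on the physical NIC, then bridge instances onto it.
        vlan_if = self.driver.ensure_vlan(vlan, self.physical_interface)
        return self.driver.ensure_bridge(bridge, vlan_if)


driver = LinuxNetDriver()
bridge = VlanNetworkSetup(driver).setup_project(vlan=100, bridge="br100")
for cmd in driver.commands:
    print(" ".join(cmd))
# ip link add link eth0 name vlan100 type vlan id 100
# brctl addbr br100
# brctl addif br100 vlan100
```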

For more discussion of network architecture, see Networking.