Neutron/Networking-vSphere

Overview

OVSvApp Solution for ESX based deployments

A cloud operator who wants to run OpenStack with vSphere using only open source components can currently do so only by relying on nova-network. There is no viable open source reference implementation for ESX deployments that lets the operator use the advanced networking capabilities Neutron provides. This page describes a Neutron-supported solution for vSphere deployments in the form of a service VM, called the OVSvApp VM, through which the traffic of the ESX tenant VMs is steered.

The OVSvApp solution lets customers host VMs on ESX/ESXi hypervisors, with the flexibility of creating port groups dynamically on a Distributed Virtual Switch or Virtual Standard Switch. Tenant VM traffic is then steered through the OVSvApp VM, which provides the underlying VLAN and VXLAN infrastructure for tenant VM communication, along with Security Group features based on OpenStack. The benefit is faster deployment on ESX environments, with minimal effort required to add new OpenStack features such as DVR, LBaaS and VPNaaS.

OVSvApp Benefits:

- Allows vendors to migrate their invested ESX workloads to a cloud.

- Allows vendors to deploy ESX-based clouds with native OpenStack, with little or no learning curve.

- Allows vendors to leverage some of the advanced networking capabilities that Neutron provides.

- Removes the need to rely on nova-network (which is deprecated).

- Does not require special licenses from any vendors to deploy, run and manage.

- Is aligned with the OpenStack Kilo release.

- Is available upstream in OpenStack Neutron under the project “stackforge/networking-vsphere”: https://github.com/stackforge/networking-vsphere

Architecture of OVSvApp Solution

OVSvApp VM:

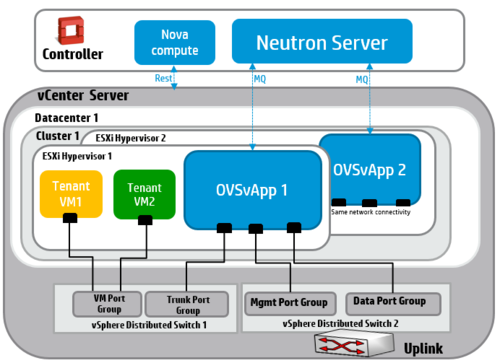

The OVSvApp solution comprises a service VM called the OVSvApp VM (shown in blue), hosted on each ESXi hypervisor within a cluster, and two vSphere Distributed Switches (VDS). The OVSvApp VM runs Ubuntu 12.04 LTS or later as its guest operating system and has Open vSwitch 2.1.0 or later installed. It also runs an agent called the OVSvApp agent.

For VLAN provisioning, two VDS per datacenter are required; for VXLAN, two VDS per cluster are required. The first VDS (named vSphere Distributed Switch 1) does not need any uplinks, which means it has no external network connectivity, but it provides connectivity between the tenant VMs and the OVSvApp VM. Each tenant VM is associated with a port group (VLAN). A tenant VM's data traffic reaches the OVSvApp VM via the VM's own port group and then through another port group, the Trunk Port group, with which the OVSvApp VM is associated. The Trunk Port group is defined with “VLAN type” set to “VLAN Trunking” and “VLAN trunk range” set to the range of VLANs carrying tenant VM traffic, excluding the management VLAN as explained below.

The second VDS (named vSphere Distributed Switch 2) has one or two uplinks and provides management and data connectivity to the OVSvApp VM. The OVSvApp VM is also associated with two other port groups: the Management Port group and the Data Port group. The Management Port group is defined with “VLAN type” set to “None”, or to “VLAN” with a specific VLAN ID in “VLAN ID”, or to “VLAN Trunking” with “VLAN trunk range” set to the range of management VLANs. The Data Port group is defined with “VLAN type” set to “VLAN Trunking” and “VLAN trunk range” set to the range of VLANs carrying tenant VM traffic, excluding the management VLAN. The management VLAN and the data VLANs can share the same uplink or use different uplinks, and those uplink ports can be part of the same VDS or of separate VDS.
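The port group layout described above can be checked with a small script before deployment. The following is only an illustrative sketch in Python, not part of the networking-vsphere code: the `PortGroup` class, the helper functions, the port group names and the VLAN numbers are all hypothetical and chosen for the example. It models the three port groups attached to the OVSvApp VM, verifies that the trunk ranges exclude the management VLAN, and computes how many VDS the stated rule (two per datacenter for VLAN, two per cluster for VXLAN) would call for.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class PortGroup:
    """Hypothetical model of a VDS port group's VLAN settings."""
    name: str
    vlan_type: str                                 # "None", "VLAN" or "VLAN Trunking"
    vlan_id: Optional[int] = None                  # used when vlan_type == "VLAN"
    trunk_range: Optional[Tuple[int, int]] = None  # inclusive, for "VLAN Trunking"


def trunk_excludes(pg: PortGroup, vlan_id: int) -> bool:
    """Return True if the trunk range of pg does not contain vlan_id."""
    if pg.vlan_type != "VLAN Trunking" or pg.trunk_range is None:
        raise ValueError(f"{pg.name} is not a trunking port group")
    low, high = pg.trunk_range
    return not (low <= vlan_id <= high)


def required_vds_count(network_type: str, datacenters: int, clusters: int) -> int:
    """Two VDS per datacenter for VLAN, two VDS per cluster for VXLAN."""
    if network_type == "vlan":
        return 2 * datacenters
    if network_type == "vxlan":
        return 2 * clusters
    raise ValueError(f"unknown network type: {network_type}")


# Illustrative values only; real VLAN IDs depend on the site.
MGMT_VLAN = 100
TENANT_RANGE = (1000, 2000)

trunk_pg = PortGroup("trunk-pg", "VLAN Trunking", trunk_range=TENANT_RANGE)
data_pg = PortGroup("data-pg", "VLAN Trunking", trunk_range=TENANT_RANGE)
mgmt_pg = PortGroup("mgmt-pg", "VLAN", vlan_id=MGMT_VLAN)

# Both trunking port groups must leave out the management VLAN.
for pg in (trunk_pg, data_pg):
    if not trunk_excludes(pg, MGMT_VLAN):
        raise SystemExit(f"{pg.name} overlaps management VLAN {MGMT_VLAN}")

print(required_vds_count("vlan", datacenters=1, clusters=3))
print(required_vds_count("vxlan", datacenters=1, clusters=3))
```

A check like this only validates a planned layout; creating the port groups themselves would still be done through vCenter or its API.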

Components of OVSvApp VM

Work in progress

Deployment

Work in progress

References

Work in progress