Cinder/NewLVMbasedDriverForSharedStorageInCinder

Enhancement of LVM driver to support a volume-group on shared storage volume

Latest revision as of 22:20, 13 June 2014

Related blueprints

- https://blueprints.launchpad.net/cinder/+spec/lvm-driver-for-shared-storage

- https://blueprints.launchpad.net/nova/+spec/lvm-driver-for-shared-storage

Documents:

- Cinder-Support_LVM_on_a_sharedLU: File:Cinder-Support LVM on a sharedLU.pdf

Goals

- The goal of this blueprint is to support a volume-group on a shared storage volume that is visible from multiple nodes.

Overview

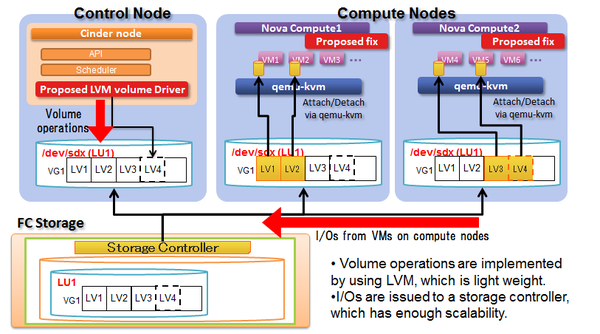

Currently, many vendor storage systems have a vendor-specific Cinder driver, and these drivers support rich features for creating, deleting, and snapshotting volumes. On the other hand, many storage systems have no dedicated Cinder driver. LVM is one approach that provides lightweight features such as volume creation, deletion, extension, and snapshots. By using the Cinder LVM driver with a volume-group on a shared storage volume, Cinder can handle these storage systems as well.

The purpose of this blueprint is to support storage systems that have no dedicated Cinder driver by using LVM with a volume-group (VG) on a shared storage volume.

Approach

The current LVM driver uses a software iSCSI target (tgtd or LIO) on the Cinder node to make logical volumes (LVs) accessible from multiple nodes.

When a volume-group on a shared storage volume (over Fibre Channel, iSCSI, or any other transport) is visible from all nodes, that is, from the Cinder node and the compute nodes, these nodes can access the volume-group simultaneously (*1) without a software iSCSI target.

If the LVM driver supports this type of volume-group, qemu-kvm on each compute node can attach a created LV to an instance, or detach it, through the device path “/dev/VG Name/LV Name” on that node, with no software iSCSI operations. (*2)

The enhancement therefore supports “a volume-group visible from multiple nodes” in place of an iSCSI target.

(*1) Some operations require exclusive access control. See the section “Exclusive access control for metadata”.

(*2) The device file for accessing the LV is created after the “lvchange -ay /dev/VG Name/LV Name” command is run on a compute node.

Benefits of this driver

- General benefits

  - For a given storage system, reduce the load on the hardware-based storage by offloading volume operations to software (LVM).

  - Provide quicker volume creation and snapshot creation, with no load on the storage system.

  - Enable Cinder to use any kind of shared storage volume without a storage-specific Cinder driver.

  - Provide better I/O performance through direct volume access over Fibre Channel.

Support target environment

A volume-group visible from multiple nodes

Detail of Design

- The enhancement adds support for a volume-group (*3) visible from multiple nodes to the LVM driver.

- Most of the volume handling features can be inherited from the current LVM driver.

- Software iSCSI operations are bypassed.

- The additional work items are:

  - [Cinder]

    - Add a new driver class, as a subclass of LVMVolumeDriver and driver.VolumeDriver, that stores the path of the device file “/dev/VG Name/LV Name”.

    - Add a new connector, as a subclass of InitiatorConnector, for volume migration.

  - [Nova]

    - Add a new connector, as a subclass of LibvirtBaseVolumeDriver, that runs “lvs” and “lvchange” in order to create or delete the device file of an LV.
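The Cinder-side work item can be pictured with a minimal sketch. It does not import Cinder: `SharedLVMDriverSketch` is a hypothetical stand-in for the proposed LVMVolumeDriver subclass, the method signature is simplified, and the `"lvm"` type string and `"device_path"` key are assumed names rather than anything defined by the blueprint.

```python
class SharedLVMDriverSketch:
    """Hypothetical stand-in for the proposed LVMVolumeDriver subclass."""

    def __init__(self, volume_group):
        # Name of the volume-group that every node can see.
        self.volume_group = volume_group

    def local_path(self, volume_name):
        # Device file of the LV, in the /dev/<VG name>/<LV name> form.
        return f"/dev/{self.volume_group}/{volume_name}"

    def initialize_connection(self, volume_name):
        # Instead of iSCSI target details, hand back the device path.
        # "lvm" and "device_path" are assumed names for this sketch.
        return {
            "driver_volume_type": "lvm",
            "data": {"device_path": self.local_path(volume_name)},
        }


driver = SharedLVMDriverSketch("cinder-volumes-shared")
info = driver.initialize_connection("volume-0001")
print(info["data"]["device_path"])
```

The point of the sketch is that the connection information carries only a local device path, so the compute node needs no iSCSI login before attaching the LV.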

(*3) The volume-group must be prepared by a storage administrator. Example for FC storage:

(a) Create LU1 using the storage management tool.

(b) Register the WWNs of the control node and the compute nodes in the host group to permit access to LU1. Each node recognises LU1 as a SCSI disk (sdx) after a SCSI scan or a reboot.

(c) Create VG1 on LU1. VG1 is also recognised on each node after the “vgscan” command is executed.

(d) Set VG1 as the value of the Cinder "volume_group" parameter.
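Steps (c) and (d) can be sketched as a small helper that only builds the LVM command lines and does not execute them. The device name `/dev/sdx` follows the "sdx" placeholder above, the explicit `pvcreate` step is an assumption (the text only mentions creating the VG and running “vgscan”), and the function name is hypothetical.

```python
def shared_vg_setup_commands(device, vg_name):
    """Build the commands for preparing a shared volume-group.

    Nothing is executed here; the real commands need root privileges.
    """
    return {
        # Run once, on a single node that can see the LU.
        "one_node": [
            ["pvcreate", device],          # assumed step: initialise the LU as a PV
            ["vgcreate", vg_name, device],  # step (c): create the VG on the LU
        ],
        # Run on each remaining node so that it recognises the new VG.
        "every_other_node": [
            ["vgscan"],
        ],
    }


plan = shared_vg_setup_commands("/dev/sdx", "cinder-volumes-shared")
for where, commands in plan.items():
    for command in commands:
        print(f"{where}: {' '.join(command)}")
```

The VG name passed in here is the same value that step (d) places in the "volume_group" parameter.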

Prerequisites

- Use QEMU/KVM as a hypervisor (via libvirt compute driver)

- A volume-group on a volume attached to multiple nodes

- Exclude the volume-group from the targets of lvmetad on compute nodes

  - When a compute node attaches a created volume to a virtual machine, it needs the latest LVM metadata. However, lvmetad caches LVM metadata, which prevents the node from obtaining the latest version.

Exclusive access control for metadata

- LVM keeps a management region that holds the metadata describing the volume-group configuration. If multiple nodes update the metadata simultaneously, the metadata will be corrupted. Exclusive access control is therefore necessary.

- Specifically, only the Cinder node is permitted to perform operations that update metadata.

- The following operations update metadata.

  - Volume creation

    - When the Cinder node creates a new LV on a volume-group, the LVM metadata is updated, but the compute nodes are not notified of the update. At this point, only the Cinder node knows about it.

  - Volume deletion

    - Delete an LV on a volume-group from the Cinder node.

  - Volume extend

    - Extend an LV on a volume-group from the Cinder node.

  - Snapshot creation

    - Create a snapshot of an LV on a volume-group from the Cinder node.

  - Snapshot deletion

    - Delete a snapshot of an LV on a volume-group from the Cinder node.

- The following operations do not update metadata. They are permitted on every compute node.

  - Volume attachment

    - When attaching an LV to a guest instance on a compute node, the compute node has to reload the LVM metadata using "lvscan" or "lvs", because it does not know the latest LVM metadata.

    - After reloading the metadata, the compute node recognises the latest status of the volume-group and its LVs.

    - Then, in order to attach the new LV, the compute node needs to create a device file such as /dev/"volume-group name"/"LV name" using the "lvchange -ay" command.

    - After the LV is activated, nova-compute can attach the LV to the guest VM.

  - Volume detachment

    - After detaching a volume from the guest VM, the compute node deactivates the LV using "lvchange -an". As a result, the device file that is no longer needed is removed from the compute node.
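The attach and detach sequences on a compute node can be sketched as command builders. This is an illustration under assumptions, not the Nova connector itself: the function names are hypothetical, nothing is executed, and "lvs" is used for the metadata reload where "lvscan" would serve the same purpose in the description above.

```python
def device_path(vg_name, lv_name):
    """Device file of an LV, in the /dev/<VG name>/<LV name> form."""
    return f"/dev/{vg_name}/{lv_name}"


def attach_commands(vg_name, lv_name):
    """Commands a compute node runs before attaching the LV to a guest."""
    return [
        ["lvs", vg_name],                                    # reload the LVM metadata
        ["lvchange", "-ay", device_path(vg_name, lv_name)],  # activate: creates the device file
    ]


def detach_commands(vg_name, lv_name):
    """Commands a compute node runs after detaching the LV from a guest."""
    return [
        ["lvchange", "-an", device_path(vg_name, lv_name)],  # deactivate: removes the device file
    ]


for command in attach_commands("cinder-volumes-shared", "volume-0001"):
    print(" ".join(command))
for command in detach_commands("cinder-volumes-shared", "volume-0001"):
    print(" ".join(command))
```

Neither sequence contains a command that writes volume-group metadata, which is why these operations can be left to the compute nodes.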

| Operations | Volume create | Volume delete | Volume extend | Snapshot create | Snapshot delete | Volume attach | Volume detach |
|---|---|---|---|---|---|---|---|
| Cinder node | x | x | x | x | x | - | - |
| Compute node | - | - | - | - | - | x | x |
| Cinder node with compute | x | x | x | x | x | x | x |

x: the operation is permitted on that node. -: the operation is not permitted.
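The table can also be expressed as a lookup, which is one possible way to check an operation against a node role. The role and operation identifiers below are names chosen for this sketch, not identifiers from Cinder or Nova.

```python
# Operations that rewrite volume-group metadata (Cinder node only).
METADATA_OPS = frozenset({
    "volume_create", "volume_delete", "volume_extend",
    "snapshot_create", "snapshot_delete",
})

# Operations that only activate or deactivate an existing LV.
ACTIVATION_OPS = frozenset({"volume_attach", "volume_detach"})

# One entry per row of the table.
ALLOWED = {
    "cinder": METADATA_OPS,
    "compute": ACTIVATION_OPS,
    "cinder_with_compute": METADATA_OPS | ACTIVATION_OPS,
}


def is_permitted(role, operation):
    """Return True if a node with this role may perform the operation."""
    return operation in ALLOWED[role]


print(is_permitted("cinder", "volume_create"))
print(is_permitted("compute", "snapshot_delete"))
```

Keeping the metadata-updating operations in a single set makes the exclusivity rule visible: only roles that include the Cinder node hold that set.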

Configuration

To enable the LVM shared-storage driver (LVMSharedDriver), define the following values in /etc/cinder/cinder.conf.

Example

    [LVM_shared]
    volume_group=cinder-volumes-shared
    volume_driver=cinder.volume.drivers.lvm.LVMSharedDriver
    volume_backend_name=LVM_shared
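As a quick sanity check of the section layout, the example can be parsed with Python's standard `configparser`. This is only an illustration of the structure: Cinder reads its configuration through its own option handling rather than this module, and the `EXAMPLE` string simply repeats the values shown above.

```python
import configparser

# The example backend section, one option per line.
EXAMPLE = """\
[LVM_shared]
volume_group=cinder-volumes-shared
volume_driver=cinder.volume.drivers.lvm.LVMSharedDriver
volume_backend_name=LVM_shared
"""

parser = configparser.ConfigParser()
parser.read_string(EXAMPLE)

# Collect the options of the backend section into a plain dict.
backend = dict(parser["LVM_shared"])
for key, value in backend.items():
    print(f"{key} = {value}")
```

The `volume_group` value is the name of the shared volume-group prepared by the storage administrator.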