Tricircle

The Tricircle provides networking automation across Neutron in multi-region OpenStack deployments.

Use Cases

To understand the motivation behind the Tricircle project, it helps to look at the end-user use cases it addresses. For an overview, refer to the presentation material: https://docs.google.com/presentation/d/1Zkoi4vMOGN713Vv_YO0GP6YLyjLpQ7fRbHlirpq6ZK4/

0. Telecom application cloud level redundancy in OPNFV Beijing Summit

Shared Networks to Support VNF High Availability Across OpenStack Multi Region Deployment. These slides were presented at the OPNFV Beijing Summit, 2017.

- Slides: https://docs.google.com/presentation/d/1WBdra-ZaiB-K8_m3Pv76o_jhylEqJXTTxzEZ-cu8u2A/

- Video: https://www.youtube.com/watch?v=tbcc7-eZnkY

- OPNFV Summit vIMS Multisite Demo (YouTube): https://www.youtube.com/watch?v=zS0wwPHmDWs

- Video Conference High Availability across multiple OpenStack clouds (YouTube): https://www.youtube.com/watch?v=nK1nWnH45gI

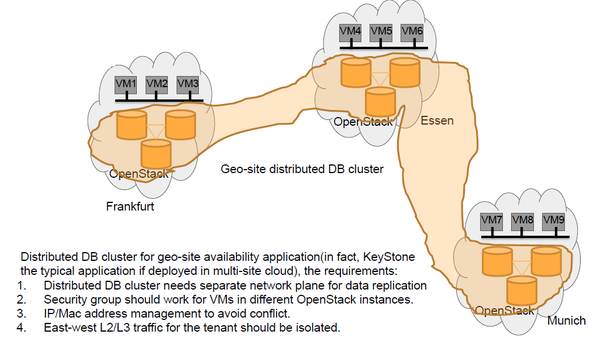

1. Application high availability running over cloud

In telecom and other industries, applications (for example, a MySQL Galera cluster) are normally designed in Active-Active, Active-Passive or N-to-1 node configurations, because they need to be available as much of the time as possible. When such applications are migrated to cloud infrastructure, the active instance(s) are deployed in one OpenStack instance first. Passive or additional active instance(s) are then deployed in one or more other OpenStack instances to achieve 99.999% availability, if it’s required.

This deployment style is required because a single cloud system generally achieves only 99.99% availability. [1] [2]
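
The arithmetic behind these figures can be illustrated with a minimal sketch. It assumes that the OpenStack instances fail independently and that the application stays available as long as at least one instance is up; both are simplifying assumptions rather than claims from the cited SLAs, and the function names are hypothetical.

```python
MINUTES_PER_YEAR = 365 * 24 * 60


def combined_availability(single, copies):
    """Availability of `copies` deployments when any one of them suffices.

    Assumes failures are independent, which real sites only approximate.
    """
    return 1 - (1 - single) ** copies


def downtime_minutes_per_year(availability):
    """Expected unavailable minutes per (non-leap) year."""
    return (1 - availability) * MINUTES_PER_YEAR


single = 0.9999                       # one cloud at 99.99%
pair = combined_availability(single, 2)

print(f"one cloud : {downtime_minutes_per_year(single):.2f} min/year")
print(f"two clouds: {downtime_minutes_per_year(pair):.4f} min/year")
print(f"meets 99.999% target: {pair >= 0.99999}")
```

Under these assumptions, two 99.99% deployments together exceed the 99.999% target, which is why the replication and heartbeat traffic between them becomes the critical design concern.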

To achieve the required high availability, the network architecture across Neutron (especially at Layer 2 and Layer 3) must be designed to carry application state replication and heartbeat traffic.

The picture above shows an application backed by a Galera DB cluster whose nodes are geographically distributed across multiple OpenStack instances.

[1] https://aws.amazon.com/cn/ec2/sla/

[2] https://news.ycombinator.com/item?id=2470298

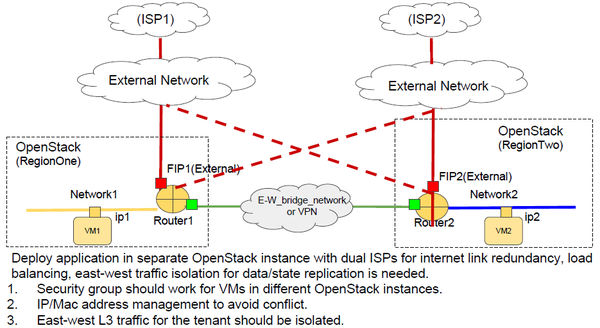

2. Dual ISPs Load Balancing for internet link

An application is deployed in separate OpenStack instances with dual ISPs for internet link redundancy and load balancing. East-west traffic isolation is needed for data/state replication.

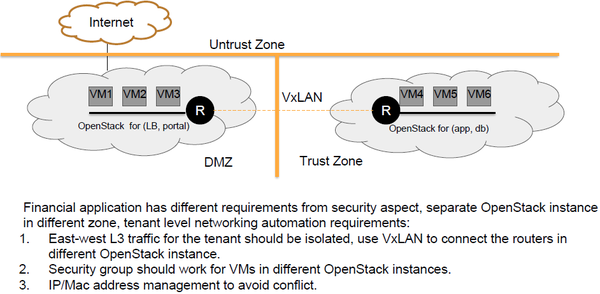

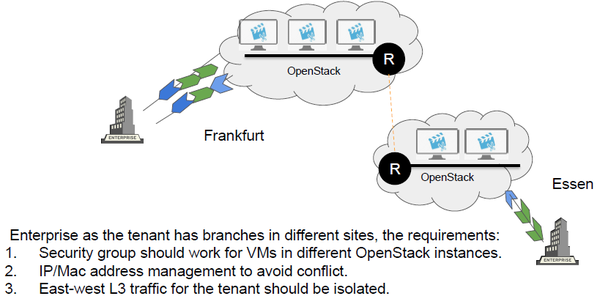

3. Isolation of East-West traffic

In the financial industry, more than one OpenStack instance is deployed: some OpenStack instances are placed in the DMZ and the others in the trusted zone, so that an application, or parts of it, can be placed in zones with different security levels. Although the tenant’s resources are provisioned in different OpenStack instances, east-west traffic among the tenant’s resources should be isolated.

Bandwidth-sensitive, heavy-load applications such as CAD modeling need the cloud to be close to the end user, in distributed edge sites, for a better user experience. Multiple OpenStack instances are therefore deployed in edge sites, and isolated east-west communication between the tenant’s resources is also required.

Similar requirements can also be found in the notes from the ops session "Control Plane Design (multi-region)" at the OpenStack Barcelona summit, lines 25~26 and 47~50: https://etherpad.openstack.org/p/BCN-ops-control-plane-design

4. Cross Neutron L2 network for NFV area

In the NFV (Network Function Virtualization) area, network functions such as routers, NAT and load balancers are virtualized. The cross Neutron L2 networking capabilities provided by the Tricircle, such as IP address space management, IP allocation and global management of L2 network segments, help interconnect VNFs (virtualized network functions) across sites. For example, with vRouter1 in site1 and vRouter2 in site2, these two VNFs can be in one L2 network that spans both sites.
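
The idea of global IP allocation for one L2 network spanning two sites can be sketched as follows. This is an illustration only, not the Tricircle implementation: the `GlobalSubnetAllocator` class, the CIDR and the port names are hypothetical, and details such as gateway reservation and address release are left out.

```python
import ipaddress


class GlobalSubnetAllocator:
    """Hands out unique addresses from one subnet shared by several sites.

    Hypothetical helper: a single allocator owns the address space, so
    two sites can never be given the same IP.
    """

    def __init__(self, cidr):
        self.network = ipaddress.ip_network(cidr)
        self._free = iter(self.network.hosts())  # usable host addresses
        self.allocations = {}                    # ip -> (site, port_name)

    def allocate(self, site, port_name):
        try:
            ip = next(self._free)
        except StopIteration:
            raise RuntimeError("subnet exhausted") from None
        self.allocations[ip] = (site, port_name)
        return ip


allocator = GlobalSubnetAllocator("10.0.1.0/24")
ip1 = allocator.allocate("site1", "vRouter1")
ip2 = allocator.allocate("site2", "vRouter2")

# Both VNFs sit in the same subnet but hold different addresses.
print(ip1, ip2, ip1 in allocator.network, ip2 in allocator.network)
```

Keeping allocation in one place is the design point: if each site allocated from the shared subnet on its own, the two vRouters could end up with conflicting addresses.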

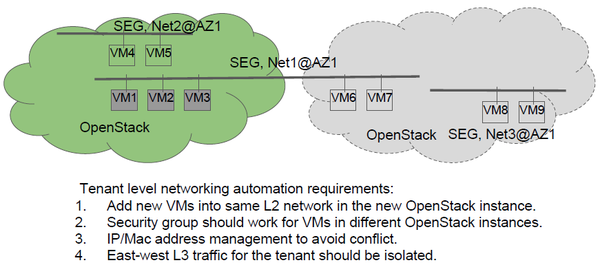

5. Cloud Capacity Expansion

After an OpenStack cloud has been created and many resources have been provisioned on it, the capacity of one of the OpenStack deployments may no longer be enough, and a new OpenStack deployment needs to be added to the cloud. Tenants, however, still want to add VMs to their existing networks; they don’t want to add a new network, router or any other required resources.

Architecture

The Tricircle is now dedicated to networking automation across Neutron in multi-region OpenStack deployments. The design blueprint is developed and continuously improved at https://docs.google.com/document/d/1zcxwl8xMEpxVCqLTce2-dUOtB-ObmzJTbV1uSQ6qTsY/

From the control plane view (cloud management view), the Tricircle makes the Neutron servers in multi-region OpenStack clouds work as one cluster, and enables the creation of global abstract networking resources, such as networks and routers, across multiple OpenStack clouds. From the data plane view (end user resources view), VMs (or bare metal servers or containers) are provisioned in different clouds but can be interconnected via the global abstract networking resources, with tenant level isolation.

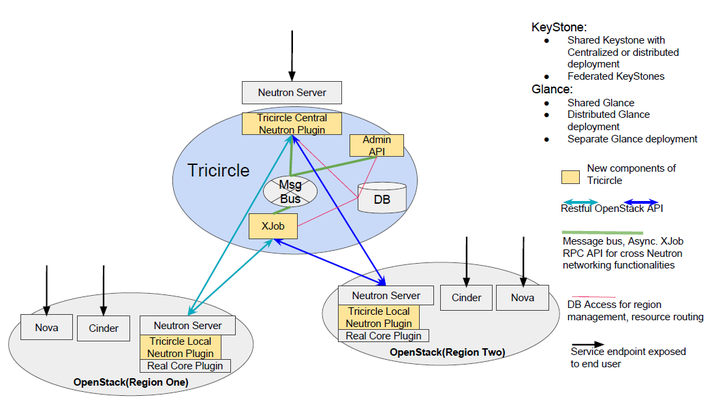

The software design is as follows:

A shared Keystone (centralized or distributed deployment) or federated Keystones can be used for identity management for the Tricircle and multiple OpenStack instances.

- Tricircle Local Neutron Plugin

  - It runs under the Neutron server in the same process, like the OVN/Dragonflow Neutron plugins.

  - The Tricircle Local Neutron Plugin triggers cross Neutron networking automation. It is a shim layer between the real core plugin and the Neutron API server.

  - In the Tricircle context, the Tricircle Local Neutron Plugin can be called “local plugin” for short.

  - The Neutron server with the Tricircle Local Neutron Plugin installed is also called “local Neutron” or “local Neutron server”. Nova/Cinder services that work together with a local Neutron can likewise be called local Nova/local Cinder, in contrast to the central Neutron.

- Tricircle Central Neutron Plugin

  - It runs under the Neutron server in the same process, like the OVN/Dragonflow Neutron plugins.

  - The Tricircle Central Neutron Plugin provides tenant level L2/L3 networking automation across multiple OpenStack instances.

  - In the Tricircle context, the Tricircle Central Neutron Plugin can be called “central plugin” for short.

  - The Neutron server with the Tricircle Central Neutron Plugin installed is also called “central Neutron” or “central Neutron server”.

- Admin API

  - Manages the mappings between OpenStack instances and availability zones.

  - Retrieves object UUID routing.

  - Exposes an API for maintenance.

- XJob

  - Receives and processes cross OpenStack functionalities and other async jobs from the Admin API or the Tricircle Central Neutron Plugin.

  - For example, when an instance is booted for the first time for a project, the router, security group rules, FIP and other resources may not yet have been created in the bottom OpenStack instance. These resources can be created asynchronously to speed up the response to the first instance booting request. This is unlike network, subnet and security group resources, which must be created before an instance boots.

  - The Admin API and the Tricircle Central Neutron Plugin can send an async job to XJob through the message bus, using the RPC API provided by XJob.

- Database

  - The Tricircle has its own database to store pods, jobs and resource routing tables for the Tricircle Central Neutron Plugin, Admin API and XJob.

  - The Tricircle Central Neutron Plugin also reuses the database of the central Neutron server for global management of the tenant's resources such as IP/MAC/network/router.

  - The Tricircle Local Neutron Plugin is a shim layer between the real core plugin and the Neutron API server, so the Neutron DB is still there for the Neutron API server and the real core plugin.
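
The split between resources created up front and resources handed to XJob can be sketched roughly as below. This is a toy model under stated assumptions, not Tricircle code: an in-process `queue.Queue` stands in for the message bus and RPC API, and `XJobWorker`, `CentralPluginSketch`, `prepare_boot` and the resource names are all hypothetical.

```python
import queue
import threading

# Resource types that must exist before an instance can boot (per the text).
SYNC_TYPES = {"network", "subnet", "security_group"}


class XJobWorker(threading.Thread):
    """Consumes async jobs from the bus (a stand-in for RPC over a message bus)."""

    def __init__(self, bus):
        super().__init__(daemon=True)
        self.bus = bus
        self.created = []

    def run(self):
        while True:
            job = self.bus.get()
            try:
                if job is None:           # sentinel: stop the worker
                    return
                self.created.append(job)  # pretend to create the resource
            finally:
                self.bus.task_done()


class CentralPluginSketch:
    """Creates boot-critical resources inline and defers the rest to XJob."""

    def __init__(self, bus):
        self.bus = bus
        self.created = []

    def prepare_boot(self, pod, resources):
        for res_type, name in resources:
            if res_type in SYNC_TYPES:
                self.created.append((pod, res_type, name))  # synchronous
            else:
                self.bus.put((pod, res_type, name))         # asynchronous
        return list(self.created)


def demo():
    bus = queue.Queue()
    worker = XJobWorker(bus)
    worker.start()
    plugin = CentralPluginSketch(bus)
    sync_done = plugin.prepare_boot("pod1", [
        ("network", "net1"), ("router", "r1"),
        ("subnet", "sub1"), ("floating_ip", "fip1"),
    ])
    bus.put(None)  # ask the worker to stop once the queue drains
    bus.join()     # wait until every queued job has been processed
    return sync_done, worker.created


if __name__ == "__main__":
    print(demo())
```

The boot request only waits for the synchronous part; the router and floating IP are picked up later by the worker, which mirrors the reason given above for creating them asynchronously.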

For Glance deployment, there are several choices:

- Shared Glance, if all OpenStack instances are located inside a high bandwidth, low latency site.

- Shared Glance with distributed back-end, if OpenStack instances are located in several sites.

- Distributed Glance deployment: the Glance service is distributed across multiple sites with a distributed back-end.

- Separate Glance deployment: each site is installed with a separate Glance instance and back-end, for cases where no cross-site image sharing is needed.

Value

The Tricircle open source project was developed to meet the demands of the use cases described above:

- Leverage the Neutron API for cross Neutron networking automation, so that the ecosystem around it, such as the CLI, SDK, Heat, Murano and Magnum, can be preserved seamlessly.

- Support modularized capacity expansion in large scale clouds: just add more OpenStack instances, and these OpenStack instances are interconnected at the tenant level.

- L2/L3 networking automation across OpenStack instances.

- Tenant's VMs communicate with each other via L2 or L3 networking across OpenStack instances.

- Security groups applied across OpenStack instances.

- Tenant level IP/MAC address management to avoid conflicts across OpenStack instances.

- Tenant level quota control across OpenStack instances.

Installation and Play

Refer to the documentation at https://docs.openstack.org/developer/tricircle/ for single-node and multi-node setup and the networking guide.

How to read the source code

A guide to reading the source code is available at: https://wiki.openstack.org/wiki/TricircleHowToReadCode

Resources

- Design documentation: Tricircle Design Blueprint, https://docs.google.com/document/d/1zcxwl8xMEpxVCqLTce2-dUOtB-ObmzJTbV1uSQ6qTsY/

- Wiki: https://wiki.openstack.org/wiki/tricircle

- Documentation (Installation, Configuration, Networking guide): https://docs.openstack.org/tricircle/latest/

- Source: https://github.com/openstack/tricircle

- Bugs: http://bugs.launchpad.net/tricircle

- Blueprints: https://launchpad.net/tricircle

- Review Board: https://review.openstack.org/#/q/project:openstack/tricircle

- Announcements: https://wiki.openstack.org/wiki/Meetings/Tricircle#Announcements

- Weekly meeting IRC channel: #openstack-meeting on irc.freenode.net, every Wednesday from 01:00 to 02:00 UTC

- Weekly meeting IRC log: https://wiki.openstack.org/wiki/Meetings/Tricircle#Meeting_minutes_and_logs

- Tricircle project IRC channel: #openstack-tricircle on irc.freenode.net

- Tricircle project IRC channel log: http://eavesdrop.openstack.org/irclogs/%23openstack-tricircle/

- Mailing list: openstack-dev@lists.openstack.org, with [openstack-dev][tricircle] in the mail subject

- New contributor's guide: http://docs.openstack.org/infra/manual/developers.html

- Documentation: http://docs.openstack.org/developer/tricircle

- Tricircle discuss zone: https://wiki.openstack.org/wiki/TricircleDiscuzZone

- Tricircle big-tent application defense: https://review.openstack.org/#/c/338796 (contains a lot of comments and discussion that cover the Tricircle from many aspects)

Tricircle is designed to use the same tools for submission and review as other OpenStack projects. As such we follow the OpenStack development workflow. New contributors should follow the getting started steps before proceeding, as a Launchpad ID and signed contributor license are required to add new entries.

Video Resources

- Tricircle project update (Pike, OpenStack Sydney Summit), video: https://www.youtube.com/watch?v=baSu-eoUE1E

- Tricircle project update (Pike, OpenStack Sydney Summit), slides: https://docs.google.com/presentation/d/1JlGaMPDvnv42QV5isUl7Y1JHuTjBmAqR5fGzIcizlvg

- Move mission critical application to multi-site, what we learned: https://www.youtube.com/watch?v=l4Q2EoblDnY

- Shared Networks to Support VNF High Availability Across OpenStack Multi Region Deployment, slides: https://docs.google.com/presentation/d/1WBdra-ZaiB-K8_m3Pv76o_jhylEqJXTTxzEZ-cu8u2A/

- Shared Networks to Support VNF High Availability Across OpenStack Multi Region Deployment, video: https://www.youtube.com/watch?v=tbcc7-eZnkY

- OPNFV Summit vIMS Multisite Demo (YouTube): https://www.youtube.com/watch?v=zS0wwPHmDWs

- Video Conference High Availability across multiple OpenStack clouds (YouTube): https://www.youtube.com/watch?v=nK1nWnH45gI

History

During the big-tent application of Tricircle (https://review.openstack.org/#/c/338796/), it was proposed to move the API gateway part out of Tricircle and form two independent, decoupled projects:

Tricircle: dedicated to cross Neutron networking automation in multi-region OpenStack deployments; runs with or without Trio2o.

Trio2o: dedicated to providing an API gateway for those who need a single Nova/Cinder API endpoint in multi-region OpenStack deployments; runs with or without Tricircle.

Splitting blueprint: https://blueprints.launchpad.net/tricircle/+spec/make-tricircle-dedicated-for-networking-automation-across-neutron

The wiki for Tricircle before splitting is linked here: https://wiki.openstack.org/wiki/tricircle_before_splitting

Meeting minutes and logs

All meeting logs and minutes can be found at:

2017: http://eavesdrop.openstack.org/meetings/tricircle/2017/

2016: http://eavesdrop.openstack.org/meetings/tricircle/2016/

2015: http://eavesdrop.openstack.org/meetings/tricircle/2015/

To do list

Queens:

- Queens Etherpad: https://etherpad.openstack.org/p/tricircle-queens-ptg

Pike:

- Pike Etherpad: https://etherpad.openstack.org/p/tricircle-pike-design-topics

- Boston On-boarding Etherpad: https://etherpad.openstack.org/p/BOS-forum-tricircle-onboarding

Ocata:

- To do list in Ocata: https://etherpad.openstack.org/p/ocata-tricircle-work-session

Newton:

- To do list is in the etherpad: https://etherpad.openstack.org/p/TricircleToDo

- Splitting Tricircle into two projects: https://etherpad.openstack.org/p/TricircleSplitting

Team Members

Contact team members in IRC channel: #openstack-tricircle

Current active contributors

You can find active contributors on http://stackalytics.com:

Review: http://stackalytics.com/?release=all&module=tricircle-group&metric=marks

Lines of code: http://stackalytics.com/?release=all&module=tricircle-group&metric=loc

Email: http://stackalytics.com/?release=all&module=tricircle-group&metric=emails