Neutron/DVR


Executive summary

There are countless blogs and tutorials that explain what Neutron is and what it can do. This document does not intend to be a primer for OpenStack Networking. The sole objective of this document is to set the context behind why the Neutron community focused some of its efforts on improving certain routing capabilities offered by the open source framework, and what users can expect to see when they get their hands on its latest release, aka Juno.

Neutron Routing

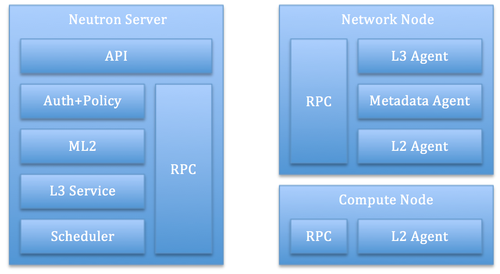

Neutron provides an API abstraction that allows tenants and cloud administrators to manage logical routers. These logical artifacts can be used to connect logical networks together and to the outside world (e.g. the Internet) providing both security and NAT capabilities. The reference implementation for this API uses the Linux IP stack and iptables to perform L3 forwarding and NAT. The diagram below shows the software architecture that underpins the Neutron reference platform for L3.

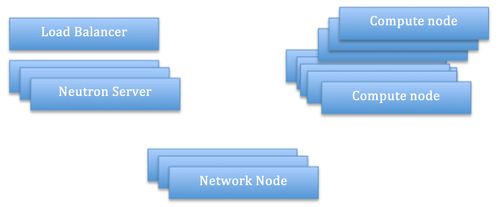

The architecture diagram above shows the internal components of a Neutron system that need to interact with each other in order to provide L3 services to compute instances connected via Neutron L2 networks. The DHCP component has been omitted on purpose. A typical deployment architecture is shown below. Multiple active instances of the Neutron Server can run behind a load balancer, while multiple network nodes interact with the Server instances via the RPC bus. The logical router load is distributed across the network nodes according to configurable scheduling policies applied by the scheduling component running on the Neutron Server.

With this deployment model the Neutron Server cluster represents a unit that provides High Availability and Fault Tolerance (HA and FT hereinafter): in the face of failures of a single server, management requests will be served by other members of the group; furthermore, if the group is unable to cope with the load, a new member can be added to increase scalability. However, when looking at the Network node, FT and HA options are limited. In the face of failures of a single network node, all the routers allocated on that node are lost; furthermore, a single node represents a choke point for L3 forwarding and NAT. So what are the options for FT/HA with this type of architecture? Essentially the only option available is the introduction of an active/passive replica per node (more details can be found in [3]). The management of the replica group is done out of band via clustering mechanisms (e.g. Pacemaker). This option is far from ideal because of the increased management complexity. Alternatively, if some downtime can be tolerated, out of band fail-over strategies can be adopted to allow the re-provision of routers belonging to a failed node to an active node of the network node group. The drawback of this solution is that, depending on the load of the node at the time it failed, recovery may take quite some time.
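The scheduling and out-of-band fail-over ideas above can be illustrated with a minimal sketch. It assumes a "fewest routers first" placement policy and hypothetical node and router names; it does not reproduce Neutron's scheduler or any clustering tool, and only shows why recovery work grows with the number of routers hosted on the failed node.

```python
def least_loaded(placements, nodes):
    """Pick the node hosting the fewest routers (ties broken by name)."""
    return min(nodes, key=lambda node: (len(placements[node]), node))


def schedule_router(router_id, placements, nodes):
    """Place a router on the least loaded node and return that node."""
    target = least_loaded(placements, nodes)
    placements[target].append(router_id)
    return target


def fail_over(failed_node, placements, nodes):
    """Re-provision every router of failed_node on the surviving nodes."""
    survivors = [node for node in nodes if node != failed_node]
    orphaned = placements.pop(failed_node, [])
    for router_id in orphaned:
        schedule_router(router_id, placements, survivors)
    return orphaned


# Hypothetical network nodes and routers, for illustration only.
nodes = ["network-node-1", "network-node-2", "network-node-3"]
placements = {node: [] for node in nodes}
for router in ["router-a", "router-b", "router-c", "router-d"]:
    schedule_router(router, placements, nodes)

# Every router on the failed node must be rebuilt elsewhere.
orphaned = fail_over("network-node-1", placements, nodes)
print("re-provisioned:", orphaned)
print("placements after fail-over:", placements)
```

Until `fail_over` has finished, every router in `orphaned` is unavailable, which is the downtime the out-of-band strategy has to tolerate.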

The road to Juno

As discussed in the previous section, the routing solution provided by the Neutron reference implementation suffers from single points of failure and scalability problems. So what can be done to address these issues? As far as redundancy and high availability are concerned, built-in mechanisms for router provisioning, like VRRP ([1]), can be exploited. In this case, the deployment architecture is fundamentally the same as the one described above; however, with VRRP each logical router has to be translated into a VRRP group assigned to multiple Network nodes. This achieves both fault tolerance and high availability. However, this solution still faces a couple of problems: a) the replica factor (typically 3) imposes higher system requirements (e.g. more hardware is needed); and b) DNAT-ted north/south traffic, and more importantly east/west traffic, still needs to flow through single choke points (as the number of compute nodes is typically an order of magnitude higher than that of network nodes). Alternatively, an architecture that can address the aforementioned problems is one where the L3 forwarding and NAT functions are distributed across the compute nodes themselves: this is what Distributed Virtual Routing ([2]) is about, and what is discussed in more detail in the next section.

Juno and Distributed Routing

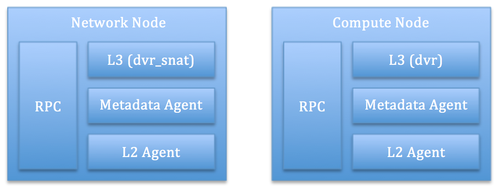

With DVR, L3 forwarding and NAT are distributed to the compute nodes, and this has quite an impact on the deployment architectures described so far. What does this mean exactly? It means that with DVR every compute node needs to act as a network node that provides both L3 forwarding and NAT. Does this mean that we can get rid of the pool of centralized network nodes? Not quite! The reason is that there may be scenarios where certain network functions do require traffic to flow through a network element. Also, distributing SNAT across the compute fabric would consume precious IPv4 ‘external’ address space. As far as network and compute nodes are concerned, the logical architecture with DVR therefore becomes like the one below:

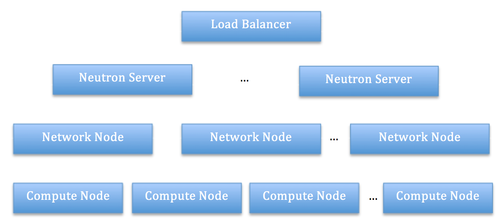

The two nodes look exactly alike, at least from a system requirements standpoint: they need to run the same software and have the same access to networking resources. However, the Network Node is in charge of North/South SNAT, whereas each Compute Node provides North/South DNAT as well as East/West L3 forwarding. This is why the two operating modes (i.e. dvr_snat vs dvr) differ in the diagram above. This logical architecture assumes that the DHCP function is taken care of by a network element that is separate from both the Network Node and the Compute Node (even though running the DHCP agent on the node(s) hosting the dvr_snat agent will work just as well).

Now, let us see what a deployment architecture looks like. It might not seem different from the one shown before, but it offers some interesting FT and HA characteristics: East/West and North/South DNAT traffic no longer flows through a central node, which provides better load distribution. Furthermore, there is no longer a single point of failure as far as networking goes: a failure of the networking functions localized to a compute host makes its compute instances unreachable, just as if the compute host itself had gone down and taken the instances with it. This is still not ideal; however, a compute host is already a unit of failure for OpenStack, so this architecture has not made things any worse.

North/South SNAT traffic, on the other hand, still needs to flow through a central Network Node. Multiple network nodes can be run, but a failure of a network node brings down all the routers associated with it, causing instances running on multiple compute nodes to lose external connectivity at once. A more robust architecture would be one where each Network Node is replicated via VRRP, thus eliminating the last remaining SPOF. Unfortunately, as far as Juno goes, we are not quite there yet.
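The division of responsibilities described above can be summarized in a small lookup function. This is a sketch for illustration only: the function name and the string labels are hypothetical, and it assumes that North/South DNAT applies to instances that have a floating IP while instances without one rely on the centralized SNAT path.

```python
def handling_node(direction, has_floating_ip=False):
    """Return which kind of node performs L3/NAT for a flow under DVR.

    direction is "east-west" or "north-south"; has_floating_ip only
    matters for north-south traffic.
    """
    if direction == "east-west":
        # Routing between tenant networks stays on the compute hosts.
        return "compute node (agent_mode = dvr)"
    if direction == "north-south":
        if has_floating_ip:
            # Assumption: DNAT corresponds to floating IP traffic,
            # handled locally on the instance's compute host.
            return "compute node (agent_mode = dvr)"
        # Everything else shares the centralized SNAT function.
        return "network node (agent_mode = dvr_snat)"
    raise ValueError(f"unknown direction: {direction!r}")


for flow in [("east-west", False), ("north-south", True), ("north-south", False)]:
    print(flow, "->", handling_node(*flow))
```

Only the last case depends on a network node, which is why a network node failure affects external connectivity for SNAT traffic but leaves East/West and DNAT traffic untouched.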

Beyond Juno

This document described the limitations of the Neutron architecture for routing functions up to the Juno release. The architecture relied on a single network element that carried out both L3 forwarding and NAT functions, thus representing a single point of failure for an entire cloud deployment. Even though the element could be scaled out, ad hoc strategies needed to be pursued to provide fault tolerance and high availability. A VRRP-based implementation (not discussed in depth in this document) has been available since the Juno release, and mitigates some of the limitations of the initial architecture. DVR is the strategy discussed in more detail, where the architecture addresses both scalability and high availability for some L3 functions. The architecture is not fully fault tolerant (for instance, North/South SNAT traffic is still prone to failures of a single node), but it does offer interesting failure modes. The two approaches can be combined to represent the silver bullet in routing for OpenStack Networking, and this is going to be the focus of development for the forthcoming Neutron releases. Having said that, when looking at each single alternative more closely, there are still issues to be solved, and as far as DVR goes, some of them are outlined below:

- Router conversion: allowing existing routers to be converted into distributed routers;

- Router migration: allowing the ‘snat’ part of a distributed router to be migrated from one network node to another;

- High-level services support: allowing services like VPN, Firewall and Load Balancer to work smoothly with distributed routers;

- External networks: allowing the use of multiple external networks with distributed routers;

- IPv6: allowing IPv6 networks to work with distributed routers;

- VLAN support: allowing distributed routers to work with a VLAN underlay;

- Distributed routing in other vendor plugins.

Configuration variables

- Neutron Server:

  - router_distributed = True ==> Controls the default router type: when set to True, routers are created as distributed by default.

- L3 Agent:

  - agent_mode = [dvr_snat | dvr | legacy] ==> Selects the operating mode of the L3 agent: dvr_snat on the Network Node, dvr on a Compute Node. legacy is the default, for backward compatibility. A dvr_snat L3 agent can handle centralized routers as well as distributed routers.

- L2 Agent:

  - enable_distributed_routing = True ==> Enables the L2 agent to operate in DVR mode. An L2 agent in DVR mode works seamlessly.
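As a rough illustration of how the options above might be laid out per node role, the sketch below renders INI fragments with Python's standard `configparser`. The option names and values come from the list above; the role labels, file names and section names are assumptions made for this example and should be checked against the actual deployment.

```python
import configparser
import io

# Option names come from the list above. File names and section names
# are assumptions for illustration; verify them for your deployment.
SETTINGS = {
    "neutron-server": ("neutron.conf", "DEFAULT", {"router_distributed": "True"}),
    "network-node-l3": ("l3_agent.ini", "DEFAULT", {"agent_mode": "dvr_snat"}),
    "compute-node-l3": ("l3_agent.ini", "DEFAULT", {"agent_mode": "dvr"}),
    "l2-agent": ("ml2_conf.ini", "agent", {"enable_distributed_routing": "True"}),
}


def render(section, options):
    """Render a single INI section as text."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep option names exactly as written
    parser[section] = options
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


for role, (filename, section, options) in SETTINGS.items():
    print(f"# {role}: {filename}")
    print(render(section, options))
```

Keeping the per-role settings in one table makes the only real difference between a Network Node and a Compute Node visible at a glance: the value of agent_mode.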

The API Extension

DVR routing is exposed through an API extension attribute on the router resource. This attribute is only visible to admin users. When a router is created (regardless of the user role), Neutron relies on a system-wide configuration option (router_distributed, as noted above), whose default currently creates 'centralized' routers, aka legacy routers. An administrator can override the default router type if they wish to.
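The behaviour described above can be sketched as a helper that builds the body of a router-create request. The attribute name `distributed` is not spelled out in the text above and is taken here as the name used by the extension; the helper itself, its parameters and the `PermissionError` are hypothetical stand-ins for checks that the server performs.

```python
import json


def build_router_request(name, is_admin, distributed=None):
    """Build the JSON body for a router-create request.

    distributed=None leaves the choice to the server-side default
    (router_distributed); True or False is an explicit admin override.
    """
    if distributed is not None and not is_admin:
        # Simplified stand-in for the server rejecting the attribute.
        raise PermissionError("only admin users may set 'distributed'")
    router = {"name": name}
    if distributed is not None:
        router["distributed"] = distributed
    return json.dumps({"router": router}, sort_keys=True)


# A tenant request carries no router type: the server default applies.
print(build_router_request("tenant-router", is_admin=False))
# An administrator explicitly asks for a distributed router.
print(build_router_request("admin-router", is_admin=True, distributed=True))
```

Omitting the attribute rather than sending a default value keeps the decision on the server, so changing router_distributed later affects new tenant routers without any client change.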