Manila/Networking/Gateway mediated

Architecture

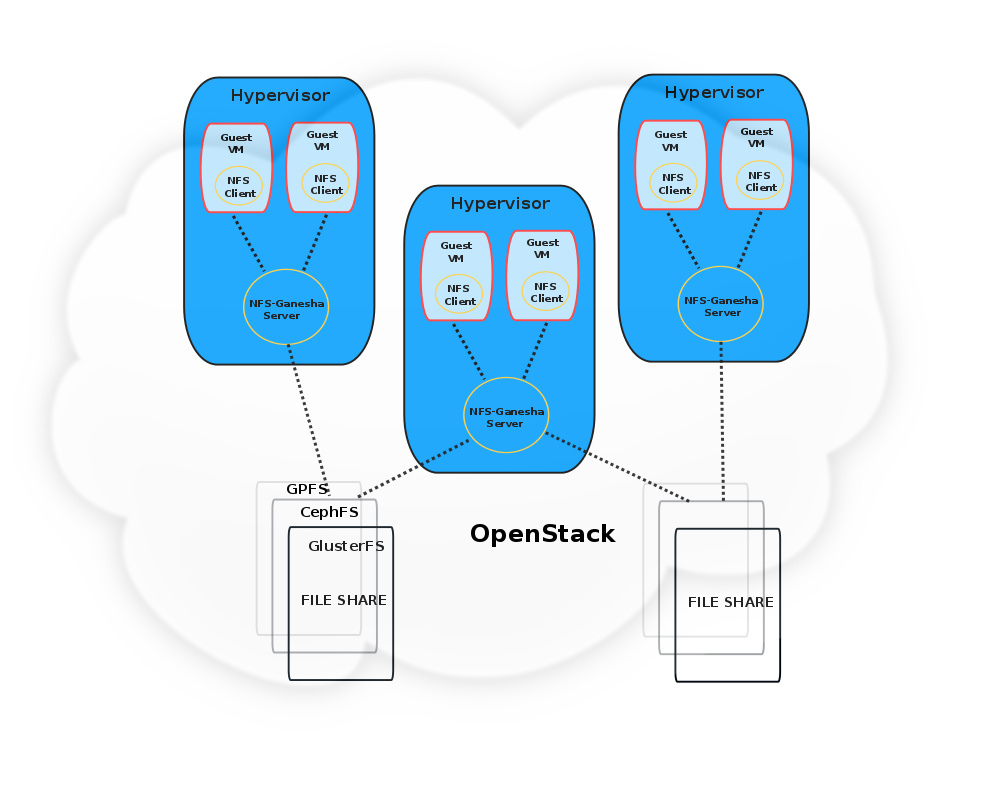

This document outlines an approach to achieving multi-tenancy with OpenStack Manila, together with the architecture and design considerations for enabling multi-tenancy in the cloud using this approach. The model is based on the gateway-mediated approach to Manila networking. Here, "gateway" means the storage gateway, i.e. the machine that is a member of a permanent network containing the storage backends, and that manages and mediates access from tenant machines to those backends.

In this approach, the decision to allow a tenant access to the intended Manila share is made in the gateway. Typically, each gateway serves multiple guest instances, each of which is considered a tenant. The proposed design runs a user-space NFS server on the gateway. Tenants use NFS clients to connect to this NFS server and consume any exported shares. The NFS server, in turn, is assumed to be able to talk to the different storage backends and to serve the requests made by its clients.

We propose to use nfs-ganesha [1][2] as the user-space NFS daemon in this architecture, for the following reasons. nfs-ganesha has a modular, plugin-based architecture and is easily extended to various filesystem backends. In nfs-ganesha parlance, a plugin is called a File System Abstraction Layer (FSAL). nfs-ganesha currently provides FSALs for a variety of backends, including CephFS, GlusterFS, Lustre, ZFS, VFS and GPFS. It also supports several NFS protocol versions (NFSv2, v3, v4, v4.1 and v4.2) as well as pNFS. This makes it well suited both to future use cases and to accommodating as many filesystem backends as possible, including new vendor-developed ones.
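The plugin idea can be illustrated with a small registry in which each backend registers itself under a name and an export later selects its backend by that name. This is a minimal Python sketch of the design pattern only, assuming a toy read-only interface; `Fsal`, `register_fsal`, `load_fsal`, `MemoryFsal` and `EmptyFsal` are hypothetical names and are not nfs-ganesha's actual (C) FSAL API.

```python
from typing import Callable, Dict, Protocol


class Fsal(Protocol):
    """Hypothetical stand-in for an FSAL: a backend-specific implementation
    of a small set of file operations (not nfs-ganesha's real API)."""

    name: str

    def read(self, path: str) -> bytes: ...


# Registry mapping an FSAL name (as an export would reference it) to a factory.
FSAL_REGISTRY: Dict[str, Callable[[], Fsal]] = {}


def register_fsal(name: str):
    """Decorator that adds a backend class to the registry under `name`."""
    def wrap(cls):
        FSAL_REGISTRY[name] = cls
        return cls
    return wrap


@register_fsal("MEMORY")
class MemoryFsal:
    """Toy backend that serves files from an in-memory dict."""

    name = "MEMORY"

    def __init__(self) -> None:
        self.files: Dict[str, bytes] = {"/share1/hello.txt": b"hello"}

    def read(self, path: str) -> bytes:
        return self.files[path]


@register_fsal("EMPTY")
class EmptyFsal:
    """Second toy backend that returns no data, to show name-based dispatch."""

    name = "EMPTY"

    def read(self, path: str) -> bytes:
        return b""


def load_fsal(name: str) -> Fsal:
    """Instantiate the backend registered as `name`; unknown names are an error."""
    try:
        return FSAL_REGISTRY[name]()
    except KeyError:
        raise ValueError(f"no FSAL registered under {name!r}") from None
```

For example, `load_fsal("MEMORY").read("/share1/hello.txt")` returns `b"hello"`. Supporting a new vendor backend in this model means registering one more class; nothing that dispatches by name has to change.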

When the nfs-ganesha server receives a mount request, it checks whether the client (tenant) is allowed to access the corresponding export, i.e. the share. Depending on the share's NFS ACL configuration, the mount succeeds or fails. If the mount succeeds, Ganesha routes the client's subsequent requests to the storage backend through the corresponding FSAL. Tenant share separation is therefore achieved through NFS ACLs and access permissions. In addition, nfs-ganesha supports Kerberos-based authentication, for the case where Manila shares need to be exported and consumed across nodes in an OpenStack compute cluster, with nfs-ganesha running on each gateway of the cluster in a multi-head configuration.
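The mount-time decision described above can be modelled as a lookup of the client's address against a per-share allow list, followed by routing to the backend named by the export. This is a minimal Python sketch under stated assumptions: access is keyed only on client IP networks, the first matching rule wins, and `ClientRule`, `Export`, `EXPORTS`, `check_mount` and `mount` are hypothetical names rather than nfs-ganesha configuration or code.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class ClientRule:
    """One client entry of a hypothetical per-share access list."""
    network: str       # e.g. "10.0.1.0/24", or "10.0.1.7/32" for a single host
    access_type: str   # "RO" or "RW"


@dataclass
class Export:
    """Hypothetical model of one exported Manila share on the gateway."""
    pseudo_path: str
    fsal_name: str                      # backend that serves this share
    clients: List[ClientRule] = field(default_factory=list)


EXPORTS: Dict[str, Export] = {
    "/share-tenant-a": Export("/share-tenant-a", "GLUSTER",
                              [ClientRule("10.0.1.0/24", "RW")]),
    "/share-tenant-b": Export("/share-tenant-b", "CEPH",
                              [ClientRule("10.0.2.0/24", "RW"),
                               ClientRule("10.0.1.7/32", "RO")]),
}


def check_mount(export: Export, client_ip: str) -> Optional[str]:
    """Return the access type granted to client_ip, or None to reject.

    Assumption of this sketch: rules are checked in order, first match wins.
    """
    addr = ipaddress.ip_address(client_ip)
    for rule in export.clients:
        if addr in ipaddress.ip_network(rule.network, strict=False):
            return rule.access_type
    return None  # no rule matched: this tenant may not mount the share


def mount(pseudo_path: str, client_ip: str) -> Tuple[str, str]:
    """Accept or reject a mount; on success return (backend name, access type)."""
    export = EXPORTS.get(pseudo_path)
    if export is None:
        raise FileNotFoundError(pseudo_path)
    access = check_mount(export, client_ip)
    if access is None:
        raise PermissionError(f"{client_ip} may not mount {pseudo_path}")
    # Later requests on this mount would be forwarded to this backend.
    return export.fsal_name, access
```

With these sample rules, a tenant-A instance at 10.0.1.20 gets `("GLUSTER", "RW")` for `/share-tenant-a` but is refused `/share-tenant-b`, while the single host 10.0.1.7 is granted read-only access to the tenant-B share. The backends themselves never see the decision, which is the point of enforcing security in the gateway.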

Advantages:

- Works with all guest operating systems that have an NFS client built in. NFS is well standardized and widely supported.

- Does not require installing any plugin in the VM/guest or modifying any OpenStack components.

- Works across the majority of prevalent filesystem backends and/or storage arrays without modification.

- This approach most closely mirrors how guests interact with storage controllers in Cinder. The storage controller only needs to know about the compute nodes, and the gateway deals with presenting the storage to the guest.

- Security is enforced by the gateway. Backends need not support any security to protect tenant data from other tenants (or even enforce protection between instances of one tenant).

- Tenant separation is further enforced through share permissions based on NFS ACLs and authentication, which are easy to enforce.

- No dependence on, or interaction with, Neutron.

- The NFS server on the gateway performs caching, so not every request from the instances has to be served by the storage layer. As a result, data access is sometimes faster and the storage layer is less burdened.

- Because nfs-ganesha is a user-space server, its cache-inode layer can grow very large, limited mainly by the gateway's hardware. This improves scalability, allowing a larger number of compute instances and share operations/requests to be supported.

- Extensible to other multi-tenant use cases, such as share reservations and QoS requirements.
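The caching benefit can be illustrated with a bounded least-recently-used read cache sitting in front of a backend, counting how many reads actually reach the storage layer. This is a toy Python model under stated assumptions: `GatewayReadCache` and its `capacity` parameter are hypothetical, and the LRU policy is chosen for illustration; it is not a description of nfs-ganesha's cache-inode layer.

```python
from collections import OrderedDict
from typing import Callable


class GatewayReadCache:
    """Hypothetical LRU read cache on the gateway in front of a storage backend.

    `capacity` stands in for the memory the gateway hardware can spare; a
    larger value means fewer requests reach the backend.
    """

    def __init__(self, backend_read: Callable[[str], bytes], capacity: int) -> None:
        self.backend_read = backend_read
        self.capacity = capacity
        self.entries: "OrderedDict[str, bytes]" = OrderedDict()
        self.backend_calls = 0  # how many reads the storage layer had to serve

    def read(self, path: str) -> bytes:
        if path in self.entries:
            self.entries.move_to_end(path)  # hit: mark as most recently used
            return self.entries[path]
        self.backend_calls += 1             # miss: go to the storage layer
        data = self.backend_read(path)
        self.entries[path] = data
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict the least recently used entry
        return data
```

For example, with `capacity=2` and the read sequence `/a, /b, /a, /a, /c, /b`, only 4 of the 6 reads reach the backend: the repeated reads of `/a` are served from the gateway, while `/b` has been evicted by the time it is requested again.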

Disadvantages:

- nfs-ganesha consumes resources on the gateway where it runs. Its resource consumption has been observed to be moderate across general workloads, so we do not currently expect a major impact on the overall architecture. Further tests are needed to quantify any such impact.

- High availability (HA) for tenant share access via nfs-ganesha could require additional configuration and state maintenance. However, such HA support is largely available as separate Ganesha solutions for the different filesystem backends.

References:

[1] https://github.com/nfs-ganesha/nfs-ganesha/wiki

[2] https://forge.gluster.org/nfs-ganesha-and-glusterfs-integration/pages/Home