WatcherArchitecture

This page is intended to describe Watcher components and how they integrate with one another. This is currently a work in progress.


Overview of the Watcher Architecture

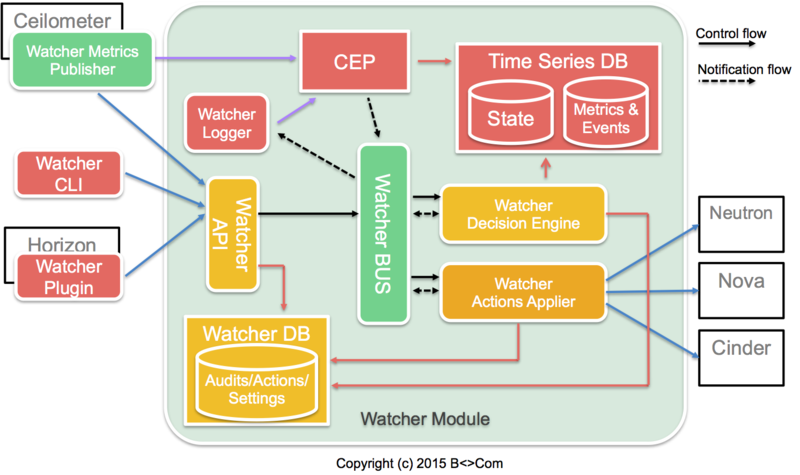

The following diagram shows the global architecture of the Watcher system:

Description of the Watcher technical components

This section lists the technical components of the Watcher system, in alphabetical order.

Complex Event Processing (CEP)

CEP solutions have been developed to address the requirements of applications that analyze and react to events in real time.

A CEP engine can analyze, aggregate, correlate and filter events, detect "short-lived" opportunities or optimizations, and respond to them as quickly as possible by triggering actions.

For performance reasons, several CEP Engine instances may run simultaneously, each processing a certain type of metric/event. A classical stateless load-balancing mechanism (with every CEP instance hosting the same rules) cannot be used, because some metrics/events are linked in time and the CEP must be able to build coherent streams from them. Therefore, a given type of event/metric must always be sent to the same CEP instance. This is why several metrics collector endpoints will be provided in the Watcher module.
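The routing constraint above can be sketched as a deterministic mapping from event type to CEP instance. This is a minimal sketch under assumptions: the endpoint URLs and the function name `route_event_type` are hypothetical, and hashing is only one possible way to assign types to instances. It uses SHA-256 rather than Python's built-in `hash()`, which is randomized per process for strings, so every sender routes a given type to the same instance.

```python
import hashlib

# Hypothetical CEP collector endpoints (not defined by Watcher).
CEP_ENDPOINTS = [
    "tcp://cep-0.example:5555",
    "tcp://cep-1.example:5555",
    "tcp://cep-2.example:5555",
]


def route_event_type(event_type: str, endpoints: list[str]) -> str:
    """Return the CEP endpoint responsible for one event/metric type.

    For a fixed endpoint list, the same type always maps to the same endpoint,
    so a single CEP instance sees the whole stream of correlated events.
    """
    digest = hashlib.sha256(event_type.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(endpoints)
    return endpoints[index]


if __name__ == "__main__":
    for etype in ("compute.node.cpu", "instance.network.rx"):
        print(etype, "->", route_event_type(etype, CEP_ENDPOINTS))
```

With a plain modulo, adding or removing an endpoint remaps most types; a consistent-hashing ring would limit that churn if the set of CEP instances changes often.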

In the Watcher system, the CEP will trigger two types of actions:

- write relevant events/metrics into the Time Series database. Only the metrics relevant to Watcher must be stored in the time series database. The CEP can also consolidate/aggregate metrics/events and generate the higher-level metrics/events needed by the Watcher Decision Engine.

- send relevant events to the Watcher Decision Engine whenever an event may influence the result of the current optimization strategy, since an OpenStack cluster is not a static system. For instance, it may be important to notify the Decision Engine that a new compute node has been added to the cluster or that a new instance has been requested by a customer project.
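The two kinds of CEP actions can be illustrated with a toy rule set. This is a sketch, not Riemann's API: the event type names, the `CepRules` class and the fixed-size averaging window are all hypothetical. Topology changes are forwarded to the Decision Engine, while raw CPU samples are consolidated into a higher-level average before being written to the time series database.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical event types that change the cluster topology.
TOPOLOGY_EVENTS = {"compute.node.added", "instance.create.requested"}


class CepRules:
    """Toy stand-in for CEP rules (illustration only)."""

    def __init__(self, tsdb_write, notify_decision_engine, window_size=3):
        self.tsdb_write = tsdb_write              # callable storing one point in the TSDB
        self.notify = notify_decision_engine      # callable forwarding one event
        self.window_size = window_size
        self._windows = defaultdict(list)         # host -> pending cpu.util samples

    def on_event(self, event):
        if event["type"] in TOPOLOGY_EVENTS:
            # The cluster changed, so the Decision Engine must be told.
            self.notify(event)
        elif event["type"] == "cpu.util":
            # Consolidate raw samples into a higher-level metric before storing it.
            window = self._windows[event["host"]]
            window.append(event["value"])
            if len(window) == self.window_size:
                self.tsdb_write({"type": "cpu.util.avg", "host": event["host"],
                                 "value": mean(window)})
                window.clear()
        # Any other event type is treated as irrelevant to Watcher and dropped.
```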

The CEP is also in charge of verifying the quality of the data which will be used by the Decision Engine.
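What "verifying the quality of the data" involves is not specified here; the sketch below assumes a few plausible checks on a single metric sample: required fields, numeric non-NaN value, non-negative value (true for metrics such as utilization, not for all metrics), and freshness. The field names and the `MAX_AGE_SECONDS` threshold are hypothetical.

```python
import math
import time

MAX_AGE_SECONDS = 300  # hypothetical staleness threshold


def check_sample(sample, now=None):
    """Return the list of data-quality problems in one metric sample (empty if OK)."""
    now = time.time() if now is None else now
    problems = [f"missing field: {f}"
                for f in ("name", "resource_id", "value", "timestamp")
                if f not in sample]
    if problems:
        return problems
    value = sample["value"]
    if not isinstance(value, (int, float)) or math.isnan(value):
        problems.append("value is not a number")
    elif value < 0:
        problems.append("negative value")  # assumes a non-negative metric
    if now - sample["timestamp"] > MAX_AGE_SECONDS:
        problems.append("stale sample")
    if sample["timestamp"] > now:
        problems.append("timestamp in the future")
    return problems
```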

Time Series Databases

These databases store all the time-stamped information regarding cluster and resource history (state, metrics, events, ...) and regarding Watcher's own behavior (logs, state changes, events and metrics). This information is used by:

- the Decision Engine, to know at any time the current cluster state and its evolution over a given time frame

- the CEP, to compute new events/metrics or handle actions
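The "evolution over a given time frame" query can be illustrated with a minimal in-memory stand-in for a time series database. This does not reflect InfluxDB's API; the class and method names are hypothetical. Points are kept sorted by timestamp per series so that a time-range query is two binary searches.

```python
import bisect
from collections import defaultdict


class InMemorySeriesStore:
    """Minimal stand-in for a time series database (illustration only)."""

    def __init__(self):
        # series key -> parallel lists of timestamps and values, sorted by timestamp
        self._ts = defaultdict(list)
        self._values = defaultdict(list)

    def write(self, series, timestamp, value):
        # Keep timestamps sorted so range queries can use bisection.
        i = bisect.bisect_right(self._ts[series], timestamp)
        self._ts[series].insert(i, timestamp)
        self._values[series].insert(i, value)

    def query(self, series, start, end):
        """Return (timestamp, value) pairs with start <= timestamp < end."""
        ts = self._ts.get(series, [])
        values = self._values.get(series, [])
        lo = bisect.bisect_left(ts, start)
        hi = bisect.bisect_left(ts, end)
        return list(zip(ts[lo:hi], values[lo:hi]))
```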

Watcher Actions Applier

This component is in charge of executing the plan of actions built by the Decision Engine.

If the action plan is described as a BPMN 2.0 workflow, this component could be a BPMN workflow engine.

For each action of the workflow, this component may either call the component responsible for this kind of action directly (e.g., the Nova API for an instance migration) or reach it indirectly via a publish/subscribe pattern on the message bus (asynchronous workers).

It continuously reports the progress of each ongoing Action Plan (and of its atomic Actions) by sending status messages on the bus. The CEP may use those events to trigger new actions (or even a new audit, if the automatic mode is enabled).
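The execution loop described above can be sketched as follows, assuming a sequential plan and direct (synchronous) calls. The handler functions, action type names and status values are hypothetical; a real handler would call e.g. the Nova API or post a task on the bus. `publish` stands for sending a status message on the bus.

```python
# Hypothetical handlers; real ones would call an OpenStack API or post on the bus.
def migrate_instance(params):
    return f"migrated {params['instance']} to {params['dest']}"


def change_power_state(params):
    return f"set {params['node']} power to {params['state']}"


HANDLERS = {
    "migrate": migrate_instance,
    "change_power_state": change_power_state,
}


def apply_plan(plan_id, actions, publish):
    """Execute actions in order, publishing a status message around each one."""
    publish({"plan": plan_id, "state": "ONGOING"})
    for index, action in enumerate(actions):
        publish({"plan": plan_id, "action": index, "state": "ONGOING"})
        try:
            HANDLERS[action["type"]](action["params"])
        except Exception as exc:  # unknown action type or handler failure
            publish({"plan": plan_id, "action": index, "state": "FAILED", "error": str(exc)})
            publish({"plan": plan_id, "state": "FAILED"})
            return False
        publish({"plan": plan_id, "action": index, "state": "SUCCEEDED"})
    publish({"plan": plan_id, "state": "SUCCEEDED"})
    return True
```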

This component is also connected to the Watcher Database in order to:

- get the description of the action plan to execute

- persist its current state so that, if it is restarted, it can restore the context of each Action Plan and resume each ongoing workflow from its last known safe point
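The restart behavior above can be sketched with a checkpoint that records the last completed action of each plan. In this sketch, under stated assumptions, a plain dictionary stands in for the Watcher Database and the safe point is simply "after the last completed action"; `CheckpointStore` and `run_from_checkpoint` are hypothetical names.

```python
class CheckpointStore:
    """Stand-in for the Watcher Database: last completed action index per plan."""

    def __init__(self):
        self._last_done = {}

    def save(self, plan_id, index):
        self._last_done[plan_id] = index

    def load(self, plan_id):
        # -1 means no action of this plan has completed yet.
        return self._last_done.get(plan_id, -1)


def run_from_checkpoint(plan_id, actions, store, execute):
    """Execute the actions that follow the last persisted safe point.

    Progress is persisted after each action, so a restarted applier calling this
    again with the same store skips the actions that already completed.
    """
    for index in range(store.load(plan_id) + 1, len(actions)):
        execute(actions[index])
        store.save(plan_id, index)
```

An action interrupted mid-execution is run again after a restart, so with this design the technical actions should be idempotent or able to detect that they already took effect.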

Watcher API

This component implements the REST API provided by the Watcher system to the external world. It enables a cluster administrator to control and monitor the Watcher system via any interaction mechanism connected to this API:

- CLI

- Horizon plugin

- Python SDK

Watcher Database

This database stores all the Watcher business objects that can be requested through the Watcher API:

- Audit templates

- Audits

- Action plans

- Actions history

- Watcher settings:

  - metrics/events collector endpoints for each type of metric

  - manual/automatic mode

- Business Objects states

It may be any relational or key-value database.

Business objects are created, read, updated and deleted in the Watcher database through a common Python package that provides a high-level Service API.

The advantage of this approach is that these operations are executed synchronously, so each Watcher component can make sure the data has been written to the Watcher database before executing other actions. The drawback is that the Watcher database must always be up and running, so that a read/write operation can be executed as soon as a Watcher component needs it.
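A synchronous Service API of this kind can be sketched over SQLite, used here only as a convenient stand-in for the Watcher Database. The `AuditService` class, its table schema and the state values are hypothetical. Each write runs inside `with self._conn:`, which commits on success, so the data is persisted by the time the method returns and the caller can safely proceed.

```python
import sqlite3


class AuditService:
    """Hypothetical high-level Service API for Audit objects (SQLite as storage)."""

    def __init__(self, path=":memory:"):
        self._conn = sqlite3.connect(path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS audits "
            "(id INTEGER PRIMARY KEY, goal TEXT, state TEXT)"
        )

    def create(self, goal):
        with self._conn:  # commits on success, rolls back on error
            cur = self._conn.execute(
                "INSERT INTO audits (goal, state) VALUES (?, ?)", (goal, "PENDING")
            )
        return cur.lastrowid

    def get(self, audit_id):
        row = self._conn.execute(
            "SELECT id, goal, state FROM audits WHERE id = ?", (audit_id,)
        ).fetchone()
        return None if row is None else {"id": row[0], "goal": row[1], "state": row[2]}

    def update_state(self, audit_id, state):
        with self._conn:
            self._conn.execute("UPDATE audits SET state = ? WHERE id = ?", (state, audit_id))

    def delete(self, audit_id):
        with self._conn:
            self._conn.execute("DELETE FROM audits WHERE id = ?", (audit_id,))
```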

Watcher Decision Engine

This component is responsible for computing a list of potential optimization actions in order to fulfill the goals of an audit.

It uses the following input data:

- current, previous and predicted state of the cluster (hosts, instances, network, ...)

- evolution of metrics within a time frame

It first selects the most appropriate optimization strategy depending on several factors:

- the optimization goals that must be fulfilled (server consolidation, energy consumption, license optimization, ...)

- the deadline that was provided by the OpenStack cluster admin for delivering an action plan

- the "aggressiveness" level regarding potential optimization actions:

  - Is it allowed to perform many instance migrations?

  - Is it allowed to consume a lot of bandwidth on the admin network?

  - Is it allowed to violate initial placement constraints such as affinity/anti-affinity, region, ...?
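The selection criteria above can be sketched as a filter over a catalog of strategies. Everything here is an assumption for illustration: the strategy names, the attributes describing each strategy, and the tie-breaking rule (prefer the most aggressive strategy the administrator allows). The bandwidth criterion is omitted for brevity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    name: str
    goal: str
    expected_runtime_s: float   # estimated time to deliver an action plan
    max_migrations: int
    may_break_affinity: bool


@dataclass(frozen=True)
class AuditRequest:
    goal: str
    deadline_s: float
    allowed_migrations: int
    allow_affinity_violation: bool


def select_strategy(request, strategies):
    """Return a strategy that fits the goal, deadline and aggressiveness limits, or None."""
    candidates = [
        s for s in strategies
        if s.goal == request.goal
        and s.expected_runtime_s <= request.deadline_s
        and s.max_migrations <= request.allowed_migrations
        and (request.allow_affinity_violation or not s.may_break_affinity)
    ]
    # Hypothetical ranking: prefer the most aggressive strategy that is allowed.
    return max(candidates, key=lambda s: s.max_migrations, default=None)
```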

The strategy is then executed and generates a list of Meta-Actions in order to fulfill the goals of the Audit.

A Meta-Action is a generic optimization task which is independent of the target cluster implementation (OpenStack, ...). For example, an instance migration is a Meta-Action which corresponds, in the OpenStack context, to a set of technical actions on the Nova, Cinder and Neutron components.

Using Meta-Actions instead of technical actions brings two advantages in Watcher:

- a loose coupling between the Watcher Decision Engine and the Watcher Actions Applier

- a simplification of the optimization algorithms which don't need to know the underlying technical cluster implementation
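The separation between Meta-Actions and technical actions can be sketched as a cluster-specific translation table. The `MetaAction` type, the table and especially the `(service, operation)` step names are hypothetical and do not correspond to real OpenStack API calls; the point is that strategies only produce `MetaAction` objects, and supporting another cluster type would mean supplying another table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetaAction:
    """Generic optimization task, independent of the target cluster implementation."""
    kind: str    # e.g. "migrate_instance"
    target: str  # e.g. an instance identifier


# Hypothetical OpenStack translation table: each Meta-Action kind expands into
# (service, operation) steps. Operation names are illustrative only.
OPENSTACK_TRANSLATION = {
    "migrate_instance": [
        ("neutron", "prepare_port"),
        ("cinder", "prepare_volumes"),
        ("nova", "migrate"),
    ],
}


def translate(meta_actions, table):
    """Expand Meta-Actions into (service, operation, target) technical actions."""
    return [
        (service, operation, meta.target)
        for meta in meta_actions
        for service, operation in table[meta.kind]
    ]
```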

Moreover, the Meta-Actions computed by the optimization strategy are not necessarily ordered in time (this depends on the selected Strategy). Therefore, the Actions Planner module of the Decision Engine reorganizes the list of Meta-Actions into an ordered sequence of technical actions (migrations, ...) such that all security, dependency and performance requirements are met. An ordered sequence of technical actions is called an "Action Plan".

In other words, the Actions Planner module translates Meta-Actions into technical actions on the OpenStack modules (Nova, Cinder, ...) and builds an appropriate workflow (in any suitable scheduling format, such as BPMN 2.0) that defines how these technical actions are scheduled over time and, for each action, what its prerequisite conditions are.

For example, a very simple Action Plan would allow only one instance migration at a time, to make sure migrations do not consume too much bandwidth on the admin network.

Another Action Plan could migrate all instances off a compute node before changing its power supply ACPI configuration.
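Both example plans can be expressed as ordering constraints and resolved with a topological sort, here Python's `graphlib.TopologicalSorter` (Python 3.9+). This is a sketch under assumptions: the action identifiers such as `"migrate:vm1"` are hypothetical, the result is a single sequential order rather than a full workflow, and migrations are chained in their input order so that only one runs at a time.

```python
from graphlib import TopologicalSorter


def build_action_plan(actions, prerequisites):
    """Order technical actions so each one runs after its prerequisites.

    `prerequisites` maps an action id to the ids that must complete first.
    Migrations are additionally chained so that only one runs at a time.
    """
    graph = {a: set(prerequisites.get(a, ())) for a in actions}
    previous_migration = None
    for a in actions:
        if a.startswith("migrate:"):
            if previous_migration is not None:
                graph[a].add(previous_migration)
            previous_migration = a
    # Raises graphlib.CycleError if the constraints are contradictory.
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    print(build_action_plan(
        ["set_acpi:node1", "migrate:vm1", "migrate:vm2"],
        {"set_acpi:node1": {"migrate:vm1", "migrate:vm2"}},
    ))
```

A workflow engine (e.g., one executing BPMN 2.0) could instead run independent actions in parallel; the sequential order here only illustrates how the prerequisites constrain scheduling.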

The Decision Engine saves the generated Action Plan(s) in the Watcher Database, from which the Watcher Actions Applier later loads them.

Like every Watcher component, the Decision Engine publishes its current status (learning phase, current status of each Audit, ...) on the message/notification bus.

Watcher Logger

This component is responsible for transmitting events from the Watcher Message/Notification Bus to the CEP.

Watcher Message/Notification Bus

The message/notification bus handles asynchronous communications between the different Watcher components.

Watcher uses a message bus, which may be either a dedicated internal bus (RabbitMQ, NanoMsg, ...) or the same bus as the one used by the OpenStack core modules (RabbitMQ). On this bus, each Watcher component uses two topics:

- one topic named "Control" for triggering tasks on the component

- one topic named "Notification" for notifying the current status/events of the component

The CEP will be used for integration purposes between the different components.

It will trigger tasks on the "Control" topic when one or several notifications are received on the "Notification" topic.

It will also publish new events on the "Notification" topic, computed from several received notifications.
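The Control/Notification pattern can be sketched with a toy synchronous, in-process bus standing in for RabbitMQ. The topic naming scheme (`"<component>.control"`, `"<component>.notification"`), the component names and the message contents are hypothetical. The CEP rule shown reacts to a Decision Engine notification by triggering a task on the Applier's Control topic, as could happen in automatic mode.

```python
from collections import defaultdict


class InProcessBus:
    """Toy synchronous stand-in for the message bus (illustration only)."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        for callback in list(self._subscribers[topic]):
            callback(message)


def control_topic(component):
    return f"{component}.control"        # hypothetical naming scheme


def notification_topic(component):
    return f"{component}.notification"   # hypothetical naming scheme


def wire_cep(bus):
    """Example CEP rule: when an action plan is created, ask the Applier to run it."""
    def on_decision_engine_event(message):
        if message.get("event") == "action_plan.created":
            bus.publish(control_topic("applier"), {"task": "apply", "plan": message["plan"]})

    bus.subscribe(notification_topic("decision_engine"), on_decision_engine_event)
```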

Watcher Metrics Publisher

The metrics publisher is a component which collects and computes metrics or events and publishes them to an endpoint of the CEP.

The metrics publisher can be a Ceilometer publisher, since Ceilometer already provides many metrics related to compute nodes, instances, storage and network, as listed here: http://docs.openstack.org/admin-guide-cloud/content/section_telemetry-measurements.html
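A metrics publisher that both computes and publishes a metric can be sketched as below. Under stated assumptions: CPU utilization is derived from two cumulative CPU-time samples in nanoseconds, and events are sent as JSON over UDP to a CEP endpoint. The JSON/UDP transport and the function names are hypothetical and do not implement the wire protocol of Riemann or of Ceilometer publishers.

```python
import json
import socket


def cpu_util_percent(prev_cpu_ns, cur_cpu_ns, elapsed_s, vcpus):
    """Derive CPU utilization (%) from two cumulative CPU-time samples in nanoseconds."""
    return 100.0 * (cur_cpu_ns - prev_cpu_ns) / (elapsed_s * 1e9 * vcpus)


def publish(event, host, port):
    """Send one event as JSON over UDP to a CEP endpoint (transport is an assumption)."""
    payload = json.dumps(event).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```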

Technologies

Watcher uses a number of underlying technologies:

- Riemann (http://riemann.io/): Riemann filters, combines, and acts on flows of events to understand your systems.

- InfluxDB (http://influxdb.com/): An open-source distributed time series database with no external dependencies.

License

Copyright (c) 2015 B<>Com. Watcher is licensed under the Apache License v2.