TripleO/TuskarJunoPlanning


Revision as of 04:52, 25 March 2014


Overview

This planning document grew out of discussions at the TripleO mid-cycle Icehouse meetup in Sunnyvale (TripleO/TuskarJunoInitialDiscussion). The ideas raised there were then fleshed out and detailed individually, with much input coming from conversations with other OpenStack projects.

Our principal concerns during the Juno cycle are:

- integrating further with other OpenStack services, using their capabilities to enhance our TripleO management experience

- ensuring that Tuskar does not try to implement functionality that is better located in other projects

This document details our high-level goals for Juno at multiple levels. For each goal we provide:

- a description of the goal

- an explanation of the various OpenStack interactions needed

- a list of project requirements and/or blueprints needed to achieve those interactions

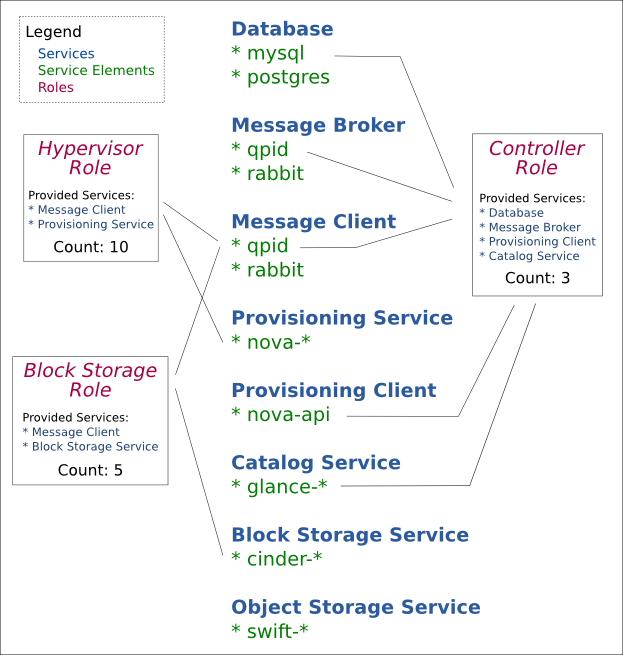

Cloud Service Representation

A cloud service represents a cloud function: a provisioning service, storage service, message broker, database server, etc. A cloud service is fulfilled by a cloud service element. For example, a user can fulfill the message broker cloud service by choosing either the qpid-server element or the rabbitmq-server element.

With the cloud service concept in play, overcloud roles are updated as follows: instead of being associated with images, they are associated with a list of cloud service elements. This provides greater flexibility for the Tuskar user when designing the scalable components of a cloud. For example, instead of being limited to a Controller role, the user can separate out the network components of that role into a Network role and scale it individually.

For Juno, Tuskar will provide role defaults or suggestions corresponding to current default roles, as well as an "All-in-One" role.
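The following is a minimal sketch of the role model described above. It assumes that a role simply holds the names of the cloud service elements chosen for it. The `CloudService` and `Role` classes, the `fulfil` method and the `neutron-network` element name are hypothetical and illustrative only; they are not Tuskar's actual data model.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CloudService:
    """A cloud function (e.g. a message broker) and the elements able to fulfil it."""
    name: str
    elements: tuple[str, ...]


@dataclass
class Role:
    """An overcloud role described by a list of cloud service elements, not an image."""
    name: str
    elements: list[str] = field(default_factory=list)

    def fulfil(self, service: CloudService, element: str) -> None:
        # Only an element registered for the service may fulfil it.
        if element not in service.elements:
            raise ValueError(f"{element!r} cannot fulfil {service.name!r}")
        self.elements.append(element)


# qpid-server and rabbitmq-server come from the text; "neutron-network" is a
# hypothetical element name used only for illustration.
MESSAGE_BROKER = CloudService("message broker", ("qpid-server", "rabbitmq-server"))
NETWORK = CloudService("network", ("neutron-network",))

# Network components are split out of the Controller role into their own
# role so that they can be scaled independently.
controller = Role("Controller")
controller.fulfil(MESSAGE_BROKER, "rabbitmq-server")
network = Role("Network")
network.fulfil(NETWORK, "neutron-network")
```

In this sketch, separating the network components from the Controller role only means assigning their elements to a different role, which can then be scaled on its own.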

Requirements

Heat

- Diskimage builder resource (a question was raised about the ability to spawn a VM to host it in the seed/undercloud); also an OS::GlanceImageUploader resource (may not be required for Juno)

Tuskar-UI

- update deployment workflow to accommodate cloud services

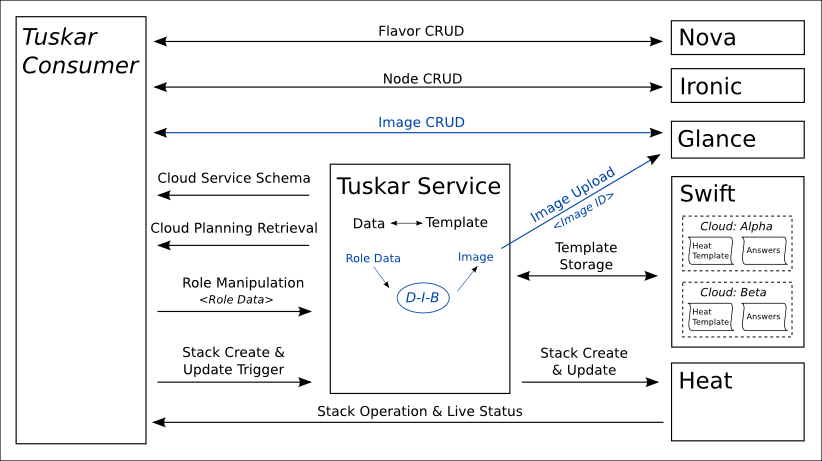

Overcloud Planning and Deployment

In Icehouse, the planning stage of overcloud deployment is represented by data stored in Tuskar database tables. For Juno, we would like to remove the database from Tuskar. Instead, the planning stage of a deployment will be represented by the full Heat template that would be used to deploy it. Since Heat does not intend to be a template store, this template will be stored in Swift instead. When the overcloud is ready to be deployed, the Tuskar service will pull the template out of Swift.
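The planning flow can be pictured as follows. This is a sketch under stated assumptions:

- An in-memory class stands in for Swift. Its `put_object`/`get_object` method names are loosely modelled on python-swiftclient's `Connection`, but it returns only the body.
- The container name `overcloud-plans` is hypothetical.
- The template is serialized as JSON, which is also valid YAML, so Heat can parse it.

```python
import json


class InMemoryObjectStore:
    """Stand-in for Swift: maps (container, object name) to bytes.

    Method names loosely follow python-swiftclient's Connection, but
    get_object here returns only the body, not (headers, body).
    """

    def __init__(self) -> None:
        self._objects: dict[tuple[str, str], bytes] = {}

    def put_object(self, container: str, name: str, contents: bytes) -> None:
        self._objects[(container, name)] = contents

    def get_object(self, container: str, name: str) -> bytes:
        return self._objects[(container, name)]


PLAN_CONTAINER = "overcloud-plans"  # hypothetical container name


def save_plan(store: InMemoryObjectStore, plan_name: str, template: dict) -> None:
    # The plan *is* the full Heat template; no Tuskar database tables involved.
    store.put_object(PLAN_CONTAINER, plan_name, json.dumps(template).encode("utf-8"))


def load_plan_for_deployment(store: InMemoryObjectStore, plan_name: str) -> dict:
    # When the overcloud is ready to deploy, the template is pulled back out
    # of the store; it would then be handed to Heat to create the stack.
    return json.loads(store.get_object(PLAN_CONTAINER, plan_name))


store = InMemoryObjectStore()
template = {"heat_template_version": "2013-05-23", "resources": {}}
save_plan(store, "overcloud", template)
```

Because the stored object is the complete template, this sketch keeps no separate planning state that could drift from what is actually deployed.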

Requirements

Heat

- Stack update action (https://blueprints.launchpad.net/horizon/+spec/heat-stack-update)

- Environment file - a second file which specifies extra environment details that the stack should be launched with (https://blueprints.launchpad.net/horizon/+spec/heat-environment-file)

- Allow stacks to be updated without forcing the user to re-provide all parameters (https://bugs.launchpad.net/heat/+bug/1224828)

- nested resource templates (may not be required for Juno)

- Stack preview (preview what would happen via stack-create) (https://blueprints.launchpad.net/heat/+spec/preview-stack) (may not be required for Juno)

- Stack check (sync state of stack with the real state of underlying resources, e.g. persist out-of-band failures in stack resource states) (https://blueprints.launchpad.net/heat/+spec/stack-check) (may not be required for Juno)

- Stack converge - "fixes" stack and returns to known state (https://blueprints.launchpad.net/heat/+spec/stack-convergence) (may not be required for Juno)
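Allowing stack updates without re-providing every parameter amounts to merging what the user supplies with what the stack already holds. Below is a minimal sketch that assumes parameters are plain string maps. The function name, the `reset` argument and the parameter names in the example are hypothetical; they do not reflect Heat's API.

```python
from collections.abc import Iterable, Mapping


def parameters_for_update(
    stored: Mapping[str, str],
    supplied: Mapping[str, str],
    reset: Iterable[str] = (),
) -> dict[str, str]:
    """Build the parameter set for a stack update.

    Values the user supplies override the stored ones; parameters listed in
    `reset` are dropped so that, in this sketch, the template default would
    apply again; everything else keeps the value the stack already has.
    """
    merged = dict(stored)
    for name in reset:
        merged.pop(name, None)
    merged.update(supplied)
    return merged


# Hypothetical parameter names: the user only scales compute and does not
# have to re-enter the admin password.
stored = {"ControllerCount": "1", "ComputeCount": "3", "AdminPassword": "s3cret"}
updated = parameters_for_update(stored, {"ComputeCount": "5"})
```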

TripleO

- update the Heat templates in TripleO to use HOT, provider resources and software config

Tuskar

- rebuild Tuskar to save and retrieve Heat templates from Swift

- update CLI as necessary

Auto-Scaling

Requirements

Heat

- Ceilometer native auth for alarm notifications (and possibly metrics in future) (https://blueprints.launchpad.net/ceilometer/+spec/trust-alarm-notifier)

- hooks to do cleanup on scale-down (e.g. host evacuation) (https://blueprints.launchpad.net/heat/+spec/update-hooks)

- choose the victim on scale-down, or specify a strategy for choosing it (e.g. oldest first or newest first) (https://blueprints.launchpad.net/heat/+spec/autoscaling-parameters)

- support complex conditionals when choosing the victim on scale-down (e.g. receiving a notification or polling a metric via Ceilometer related to occupancy or other application metrics); this may be handled by the item above if Ceilometer can pass us the appropriate data when signalling the scaling policy, TBC (may not be required for Juno)

- Method to inhibit autoscaling/alarms during abandon/adopt (and suspend/resume?)
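The victim-selection and scale-down hook items can be sketched together. The sketch assumes that each group member records its creation time. The `Member` class, the strategy names and the `cleanup` callback are hypothetical and not part of Heat's autoscaling API.

```python
from collections.abc import Callable, Sequence
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Member:
    """A scaling-group member (hypothetical fields)."""
    name: str
    created_at: datetime


def oldest_first(members: Sequence[Member], count: int) -> list[Member]:
    return sorted(members, key=lambda m: m.created_at)[:count]


def newest_first(members: Sequence[Member], count: int) -> list[Member]:
    return sorted(members, key=lambda m: m.created_at, reverse=True)[:count]


STRATEGIES: dict[str, Callable[[Sequence[Member], int], list[Member]]] = {
    "oldest_first": oldest_first,
    "newest_first": newest_first,
}


def scale_down(
    members: Sequence[Member],
    count: int,
    strategy: str = "oldest_first",
    cleanup: Callable[[Member], None] = lambda member: None,
) -> list[Member]:
    """Remove `count` members chosen by `strategy` and return the survivors."""
    if not 0 <= count <= len(members):
        raise ValueError("count must be between 0 and the group size")
    victims = STRATEGIES[strategy](members, count)
    for victim in victims:
        # Hook for work such as evacuating a compute host before it goes away.
        cleanup(victim)
    victim_names = {v.name for v in victims}
    return [m for m in members if m.name not in victim_names]


group = [
    Member("node-a", datetime(2014, 1, 1)),
    Member("node-b", datetime(2014, 2, 1)),
    Member("node-c", datetime(2014, 3, 1)),
]
evacuated: list[str] = []
survivors = scale_down(group, 1, "oldest_first", cleanup=lambda m: evacuated.append(m.name))
```

Keeping strategies in a lookup table lets a user pick one by name. The sketch does not cover the more complex, metric-driven conditionals.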

High Availability

Requirements

TripleO

- Deploy HA Overcloud

- glusterfs

- pacemaker, corosync

- neutron (?)

- heat-engine A/A

- qpid proton (assuming AMQP 1.0 support has merged into oslo.messaging and each core project has adopted oslo.messaging; if not, we will use RabbitMQ)

- etc.

Tuskar-UI

- deployment workflow support for HA architecture

Node Management (Ironic)

Requirements

Ironic

- Ironic graduation

- CI jobs

- Nova driver

- Serial console

- Migration path

- User documentation

- Autodiscovery of nodes

- Ceilometer

- Tagging

  - tag nodes for nova scheduler

- scalability

Heat

- Ironic resource plugin (may not be required by us)

Metric Graphs

Requirements

Ceilometer

- Combine samples for different meters in a transformer to produce a single derived meter

- Rollup of coarse-grained statistics for UI queries

- Configurable data retention based on rollups

- Overarching "health" metric for nodes
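The rollup and retention items can be illustrated with fixed time windows. Below is a minimal sketch that assumes samples are (timestamp, value) pairs and that each window keeps min/max/avg/count. The names and the choice of statistics are assumptions, not Ceilometer's implementation.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Sample:
    timestamp: int  # seconds since the epoch
    value: float


def rollup(samples: list[Sample], period: int) -> dict[int, dict[str, float]]:
    """Summarise samples into fixed windows of `period` seconds.

    Each window is keyed by its start time and keeps min/max/avg/count, so UI
    queries over long time ranges can read a few coarse points instead of
    every raw sample.
    """
    buckets: dict[int, list[float]] = defaultdict(list)
    for s in samples:
        buckets[s.timestamp - s.timestamp % period].append(s.value)
    return {
        start: {"min": min(vals), "max": max(vals), "avg": mean(vals), "count": len(vals)}
        for start, vals in sorted(buckets.items())
    }


def apply_retention(
    samples: list[Sample], now: int, raw_retention: int, period: int
) -> tuple[list[Sample], dict[int, dict[str, float]]]:
    """Keep raw samples younger than `raw_retention`; older ones survive only as rollups."""
    recent = [s for s in samples if now - s.timestamp < raw_retention]
    expired = [s for s in samples if now - s.timestamp >= raw_retention]
    return recent, rollup(expired, period)


samples = [Sample(0, 1.0), Sample(30, 3.0), Sample(60, 5.0), Sample(90, 7.0), Sample(120, 2.0)]
recent, rolled = apply_retention(samples, now=150, raw_retention=60, period=60)
```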

To ensure scalability:

- Eliminate central agent SPoF

- SNMP batch mode, one bulk command per node per polling cycle

For additional data:

- Acquire hardware-oriented metrics via IPMI (e.g., voltage, fan speeds, etc.)

- Using Keystone v3 would avoid the need for IPMI credentials, allowing pollster-style interaction

- Look into consistent hashing and whether it can be reused in Ceilometer, though it requires a stateful DB
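Here is a minimal consistent-hashing sketch. It assumes the goal is to split the polling work for resources across several central agents, which would address the central agent SPoF listed above. The `HashRing` class and its parameters are hypothetical. Every agent must see the same membership list for the assignments to agree, which is presumably where the stateful DB mentioned above would come in.

```python
import bisect
import hashlib


class HashRing:
    """Consistent hash ring assigning resources to polling agents.

    Each agent is placed on the ring at `replicas` points; a resource belongs
    to the first agent point at or after the resource's own hash (wrapping
    around), so removing an agent only moves that agent's resources.
    """

    def __init__(self, agents: list[str], replicas: int = 100) -> None:
        if not agents:
            raise ValueError("at least one agent is required")
        points = sorted(
            (self._hash(f"{agent}-{i}"), agent)
            for agent in agents
            for i in range(replicas)
        )
        self._keys = [h for h, _ in points]
        self._agents = [a for _, a in points]

    @staticmethod
    def _hash(key: str) -> int:
        return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)

    def agent_for(self, resource_id: str) -> str:
        # bisect_left finds the first point >= the resource hash; wrap past the end.
        idx = bisect.bisect_left(self._keys, self._hash(resource_id)) % len(self._keys)
        return self._agents[idx]


resources = [f"node-{n}" for n in range(1000)]
before = {r: HashRing(["agent-1", "agent-2", "agent-3"]).agent_for(r) for r in resources[:1]}
ring3 = HashRing(["agent-1", "agent-2", "agent-3"])
ring2 = HashRing(["agent-1", "agent-2"])
before = {r: ring3.agent_for(r) for r in resources}
after = {r: ring2.agent_for(r) for r in resources}
```

When `agent-3` leaves, only the resources it owned are reassigned. This limited churn is what makes the technique attractive for spreading pollsters across agents.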

Tuskar-UI

- Add Ceilometer-based graphs

User Interfaces

Requirements

Tuskar-CLI

- create a tuskar-cli plugin for OpenStackClient

Tuskar-UI

- increase the modularity of views

- create a mechanism for asynchronous communication