Spec-QuantumMidoNetPlugin

Latest revision as of 23:29, 17 February 2013

Quantum MidoNet Plugin

Scope:

The goal of this blueprint is to implement a Quantum plugin for the MidoNet virtual networking platform.

Use Cases:

To provide MidoNet virtual networking technology as one of the options for those using Quantum for cloud networking orchestration.

Some of the benefits that come from using MidoNet in your IaaS cloud are:

- the ability to scale IaaS networking into the thousands of compute hosts

- the ability to offer L2 isolation which is not bounded by the VLAN limitation (4096 unique VLANs)

- making your entire IaaS networking layer completely distributed and fault-tolerant

Please note that, at the time of this writing, MidoNet only works with libvirt/KVM.

Implementation Overview:

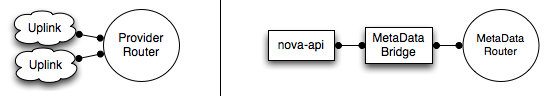

In the MidoNet virtual topology that gets constructed in the integration with Quantum, there is one virtual router that must always exist, called provider virtual router. It is a virtual router belonging to the service provider, and it is capable of routing traffic among the tenant routers as well as routing traffic into and out of the provider network. The virtual ports on this router are mapped to the interfaces on the 'edge' servers that are connected to the service provider's uplink routers. Additionally, to enable metadata service, another virtual router called metadata virtual router and a virtual bridge called metadata bridge must exist. The metadata bridge is connected to the metadata router, and there is a virtual port on this bridge that is mapped to an interface on the host that the nova-api (metadata server) is running on, effectively allowing the metadata traffic from the VMs to traverse through the virtual network to reach the metadata server. Note that there are additional steps needed to configure the metadata host so that it accepts both the VM traffic and the management traffic properly, but that is out of the scope of this document. It is expected that these virtual routers has already been set up and configured for the MidoNet plugin to function properly. The initial setup of the virtual topology looks as follows:
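A toy Python model can make the required starting topology concrete. It is a sketch under stated assumptions: `VirtualTopology`, `initial_topology`, the device names, and the host interface strings are all hypothetical and are not part of the MidoNet API or python-midonetclient. In practice these devices are set up outside the plugin before it starts.

```python
from collections import defaultdict


class VirtualTopology:
    """Toy model of MidoNet virtual devices and links (hypothetical, not the MidoNet API)."""

    def __init__(self):
        self.kinds = {}                    # device name -> "router" or "bridge"
        self.links = defaultdict(set)      # device name -> names of directly linked devices
        self.exterior = defaultdict(list)  # device name -> host interfaces its ports map to

    def add(self, name, kind):
        self.kinds[name] = kind

    def link(self, a, b):
        # Linking two devices connects a port on one to a port on the other.
        self.links[a].add(b)
        self.links[b].add(a)

    def map_port(self, device, host_interface):
        # A port bound to an interface on a physical host.
        self.exterior[device].append(host_interface)


def initial_topology(edge_interfaces, metadata_host_interface):
    """Build the devices that must exist before the plugin runs (names are illustrative)."""
    topo = VirtualTopology()
    topo.add("provider-router", "router")
    for iface in edge_interfaces:
        # One provider router port per uplink interface on an edge server.
        topo.map_port("provider-router", iface)
    topo.add("metadata-router", "router")
    topo.add("metadata-bridge", "bridge")
    topo.link("metadata-router", "metadata-bridge")
    # Port on the metadata bridge mapped to the nova-api (metadata server) host.
    topo.map_port("metadata-bridge", metadata_host_interface)
    return topo
```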

The files of the plugin are shown below:

```
quantum/quantum/plugins/midonet/__init__.py
quantum/quantum/plugins/midonet/midonet_lib.py
quantum/quantum/plugins/midonet/plugin.py
quantum/etc/plugins/midonet/midonet.ini
quantum/tests/unit/midonet/__init__.py
quantum/tests/unit/midonet/test_midonet_plugin.py
```

'quantum/quantum/plugins/midonet/plugin.py' contains the MidoNet Quantum plugin code, and 'quantum/quantum/plugins/midonet/midonet_lib.py' is a helper library for making calls to the MidoNet API. The plugin depends on the MidoNet Python client library, which is available on GitHub: https://github.com/midokura/python-midonetclient.

The MidonetPluginV2 class in 'midonet/plugin.py' extends db_base_plugin_v2.QuantumDbPluginV2, l3_db.L3_NAT_db_mixin, and portsecurity_db.PortSecurityDbMixin, and implements all the methods needed to provide the Quantum L2, L3, and security group features:

```python
class MidonetPluginV2(db_base_plugin_v2.QuantumDbPluginV2, l3_db.L3_NAT_db_mixin, portsecurity_db.PortSecurityDbMixin):
    ...
```
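The plugin class inherits its database behavior from the Quantum base class and mixins. The sketch below assumes, without confirming it from the plugin source, that an overridden method first stores the resource in the Quantum database through `super()` and then creates the matching MidoNet resource through the helper library. `FakeDbPluginV2`, `FakeMidonetHelper`, `SketchMidonetPlugin`, and `create_bridge` are hypothetical stand-ins, not the real classes in `plugin.py` or `midonet_lib.py`.

```python
import uuid


class FakeDbPluginV2:
    """Stand-in for db_base_plugin_v2.QuantumDbPluginV2: persists networks in a dict."""

    def __init__(self):
        self.networks = {}

    def create_network(self, context, network):
        net = dict(network["network"], id=str(uuid.uuid4()))
        self.networks[net["id"]] = net
        return net


class FakeMidonetHelper:
    """Hypothetical stand-in for the MidoNet API calls made by midonet_lib.py."""

    def __init__(self):
        self.bridges = {}

    def create_bridge(self, tenant_id, name, bridge_id):
        self.bridges[bridge_id] = {"tenant_id": tenant_id, "name": name}


class SketchMidonetPlugin(FakeDbPluginV2):
    """Override pattern: store in the Quantum DB first, then mirror the resource in MidoNet."""

    def __init__(self, helper):
        super().__init__()
        self.helper = helper

    def create_network(self, context, network):
        net = super().create_network(context, network)
        # Reusing the Quantum network ID as the bridge ID is an assumption of this sketch.
        self.helper.create_bridge(net["tenant_id"], net["name"], net["id"])
        return net
```

Keeping the MidoNet calls in a separate helper object means the plugin logic can be exercised with a fake helper, as the test sketch at the end of this page also does.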

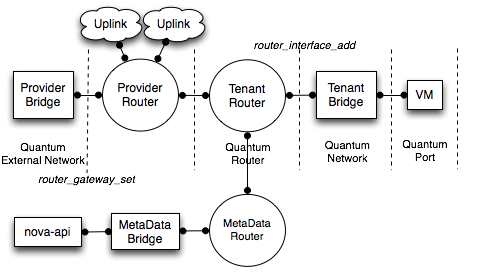

When a tenant creates a network in Quantum, a tenant virtual bridge is created in MidoNet. Just like a Quantum network, a virtual bridge has ports. When a VM is attached to Quantum network's port, it is also attached to MidoNet's virtual bridge port. VMs attached to a virtual bridge have L2 connectivity. When a tenant creates a router in Quantum, a tenant virtual router is created in MidoNet. Just like l3_agent in Quantum, tenant virtual router acts as the gateway for the tenant networks, and it can also do NAT to implement the floating IP feature. Thus with MidoNet plugin, there is no need to run l3_agent. The tenant router is linked to the provider router in 'router_gateway_set' method, and the tenant bridge is linked to the tenant router in 'router_interface_add' method, connecting the VMs to the internet. Tenant router is also linked to metadata router, and this happens when it is first created. Quantum's external networks are treated slightly differently in that they are linked directly to the provider router (therefore called provider bridges). Once the routers and bridges are linked, the virtual topology would look as follows:
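The mapping from Quantum calls to MidoNet links can be sketched as a small graph. The method names mirror the ones mentioned above, but the class, the device naming scheme, and the signatures are hypothetical. `connected` only checks that devices are linked to each other; it does not model routing or NAT.

```python
from collections import deque


class TenantTopology:
    """Toy model of how Quantum calls map onto MidoNet links (hypothetical names)."""

    def __init__(self):
        # Pre-existing devices set up by the operator.
        self.links = {"provider-router": set(), "metadata-router": set()}

    def _link(self, a, b):
        self.links.setdefault(a, set()).add(b)
        self.links.setdefault(b, set()).add(a)

    def create_network(self, net_id, external=False):
        bridge = "bridge-" + net_id
        self.links.setdefault(bridge, set())
        if external:
            # External networks are linked directly to the provider router.
            self._link(bridge, "provider-router")
        return bridge

    def create_router(self, router_id):
        router = "router-" + router_id
        # Tenant routers are linked to the metadata router when first created.
        self._link(router, "metadata-router")
        return router

    def router_gateway_set(self, router_id):
        self._link("router-" + router_id, "provider-router")

    def router_interface_add(self, router_id, net_id):
        self._link("router-" + router_id, "bridge-" + net_id)

    def connected(self, a, b):
        """Breadth-first search over links; says nothing about routing policy."""
        seen, queue = {a}, deque([a])
        while queue:
            node = queue.popleft()
            if node == b:
                return True
            for nxt in self.links.get(node, set()) - seen:
                seen.add(nxt)
                queue.append(nxt)
        return False
```

In this model a tenant network gains a path to an external network only after both `router_interface_add` and `router_gateway_set` have been called.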

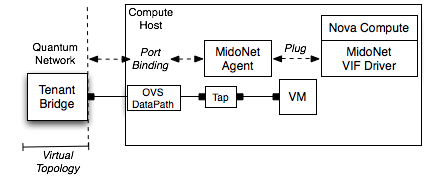

The plugging of VMs is handled by both Nova's libvirt VIF driver and MidoNet agent that is running on each compute host. VIF driver is responsible for creating a tap interface for the VM, and the MidoNet agent is responsible for binding the tap interface to the appropriate virtual port. To enable the MidoNet libvirt VIF driver, you need to specify its path in Nova's configuration file (shown in the Configuration variables section). It is not one of Nova's available default VIF drivers because it requires that the VM's interface type to be 'generic ethernet' in libvirt, but as of G-3, this interface type is not supported. You would have to get the VIF driver from GitHub: https://github.com/midokura/midonet-openstack. It is VIF driver's responsibility to notify MidoNet agent to bind the interface to a port using MidoNet API, which would effectively plug a VM into MidoNet virtual network. The diagram below shows what happens on the compute host:
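The division of labor between the VIF driver and the MidoNet agent can be sketched as follows. This is not the midonet-openstack driver: `SketchVifDriver`, `FakeHostAgent`, and the injected `create_tap` callable are hypothetical, and the 14-character tap naming rule is borrowed from Nova's convention as an assumption. In the real system the binding request goes through the MidoNet API rather than a direct method call.

```python
from dataclasses import dataclass, field


@dataclass
class FakeHostAgent:
    """Stand-in for the MidoNet agent's tap-to-port bindings on one compute host."""
    bindings: dict = field(default_factory=dict)  # tap name -> virtual port ID

    def bind(self, tap_name, port_id):
        self.bindings[tap_name] = port_id

    def unbind(self, tap_name):
        self.bindings.pop(tap_name, None)


def tap_name_for(port_id, max_len=14):
    # Assumed Nova-style naming: "tap" + port ID, cut to fit Linux interface-name limits.
    return ("tap" + port_id)[:max_len]


class SketchVifDriver:
    """Hypothetical plug/unplug flow; not the midonet-openstack driver."""

    def __init__(self, agent, create_tap):
        self.agent = agent            # reached through the MidoNet API in the real system
        self.create_tap = create_tap  # callable that creates the tap device on the host

    def plug(self, port_id):
        tap = tap_name_for(port_id)
        self.create_tap(tap)           # 1. the VIF driver creates the tap interface
        self.agent.bind(tap, port_id)  # 2. the agent binds it to the virtual port
        return tap

    def unplug(self, port_id):
        self.agent.unbind(tap_name_for(port_id))
```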

MidoNet requires the 'generic ethernet' interface type because it uses only the Open vSwitch kernel module (the datapath), with the MidoNet agent replacing the Open vSwitch user-space daemon. Currently, to run a custom software switch with libvirt, the VM interface type must be marked as 'generic ethernet' so that libvirt does not try to add the interface to other well-known software switches.
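In libvirt domain XML, the 'generic ethernet' type corresponds to `<interface type='ethernet'>`, which lets the guest use a tap device without libvirt attaching it to one of its known switch types. The helper below builds such an element for a pre-created tap with Python's standard `xml.etree.ElementTree`; the function name and the `virtio` model default are illustrative assumptions, and a real driver would produce this element through Nova's own libvirt configuration code.

```python
import xml.etree.ElementTree as ET


def ethernet_interface_xml(mac, tap_name, model="virtio"):
    """Build a libvirt <interface type='ethernet'> element for an existing tap device."""
    iface = ET.Element("interface", type="ethernet")
    ET.SubElement(iface, "mac", address=mac)
    # The guest NIC is backed by this tap; the MidoNet agent handles the switching.
    ET.SubElement(iface, "target", dev=tap_name)
    ET.SubElement(iface, "model", type=model)
    return ET.tostring(iface, encoding="unicode")
```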

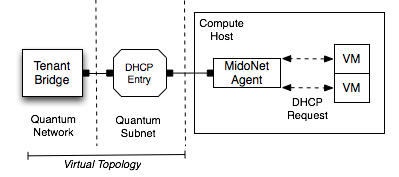

MidoNet comes with its own DHCP implementation, so the Quantum's DHCP agent does not need to run. Each virtual bridge has MidoNet's DHCP service is associated. MidoNet DHCP service stores the subnet entries which are mapped to Quantum subnets (However, MidoNet currently only supports one subnet per network). The Quantum subnets are registered to MidoNet DHCP service when created. The MidoAgent running on the hypervisor host intercepts the DHCP requests and responds appropriately. The following diagram shows what MidoNet DHCP service looks like on a compute host:
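A per-bridge DHCP table can be sketched in a few lines. The one-subnet limit comes from the text above; everything else is an assumption for illustration, in particular that replies are built from MAC-to-IP host entries taken from Quantum's fixed IP allocations. `BridgeDhcpService` and its methods are hypothetical and do not reflect MidoNet's actual data model.

```python
import ipaddress


class BridgeDhcpService:
    """Toy per-bridge DHCP table (hypothetical; not MidoNet's data model)."""

    def __init__(self):
        self.subnet = None
        self.gateway = None
        self.hosts = {}  # MAC address -> fixed IP allocated by Quantum (assumption)

    def register_subnet(self, cidr, gateway):
        if self.subnet is not None:
            # Mirrors the current limitation: one subnet per network.
            raise ValueError("MidoNet supports only one subnet per network")
        self.subnet = ipaddress.ip_network(cidr)
        self.gateway = ipaddress.ip_address(gateway)

    def add_host(self, mac, ip):
        addr = ipaddress.ip_address(ip)
        if self.subnet is None or addr not in self.subnet:
            raise ValueError(f"{ip} is not in the registered subnet")
        self.hosts[mac.lower()] = addr

    def handle_request(self, mac):
        """Lease details the agent would answer with for `mac`, or None if unknown."""
        addr = self.hosts.get(mac.lower())
        if addr is None:
            return None
        return {"yiaddr": str(addr), "netmask": str(self.subnet.netmask),
                "router": str(self.gateway)}
```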

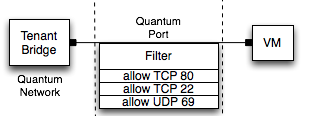

As mentioned before, MidoNet plugin extends SecurityGroupDbMixin, and implements all the security groups features using MidoNet's packet filtering API. Because MidoNet handles the entire security groups implementation, Quantum's security group agent does not need to run. The filter rules are applied on the virtual port as shown below:
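Quantum security group rules are whitelists: inbound traffic that matches no rule is dropped. The sketch below evaluates ingress rules for one port in that spirit. `SgRule`, `port_inbound_filter`, and the packet dictionary format are hypothetical and simplified (no egress rules, no remote group references), and the `established` flag stands in for whatever connection tracking the real filter uses. This is not MidoNet's packet filtering API.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class SgRule:
    """Simplified ingress security group rule."""
    protocol: Optional[str] = None   # "tcp", "udp", "icmp", or None for any protocol
    port_min: Optional[int] = None
    port_max: Optional[int] = None
    remote_ip_prefix: str = "0.0.0.0/0"

    def matches(self, pkt):
        if self.protocol is not None and pkt["protocol"] != self.protocol:
            return False
        if self.port_min is not None:
            port = pkt.get("dst_port")
            high = self.port_max if self.port_max is not None else self.port_min
            if port is None or not self.port_min <= port <= high:
                return False
        return ipaddress.ip_address(pkt["src_ip"]) in ipaddress.ip_network(self.remote_ip_prefix)


def port_inbound_filter(rules, pkt):
    """Evaluate a packet headed into a VM port: accept on first match, else drop."""
    if pkt.get("established"):
        return "ACCEPT"  # return traffic of a flow the VM started (assumed stateful filter)
    for rule in rules:
        if rule.matches(pkt):
            return "ACCEPT"
    return "DROP"
```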

As a final implementation note, for G-3 the plugin will support the metadata server, but not in combination with overlapping IP address support.

Configuration variables:

1. To specify the MidoNet plugin in Quantum (quantum.conf):

```ini
core_plugin = quantum.plugins.midonet.plugin.MidonetPluginV2
```

2. MidoNet plugin specific configuration parameters (midonet.ini):

```ini
[midonet]
midonet_uri = <MidoNet API server URI>
username = <MidoNet admin username>
password = <MidoNet admin password>
project_id = <ID of the project that MidoNet admin user belongs to>
provider_router_id = <Virtual provider router ID>
metadata_router_id = <Virtual metadata router ID>
```

3. Set the MidoNet libvirt VIF driver in Nova's configuration (nova.conf):

```ini
libvirt_vif_driver=midonet.nova.virt.libvirt.vif.MidonetVifDriver
```
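Before starting Quantum with the MidoNet plugin, it can help to check that midonet.ini from step 2 contains every option. The function below is a standalone sketch using Python's `configparser`; Quantum itself reads these options through its own configuration library, and `load_midonet_config` and `REQUIRED_KEYS` are hypothetical names.

```python
import configparser

# Options listed for the [midonet] section in step 2.
REQUIRED_KEYS = ("midonet_uri", "username", "password", "project_id",
                 "provider_router_id", "metadata_router_id")


def load_midonet_config(text):
    """Parse midonet.ini content and fail if any [midonet] option is missing or empty."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    section = parser["midonet"]  # raises KeyError if the section itself is absent
    missing = [key for key in REQUIRED_KEYS if not section.get(key)]
    if missing:
        raise ValueError("missing [midonet] options: " + ", ".join(missing))
    return {key: section[key] for key in REQUIRED_KEYS}
```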

Test Cases:

Currently, 'quantum/tests/unit/midonet/test_midonet_plugin.py' is the only unit test module for the MidoNet plugin. It tests simple CRUD operations on networks. More test cases will be added in the future.
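A network CRUD test can run without a MidoNet API server by replacing the client with `unittest.mock.Mock`. This is a hypothetical illustration, not the contents of test_midonet_plugin.py: `TinyNetworkPlugin` and the `create_bridge`, `update_bridge`, and `delete_bridge` calls are invented for the example.

```python
import unittest
from unittest import mock


class TinyNetworkPlugin:
    """Hypothetical plugin: keeps networks locally and mirrors them as MidoNet bridges."""

    def __init__(self, client):
        self.client = client
        self.networks = {}

    def create_network(self, net_id, name):
        self.networks[net_id] = {"id": net_id, "name": name}
        self.client.create_bridge(net_id, name)
        return self.networks[net_id]

    def update_network(self, net_id, name):
        self.networks[net_id]["name"] = name
        self.client.update_bridge(net_id, name)
        return self.networks[net_id]

    def delete_network(self, net_id):
        del self.networks[net_id]
        self.client.delete_bridge(net_id)


class NetworkCrudTest(unittest.TestCase):
    def setUp(self):
        self.client = mock.Mock()  # records calls instead of contacting a MidoNet API server
        self.plugin = TinyNetworkPlugin(self.client)

    def test_create_update_delete(self):
        self.plugin.create_network("n1", "net1")
        self.client.create_bridge.assert_called_once_with("n1", "net1")
        self.assertEqual(self.plugin.update_network("n1", "renamed")["name"], "renamed")
        self.client.update_bridge.assert_called_once_with("n1", "renamed")
        self.plugin.delete_network("n1")
        self.client.delete_bridge.assert_called_once_with("n1")
        self.assertNotIn("n1", self.plugin.networks)
```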