Shares Service

Introduction

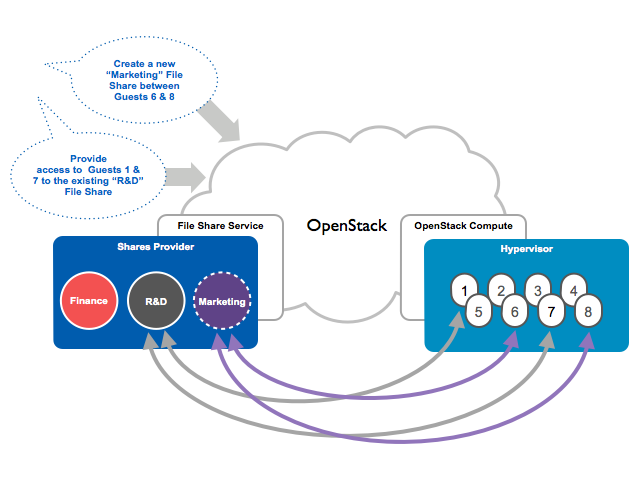

This page documents the concept and vision for establishing a shared file system service for OpenStack. The development name for this project is Manila. We propose, and are in the process of implementing, a new OpenStack service (originally based on Cinder). Cinder presently functions as the canonical storage provisioning control plane in OpenStack for block storage and also delivers a persistence model for instance storage. The File Share Service prototype, in a similar manner, provides coordinated access to shared or distributed file systems. While file shares would primarily be consumed by OpenStack Compute instances, the service is also intended to be accessible as an independent capability, in line with the modular design established by other OpenStack services. The design and prototype implementation provide extensibility for multiple backends (to support vendor-specific or file-system-specific nuances and capabilities) but are intended to be sufficiently abstract to accommodate a variety of shared or distributed file system types. The team's intention is to introduce the capability as an OpenStack incubated project in the Juno timeframe, graduate it, and submit it for consideration as a core service as early as the as-yet-unnamed "K" release.

According to IDC in its “Worldwide File-Based Storage 2012-2016 Forecast” (doc#235910, July 2012), file-based storage continues to be a thriving market, with spending on file-based storage solutions projected to exceed $34.6 billion in 2016. Of the 27 exabytes (EB) of total disk capacity estimated to have shipped in 2012, IDC projected that nearly 18 EB were file-based capacity, accounting for over 65% of all disk shipped by capacity. A diversity of applications, from server virtualization to relational or distributed databases to collaborative content creation, often depend on the performance, scalability, and simplicity of management associated with file-based systems, and on the large ecosystem of supporting software products. OpenStack is commonly contemplated as an option for deploying classic infrastructure in an "as a Service" model, but without specific accommodation for shared file systems it represents an incomplete solution.

Projects

Project Plan

Manila

| Source code | https://github.com/stackforge/manila |
| Bug tracker | https://bugs.launchpad.net/manila |
| Feature tracker | https://blueprints.launchpad.net/manila |

Python Manila Client

| Source code | https://github.com/stackforge/python-manilaclient |
| Bug tracker | https://bugs.launchpad.net/python-manilaclient |
| Feature tracker | https://blueprints.launchpad.net/python-manilaclient |

Meetings

Manila Project Agenda & Meetings

Incubation / Graduation

Track progress towards Manila Incubation & Graduation.

Design

Use Cases (DRAFT)

1) Coordinate and provide shared access to a previously (externally) established share / export

2) Create a new commonly accessible (across a defined set of instances) share / export

3) Bare-metal / non-virtualized consumption

Accommodate and provide mechanisms for last-mile consumption of shares by consumers of the service that aren't mediated by Nova.

4) Cross-tenant sharing

Coordinate shares across tenants

5) Wrap Manila around pre-existing shares / exports so that they can be provisioned.

Blueprints

The original master blueprint is here: File Shares Service

1) File Shares Service

The service was initially conceived as an addition of a separate File Share Service, albeit delivered within the Cinder (OpenStack Block Storage) project given the opportunity to make use of common code. In June of 2013, however, the decision was made to establish an independent development project to accommodate:

- Creation of file system shares (e.g. the create API needs to support a "protocol" parameter as well as permissions "mask" and "ownership" parameters)

- Deletion of file system shares

- List, show, provide and deny access to, and list the access rules of file system shares

- Create, list, and delete snapshots / clones of file system shares

- Coordination of mounting file system shares

- Unmounting file system shares

Implementation status: development underway in [[1]]
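
The operations listed above can be sketched as a small in-memory model. This is only an illustration of the shape such an interface might take: the class, method, and field names (`InMemoryShareService`, `create`, `allow_access`, `mask`, `owner`, and so on) are hypothetical and are not taken from the Manila API. Mount coordination is left out.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Share:
    """Hypothetical record for one file system share."""
    share_id: int
    protocol: str          # e.g. "NFS" or "CIFS"
    size_gb: int
    owner: str             # illustrative "ownership" parameter
    mask: int              # illustrative permissions "mask", e.g. 0o755
    access_rules: set = field(default_factory=set)
    snapshots: list = field(default_factory=list)


class InMemoryShareService:
    """Toy stand-in for the service; nothing here is the real Manila API."""

    def __init__(self):
        self._ids = count(1)
        self._shares = {}

    def create(self, protocol, size_gb, owner, mask=0o755):
        share = Share(next(self._ids), protocol.upper(), size_gb, owner, mask)
        self._shares[share.share_id] = share
        return share

    def delete(self, share_id):
        del self._shares[share_id]

    def list_shares(self):
        return sorted(self._shares.values(), key=lambda s: s.share_id)

    def show(self, share_id):
        return self._shares[share_id]

    def allow_access(self, share_id, client):
        # "client" is an assumed identifier such as an IP address.
        self._shares[share_id].access_rules.add(client)

    def deny_access(self, share_id, client):
        self._shares[share_id].access_rules.discard(client)

    def list_access(self, share_id):
        return sorted(self._shares[share_id].access_rules)

    def create_snapshot(self, share_id, name):
        self._shares[share_id].snapshots.append(name)

    def list_snapshots(self, share_id):
        return list(self._shares[share_id].snapshots)

    def delete_snapshot(self, share_id, name):
        self._shares[share_id].snapshots.remove(name)
```

A caller would create a share with `create("nfs", 10, "tenant-a")`, grant a client access with `allow_access`, and later revoke it with `deny_access`. A real service would persist this state and delegate the work to a backend.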

- Manila API Documentation

- Manila API Roadmap

- Manila Database Specification

NOTE: Manila started as a fork of Cinder because Cinder already provides for many of the concepts that a File Shares service would depend upon. The present Cinder concepts of capacity, target (server in NAS parlance), and initiator (the client when referring to shared file systems) are common conceptually (if not entirely semantically) and broadly applicable to both block and file-based storage. Specific Cinder capabilities (such as the filter scheduler, the notion of type, and extra specs) likewise apply to provisioning shared file systems. The initial prototype of the File Share Service is thus based on an evolution of Cinder. Manila, however, addresses a variety of additional concerns that aren't relevant to Cinder operation. After discussion within the Cinder community and consultation with members of the Technical Committee, it was determined that establishing a separate project expressly designed and developed to deliver shared / distributed file systems as a service was the most viable approach. The intention is to move any commonality between Cinder and the File Share Service into Oslo where sensible.

2) Manila Reference Provider(s)

Creation of a reference Manila provider (commonly also referred to as a driver) for shared file system use under the proposed expanded API. As an example, a NetApp provider would be able to advertise, accept, and respond to requests for NFSv3, NFSv4, NFSv4.1 (w/ pNFS), and contemporary CIFS / SMB protocols (e.g. versions 2, 2.1, 3). Additional modification of python-cinderclient will be necessary to provide for the expanded array of request parameters. Both a vendor-independent reference backend and a NetApp-specific backend are part of the aforementioned prototype and of the submission.

Implementation status: completed.
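
As a rough illustration of what "advertise, accept, and respond" could look like for a provider, the sketch below defines a hypothetical base class and one example backend. The names `ShareProvider`, `ExampleNFSProvider`, `supports`, and `create_share`, as well as the export path layout, are assumptions made for this example and do not reflect the actual Manila driver interface.

```python
from abc import ABC, abstractmethod


class ShareProvider(ABC):
    """Hypothetical provider (driver) interface; not Manila's real base class."""

    # Protocols this backend advertises, e.g. {"NFSv3", "NFSv4"}.
    protocols = frozenset()

    def supports(self, protocol):
        return protocol in self.protocols

    @abstractmethod
    def create_share(self, name, size_gb, protocol):
        """Provision the share and return its export location."""


class ExampleNFSProvider(ShareProvider):
    """Illustrative backend that only advertises NFS variants."""

    protocols = frozenset({"NFSv3", "NFSv4", "NFSv4.1"})

    def __init__(self, host):
        self.host = host

    def create_share(self, name, size_gb, protocol):
        if not self.supports(protocol):
            raise ValueError(f"{protocol} is not advertised by this backend")
        # A real provider would call the storage system here; this sketch
        # only builds an assumed export location string.
        return f"{self.host}:/shares/{name}"
```

Keeping the advertised protocols as data on the class lets a caller reject an unsupported request before any backend call is made.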

3) Multi-backend support

This blueprint allows one Cinder node to manage multiple share backends. A backend can run either or both of the share and volume services. Support for shares in the filter scheduler allows the cloud administrator to manage large-scale share storage by filtering backends based on predefined parameters.

Implementation status: completed.
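
The idea of filtering backends on predefined parameters can be sketched as follows. This is a simplified illustration, not the Cinder or Manila filter scheduler: the `Backend` fields, the two filter functions, and the "most free space wins" weighing rule are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    """Hypothetical view of a share backend as seen by the scheduler."""
    name: str
    free_gb: int
    protocols: frozenset


def capacity_filter(backend, request):
    # Pass only backends with enough free space for the request.
    return backend.free_gb >= request["size_gb"]


def protocol_filter(backend, request):
    # Pass only backends that advertise the requested protocol.
    return request["protocol"] in backend.protocols


def schedule(backends, request, filters=(capacity_filter, protocol_filter)):
    """Return the passing backend with the most free space, or None."""
    candidates = [b for b in backends if all(f(b, request) for f in filters)]
    return max(candidates, key=lambda b: b.free_gb, default=None)
```

Each filter is an independent predicate, so an administrator-defined parameter (for example, a type or extra spec) could be added as another function in the `filters` tuple without touching the others.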

4) End to End Experience (Automated Mounting)

A proposal for handling injection / updates of mounts to instantiated guests operating in the Nova context. One possible solution is a listener / agent that could either be interacted with directly or, more likely, poll for or receive updates from instance metadata changes. The possible use of either cloud-init or VirtFS (which would attach shared file systems to instances in a manner similar to Cinder block storage) is also under consideration.

Additional discussion here: Manila Storage Integration Patterns

Implementation status: Scoping.
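
One way to picture the agent approach is as a reconciliation step: compare the mounts that instance metadata asks for with the mounts currently present in the guest, then derive the commands needed to close the gap. The sketch below assumes a metadata format that maps mount points to export locations and assumes NFS exports. Both function names are hypothetical, and no metadata service is actually queried.

```python
def plan_mount_changes(desired, current):
    """Compare desired mounts (from metadata) with current ones.

    Both arguments map a mount point to an export location, for example
    {"/mnt/data": "192.0.2.10:/shares/data"}. Returns (to_unmount, to_mount)
    as sorted lists; a mount point whose export changed appears in both.
    """
    to_unmount = sorted(
        point for point, export in current.items()
        if desired.get(point) != export
    )
    to_mount = sorted(
        (point, export) for point, export in desired.items()
        if current.get(point) != export
    )
    return to_unmount, to_mount


def to_commands(to_unmount, to_mount):
    """Render the plan as argument lists an agent could pass to subprocess.run.

    Assumes NFS exports; other protocols would need a different mount type.
    """
    commands = [["umount", point] for point in to_unmount]
    commands += [
        ["mount", "-t", "nfs", export, point] for point, export in to_mount
    ]
    return commands
```

Separating the planning step from command execution keeps the comparison logic testable without root privileges, whether the agent polls metadata or is notified of changes.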

5) The last mile problem

Accommodations for a variety of use cases / networking topologies (ranging from flat networks, to Neutron SDNs, to hypervisor-mediated options) for connecting shares to instances are discussed here: Manila Networking

Implementation status: not started.

6) Tenant and Administrative UI

Manila must expose both administrative and tenant Horizon interfaces.

Implementation status: in design phase. The initial Horizon UI will borrow heavily from the existing Cinder tenant-facing and administrative UI.

Reference

Please refer to the OpenStack Glossary for a standard definition of terms. Additionally, the following may clarify, add to, or differ slightly from those definitions:

Volume:

- Persistent (non-ephemeral) block-based storage. Note that this definition may (and often does) conflict with vendor-specific definitions.

File System:

- A system for organizing and storing data in directories and files. A shared file system provides (and arbitrates) concurrent access from multiple clients generally over IP networks and via established protocols.

NFS:

CIFS:

GlusterFS:

Ceph:

Subpages

- Manila/API

- Manila/Concepts

- Manila/Debugging

- Manila/Etherpads

- Manila/Graduation

- Manila/IPv6

- Manila/IcehouseDevstack

- Manila/Ideas Backlog

- Manila/Incubation Application

- Manila/JunoSummitPresentation

- Manila/Kilo Network Changes

- Manila/Kilo Network Spec

- Manila/ManilaFileShareAccessOfAD

- Manila/ManilaWithGREtunnels

- Manila/ManilaWithVXLANtunnels

- Manila/Manila Storage Integration Patterns

- Manila/Meetings

- Manila/MountAutomation

- Manila/Networking

- Manila/Networking/Gateway mediated

- Manila/Program Application

- Manila/ProjectPlan

- Manila/Provide private data storage API for drivers

- Manila/QoS

- Manila/Quobyte

- Manila/Replication Design Notes

- Manila/Replication Use Cases

- Manila/SAP enterprise team

- Manila/SteinCycle

- Manila/TrainCycle

- Manila/design

- Manila/design/

- Manila/design/access groups

- Manila/design/manila-auth-access-keys

- Manila/design/manila-ceph-native-driver

- Manila/design/manila-generic-groups

- Manila/design/manila-liberty-consistency-groups

- Manila/design/manila-liberty-consistency-groups/api-schema

- Manila/design/manila-mitaka-data-replication

- Manila/design/manila-newton-hpb-support

- Manila/docs

- Manila/docs/API-roadmap

- Manila/docs/HOWTO use manila with horizon

- Manila/docs/HOWTO use tempest with manila

- Manila/docs/Manila Developer Setup Fedora19

- Manila/docs/Setting up DevStack with Manila on Fedora 20

- Manila/docs/db

- Manila/issue-neutron-on-localhost-ipv6

- Manila/specs/scenario-tests

- Manila/specs/split-storage-actions-computing-and-networking

- Manila/usecases