Sahara/NextGenArchitecture

Currently Sahara is a single service that runs in one Python process. The REST API, DB interop, and provisioning mechanism all work together in that process. This causes many problems, such as high I/O load during cluster creation and the lack of HA.

The Sahara architecture should be improved in two phases.

Contents

- 1 Phase 1: Split Sahara to different services

- 2 Service sahara-api (s-api)

- 3 Service sahara-engine (s-engine)

- 4 Task execution framework

- 5 Conductor module

- 6 Phase 2: Implement HA for provisioning tasks

- 7 Main operations execution flows

- 8 Additional notes

- 9 Some notes and FAQ

- 10 Potential metrics to determine architecture upgrade success

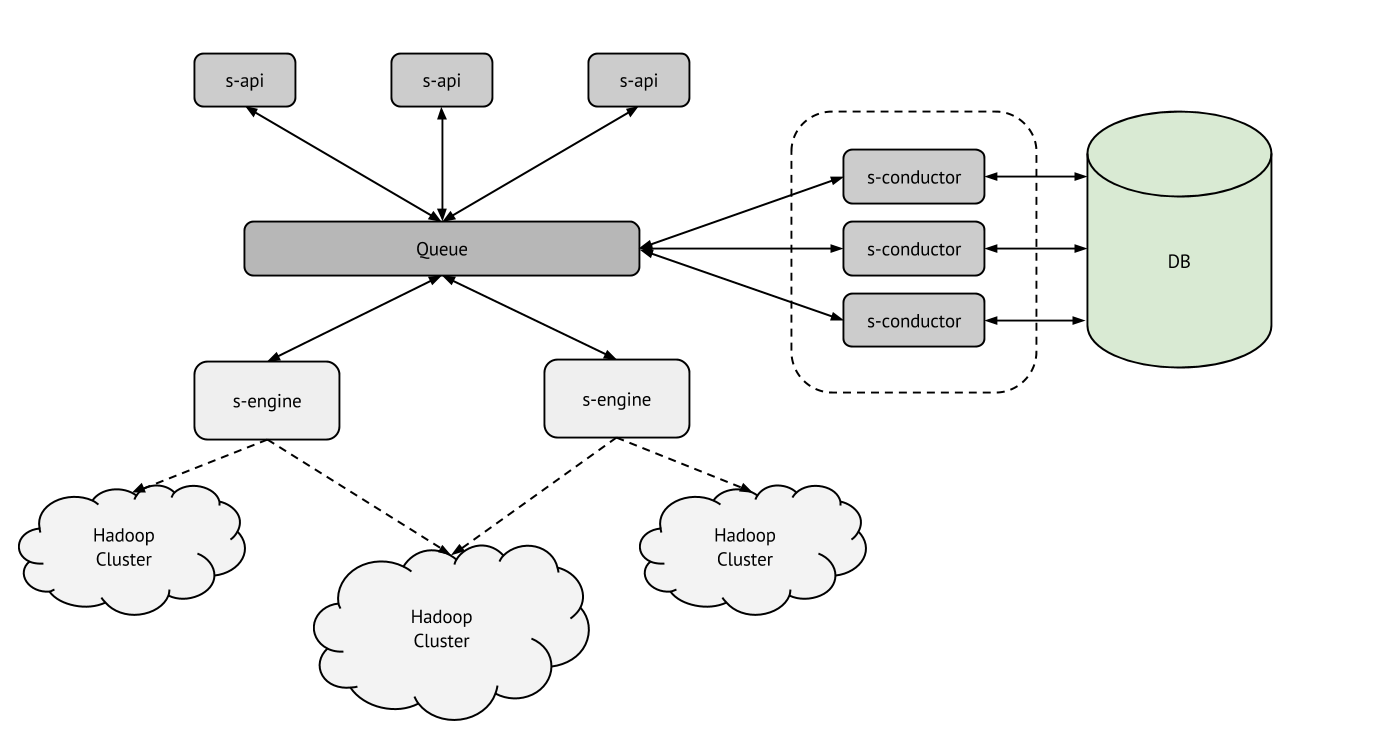

Phase 1: Split Sahara to different services

Sahara should be split into two horizontally scalable services:

- sahara-api (s-api);

- sahara-engine (s-engine).

Also, the DB access layer is to be decoupled from the main code as a 'conductor' module.

In this phase the following problems should be solved:

- decouple UI from provisioning:

  - UI should stay responsive even if the provisioning part is fully busy;

- launching large clusters:

  - need to use several engines to provision one Hadoop cluster;

  - split cluster creation into many atomic tasks;

  - it is very important to support parallel SSH to many nodes from many engines;

- starting many clusters at one time:

  - need to utilize more than one process;

  - need to utilize more than one node;

- long-running tasks:

  - we should avoid waiting for a long time on one engine to minimize the risk of interruption;

- new Provisioning Plugins SPI:

  - it should make provisioning easier for plugins;

  - it should generate tasks that can be executed simultaneously;

- support EDP:

  - it will require long-running tasks;

  - Sahara should support several simultaneously running tasks (several jobs on one cluster);

- something else?

The next several sections explain the proposed Sahara services and their responsibilities.

Service sahara-api (s-api)

It should be horizontally scalable and use RPC to run tasks.

Responsibilities:

- REST API:

  - HTTP endpoint/method validation;

  - object existence validation;

  - jsonschema-based validation;

  - plugin- or operation-specific validation:

    - Sahara-side validation (for example, deleting templates that are used in other templates or clusters should be forbidden);

    - ask the plugin to validate the operation;

  - create the initial task for the operation;

  - this service will be the endpoint for Sahara and should be registered in Keystone; when several sahara-api instances are running, HAProxy should be used as the endpoint to balance load between them;

- interop with OpenStack components:

  - auth using Keystone (in the future we'll need to use trusts or something else to support long-running tasks and autoscaling);

  - validation using Nova, Glance, Cinder, Swift, etc.
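The validation layers listed above could be chained roughly as follows. This is a minimal sketch that uses only the standard library: the hand-rolled `check_schema` is a stand-in for real jsonschema-based validation, and every name in it (`TEMPLATE_SCHEMA`, `FakePlugin`, `validate_create_template`, `validate_delete_template`, the in-memory `TEMPLATES` and `CLUSTERS` stores) is hypothetical and not part of Sahara's actual API.

```python
class ValidationError(Exception):
    """Raised when a request fails any validation layer."""


# Hypothetical, simplified schema: required field name -> expected type.
TEMPLATE_SCHEMA = {"name": str, "plugin_name": str, "node_count": int}

# Hypothetical in-memory stand-ins for data that would live behind the conductor.
TEMPLATES = {"t1": {"name": "t1", "plugin_name": "fake", "node_count": 3}}
CLUSTERS = {"c1": {"name": "c1", "template": "t1"}}


def check_schema(body, schema):
    """Stand-in for jsonschema-based validation: required keys and types."""
    for key, expected in schema.items():
        if key not in body:
            raise ValidationError("missing field: %s" % key)
        if not isinstance(body[key], expected):
            raise ValidationError("wrong type for field: %s" % key)


class FakePlugin:
    """Hypothetical plugin that accepts only odd node counts."""

    def validate(self, body):
        if body["node_count"] % 2 == 0:
            raise ValidationError("plugin requires an odd node count")


def validate_create_template(body, plugin):
    check_schema(body, TEMPLATE_SCHEMA)    # schema validation
    if body["name"] in TEMPLATES:          # object existence validation
        raise ValidationError("template already exists: %s" % body["name"])
    plugin.validate(body)                  # plugin-specific validation


def validate_delete_template(name):
    if name not in TEMPLATES:
        raise ValidationError("template not found: %s" % name)
    # Sahara-side rule: templates that are still in use must not be deleted.
    in_use = [c["name"] for c in CLUSTERS.values() if c["template"] == name]
    if in_use:
        raise ValidationError("template is used by clusters: %s" % ", ".join(in_use))
```

Running the cheap, generic checks first means the plugin is only asked to validate requests that are already well-formed.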

Service sahara-engine (s-engine)

The Sahara engine service should execute tasks and create new ones. It can call plugins to perform plugin-specific work.

Responsibilities:

- run tasks and long-running tasks;

- interop with other OpenStack components:

  - create different resources (instances, volumes, IPs, etc.) using Nova, Glance, Cinder.

Task execution framework

We should draft a task execution framework and implement it to take advantage of the horizontally scalable architecture.

Etherpad for discussing and drafting it: https://etherpad.openstack.org/savanna_tasks_framework

Conductor module

This module should take responsibility for working with the database and hide the DB from all other components.

Responsibilities:

- database interop;

- no interop with other Sahara and OpenStack components.
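A minimal sketch of the idea, assuming a single `clusters` table: all SQL lives inside one class, and callers only ever see plain dicts. The standard-library `sqlite3` module is swapped in here purely to keep the example self-contained; the class name `Conductor`, its method names, the table layout, and the default status value are all hypothetical.

```python
import sqlite3


class Conductor:
    """Hypothetical conductor: the only place that talks to the database."""

    def __init__(self, path=":memory:"):
        self._conn = sqlite3.connect(path)
        self._conn.row_factory = sqlite3.Row
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS clusters ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "name TEXT NOT NULL UNIQUE, "
            "status TEXT NOT NULL)"
        )

    def cluster_create(self, values):
        with self._conn:  # commits on success, rolls back on error
            cur = self._conn.execute(
                "INSERT INTO clusters (name, status) VALUES (?, ?)",
                (values["name"], values.get("status", "Validating")),
            )
        return self.cluster_get(cur.lastrowid)

    def cluster_get(self, cluster_id):
        row = self._conn.execute(
            "SELECT id, name, status FROM clusters WHERE id = ?", (cluster_id,)
        ).fetchone()
        # Callers receive a plain dict, never a DB row or ORM object.
        return dict(row) if row is not None else None

    def cluster_update(self, cluster_id, values):
        with self._conn:
            self._conn.execute(
                "UPDATE clusters SET status = ? WHERE id = ?",
                (values["status"], cluster_id),
            )
        return self.cluster_get(cluster_id)
```

Because nothing outside the class imports a DB type, the storage backend can change without touching sahara-api or sahara-engine code.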

Phase 2: Implement HA for provisioning tasks

All important operations in Sahara should be reliable, which means that Sahara should support replaying transactions and rollbacks for all tasks. The TaskFlow library in stackforge (https://wiki.openstack.org/wiki/TaskFlow) is designed for this, and it looks like it could be used for Phase 2 of improving the Sahara architecture. To solve reliability and consistency problems, we may need to add one more Sahara service for task/workflow coordination, sahara-taskmanager (s-taskmanager), which should be horizontally scalable too.

In this phase the following problems should be solved:

- support for workflows;

- reliability for tasks/workflows execution;

- reliability for long-running tasks.
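To illustrate what rollback support means here, the sketch below runs tasks in order and, when one fails, reverts the already completed tasks in reverse order. It is a hand-written illustration of the general pattern, not the TaskFlow API; `Task`, `run_flow`, and the task names are hypothetical.

```python
class Task:
    """Hypothetical unit of work with a compensating (revert) action."""

    def __init__(self, name, execute, revert):
        self.name = name
        self.execute = execute
        self.revert = revert


def run_flow(tasks, log):
    """Run tasks in order; on failure, revert completed tasks in reverse."""
    done = []
    for task in tasks:
        try:
            task.execute()
            log.append("done:" + task.name)
            done.append(task)
        except Exception:
            log.append("failed:" + task.name)
            for finished in reversed(done):
                finished.revert()
                log.append("reverted:" + finished.name)
            return False
    return True


def failing_attach():
    raise RuntimeError("simulated failure while attaching a volume")


# Demo: the second task fails, so the first one is rolled back.
resources = set()
log = []
ok = run_flow(
    [
        Task("create_instance",
             lambda: resources.add("vm"),
             lambda: resources.discard("vm")),
        Task("attach_volume", failing_attach, lambda: None),
    ],
    log,
)
```

A reliable implementation would also have to persist the list of completed tasks so that another service instance could continue or revert the flow after a crash; this sketch keeps that state in memory only.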

Main operations execution flows

General flow tips

- DB objects will be used only in one module ('savanna/db/sqlalchemy') that is responsible for DB interoperability;

- all imports of db-related classes should be located only in this module ('savanna/db/sqlalchemy');

- in sahara-api and sahara-engine we'll operate with simple resource objects (dicts in fact);

- TBD.

Create Template

- [s-api] parse incoming request;

- [s-api] perform schema and basic validation;

- [s-api:plugin] perform advanced validation of template;

- [s-api:conductor] store template in db;

- [s-api] return response to user.

Launch Cluster

- [s-api] parse incoming request;

- [s-api] perform schema and basic validation;

- [s-api:plugin] perform advanced validation of cluster creation;

- [s-api:conductor] store cluster object in DB;

- [s-api] return response to user;

- [s-api] call sahara-engine to launch cluster;

- [message queue / local mode];

- [s-engine-1] start preparing infrastructure for cluster;

- [s-engine-1:plugin] update infra requirements in cluster (for example, add management node, update some properties of instances, set initial scripts, etc.);

- [s-engine-1] spawn tasks for creating all infra and wait for their completion;

- [message queue / local mode];

- [s-engine-2 | s-engine-3 | s-engine-X] run a task from the MQ, for example: create an instance and run some commands on it, create and attach a Cinder volume, etc.;

- [s-engine-1] all infra preparations are done, go on;

- [s-engine-1:plugin] configure cluster by creating tasks for uploading files and scripts to the cluster nodes;

- [message queue / local mode];

- [s-engine-2 | s-engine-3 | s-engine-X] run a task from the MQ, for example: upload a file, run a script, etc.;

- [s-engine-1:plugin] spawn tasks for launching needed services on nodes (cluster launch);

- [s-engine-2 | s-engine-3 | s-engine-X] run a task from the MQ, for example: start some service;

- [s-engine-1:conductor] update cluster status.
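The engine side of this flow can be sketched in "local mode", with an in-process `queue.Queue` standing in for the message queue and threads standing in for the other engines. This is only an illustration under those assumptions: the function names, stage names, and worker naming are hypothetical, and a real task would call Nova, Cinder, or SSH instead of appending to a list.

```python
import queue
import threading


def worker(name, tasks, results):
    """Stand-in for s-engine-2..X: take tasks from the queue and run them."""
    while True:
        task = tasks.get()
        if task is None:          # sentinel: shut this worker down
            tasks.task_done()
            return
        action, node = task
        results.append((name, action, node))  # placeholder for the real work
        tasks.task_done()


def launch_cluster(nodes, worker_count=3):
    """Stand-in for s-engine-1: spawn tasks per stage and wait for each stage."""
    tasks = queue.Queue()         # local-mode replacement for the message queue
    results = []                  # list.append is thread-safe in CPython
    threads = [
        threading.Thread(target=worker,
                         args=("s-engine-%d" % (i + 2), tasks, results))
        for i in range(worker_count)
    ]
    for t in threads:
        t.start()

    completed_stages = []
    for stage in ("create_instance", "upload_configs", "start_services"):
        for node in nodes:
            tasks.put((stage, node))
        tasks.join()              # block until every task of this stage is done
        completed_stages.append(stage)

    for _ in threads:             # one sentinel per worker
        tasks.put(None)
    for t in threads:
        t.join()
    return completed_stages, results
```

Calling `launch_cluster(["node-1", "node-2"])` returns the stages in the order they completed plus a record of which worker handled each task. Tasks within one stage run in parallel across workers, while the coordinating engine acts as a barrier between stages.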

Scale Cluster

The same behavior as in the "Launch Cluster" operation.

Additional notes

All-in-one installation

We want to provide a "savanna-all" binary to support all-in-one Sahara execution for small OpenStack clusters and dev/QA needs.

Some notes and FAQ

Why not deploy agents to the Hadoop clusters to provision everything else?

This approach has several drawbacks:

- there will be problems with scaling agents when launching large clusters;

- we may have problems with migrating agents during cluster scaling;

- agents consume instance resources unexpectedly (which could affect Hadoop configurations);

- these agents will need to communicate with all other services (same message queue, same database, etc.), and users will be able to log in to these instances, so it is a security vulnerability;

- we will need to support various Linux distros with different Python and library versions.

Why not implement HA now?

Our main focus for now is to implement EDP and improve Sahara performance by supporting the use of several engines to provision one Hadoop cluster and several Hadoop clusters simultaneously. So we want to split this hard work into several steps and move on step by step.

Why do we need long-running operations?

There are several long-running operations that we need to execute, for example, decommissioning nodes when decreasing cluster size.

How will services interoperate with each other?

The Oslo RPC framework and a message queue (RabbitMQ, Qpid, etc.) will be used for communication between services.

Potential metrics to determine architecture upgrade success

- s-api:

  - number of handled requests per second (across all request groups);

- s-engine:

  - number of Hadoop clusters launching simultaneously per Sahara controller;

  - speed of creating one Hadoop cluster with a specific number of nodes on a specific number of controllers;

  - cluster creation time with a specific number of nodes (excluding instance boot time).