Sahara/EDP


Sergey Lukjanov moved page Savanna/EDP to Sahara/EDP: the Savanna project was renamed due to trademark issues.

||

| (7 intermediate revisions by 3 users not shown) | |||

| Line 3: | Line 3: | ||

Starting the job: | Starting the job: | ||

| − | * Simple REST API and UI | + | :* Simple REST API and UI |

| − | * | + | :* Mockups: https://wiki.openstack.org/wiki/Savanna/UIMockups/JobCreation |

| − | * Job can be entered through UI/API or pulled through VCS | + | :* Job can be entered through UI/API or pulled through VCS |

| − | * Configurable data source | + | :* Configurable data source |

| − | |||

Job execution modes: | Job execution modes: | ||

| − | * Run job on one of the existing cluster | + | :* Run job on one of the existing cluster |

| − | ** Expose information on cluster load | + | :** Expose information on cluster load |

| − | ** Provide hints for optimizing data locality TODO: more details | + | :** Provide hints for optimizing data locality TODO: more details |

| − | * Create new transient cluster for the job | + | :* Create new transient cluster for the job |

Job structure: | Job structure: | ||

| − | * Individual job via jar file, Pig or Hive script | + | :* Individual job via jar file, Pig or Hive script |

| − | * Oozie workflow | + | :* Oozie workflow |

| − | * In future to support EMR job flows import | + | :* In future to support EMR job flows import |

Job execution tracking and monitoring | Job execution tracking and monitoring | ||

| − | * Any existent components that can help to visualize? (Twitter Ambrose) | + | :* Any existent components that can help to visualize? (Twitter Ambrose) |

| − | * Terminate job | + | :* Terminate job |

| − | * Auto-scaling functionality | + | :* Auto-scaling functionality |

=== Main EDP Components === | === Main EDP Components === | ||

| Line 33: | Line 32: | ||

==== Savanna Dispatcher Component ==== | ==== Savanna Dispatcher Component ==== | ||

This component is responsible for provisioning a new cluster, scheduling job on new or existing cluster, resizing cluster and gathering information from clusters about current jobs and utilization. Also, it should provide information to help to make a right decision where to schedule job, create a new cluster or use existing one. For example, current loads on clusters, their proximity to the data location etc. | This component is responsible for provisioning a new cluster, scheduling job on new or existing cluster, resizing cluster and gathering information from clusters about current jobs and utilization. Also, it should provide information to help to make a right decision where to schedule job, create a new cluster or use existing one. For example, current loads on clusters, their proximity to the data location etc. | ||

| − | UI Component | + | |

| − | Integration into OpenStack Dashboard - Horizon. It should provide instruments for job creation, monitoring etc. | + | ==== UI Component ==== |

| − | + | ||

| − | Cluster Level Coordination Component | + | Integration into OpenStack Dashboard - Horizon. It should provide instruments for job creation, monitoring etc. Hue already provides part of this functionality: submit jobs (jar file, Hive, Pig, Impala), view job status and output. |

| + | |||

| + | ==== Cluster Level Coordination Component ==== | ||

Expose information about jobs on a specific cluster. Possible this component should be represent by existing Hadoop projects Hue and Oozie. | Expose information about jobs on a specific cluster. Possible this component should be represent by existing Hadoop projects Hue and Oozie. | ||

| − | + | === User Workflow === | |

| − | - User selects or creates a job to run | + | :- User selects or creates a job to run |

| − | - User chooses data source for appropriate type for this job | + | :- User chooses data source for appropriate type for this job |

| − | - Dispatcher provides hints to user about a better way to scheduler this job (on existing clusters or create a new one) | + | :- Dispatcher provides hints to user about a better way to scheduler this job (on existing clusters or create a new one) |

| − | - User makes a decision based on the hint from dispatcher | + | :- User makes a decision based on the hint from dispatcher |

| − | - Dispatcher (if needed) creates or resizes existing cluster and schedules job to it | + | :- Dispatcher (if needed) creates or resizes existing cluster and schedules job to it |

| − | - Dispatcher periodically pull status of job and shows it on UI | + | :- Dispatcher periodically pull status of job and shows it on UI |

| + | |||

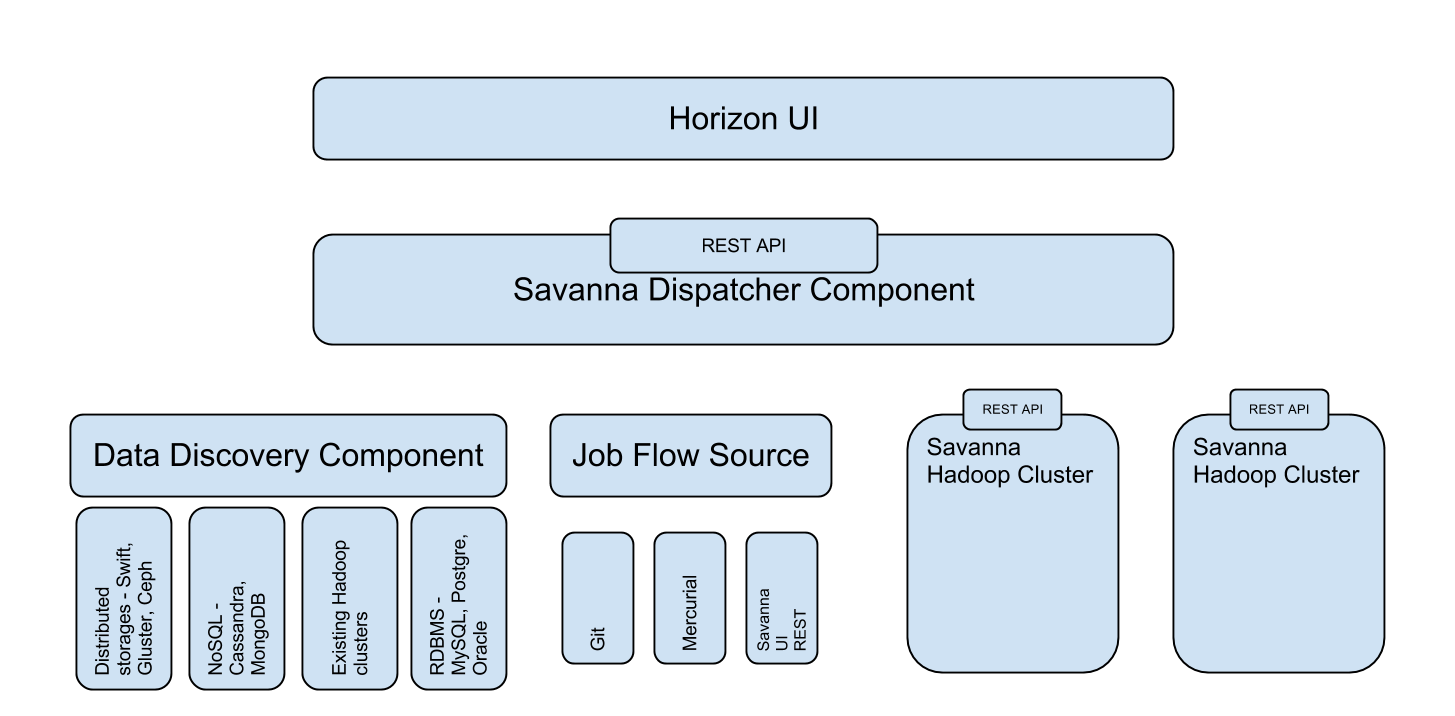

| + | === EDP diagram === | ||

| + | [[File:EDP diagram.png]] | ||

| + | |||

| + | === Sequence diagrams === | ||

| + | This is a place to add [https://wiki.openstack.org/wiki/Savanna/EDP_Sequences sequence diagrams] to aid discussion during development | ||

Latest revision as of 15:41, 7 March 2014


EDP (Elastic Data Processing) Components and workflow

Key Features

Starting the job:

- Simple REST API and UI

- Mockups: https://wiki.openstack.org/wiki/Savanna/UIMockups/JobCreation

- A job can be entered through the UI/API or pulled from a VCS

- Configurable data source

Job execution modes:

- Run a job on one of the existing clusters

  - Expose information on cluster load

  - Provide hints for optimizing data locality TODO: more details

- Create a new transient cluster for the job

Job structure:

- Individual job via jar file, Pig or Hive script

- Oozie workflow

- In the future, support importing EMR job flows

Job execution tracking and monitoring:

- Are there existing components that could help visualize job execution? (e.g. Twitter Ambrose)

- Terminate job

- Auto-scaling functionality

Main EDP Components

Data discovery component

EDP can have several sources of data for processing. Data can be pulled from Swift, GlusterFS, or a NoSQL database such as Cassandra or HBase. To provide unified access to this data, we’ll introduce a component responsible for discovering the data location and providing the right configuration for the Hadoop cluster. It should have a pluggable architecture, so that support for new data sources can be added as plugins.
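A pluggable discovery component could be as small as a registry that maps a data source URL scheme to a function producing Hadoop configuration properties. The sketch below is hypothetical: `DataSource`, `register`, `discover`, the `hbase://<quorum>/<table>` URL format and the Swift service name `sahara` are all assumptions for illustration. The Swift property names follow the Hadoop Swift filesystem (hadoop-openstack) convention, and `hbase.zookeeper.quorum` / `hbase.mapreduce.inputtable` are the standard HBase client and `TableInputFormat` keys; check both against the Hadoop and HBase versions actually deployed.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict
from urllib.parse import urlparse


@dataclass
class DataSource:
    url: str  # e.g. "swift://container.sahara/input" or "hbase://zk1,zk2/table"
    credentials: Dict[str, str] = field(default_factory=dict)


# Hypothetical plugin registry: URL scheme -> function returning Hadoop properties.
_PLUGINS: Dict[str, Callable[[DataSource], Dict[str, str]]] = {}


def register(scheme: str):
    """Decorator that registers a discovery plugin for one URL scheme."""
    def wrapper(func: Callable[[DataSource], Dict[str, str]]):
        _PLUGINS[scheme] = func
        return func
    return wrapper


@register("swift")
def swift_config(source: DataSource) -> Dict[str, str]:
    # Hadoop Swift filesystem properties; the service name "sahara" is assumed.
    prefix = "fs.swift.service.sahara."
    return {
        prefix + "auth.url": source.credentials["auth_url"],
        prefix + "username": source.credentials["username"],
        prefix + "password": source.credentials["password"],
    }


@register("hbase")
def hbase_config(source: DataSource) -> Dict[str, str]:
    # Hypothetical URL format: hbase://<zookeeper-quorum>/<table>
    parsed = urlparse(source.url)
    return {
        "hbase.zookeeper.quorum": parsed.netloc,
        "hbase.mapreduce.inputtable": parsed.path.lstrip("/"),
    }


def discover(source: DataSource) -> Dict[str, str]:
    """Return the Hadoop configuration for a data source via its plugin."""
    scheme = urlparse(source.url).scheme
    if scheme not in _PLUGINS:
        raise ValueError(f"no discovery plugin for scheme {scheme!r}")
    return _PLUGINS[scheme](source)
```

Adding GlusterFS or Cassandra support would then mean registering one more function, without touching `discover` itself.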

Job Source

Users would like to execute different types of jobs: jar files, Pig and Hive scripts, Oozie job flows, etc. The job description and source code can be supplied in different ways. Some users just want to paste a Hive script and run it. Other users want to save the script in the Savanna internal database for later use. We also need to provide the ability to run a job from source code stored in a VCS.
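The three ways of supplying a job (inline, stored, from a VCS) could be modeled as a job record that carries a source descriptor. This is a minimal sketch under assumptions: all class and function names are hypothetical, the in-memory `store` dict stands in for the Savanna internal database, and `fetch_from_vcs` is an injected callable (for example, one wrapping a `git clone`) so the sketch runs without network access. It covers script-based jobs; jar files would need a binary body.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, Optional


class JobType(Enum):
    JAR = "jar"
    PIG = "pig"
    HIVE = "hive"
    OOZIE = "oozie"


class SourceKind(Enum):
    INLINE = "inline"  # script pasted directly by the user
    STORED = "stored"  # script saved earlier in the internal database
    VCS = "vcs"        # source pulled from a version control repository


@dataclass
class JobSource:
    kind: SourceKind
    inline_body: Optional[str] = None
    stored_id: Optional[str] = None
    repo_url: Optional[str] = None
    path: Optional[str] = None


@dataclass
class Job:
    name: str
    job_type: JobType
    source: JobSource


def resolve_body(job: Job,
                 store: Dict[str, str],
                 fetch_from_vcs: Callable[[str, str], str]) -> str:
    """Return the script text for a job, whichever way it was supplied."""
    src = job.source
    if src.kind is SourceKind.INLINE:
        if src.inline_body is None:
            raise ValueError("inline job has no body")
        return src.inline_body
    if src.kind is SourceKind.STORED:
        return store[src.stored_id]  # KeyError if the saved script is missing
    if src.kind is SourceKind.VCS:
        return fetch_from_vcs(src.repo_url, src.path)
    raise ValueError(f"unsupported source kind: {src.kind}")
```

Keeping the source kind separate from the job type means any job type can, in principle, come from any source.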

Savanna Dispatcher Component

This component is responsible for provisioning new clusters, scheduling jobs on new or existing clusters, resizing clusters, and gathering information from clusters about current jobs and utilization. It should also provide information that helps the user decide where to schedule a job: on an existing cluster or on a newly created one. Examples of such information are the current load on each cluster and its proximity to the data.
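One way the dispatcher could turn load and data proximity into a hint is sketched below. This is an assumption, not a decided design: `ClusterInfo`, `Hint`, `suggest`, the 0.8 load threshold, and the rule "prefer data-local clusters, then the least loaded one" are all hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClusterInfo:
    name: str
    load: float       # fraction of capacity in use, 0.0 to 1.0
    data_local: bool  # True if the cluster is close to the job's data


@dataclass
class Hint:
    use_existing: Optional[str]  # cluster name, or None to create a new cluster
    reason: str


def suggest(clusters: List[ClusterInfo], max_load: float = 0.8) -> Hint:
    """Suggest an existing cluster for a job, or a new transient cluster."""
    candidates = [c for c in clusters if c.load < max_load]
    if not candidates:
        return Hint(None, "all clusters are at or above the load threshold; "
                          "create a transient cluster")
    # Sort key: data-local clusters first (False sorts before True),
    # then the lowest current load.
    best = min(candidates, key=lambda c: (not c.data_local, c.load))
    return Hint(best.name,
                f"load {best.load:.0%}, data-local: {best.data_local}")
```

The user still makes the final decision; the hint only ranks the options the dispatcher can see.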

UI Component

Integration into the OpenStack Dashboard (Horizon). It should provide tools for job creation, monitoring, etc. Hue already provides part of this functionality: submitting jobs (jar files, Hive, Pig, Impala) and viewing job status and output.

Cluster Level Coordination Component

Exposes information about jobs on a specific cluster. This component could possibly be represented by the existing Hadoop projects Hue and Oozie.
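If Oozie fills this role, the dispatcher could read job status through the Oozie Web Services API (`GET <oozie>/v1/job/<id>?show=info`, which returns JSON with a `status` field) and terminate a job with `PUT <oozie>/v1/job/<id>?action=kill`. The sketch below assumes a base URL of the form `http://<host>:11000/oozie`; the helper names are hypothetical, and the endpoints should be checked against the Oozie version in use. URL building and parsing are kept separate from the network calls so they can be exercised offline.

```python
import json
from urllib.parse import quote, urlencode
from urllib.request import Request, urlopen


def job_info_url(oozie_base: str, job_id: str) -> str:
    """URL for the Oozie 'job info' call, e.g. http://host:11000/oozie/v1/job/<id>?show=info"""
    return f"{oozie_base.rstrip('/')}/v1/job/{quote(job_id)}?{urlencode({'show': 'info'})}"


def kill_url(oozie_base: str, job_id: str) -> str:
    """URL for the Oozie 'kill job' call."""
    return f"{oozie_base.rstrip('/')}/v1/job/{quote(job_id)}?{urlencode({'action': 'kill'})}"


def parse_status(payload: bytes) -> str:
    """Extract the job status (e.g. RUNNING, SUCCEEDED) from a job-info response."""
    return json.loads(payload)["status"]


def get_job_status(oozie_base: str, job_id: str, timeout: float = 10.0) -> str:
    with urlopen(job_info_url(oozie_base, job_id), timeout=timeout) as resp:
        return parse_status(resp.read())


def kill_job(oozie_base: str, job_id: str, timeout: float = 10.0) -> None:
    req = Request(kill_url(oozie_base, job_id), method="PUT")
    with urlopen(req, timeout=timeout):
        pass  # Oozie returns no body of interest on success
```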

User Workflow

- User selects or creates a job to run

- User chooses a data source of the appropriate type for this job

- Dispatcher provides hints to the user about a better way to schedule this job (on an existing cluster or on a new one)

- User makes a decision based on the dispatcher's hint

- Dispatcher (if needed) creates a new cluster or resizes an existing one, and schedules the job on it

- Dispatcher periodically pulls the job status and shows it in the UI
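The last workflow step, periodically pulling the job status and pushing it to the UI, can be sketched as a polling loop. The function name, the 30-second default interval, and the terminal status names (borrowed from Oozie workflow states) are assumptions; `get_status` and `on_update` are injected callables standing in for the cluster-level query and the UI update.

```python
import time
from typing import Callable, Optional

# Assumed terminal states, borrowed from Oozie workflow job statuses.
TERMINAL = {"SUCCEEDED", "FAILED", "KILLED"}


def poll_until_done(get_status: Callable[[], str],
                    on_update: Callable[[str], None],
                    interval: float = 30.0,
                    sleep: Callable[[float], None] = time.sleep,
                    max_polls: Optional[int] = None) -> str:
    """Poll a job's status, report each value, and return the final status."""
    polls = 0
    while True:
        status = get_status()
        on_update(status)  # e.g. refresh the job page in the UI
        if status in TERMINAL:
            return status
        polls += 1
        if max_polls is not None and polls >= max_polls:
            raise TimeoutError(f"job still {status} after {polls} polls")
        sleep(interval)
```

Injecting `sleep` keeps the loop testable and lets a dispatcher swap in a scheduler-friendly wait instead of blocking a thread.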

EDP diagram

Sequence diagrams

This is a place to add sequence diagrams (https://wiki.openstack.org/wiki/Savanna/EDP_Sequences) to aid discussion during development.