Difference between revisions of "Sahara/ClusterHA"

< Sahara

(→Design) |

(→Summary) |

||

| (8 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| − | == | + | ==Summary== |

It Shall provided system level HA. So even if a component fails during Hadoop provisioning, the system shall be able to complete the Hadoop provisioning without defect or error. | It Shall provided system level HA. So even if a component fails during Hadoop provisioning, the system shall be able to complete the Hadoop provisioning without defect or error. | ||

| Line 6: | Line 6: | ||

==User stories== | ==User stories== | ||

| − | + | * Operator gets the list of failed Cluster through savanna web | |

| − | + | * Operator clicks the resume icon | |

| − | + | * The cluster will be recreated by using this operation. | |

| − | |||

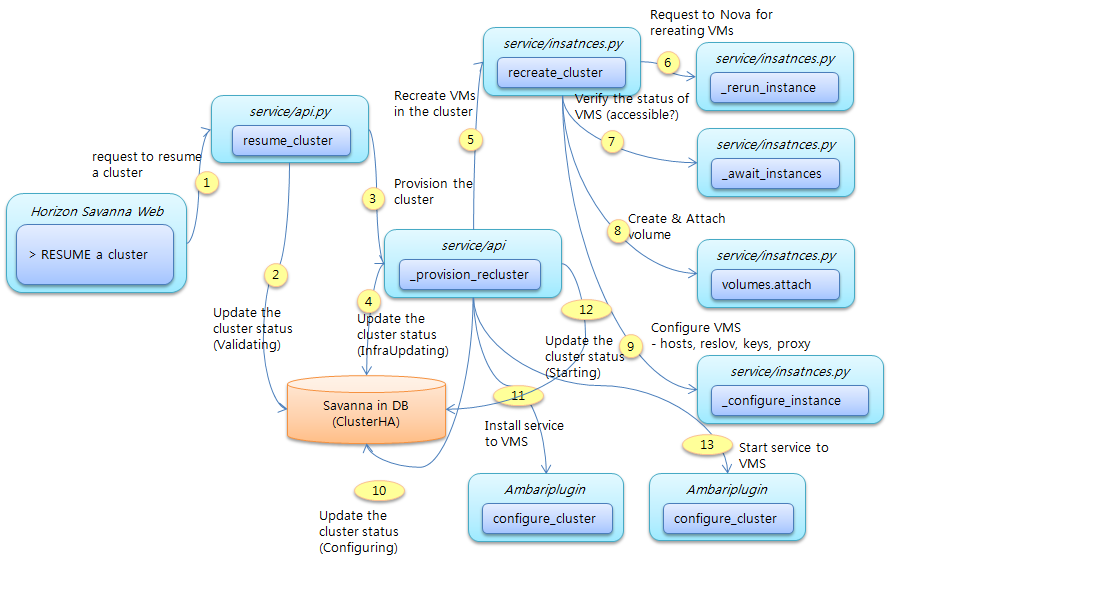

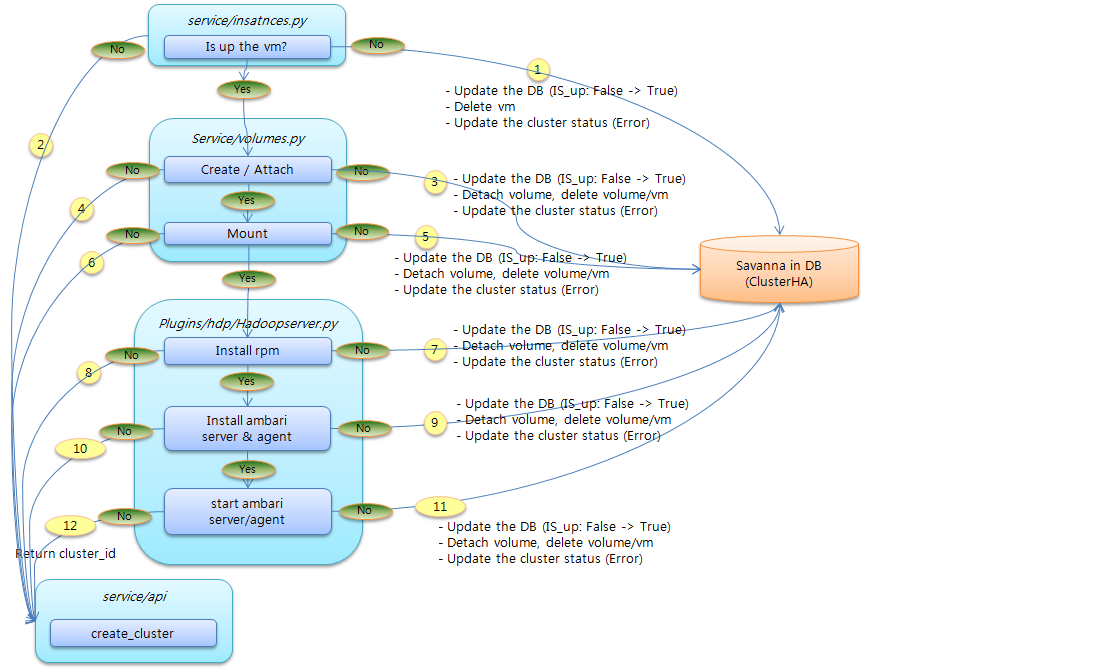

== Design == | == Design == | ||

| + | [[File:ClusterHA1.png|174KBpx|center|ClusterHA1.png]] | ||

| + | [[File:ClusterHA2.png|220KBpx|center|ClusterHA2.png]] | ||

==Implementation== | ==Implementation== | ||

| − | * | + | *Check the cluster status |

| − | * | + | ** Instance (is up? accessible?) |

| − | * | + | ** Volume creation, attachment and mount |

| − | * | + | ** ambari server/agent installment and configuring |

| − | * | + | * If the error is generated, below steps will be done. |

| − | * | + | ** Update the DB which is used by ClusterHA module. (Table name: ClusterHA) |

| − | * | + | ** Delete a Instance |

| − | * | + | ** Detach a volume and deleting a volume |

| + | ** Jump to return the value (cluter_id, status) | ||

| + | * Resume cluster creation | ||

==Code Changes== | ==Code Changes== | ||

| − | + | * service/api.py | |

| + | * service/instances.py | ||

| + | * service/volumes.py | ||

| + | * plugins/hdp/hadooserver.py | ||

| + | * plugins/hdp/ambariplugin.py | ||

| + | * conductor/api.py | ||

| + | * conductor/manager.py | ||

| + | * db/api.py | ||

| + | * db/sqlalchemy/api.py | ||

| + | * db/sqlalchemy/models.py | ||

| + | ...etc... | ||

==Test/Demo Plan== | ==Test/Demo Plan== | ||

Latest revision as of 22:46, 30 October 2014

Contents

Summary

It Shall provided system level HA. So even if a component fails during Hadoop provisioning, the system shall be able to complete the Hadoop provisioning without defect or error.

Release Note

When this is implemented, the system shall be able to complete the Hadoop provisioning without defect or error.

User stories

- Operator gets the list of failed Cluster through savanna web

- Operator clicks the resume icon

- The cluster will be recreated by using this operation.

Design
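The user stories describe a list-then-resume flow: show the operator the clusters that failed, then re-run provisioning for the one they pick. Below is a minimal sketch of that flow. The `Cluster` record, the `"Error"`/`"Active"` status strings and the `recreate` callable are all hypothetical stand-ins. It does not show Sahara's real API or status handling.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    # Hypothetical record holding only what the user stories need.
    cluster_id: str
    name: str
    status: str


def list_failed_clusters(clusters):
    """Return the clusters an operator would see in the 'failed' list."""
    return [c for c in clusters if c.status == "Error"]


def resume_cluster(cluster, recreate):
    """Resume a failed cluster by re-running provisioning.

    `recreate` is a hypothetical callable standing in for the provisioning
    engine; it returns True if the cluster comes up successfully.
    """
    if cluster.status != "Error":
        raise ValueError(f"cluster {cluster.cluster_id} is not in Error state")
    cluster.status = "Active" if recreate(cluster) else "Error"
    return cluster.status


if __name__ == "__main__":
    clusters = [
        Cluster("c1", "hdp-small", "Active"),
        Cluster("c2", "hdp-large", "Error"),
    ]
    failed = list_failed_clusters(clusters)
    print([c.cluster_id for c in failed])  # ['c2']
    print(resume_cluster(failed[0], recreate=lambda c: True))  # Active
```

Resuming is refused for clusters that are not in the error state. This mirrors the story, where the resume icon is offered only for failed clusters.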

Implementation

- Check the cluster status:

  - Instance (is it up? is it accessible?)

  - Volume creation, attachment, and mount

  - Ambari server/agent installation and configuration

- If an error occurs, the following steps are performed:

  - Update the DB used by the ClusterHA module (table name: ClusterHA).

  - Delete the instance.

  - Detach and delete the volume.

  - Return immediately with the value (cluster_id, status).

- Resume cluster creation.
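The check, cleanup and resume steps can be sketched as follows. This is a minimal sketch under several assumptions, none of which come from Sahara's actual code:

- Each node is a hypothetical `Instance` whose boolean flags stand in for the real probes (reachability, volume mount, Ambari health).
- A plain dict stands in for the ClusterHA table.
- `FakeCloud` only records the calls that would go to the compute and volume services.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    # Hypothetical node record: the booleans stand in for real probes
    # (SSH reachability, mount checks, Ambari server/agent health).
    name: str
    accessible: bool = True
    volumes: list = field(default_factory=list)
    volumes_mounted: bool = True
    ambari_configured: bool = True


def check_instance(inst):
    """Return a description of the first failed check, or None if healthy."""
    if not inst.accessible:
        return "instance not accessible"
    if not inst.volumes_mounted:
        return "volume not attached/mounted"
    if not inst.ambari_configured:
        return "ambari server/agent not configured"
    return None


def check_and_clean(cluster_id, instances, ha_table, cloud):
    """Check each instance; on the first error, record it, clean up, return.

    `ha_table` is a dict standing in for the ClusterHA DB table; `cloud` is
    any object with delete_instance/detach_volume/delete_volume methods.
    """
    for inst in instances:
        error = check_instance(inst)
        if error is None:
            continue
        ha_table[cluster_id] = {"instance": inst.name, "error": error}
        # Cleanup steps in the order listed above.
        cloud.delete_instance(inst.name)
        for vol in inst.volumes:
            cloud.detach_volume(inst.name, vol)
            cloud.delete_volume(vol)
        instances.remove(inst)  # safe: we return right after mutating
        return cluster_id, "Error"
    return cluster_id, "Active"


def resume_creation(instances, node_names, create_instance):
    """Recreate any node that cleanup removed (create_instance is hypothetical)."""
    present = {inst.name for inst in instances}
    for name in node_names:
        if name not in present:
            instances.append(create_instance(name))
    return instances


class FakeCloud:
    """Records cleanup calls instead of talking to real cloud services."""

    def __init__(self):
        self.calls = []

    def delete_instance(self, name):
        self.calls.append(("delete_instance", name))

    def detach_volume(self, name, vol):
        self.calls.append(("detach_volume", name, vol))

    def delete_volume(self, vol):
        self.calls.append(("delete_volume", vol))


if __name__ == "__main__":
    cloud, table = FakeCloud(), {}
    nodes = [Instance("master"),
             Instance("worker-1", volumes=["vol-1"], volumes_mounted=False)]
    print(check_and_clean("c42", nodes, table, cloud))  # ('c42', 'Error')
    resume_creation(nodes, ["master", "worker-1"], Instance)
    print([n.name for n in nodes])  # ['master', 'worker-1']
    print(check_and_clean("c42", nodes, table, cloud))  # ('c42', 'Active')
```

Returning on the first failure follows the "return immediately" step. Any other failed nodes are picked up when the resumed cluster is checked again.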

Code Changes

- service/api.py

- service/instances.py

- service/volumes.py

- plugins/hdp/hadoopserver.py

- plugins/hdp/ambariplugin.py

- conductor/api.py

- conductor/manager.py

- db/api.py

- db/sqlalchemy/api.py

- db/sqlalchemy/models.py

...etc...
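The DB changes (db/api.py, db/sqlalchemy/api.py, db/sqlalchemy/models.py) back the ClusterHA table named in the Implementation section. Below is a minimal sketch of such a table. It uses Python's built-in `sqlite3` in place of Sahara's SQLAlchemy layer. The two columns (`cluster_id`, `status`) are an assumption based on the value the module returns. They are not the actual model definition.

```python
import sqlite3


def create_cluster_ha_table(conn):
    # Hypothetical schema: one row per cluster holding its latest status.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ClusterHA ("
        " cluster_id TEXT PRIMARY KEY,"
        " status TEXT NOT NULL)"
    )


def record_status(conn, cluster_id, status):
    # INSERT OR REPLACE keeps exactly one row per cluster_id.
    conn.execute(
        "INSERT OR REPLACE INTO ClusterHA (cluster_id, status) VALUES (?, ?)",
        (cluster_id, status),
    )
    conn.commit()


def failed_cluster_ids(conn):
    # The list an operator would be shown before clicking "resume".
    rows = conn.execute(
        "SELECT cluster_id FROM ClusterHA WHERE status = ? ORDER BY cluster_id",
        ("Error",),
    )
    return [row[0] for row in rows]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_cluster_ha_table(conn)
    record_status(conn, "c1", "Active")
    record_status(conn, "c2", "Error")
    record_status(conn, "c3", "Error")
    record_status(conn, "c3", "Active")  # c3 was resumed successfully
    print(failed_cluster_ids(conn))  # ['c2']
```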

Test/Demo Plan

This need not be added or completed until the specification is nearing beta.

Unresolved issues

TBD