Difference between revisions of "Rally/RoadMap"


Revision as of 14:12, 16 July 2014


Benchmarking

TBD

Context

TBD

Runners

TBD

Scenarios

TBD

Production Read Clean Up

TBD

Non Admin support

TBD

Pre Created Users

TBD

CLI

TBD

Rally-as-a-Service

TBD

Verification

TBD

CI/CD

TBD

Unit & Functional testing

TBD

Data processing

At the moment, the only thing we have is tables with min, max, and avg values. That is good as a first step, but we need more!
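As a rough illustration of what "more" could look like, here is a minimal sketch that extends the min/max/avg table with a median and percentiles. The function name `summarize`, the dictionary keys, and the choice of the 90th and 95th percentiles are assumptions made for this example, not part of Rally.

```python
def summarize(durations):
    """Summarize iteration durations (seconds): min, max, avg, median, percentiles.

    Hypothetical helper for illustration only; not an existing Rally function.
    """
    if not durations:
        raise ValueError("durations must not be empty")
    ordered = sorted(durations)
    n = len(ordered)

    def percentile(p):
        # Linear interpolation between the two closest ranks.
        k = (n - 1) * p
        lower = int(k)
        upper = min(lower + 1, n - 1)
        return ordered[lower] + (ordered[upper] - ordered[lower]) * (k - lower)

    return {
        "min": ordered[0],
        "max": ordered[-1],
        "avg": sum(ordered) / n,
        "median": percentile(0.50),
        "90%ile": percentile(0.90),
        "95%ile": percentile(0.95),
    }
```

Percentiles are used here because a single slow iteration can dominate max while barely moving avg; whether they are the right next step is a judgment call.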

Data aggregation

to be done...

Graphics & Plots

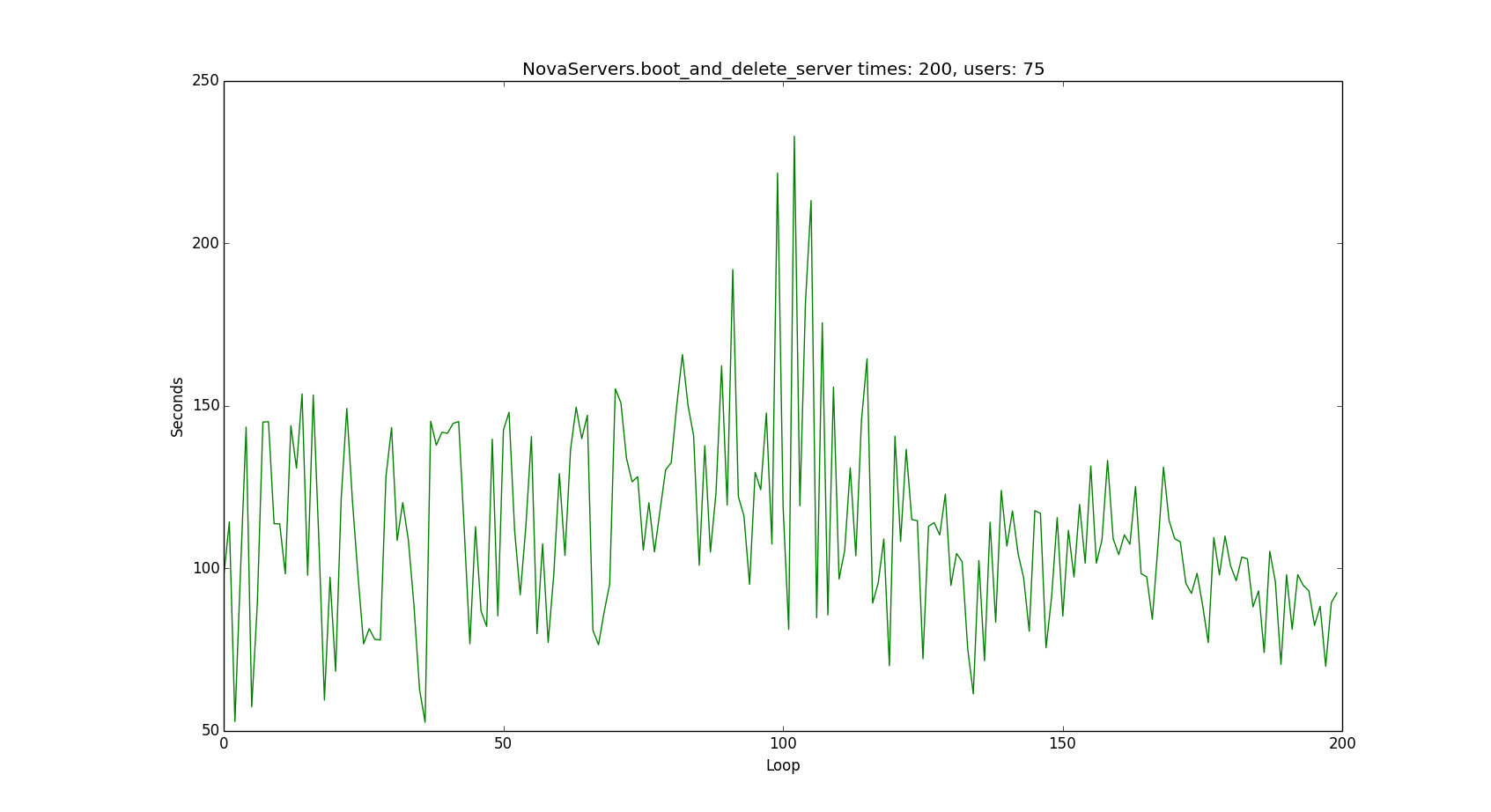

- Simple plot of loop time per iteration

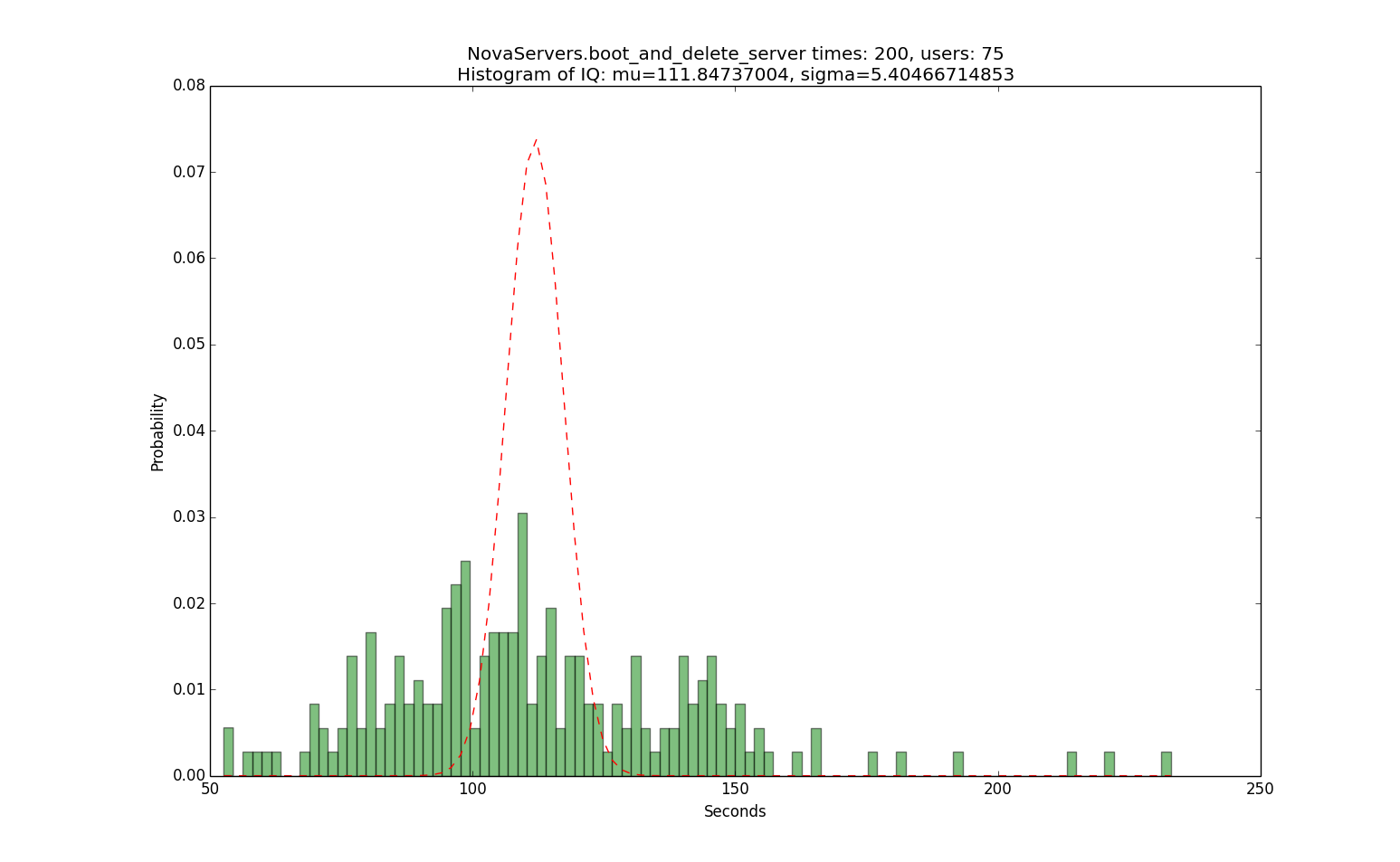

- Histogram of loop times
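The data behind a histogram like the one above could be produced roughly as follows. This is a minimal sketch with equal-width bins; the function name `histogram` and its `bins` parameter are assumptions for illustration, and a real implementation would probably hand the result to a plotting library.

```python
def histogram(durations, bins=10):
    """Count loop times into `bins` equal-width buckets.

    Returns (edges, counts), where edges has bins + 1 entries.
    Hypothetical helper for illustration only.
    """
    if not durations:
        raise ValueError("durations must not be empty")
    if bins < 1:
        raise ValueError("bins must be at least 1")
    low, high = min(durations), max(durations)
    # Fall back to a width of 1.0 when all values are equal.
    width = (high - low) / bins or 1.0
    counts = [0] * bins
    for value in durations:
        # The maximum value is placed in the last bucket.
        index = min(int((value - low) / width), bins - 1)
        counts[index] += 1
    edges = [low + i * width for i in range(bins + 1)]
    return edges, counts
```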

Profiling

Improve & Merge Tomograph into upstream

To collect profiling data we use Tomograph, a small library created for use with OpenStack (it needs to be improved as well). Profiling data is collected by inserting profiling/log points into the OpenStack source code and by adding event listeners/hooks for events from third-party libraries that support them (e.g. sqlalchemy). Currently our patches are applied to the OpenStack code during cloud deployment. For easier maintenance and better profiling results, the profiler could be integrated as an oslo component, in which case its patches could be merged upstream. Profiling itself would be managed by configuration options.
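To make the idea of a profiling point concrete, here is a minimal sketch of one written as a context manager that can be switched off by a flag, mirroring the "managed by configuration options" goal. It does not use the Tomograph API: `profile_point`, `EVENTS`, and the `enabled` flag are hypothetical names, and the in-memory list stands in for a real collector.

```python
import time
from contextlib import contextmanager

# Hypothetical in-memory collector; a real setup would ship events to a
# collector service instead of appending to a list.
EVENTS = []


@contextmanager
def profile_point(name, enabled=True):
    """Record how long the wrapped block takes, if profiling is enabled."""
    if not enabled:
        # Profiling is off: run the block without recording anything.
        yield
        return
    started_at = time.time()
    clock_start = time.monotonic()
    try:
        yield
    finally:
        # Record the event even if the wrapped block raised.
        EVENTS.append({
            "name": name,
            "started_at": started_at,
            "duration": time.monotonic() - clock_start,
        })
```

A call site would wrap the code of interest, for example `with profile_point("db.query"): ...`, and leave the enabled flag to be read from configuration.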

Improve Zipkin or use something else

Currently we use Zipkin as the collector and visualization service, but in the future we plan to replace it with something better suited to the load (possibly Ceilometer?) and to improve the visualization format (we need better charts).

Some early results you can see here:

Make it work out of the box

A few things need to be done:

- Merge Tomograph, which will send logs to Zipkin, into upstream

- Bind Tomograph with Benchmark Engine

- Automate installation of Zipkin from Rally

Server providing

- Improve VirshProvider

  - Implement Linux netinstall on a VM (currently only cloning an existing VM is implemented).

  - Add zfs/lvm2 support for fast cloning.

- Implement LxcProvider

  - This provider is to be used for fast deployment of a large number of instances.

  - Support zfs clones for fast deployment.

- Implement AmazonProvider

  - Get your VMs from Amazon.

Deployers

- Implement MultihostEngine - this engine will deploy a multihost configuration using existing engines.

- Implement Dev Fuel-based engine - deploy OpenStack using Fuel on existing servers or VMs.

- Implement Full Fuel-based engine - deploy OpenStack with Fuel on bare metal nodes.

- Implement TripleO-based engine - deploy OpenStack on bare metal nodes using TripleO.