Difference between revisions of "Rally"

Revision as of 10:05, 26 September 2014

What is Rally?

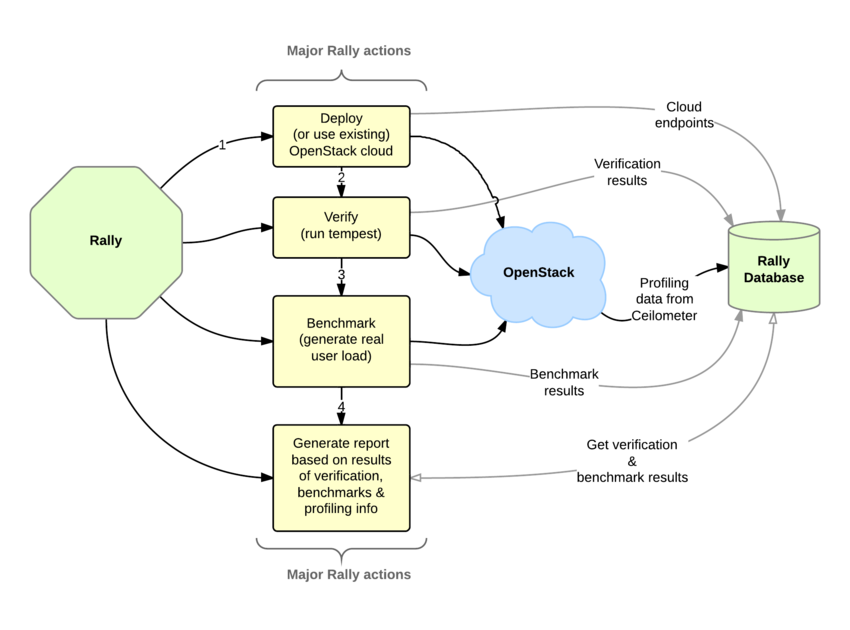

If you are here, you are probably familiar with OpenStack and know that it is a huge ecosystem of cooperating services. When something fails, performs slowly or doesn't scale, it is really hard to answer the questions of what happened, why, and where. Another reason you could be here is that you would like to build an OpenStack CI/CD system that lets you continuously improve the SLA, performance and stability of OpenStack.

The OpenStack QA team mostly works on CI/CD that ensures that new patches don't break a specific single-node installation of OpenStack. On the other hand, it's clear that such CI/CD is only an indication and does not cover all cases (e.g. if a cloud works well on a single-node installation, it doesn't mean that it will continue to do so on a 1k-server installation under high load). Rally aims to fix this and help us answer the question "How does OpenStack work at scale?". To make this possible, we are going to automate and unify all the steps required for benchmarking OpenStack at scale: multi-node OpenStack deployment, verification, benchmarking & profiling.

- Deploy engine - not yet another deployer of OpenStack, but a pluggable mechanism that unifies & simplifies work with different deployers such as DevStack, Fuel and Anvil on the hardware/VMs that you have.

- Verification - (work in progress) uses tempest to verify the functionality of a deployed OpenStack cloud. In the future, Rally will support other OpenStack verifiers.

- Benchmark engine - creates parameterized load on the cloud based on a big repository of benchmarks.

For more information about how it works, take a look at Rally Architecture.

Use Cases

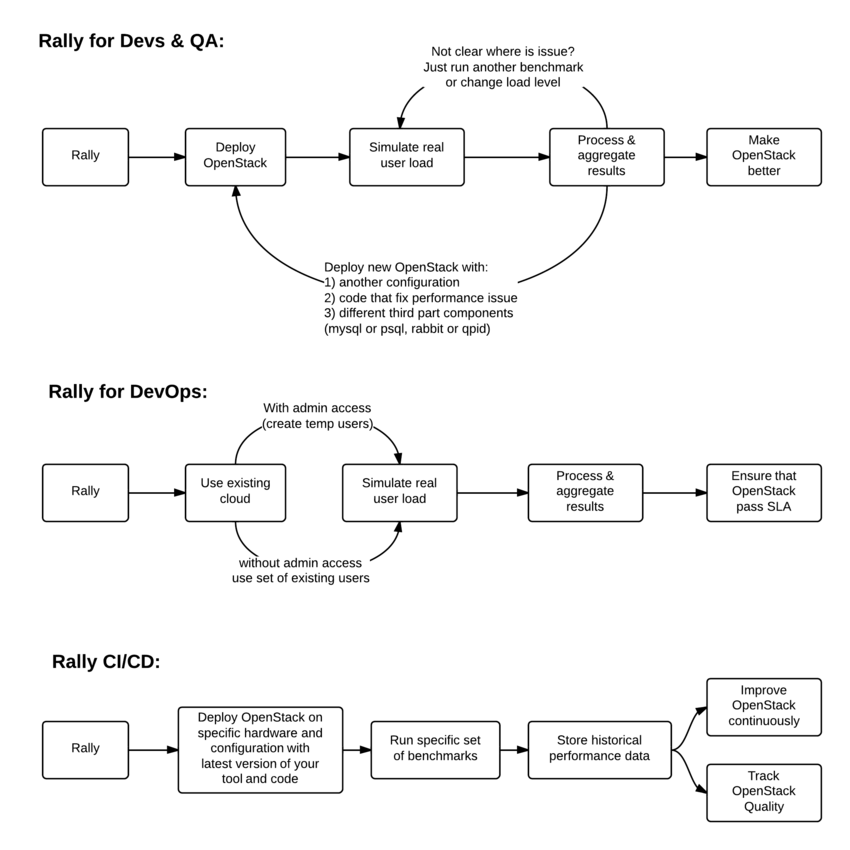

Before diving deep into the Rally architecture, let's take a look at the major high-level use cases where Rally aims to help:

- Automate measuring & profiling focused on how new code changes affect OpenStack performance;

- Use the Rally profiler to detect scaling & performance issues;

- Investigate how different deployments affect OpenStack performance:

  - Find the set of suitable OpenStack deployment architectures;

  - Create deployment specifications for different loads (number of controllers, swift nodes, etc.);

- Automate the search for the hardware best suited for a particular OpenStack cloud;

- Automate the generation of production cloud specifications:

  - Determine terminal loads for basic cloud operations: VM start & stop, Block Device create/destroy & various OpenStack API methods;

  - Check the performance of basic cloud operations under different loads.

Architecture

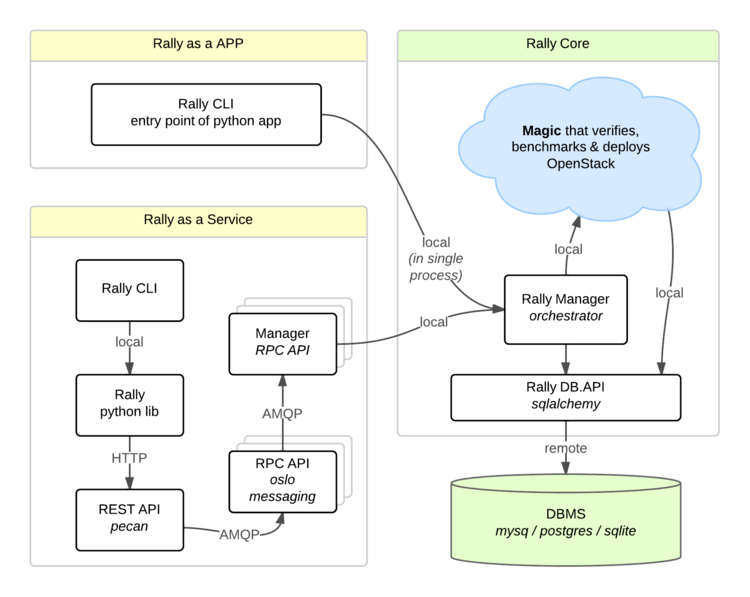

OpenStack projects are usually offered as-a-Service, so Rally provides this approach as well as a CLI-driven approach that does not require a daemon:

- Rally as-a-Service: run Rally as a set of daemons that present a Web UI (work in progress), so that one RaaS can be used by a whole team.

- Rally as-an-App: Rally as just a lightweight CLI app (without any daemons), which makes it simple to develop & much more portable.

How is this possible? Take a look at the architecture diagram.

So what is behind Rally?

Rally Components

Rally consists of 4 main components:

- Server Providers - provide servers (virtual servers) with ssh access, in one L3 network.

- Deploy Engines - deploy an OpenStack cloud on the servers presented by Server Providers.

- Verification - the component that runs tempest (or another specific set of tests) against a deployed cloud, collects the results & presents them in human-readable form.

- Benchmark engine - lets you write parameterized benchmark scenarios & run them against the cloud.
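The way the four components chain together can be pictured with a small sketch. Everything in it is an assumption made for illustration: the class names, method names and return values are hypothetical and are not Rally's actual plugin API.

```python
from abc import ABC, abstractmethod


class ServerProvider(ABC):
    """Hypothetical interface: hands out servers reachable over ssh."""

    @abstractmethod
    def create_servers(self, count):
        """Return a list of server addresses."""


class DeployEngine(ABC):
    """Hypothetical interface: deploys a cloud on the provided servers."""

    @abstractmethod
    def deploy(self, servers):
        """Return a description of the deployed cloud."""


class StaticProvider(ServerProvider):
    """Toy provider that returns pre-existing addresses."""

    def __init__(self, addresses):
        self.addresses = list(addresses)

    def create_servers(self, count):
        if count > len(self.addresses):
            raise ValueError("not enough servers available")
        return self.addresses[:count]


class FakeDeployEngine(DeployEngine):
    """Toy engine that pretends the first server is the controller."""

    def deploy(self, servers):
        return {"controller": servers[0], "nodes": servers[1:]}


def run_pipeline(provider, engine, verify, benchmark, server_count):
    """Chain the four components in the order listed above."""
    servers = provider.create_servers(server_count)
    cloud = engine.deploy(servers)
    if not verify(cloud):
        raise RuntimeError("verification failed; skipping benchmarks")
    return benchmark(cloud)


result = run_pipeline(
    StaticProvider(["10.0.0.1", "10.0.0.2", "10.0.0.3"]),
    FakeDeployEngine(),
    verify=lambda cloud: bool(cloud["controller"]),
    benchmark=lambda cloud: {"nodes_benchmarked": 1 + len(cloud["nodes"])},
    server_count=3,
)
print(result)
```

Keeping the provider and the deploy engine behind small interfaces is what would let a different deployer be swapped in without touching the verification or benchmarking steps.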

But why does Rally need these components?

It becomes really clear if we try to imagine: how would I benchmark a cloud at scale, if ...

TO BE CONTINUED

Rally in action

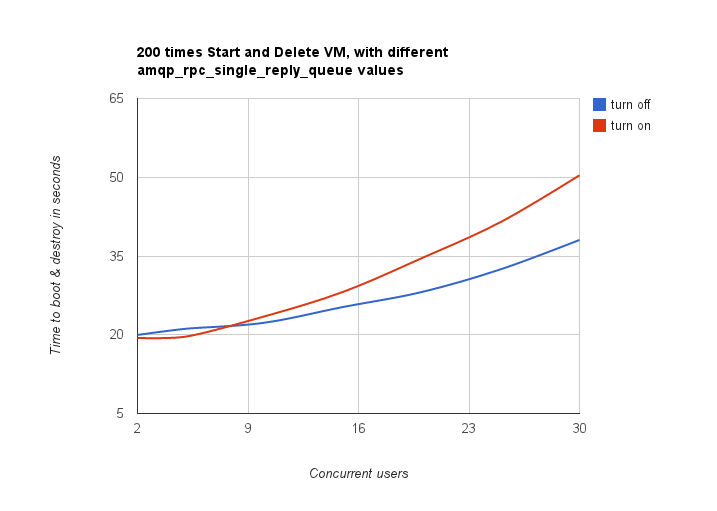

How amqp_rpc_single_reply_queue affects performance

To show Rally's capabilities and potential, we used the NovaServers.boot_and_destroy scenario to see how the amqp_rpc_single_reply_queue option affects VM boot time. Some time ago it was reported that cloud performance can be boosted by turning this option on, so naturally we decided to check this result. For the test, we issued requests to boot and delete VMs for different numbers of concurrent users, ranging from 1 to 30, with and without this option set. For each group of users, a total of 200 requests was issued. The average time per request is shown below:

So apparently this option does affect cloud performance, but not in the way previously thought.
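The shape of this experiment can be sketched as a small run matrix plus an averaging step. This is only an illustration: the dictionary layout is hypothetical rather than Rally's task file format, the concurrency sample points are assumed (the text only gives the range from 1 to 30), and the durations passed to the averaging function are placeholders, not measured results.

```python
from statistics import mean

TOTAL_REQUESTS = 200  # from the text: 200 requests per group of users
CONCURRENCY_LEVELS = [1, 5, 10, 20, 30]  # assumed sample points within 1..30


def build_runs(option_values=(False, True)):
    """Enumerate one run per (option value, concurrency) pair."""
    return [
        {
            "scenario": "NovaServers.boot_and_destroy",
            "amqp_rpc_single_reply_queue": enabled,
            "concurrency": users,
            "times": TOTAL_REQUESTS,
        }
        for enabled in option_values
        for users in CONCURRENCY_LEVELS
    ]


def average_duration(durations):
    """Average time per request for one run, in seconds."""
    if not durations:
        raise ValueError("no durations recorded")
    return mean(durations)


runs = build_runs()
print(len(runs))  # one run per option value and concurrency level
print(average_duration([2.0, 4.0, 6.0]))  # placeholder durations
```

Holding the total number of requests fixed while varying only the concurrency and the option value is what makes the averaged times comparable across runs.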

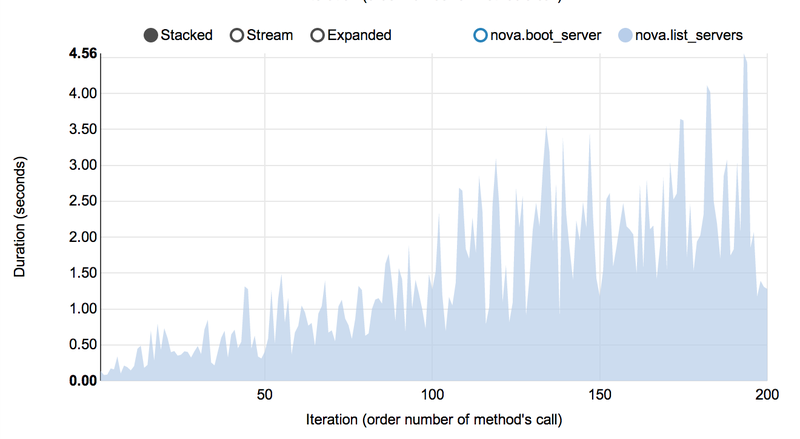

Performance of Nova instance list command

Context: 1 OpenStack user

Scenario: 1) boot a VM as this user; 2) list the user's VMs

Runner: repeat 200 times.

As a result, with every iteration the user has more and more VMs, and the performance of the VM list operation degrades quite fast:
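The reason the list grows can be seen in a toy simulation of this setup. It uses an in-memory stand-in for the cloud (the `FakeCloud` class is hypothetical and makes no OpenStack calls), so it only shows how many VMs each list call has to return, not real timings.

```python
class FakeCloud:
    """In-memory stand-in for a cloud; not a real OpenStack client."""

    def __init__(self):
        self.vms = []

    def boot_vm(self):
        self.vms.append("vm-%d" % len(self.vms))

    def list_vms(self):
        return list(self.vms)


def boot_and_list(cloud):
    """Scenario: boot one VM, then list all VMs of the user."""
    cloud.boot_vm()
    return len(cloud.list_vms())


def run(times):
    """Runner: repeat the scenario with no cleanup between iterations."""
    cloud = FakeCloud()  # context: a single user, hence a single VM pool
    return [boot_and_list(cloud) for _ in range(times)]


listed = run(200)
print(listed[0], listed[-1])  # VMs returned by the first and last list call
```

Because nothing is deleted between iterations, the n-th list call returns n VMs, so any per-VM cost in the list operation accumulates over the run.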

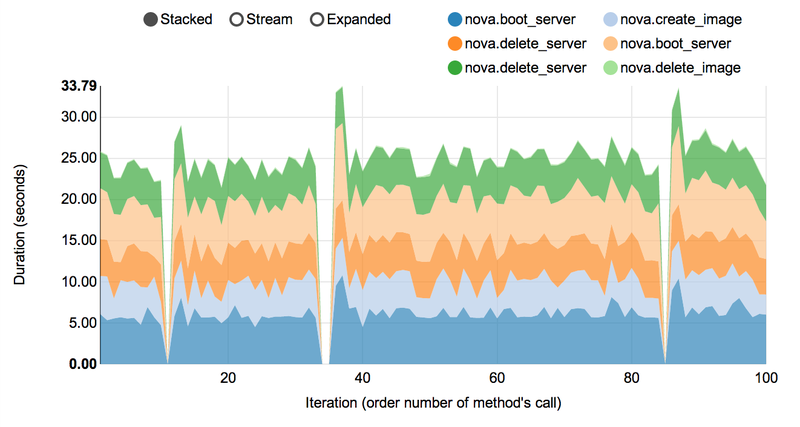

Complex scenarios & detailed information

For example NovaServers.snapshot contains a lot of "atomic" actions:

- boot VM

- snapshot VM

- delete VM

- boot VM from snapshot

- delete VM

- delete snapshot

Fortunately, Rally collects information about the duration of all these operations for every iteration.
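A minimal sketch of how per-action durations could be collected is given here. It is an assumption-laden illustration rather than Rally's implementation: the `AtomicTimer` class and its label-suffix scheme are hypothetical. It records a step even when the step fails and keeps the two "delete VM" steps apart.

```python
import time
from collections import Counter
from contextlib import contextmanager


class AtomicTimer:
    """Records the duration of each named step within one iteration."""

    def __init__(self):
        self.durations = []  # (label, seconds) tuples in call order
        self._seen = Counter()

    @contextmanager
    def action(self, name):
        self._seen[name] += 1
        count = self._seen[name]
        # Suffix repeated names so two runs of "delete VM" stay distinct.
        label = name if count == 1 else "%s (%d)" % (name, count)
        start = time.perf_counter()
        try:
            yield
        finally:
            # Record the time even if the step raised, so failures are kept.
            self.durations.append((label, time.perf_counter() - start))


timer = AtomicTimer()
for step in ["boot VM", "snapshot VM", "delete VM",
             "boot VM from snapshot", "delete VM", "delete snapshot"]:
    with timer.action(step):
        pass  # a real scenario would call the cloud API here

labels = [label for label, _ in timer.durations]
print(labels)
```

One timer per iteration yields one row of durations per iteration, which is the kind of data a per-action graph would be drawn from.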

As a result we are generating beautiful graphs:

How To

There are only a few steps that should be interesting for you:

- Install Rally

- Use Rally

- Add rally performance jobs to your project

- Main concepts of Rally

- Improve Rally

Weekly updates

Each week we summarize on a dedicated weekly updates page what has been accomplished in Rally during the past week and what we plan for the next one. Below is the most recent report (September 26, 2014).

Here is a brief overview of what has happened in Rally recently:

- We have refactored (https://review.openstack.org/111977) the code responsible for atomic actions processing in Rally benchmarks. Recall that each benchmark scenario in Rally consists of a series of atomic actions, and the running time of each atomic action is measured in the same way as that of the whole scenario. After the refactoring, Rally no longer skips atomic actions that failed, and it distinguishes different runs of two atomic actions with the same name (compare a typical results table before, http://paste.openstack.org/show/114111/, and after, http://paste.openstack.org/show/114112/, the refactoring).

- Another direction of refactoring was the SLA code (https://review.openstack.org/118243); it has been modified so that SLA results are now stored in the DB along with other benchmarking data.

- Check out a nice user story (https://github.com/stackforge/rally/blob/master/doc/user_stories/nova/boot_server.rst) about VM boot performance in Nova with Rally. It shows how Rally can be used in practice to catch real bugs and improve OpenStack performance.

- We are working hard on achieving 100% test coverage in Rally: last week, additional unit tests for contexts (https://review.openstack.org/122127) and scenarios (https://review.openstack.org/122729) were merged.

Our current priorities are further refactoring steps, including those in critical parts of Rally (e.g. the temporary user creation, https://review.openstack.org/119297, and cloud cleanup, https://review.openstack.org/116269, code); we also strive to make Rally bug-free and continuously issue bugfix patches.

We encourage you to take a look at the new Rally patches pending review and to help us make Rally better.

Source code for Rally is hosted at GitHub: https://github.com/stackforge/rally

You can track the overall progress in Rally via Stackalytics: http://stackalytics.com/?release=juno&metric=commits&project_type=all&module=rally

Open reviews for Rally: https://review.openstack.org/#/q/status:open+rally,n,z

Stay tuned.

Regards,

The Rally team

Rally in the World

| Date | Authors | Title | Location |
|---|---|---|---|
| 29/May/2014 | | Rally: OpenStack Tempest Testing Made Simple(r) | https://www.mirantis.com/blog |
| 01/May/2014 | | KVM and Docker LXC Benchmarking with OpenStack | http://bodenr.blogspot.ru/ |
| 01/Mar/2014 | | Benchmark as a Service OpenStack-Rally | OpenStack Meetup Bangalore |
| 28/Feb/2014 | | Benchmarking OpenStack With Rally | http://www.thegeekyway.com/ |
| 26/Feb/2014 | | Benchmarking OpenStack at megascale: How we tested Mirantis OpenStack at SoftLayer | http://www.mirantis.com/blog/ |
| 07/Nov/2013 | | Benchmark OpenStack at Scale | OpenStack Summit Hong Kong |

Project Info

Useful links

- Source code

- Rally trello board

- Project space

- Blueprints

- Bugs

- Patches on review

- Meeting logs

- IRC logs, server: irc.freenode.net channel: #openstack-rally

NOTE: To become a member of the Trello board, please send an email to (boris at pavlovic.me) or ping boris-42 on IRC.

How to track project status?

The main directions of work in Rally are documented via blueprints. The highest-level ones are *-base blueprints, while more specific tasks are defined in derived blueprints (for an example of such a dependency tree, see the base blueprint for Benchmarks). Each "base" blueprint description contains a link to a Google doc with detailed information about its contents.

While each blueprint has an assignee, single patchsets that implement it may be owned by different developers. We use a Trello board to track the distribution of tasks among developers. The tasks are structured there both by labels (corresponding to top-level blueprints) and by their completion progress. Please note the Good for start category containing very simple tasks, which can serve as a perfect introduction to Rally for newcomers.

Where can I discuss & propose changes?

- Our IRC channel: #openstack-rally on irc.freenode.net;

- Weekly Rally team meeting: held on Tuesdays at 1700 UTC in IRC, at the #openstack-meeting channel (irc.freenode.net);

- OpenStack mailing list: openstack-dev@lists.openstack.org (see subscription and usage instructions);

- Rally team on Launchpad: Answers/Bugs/Blueprints.