Qonos-scheduling-service

m (→Conceptual Overview) |

Adrian Otto (talk | contribs) (Redirected page to Qonos) |

||

| (15 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

| + | #REDIRECT [[Qonos]] | ||

| + | |||

* '''Launchpad Entry''': QonoS scheduling service | * '''Launchpad Entry''': QonoS scheduling service | ||

* '''Created''': 3 May 2013 | * '''Created''': 3 May 2013 | ||

| Line 7: | Line 9: | ||

== Summary == | == Summary == | ||

| − | This document describes the design and API of QonoS, a distributed high-availability scheduling service that has been implemented for the cloud | + | This document describes the design and API of QonoS, a distributed high-availability scheduling service that has been implemented for the cloud [https://github.com/rackspace-titan/qonos QonoS] was first described in [[Scheduled-images-service]] (29 October 2012). QonoS is currently used as the scheduling component of a scheduled images service that is invoked by a Nova extension, so many of the examples in this document discuss that use case. |

Service responsibilities include: | Service responsibilities include: | ||

| Line 14: | Line 16: | ||

* Handle rescheduling failed jobs | * Handle rescheduling failed jobs | ||

* Maintain persistent schedules | * Maintain persistent schedules | ||

| + | |||

| + | |||

| + | QonoS was designed to work with OpenStack and uses OpenStack common components. | ||

=== Conceptual Overview === | === Conceptual Overview === | ||

The system consists of: | The system consists of: | ||

| − | * | + | * a REST API |

* a database | * a database | ||

* one or more schedulers, and | * one or more schedulers, and | ||

* one or more workers. | * one or more workers. | ||

| + | The API handles communication, both external requests and internal communication. It creates the schedule for a request and stores it in the database. | ||

The '''scheduler''' examines schedules and creates jobs. | The '''scheduler''' examines schedules and creates jobs. | ||

| Line 31: | Line 37: | ||

==== Job Lifecycle ==== | ==== Job Lifecycle ==== | ||

| − | Jobs have the following | + | Jobs may have the following status values: |

| − | + | {| class="wikitable" | |

| − | + | |- | |

| − | + | ! Status !! Definition | |

| − | + | |- | |

| − | + | | <code>queued</code> || The job is ready to be processed by a worker | |

| − | + | |- | |

| + | | <code>processing</code> || The job has been picked up by a worker | ||

| + | |- | ||

| + | | <code>done</code> || The worker processing this job has decided that the job has been successfully completed | ||

| + | |- | ||

| + | | <code>timeout</code> || The worker processing this job has decided the job is taking too long and has stopped processing it. A job in this state can be picked up by another worker. | ||

| + | |- | ||

| + | | <code>error</code> || The worker notes that something went wrong, but the job could be retried | ||

| + | |- | ||

| + | | <code>canceled</code> || The worker decides that the job can't be done and should not be retried | ||

| + | |} | ||

==== Job Timeouts ==== | ==== Job Timeouts ==== | ||

| Line 46: | Line 62: | ||

==== Job Failures ==== | ==== Job Failures ==== | ||

Job failures are reported as job faults and stored in the database. | Job failures are reported as job faults and stored in the database. | ||

| + | |||

| + | ==== Scalability and Reliability ==== | ||

| + | |||

| + | Workers and schedulers are independently scalable and reliable given the infrastructure support running multiple instances. | ||

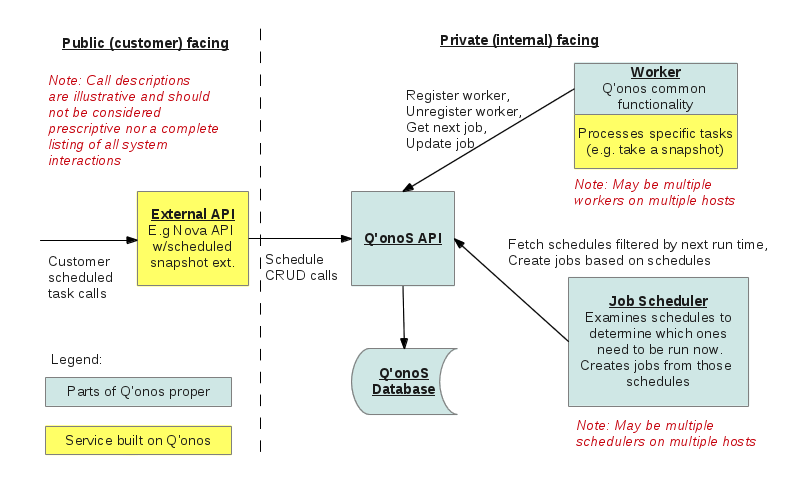

=== Overall System Diagram === | === Overall System Diagram === | ||

| Line 51: | Line 71: | ||

[[File:Qonos Diagram.png]] | [[File:Qonos Diagram.png]] | ||

| − | === | + | == Design == |

| + | === API === | ||

| + | ==== Schedules ==== | ||

| + | ===== Create Schedule ===== | ||

| + | <pre> | ||

| + | POST <version>/schedules | ||

| + | {"schedule": | ||

| + | { | ||

| + | "tenant": "tenant_username", | ||

| + | "action": "snapshot", | ||

| + | "minute": 30, | ||

| + | "hour": 2, | ||

| + | "day": 3, | ||

| + | "day_of_week": 5, | ||

| + | "day_of_month": 23, | ||

| + | "metadata": | ||

| + | { | ||

| + | "instance_id": "some_uuid", | ||

| + | "retention": "3" | ||

| + | } | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | ===== List schedules ===== | ||

| + | <pre> | ||

| + | GET <version>/schedules | ||

| + | { | ||

| + | "schedules": | ||

| + | [ | ||

| + | { | ||

| + | # schedule as above | ||

| + | }, | ||

| + | { | ||

| + | # schedule as above | ||

| + | }, | ||

| + | ... | ||

| + | ] | ||

| + | } | ||

| + | </pre> | ||

| − | + | ====== Query filters ====== | |

| + | * <tt>next_run_after</tt> - only list schedules with next_run value >= this value | ||

| + | * <tt>next_run_before</tt> - only list schedules with next_run value <= this value | ||

| − | === | + | ====== Example ====== |

| + | List schedules which start in the next five minutes | ||

| + | <pre> | ||

| + | GET <version>/schedules?next_run_after={Current_DateTime}&next_run_before={Current_DateTime+5_Minutes} | ||

| + | GET <version>/schedules?next_run_after=2012-05-16T15:27:36Z&next_run_before=2012-05-16T15:32:36Z | ||

| + | </pre> | ||

| − | + | ===== Get a specific schedule ===== | |

| + | <pre> | ||

| + | GET /v1/schedules/{id} | ||

| + | </pre> | ||

| − | + | ===== Update a schedule ===== | |

| + | <pre> | ||

| + | PUT <version>/schedules/{id} | ||

| + | {"schedule": | ||

| + | { | ||

| + | "minute": 45, | ||

| + | "hour": 3 | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| − | + | ===== Delete a schedule ===== | |

| + | <pre> | ||

| + | DELETE <version>/schedules/{id} | ||

| + | </pre> | ||

| − | |||

| − | === | + | ==== Jobs ==== |

| − | + | ===== Create job from schedule ===== | |

| − | + | <pre> | |

| − | + | POST <version>/jobs | |

| + | {"job": {"schedule_id": "some_uuid"}} | ||

| + | </pre> | ||

| + | The action, tenant_id, and metadata gets copied from the schedule to the job. | ||

| + | ===== Get a specific job ===== | ||

<pre> | <pre> | ||

| + | GET <version>/jobs/{id} | ||

{ | { | ||

| − | + | "job":{ | |

| − | + | { | |

| − | + | "id": "{some_uuid}", | |

| − | + | "created_at": "{DateTime}", | |

| − | + | "updated_at": "{DateTime}", | |

| − | + | "schedule_id": "{some_uuid}", | |

| − | + | "worker_id": "{some_uuid}", | |

| − | + | "tenant": "tenant_username", | |

| + | "action": "snapshot", | ||

| + | "status": "queued", | ||

| + | "retry_count": 0, | ||

| + | "hard_timeout": "{DateTime}", | ||

| + | "timeout": "{DateTime}", | ||

| + | |||

| + | "metadata": | ||

| + | { | ||

| + | "key1": "value1", | ||

| + | "key2", "value2" | ||

| + | } | ||

| + | } | ||

} | } | ||

</pre> | </pre> | ||

| − | + | ===== List current jobs ===== | |

| − | + | <pre> | |

| − | + | GET <version>/jobs | |

| − | + | { | |

| − | + | "jobs": | |

| − | + | [ | |

| + | { | ||

| + | # job as above | ||

| + | }, | ||

| + | { | ||

| + | # job as above | ||

| + | }, | ||

| + | ... | ||

| + | ] | ||

| + | } | ||

| + | </pre> | ||

| − | + | ===== Update status of a job ===== | |

| − | + | <pre> | |

| − | + | PUT <version>/jobs/{id}/status | |

| − | + | { | |

| − | + | "status": | |

| − | + | { | |

| − | + | "status": "some_status", | |

| − | + | "timeout": "{datetime of next timeout}" (optional) | |

| − | + | "error_message":"some message" (optional) | |

| − | + | } | |

| − | + | } | |

| − | + | </pre> | |

| − | |||

| − | |||

| − | + | NOTE: The <tt>error_message</tt> field is only looked for if the status is ERROR. In the event of an ERROR status, an entry is created in the job_faults table capturing as much info as possible from the job. If an error_message is provided, it is included in the job fault entry. | |

| − | |||

| + | ===== Delete(finish) a specific job ===== | ||

<pre> | <pre> | ||

| − | + | DELETE <version>/jobs/{id} | |

| − | |||

| − | |||

| − | DELETE / | ||

| − | |||

</pre> | </pre> | ||

| − | |||

| − | |||

| − | ==== | + | ==== Metadata ==== |

| + | ===== Set schedule/job metadata ===== | ||

| + | <pre> | ||

| + | PUT <version>/schedules/{id}/metadata | ||

| + | or | ||

| + | PUT <version>/jobs/{id}/metadata | ||

| + | </pre> | ||

| + | Note: The resulting metadata for a schedule/job will exactly match what is provided. | ||

| + | <pre> | ||

| + | { | ||

| + | "metadata": | ||

| + | { | ||

| + | "each": "someval", | ||

| + | "meta": "someval", | ||

| + | "key": "someval", | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | ===== List all metadata for a schedule/job ===== | ||

<pre> | <pre> | ||

| − | GET / | + | GET <version>/schedules/{id}/metadata |

| − | + | or | |

| − | + | GET <version>/jobs/{id}/metadata | |

| − | GET | + | { |

| − | + | "metadata": | |

| − | + | { | |

| − | + | "instance_id": "some_uuid", | |

| − | + | "retention": "3" | |

| − | + | } | |

| + | } | ||

</pre> | </pre> | ||

| − | + | ==== Workers ==== | |

| − | + | ===== Register worker with API ===== | |

| − | |||

| − | |||

| − | |||

| − | ==== | ||

| − | |||

<pre> | <pre> | ||

| − | + | POST <version>/workers | |

| − | + | {"worker": | |

| − | + | { "host": "a.host.name"} | |

| − | + | } | |

| − | |||

| − | |||

| − | |||

| − | |||

</pre> | </pre> | ||

| + | ===== List workers registered with API ===== | ||

| + | <pre> | ||

| + | GET <version>/workers | ||

| − | + | # Not shown - id, created_at, and updated_at fields for each worker | |

| − | + | { | |

| + | "workers": | ||

| + | [ | ||

| + | { | ||

| + | "host": "a.host.name", | ||

| + | }, | ||

| + | { | ||

| + | "host": "a.host.name2"", | ||

| + | }, | ||

| + | ... | ||

| + | ] | ||

| + | } | ||

| + | </pre> | ||

| − | API - | + | ===== Get a specific worker registered with API ===== |

| + | <pre> | ||

| + | GET <version>/workers/{id} | ||

| + | # Not shown - id, created_at, and updated_at fields for each worker | ||

| + | { | ||

| + | "worker": | ||

| + | { | ||

| + | "host": "a.host.name" | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| − | + | ===== Unregister worker with API ===== | |

| + | <pre> | ||

| + | DELETE <version>/workers/{id} | ||

| + | </pre> | ||

| − | + | ===== Grab next job for worker ===== | |

| + | This can also be interpreted as "Assign a new job to the worker". | ||

| + | Note: this call doesn't map cleanly to normal RESTful practices since it is a POST but it has a return | ||

| + | <pre> | ||

| + | POST <version>/workers/{id}/jobs | ||

| + | </pre> | ||

| + | ====== Request Body ====== | ||

| + | <pre> | ||

| + | {"job":{"action":"snapshot"}} | ||

| + | </pre> | ||

| + | ====== Response Body ====== | ||

| + | If an appropriate job is found: | ||

| + | <pre> | ||

| + | { | ||

| + | "job": | ||

| + | { | ||

| + | # job as returned below | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | If no job is found: | ||

| + | <pre> | ||

| + | { | ||

| + | "job": None | ||

| + | } | ||

| + | </pre> | ||

| − | === | + | == Example Usage == |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | As an example, consider a Nova API extension that allows users to request that daily snapshots automatically be taken of a server. | |

| − | |||

| − | |||

# User makes request to Nova extension | # User makes request to Nova extension | ||

| − | # Nova extension | + | # Nova extension picks a random time for the snapshot of this server to be taken (or it could use some more sophisticated algorithm, key thing is we want the requests to be uniformly distributed) and makes a create schedule request to the QonoS API. |

| − | + | # The QonoS API adds a schedule entry to the database | |

| − | # | + | # Scheduler polls API for schedules needing action |

| − | # | + | # Scheduler creates job entry through API |

| − | # | + | # Worker polls the API for the next available job |

| − | # Worker | + | # Worker executes the job (i.e., requests that a snapshot be taken of the specified server) |

| − | # Worker waits for completion while updating | + | # Worker waits for completion while updating the job (this indicates the Worker has not died) |

| − | # Worker | + | # Worker deletes the job (indicating that the job has been completed) |

| − | # Worker polls | + | # Worker polls the API for the next available job |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

== Code Repository == | == Code Repository == | ||

* https://github.com/rackspace-titan/qonos | * https://github.com/rackspace-titan/qonos | ||

| − | |||

| − | |||

| − | |||

Latest revision as of 23:09, 3 May 2013

Redirect to: Qonos

- Launchpad Entry: QonoS scheduling service

- Created: 3 May 2013

- Contributors: Alex Meade, Eddie Sheffield, Andrew Melton, Iccha Sethi, Nikhil Komawar, Brian Rosmaita


Summary

This document describes the design and API of QonoS, a distributed high-availability scheduling service that has been implemented for the cloud. QonoS (https://github.com/rackspace-titan/qonos) was first described in Scheduled-images-service (29 October 2012). QonoS is currently used as the scheduling component of a scheduled images service that is invoked by a Nova extension, so many of the examples in this document discuss that use case.

Service responsibilities include:

- Create scheduled tasks

- Perform scheduled tasks

- Handle rescheduling failed jobs

- Maintain persistent schedules

QonoS was designed to work with OpenStack and uses OpenStack common components.

Conceptual Overview

The system consists of:

- a REST API

- a database

- one or more schedulers, and

- one or more workers.

The API handles communication, both external requests and internal communication. It creates the schedule for a request and stores it in the database.

The scheduler examines schedules and creates jobs.

A job describes a task that must be performed.

A worker performs a task. It obtains a task by polling the API and picking up the first task it is capable of handling.

Job Lifecycle

Jobs may have the following status values:

| Status | Definition |
|---|---|
| queued | The job is ready to be processed by a worker |
| processing | The job has been picked up by a worker |
| done | The worker processing this job has decided that the job has been successfully completed |
| timeout | The worker processing this job has decided the job is taking too long and has stopped processing it. A job in this state can be picked up by another worker. |
| error | The worker notes that something went wrong, but the job could be retried |
| canceled | The worker decides that the job can't be done and should not be retried |
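
The status values above can be modelled as a small enumeration. This is only an illustrative sketch, not QonoS code: the names `JobStatus`, `AVAILABLE_TO_WORKERS`, and `is_available` are hypothetical, and treating `queued`, `timeout`, and `error` as the statuses a worker may pick up is an assumption read off the definitions in the table.

```python
from enum import Enum


class JobStatus(Enum):
    """Status values listed in the job lifecycle table."""
    QUEUED = "queued"
    PROCESSING = "processing"
    DONE = "done"
    TIMEOUT = "timeout"
    ERROR = "error"
    CANCELED = "canceled"


# Assumption: a queued job is waiting for a worker, a timed-out job can be
# picked up by another worker, and an errored job could be retried.
AVAILABLE_TO_WORKERS = {JobStatus.QUEUED, JobStatus.TIMEOUT, JobStatus.ERROR}


def is_available(status: str) -> bool:
    """Return True if a job with this status string may be handed to a worker."""
    return JobStatus(status) in AVAILABLE_TO_WORKERS
```

An unknown status string raises `ValueError`, which keeps typos from being silently treated as "not available".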

Job Timeouts

There are two kinds of timeouts:

- hard timeout: once reached, the job is no longer available for retries

- soft timeout: renewed by the worker to indicate that it is still performing the task (similar to a heartbeat)
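
One way to picture how the two timeouts interact is the sketch below. It is a hypothetical model, not the QonoS implementation: the function names are invented, and it assumes that a lapsed soft timeout (a missed heartbeat) makes the job eligible for another worker as long as the hard timeout has not been reached.

```python
from datetime import datetime, timedelta, timezone


def may_retry(now, soft_timeout, hard_timeout):
    """Hypothetical check: may another worker pick this job up?

    - Hard timeout reached: the job is no longer available for retries.
    - Soft timeout still in the future: the current worker is presumed alive.
    - Soft timeout lapsed, hard timeout not reached: eligible for retry.
    """
    if now >= hard_timeout:
        return False
    return now >= soft_timeout


def renew_soft_timeout(now, interval):
    """Heartbeat: return a new soft timeout `interval` after `now`."""
    return now + interval
```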

Job Failures

Job failures are reported as job faults and stored in the database.

Scalability and Reliability

Workers and schedulers can be scaled independently of each other. Reliability comes from running multiple instances of each, provided the infrastructure supports doing so.

Overall System Diagram

[File: Qonos Diagram.png]

Design

API

Schedules

Create Schedule

POST <version>/schedules

{"schedule":

{

"tenant": "tenant_username",

"action": "snapshot",

"minute": 30,

"hour": 2,

"day": 3,

"day_of_week": 5,

"day_of_month": 23,

"metadata":

{

"instance_id": "some_uuid",

"retention": "3"

}

}

}
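
As a rough illustration, the request above could be assembled from Python with the standard library. This sketch only builds the request and does not send it. `BASE_URL` (including the `v1` version segment), the `Content-Type` header, and the function name are assumptions made for the example, not details confirmed by this document.

```python
import json
import urllib.request

BASE_URL = "http://qonos.example.com/v1"  # hypothetical endpoint and version


def build_create_schedule_request(schedule):
    """Build (but do not send) a POST <version>/schedules request."""
    body = json.dumps({"schedule": schedule}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}/schedules",
        data=body,
        headers={"Content-Type": "application/json"},  # assumed header
        method="POST",
    )


request = build_create_schedule_request({
    "tenant": "tenant_username",
    "action": "snapshot",
    "minute": 30,
    "hour": 2,
    "metadata": {"instance_id": "some_uuid", "retention": "3"},
})
```

Passing the request to `urllib.request.urlopen` would send it; that step is left out here because it needs a running service.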

List schedules

GET <version>/schedules

{

"schedules":

[

{

# schedule as above

},

{

# schedule as above

},

...

]

}

Query filters

- next_run_after - only list schedules with next_run value >= this value

- next_run_before - only list schedules with next_run value <= this value

Example

List schedules whose next run falls within the next five minutes:

GET <version>/schedules?next_run_after={Current_DateTime}&next_run_before={Current_DateTime+5_Minutes}

GET <version>/schedules?next_run_after=2012-05-16T15:27:36Z&next_run_before=2012-05-16T15:32:36Z
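
The query string in this example can be generated rather than typed by hand. The sketch below is illustrative only: the function name is hypothetical, and it assumes the caller passes a UTC datetime and that the service accepts the timestamp format shown in the example.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import urlencode


def schedules_due_query(now, window=timedelta(minutes=5)):
    """Return the query string for schedules with next_run in [now, now + window]."""
    fmt = "%Y-%m-%dT%H:%M:%SZ"  # timestamp format used in the example
    params = {
        "next_run_after": now.strftime(fmt),
        "next_run_before": (now + window).strftime(fmt),
    }
    # safe=":" keeps the colons readable instead of percent-encoding them
    return urlencode(params, safe=":")


now = datetime(2012, 5, 16, 15, 27, 36, tzinfo=timezone.utc)
print("GET <version>/schedules?" + schedules_due_query(now))
```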

Get a specific schedule

GET <version>/schedules/{id}

Update a schedule

PUT <version>/schedules/{id}

{"schedule":

{

"minute": 45,

"hour": 3

}

}

Delete a schedule

DELETE <version>/schedules/{id}

Jobs

Create job from schedule

POST <version>/jobs

{"job": {"schedule_id": "some_uuid"}}

The action, tenant_id, and metadata are copied from the schedule to the job.

Get a specific job

GET <version>/jobs/{id}

{

"job":

{

"id": "{some_uuid}",

"created_at": "{DateTime}",

"updated_at": "{DateTime}",

"schedule_id": "{some_uuid}",

"worker_id": "{some_uuid}",

"tenant": "tenant_username",

"action": "snapshot",

"status": "queued",

"retry_count": 0,

"hard_timeout": "{DateTime}",

"timeout": "{DateTime}",

"metadata":

{

"key1": "value1",

"key2": "value2"

}

}

}

List current jobs

GET <version>/jobs

{

"jobs":

[

{

# job as above

},

{

# job as above

},

...

]

}

Update status of a job

PUT <version>/jobs/{id}/status

{

"status":

{

"status": "some_status",

"timeout": "{datetime of next timeout}",  # optional

"error_message": "some message"  # optional

}

}

NOTE: The error_message field is only read if the status is ERROR. When a job is given an ERROR status, an entry is created in the job_faults table capturing as much information as possible from the job. If an error_message is provided, it is included in the job fault entry.
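
A client might build the status update body along the following lines. This is a minimal sketch with a hypothetical function name. Leaving out `error_message` for non-error statuses is a choice made here to mirror the note above, not documented client behaviour.

```python
import json


def build_status_update(status, timeout=None, error_message=None):
    """Build the JSON body for PUT <version>/jobs/{id}/status."""
    inner = {"status": status}
    if timeout is not None:
        inner["timeout"] = timeout
    # error_message is only read for an ERROR status, so this sketch
    # omits it for any other status.
    if error_message is not None and status.upper() == "ERROR":
        inner["error_message"] = error_message
    return json.dumps({"status": inner})
```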

Delete (finish) a specific job

DELETE <version>/jobs/{id}

Metadata

Set schedule/job metadata

PUT <version>/schedules/{id}/metadata

or

PUT <version>/jobs/{id}/metadata

Note: The resulting metadata for a schedule/job will exactly match what is provided.

{

"metadata":

{

"each": "someval",

"meta": "someval",

"key": "someval"

}

}
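
The "exactly match" rule means the call replaces metadata rather than merging it. The toy model below illustrates that reading. The function is hypothetical and stands in for the server-side behaviour only as this document describes it.

```python
def put_metadata(existing, provided):
    """Model of the PUT semantics: the result is exactly what was provided."""
    # `existing` is deliberately ignored: nothing from it carries over.
    return dict(provided)


before = {"instance_id": "some_uuid", "retention": "3"}
after = put_metadata(before, {"retention": "7"})
# `after` contains only "retention"; "instance_id" is gone because it was
# not part of the request.
```

Under this reading, a client that wants to change one key needs to send the full set of keys it wants to keep.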

List all metadata for a schedule/job

GET <version>/schedules/{id}/metadata

or

GET <version>/jobs/{id}/metadata

{

"metadata":

{

"instance_id": "some_uuid",

"retention": "3"

}

}

Workers

Register worker with API

POST <version>/workers

{"worker":

{ "host": "a.host.name"}

}

List workers registered with API

GET <version>/workers

# Not shown - id, created_at, and updated_at fields for each worker

{

"workers":

[

{

"host": "a.host.name"

},

{

"host": "a.host.name2"

},

...

]

}

Get a specific worker registered with API

GET <version>/workers/{id}

# Not shown - id, created_at, and updated_at fields for each worker

{

"worker":

{

"host": "a.host.name"

}

}

Unregister worker with API

DELETE <version>/workers/{id}

Grab next job for worker

This can also be interpreted as "Assign a new job to the worker".

Note: this call does not map cleanly to normal RESTful practices, because it is a POST that returns a job in the response body.

POST <version>/workers/{id}/jobs

Request Body

{"job":{"action":"snapshot"}}

Response Body

If an appropriate job is found:

{

"job":

{

# job as shown under "Get a specific job" above

}

}

If no job is found:

{

"job": null

}
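
On the worker side, handling the two possible responses might look like the sketch below. The function names are hypothetical, and the sketch assumes the response body is JSON, so that the "no job found" case arrives as a JSON `null` and becomes `None` in Python.

```python
import json


def build_next_job_request(action):
    """Body for POST <version>/workers/{id}/jobs, naming the action the worker handles."""
    return json.dumps({"job": {"action": action}})


def parse_next_job(response_body):
    """Return the job dict from the response, or None if no job was found."""
    return json.loads(response_body).get("job")
```

A worker loop would typically sleep and poll again when `parse_next_job` returns `None`.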

Example Usage

As an example, consider a Nova API extension that allows users to request that daily snapshots automatically be taken of a server.

1. User makes a request to the Nova extension

2. The Nova extension picks a random time for the snapshot of this server to be taken (it could use a more sophisticated algorithm; the key point is that requests should be uniformly distributed) and makes a create schedule request to the QonoS API

3. The QonoS API adds a schedule entry to the database

4. Scheduler polls the API for schedules needing action

5. Scheduler creates a job entry through the API

6. Worker polls the API for the next available job

7. Worker executes the job (i.e., requests that a snapshot be taken of the specified server)

8. Worker waits for completion while updating the job (this indicates that the worker has not died)

9. Worker deletes the job (indicating that the job has been completed)

10. Worker polls the API for the next available job
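
The flow above can be walked through with a small in-memory simulation. This is not QonoS code: `FakeQonosAPI` and its methods are hypothetical stand-ins for the REST calls and the database, and scheduling times, timeouts, and retries are left out to keep the sketch short.

```python
import uuid


class FakeQonosAPI:
    """In-memory stand-in for the API and database; not the real QonoS code."""

    def __init__(self):
        self.schedules = {}
        self.jobs = {}

    def create_schedule(self, tenant, action, metadata):
        schedule_id = str(uuid.uuid4())
        self.schedules[schedule_id] = {
            "id": schedule_id, "tenant": tenant,
            "action": action, "metadata": dict(metadata),
        }
        return schedule_id

    def create_job(self, schedule_id):
        # The action, tenant, and metadata are copied from the schedule.
        schedule = self.schedules[schedule_id]
        job_id = str(uuid.uuid4())
        self.jobs[job_id] = {
            "id": job_id, "schedule_id": schedule_id, "status": "queued",
            "worker_id": None, "tenant": schedule["tenant"],
            "action": schedule["action"],
            "metadata": dict(schedule["metadata"]),
        }
        return job_id

    def next_job(self, worker_id, action):
        # Hand out the first queued job the worker can handle, if any.
        for job in self.jobs.values():
            if job["status"] == "queued" and job["action"] == action:
                job["status"] = "processing"
                job["worker_id"] = worker_id
                return job
        return None

    def delete_job(self, job_id):
        del self.jobs[job_id]


api = FakeQonosAPI()
# The Nova extension asks the API to create a schedule.
schedule_id = api.create_schedule(
    "tenant_username", "snapshot",
    {"instance_id": "some_uuid", "retention": "3"},
)
# The scheduler finds the schedule and creates a job from it.
job_id = api.create_job(schedule_id)
# A worker polls for work and receives the job.
job = api.next_job(worker_id="worker-1", action="snapshot")
# After performing the task, the worker deletes the job to mark it complete.
api.delete_job(job["id"])
# The next poll finds nothing to do.
idle = api.next_job(worker_id="worker-1", action="snapshot")
```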