Difference between revisions of "PCI passthrough SRIOV support"

m (→Common PCI SRIOV design) |

|||

| (120 intermediate revisions by 7 users not shown) | |||

| Line 1: | Line 1: | ||

| + | '''!!!This is a design discussion document, not for end user reference!!! ''' | ||

| − | === | + | ===Related Resource=== |

| − | PCI devices | + | This design is based on the PCI pass-through IRC meetings and provides common support for PCI SRIOV: |

| + | *https://wiki.openstack.org/wiki/Meetings/Passthrough | ||

| + | This document was used to finalise the design: | ||

| + | *https://docs.google.com/document/d/1vadqmurlnlvZ5bv3BlUbFeXRS_wh-dsgi5plSjimWjU/edit# | ||

| + | Link back to the blueprint: | ||

| + | *https://blueprints.launchpad.net/nova/+spec/pci-extra-info | ||

| + | |||

| + | ===Common PCI SRIOV design === | ||

| + | |||

| + | PCI devices have standard PCI properties such as address (BDF), vendor_id and product_id. Virtual functions also have a property referring to the address of their physical function. Application-specific or installation-specific extra information can be attached to a PCI device, for example the physical network connectivity used by Neutron SRIOV. | ||

| + | |||

| + | This blueprint focuses on the functionality needed to provide common PCI SRIOV support. | ||

| + | |||

| + | * On the compute node, pci_information (the white-list) defines a set of filters. A PCI device that passes a filter becomes available for allocation, and at the same time extra information is attached to it. | ||

| + | * The compute node reports PCI stats to the scheduler. PCI stats contain several pools, each defined by a set of PCI properties. The properties are controlled by the local configuration item pci_flavor_attrs, whose default value is vendor_id, product_id, extra_info. | ||

| + | * A PCI flavor defines the user's view of a PCI device selector: it provides a set of (k,v) pairs that form specs for selecting among the available devices (those that passed pci_information/white-list). | ||

| + | |||

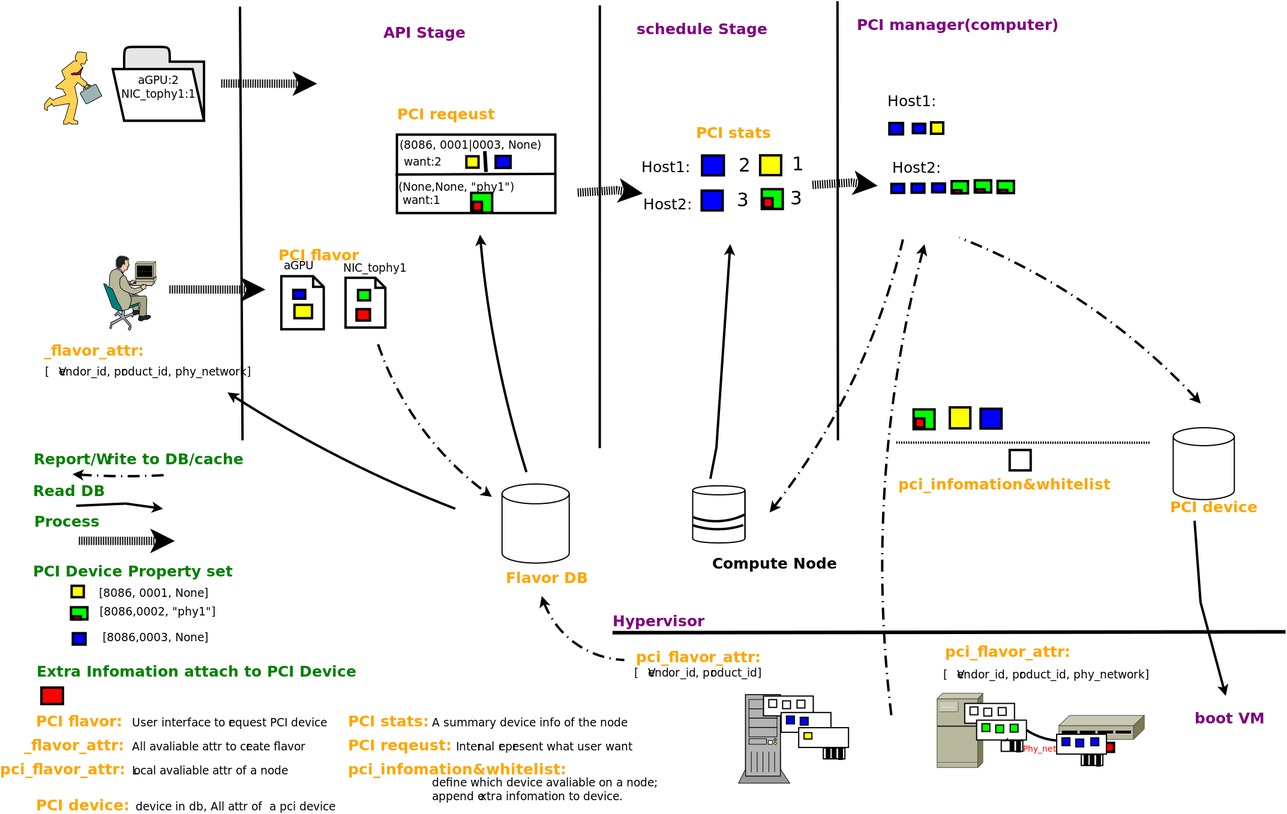

| + | The new PCI design is based on 2 key changes: the PCI flavor and the extra information attached to a PCI device. The following diagram summarises the design: | ||

| − | + | [[Image:PCI-sriov.jpg]] | |

| − | + | ====Design Choice ==== | |

| − | + | ||

| + | PCI flavor: users do not want to know the details of a PCI device, all of its attrs, or the extra information attached to it. | ||

| + | (pci_flavor_attrs: the admin needs to know all PCI attrs in order to define flavors for users/tenants.) | ||

| + | PCI stats: a compute node might have many devices, most of which share the same properties. Reporting a summary to the scheduler reduces DB load and simplifies scheduling. | ||

| + | PCI extra info: a PCI device might be attached to a specific network or a specific resource. This can be recorded on the device and used for scheduling. | ||

| − | |||

| − | |||

| + | ====PCI flavor==== | ||

| + | |||

| + | For OS users, a PCI flavor is a meaningful name such as 'oldGPU', 'FastGPU', '10GNIC' or 'SSD' that describes one kind of PCI device. Users use the PCI flavor to select available PCI devices. Internally, PCI flavors are created through a set of APIs and saved in a DB table, which keeps them available across the whole cloud. | ||

| + | |||

| + | |||

| + | The administrator defines a PCI flavor with a meaningful name and a matching expression that selects among the available devices (those offered by the white list). The matching expression is a set of (k,v) pairs, where k is a PCI property and v is its value. Not every PCI property is available to PCI flavors: only a selected set can be used, and the selected properties should be global to the cloud, like vendor_id/product_id, not the BDF or host of a PCI device. These selected PCI properties are defined via compute-local configuration: | ||

| + | |||

| + | pci_flavor_attrs = vendor_id, product_id, ... | ||

| + | |||

| + | An important behavior is that PCI flavors can overlap: the same device on the same machine may be matched by multiple flavors. | ||

| + | |||

| + | ====Use PCI flavor in instance flavor extra info==== | ||

| + | |||

| + | The user sets PCI flavors in the instance flavor's extra info to specify how many PCI devices, and of which PCI flavor, the VM wants to boot with. | ||

| + | |||

| + | nova flavor-key m1.small set pci_passthrough:pci_flavor= <pci flavor spec list> | ||

| + | |||

| + | pci flavor spec: | ||

| + | num1:flavor1,flavor2 | ||

| + | means: request num1 PCI devices, each taken from flavor1 or flavor2 | ||

| + | |||

| + | pci flavor spec list: | ||

| + | <pci flavor spec1>; <pci flavor spec2> | ||

| + | |||

| + | |||

| + | for example: | ||

| + | |||

| + | nova flavor-key m1.small set pci_passthrough:pci_flavor= 1:IntelGPU,NvGPU;1:intelQuickAssist; | ||

| + | |||

| + | which defines the requirements: | ||

| + | boot with 1 of IntelGPU or NvGPU, and 1 IntelQuickAssist card. | ||
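The spec list syntax above can be illustrated with a small parser. This is only a sketch under stated assumptions: the function name `parse_pci_flavor_spec_list` is hypothetical and not part of nova, and the grammar is inferred from the example (specs separated by `;`, a count before `:`, candidate flavors separated by `,`).

```python
def parse_pci_flavor_spec_list(spec_list):
    """Parse '1:IntelGPU,NvGPU;1:intelQuickAssist;' into [(count, [flavor names])].

    Hypothetical helper: the grammar is inferred from the example in this
    document, not taken from an existing nova function.
    """
    requests = []
    for spec in spec_list.split(";"):
        spec = spec.strip()
        if not spec:
            continue  # tolerate the trailing ';' used in the examples
        count_text, sep, names_text = spec.partition(":")
        if not sep:
            raise ValueError("missing ':' in pci flavor spec %r" % spec)
        count = int(count_text)
        if count < 1:
            raise ValueError("count must be positive in %r" % spec)
        names = [name.strip() for name in names_text.split(",") if name.strip()]
        if not names:
            raise ValueError("no flavor names in %r" % spec)
        requests.append((count, names))
    return requests


parsed = parse_pci_flavor_spec_list("1:IntelGPU,NvGPU;1:intelQuickAssist;")
```

Each tuple reads as "this many devices, each from any of these flavors", which matches the "1 of IntelGPU or NvGPU, and 1 IntelQuickAssist" reading above.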

| + | |||

| + | ====PCI pci_flavor_attrs ==== | ||

| + | |||

| + | This configuration is kept local to every compute node, so that the deployment process can decide locally which PCI properties the node exposes. | ||

| + | |||

| + | pci_flavor_attrs = vendor_id, product_id, ... | ||

| + | |||

| + | The compute node uploads its local PCI properties to the PCI flavor database, which is accessible through the flavor API, so that these PCI properties can be used to define flavors. | ||

| + | |||

| + | pci_flavor_attrs is stored in the flavor DB as a normal flavor whose name is "_flavor_attrs" and whose UUID is "0": | ||

| + | |||

| + | {"vendor_id":"True", "product_id":"True", ... } | ||

| + | |||

| + | This flavor contains all the attrs that can be used to define a PCI flavor. To list its content, use: | ||

| − | + | nova pci-flavor-show 0 | |

| − | + | GET v2/{tenant_id}/os-pci-flavor/<0> | |

| + | data: | ||

| + | os-pci-flavor: { | ||

| + | 'UUID':'0' , | ||

| + | 'description':'Available flavor attrs ', | ||

| + | 'name':'_flavor_attrs', | ||

| + | 'vendor_id': "True", | ||

| + | 'product_id': "True", | ||

| + | .... | ||

| + | } | ||

| + | ====PCI request==== | ||

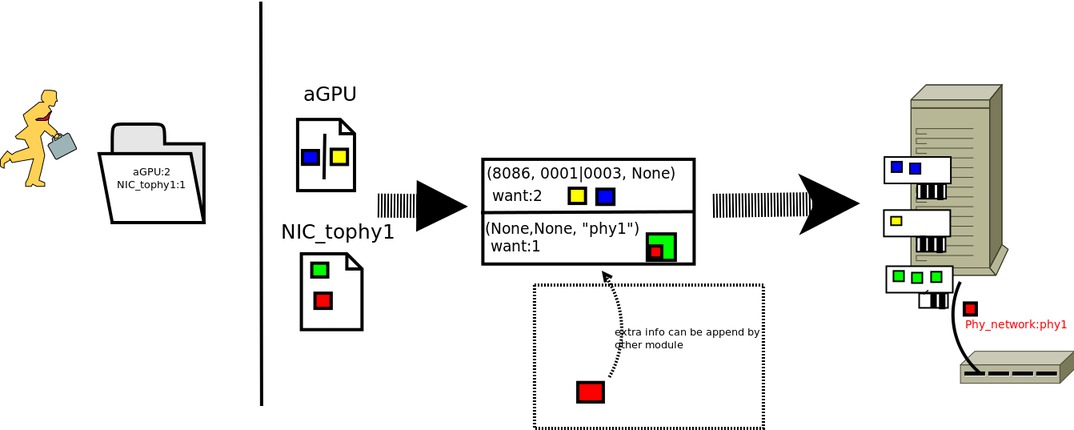

| − | + | A PCI request is an internal structure that represents all the PCI devices a VM wants to have. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | request = {'count': int(count), | |

| + | 'spec': [{"vendor_id":"8086", "phynetwork":"phy1"}, ...], | ||

| + | 'alias_name': "Intel.NIC"} | ||

| + | [[Image:PCI-Request.jpg]] | ||
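As an illustration of how such a request structure could be evaluated, the sketch below assumes that the 'spec' list is a set of alternatives (a device qualifies if it matches every key of at least one spec dict) and that matching is plain equality. The function names and this interpretation are assumptions for illustration, not the design's confirmed semantics.

```python
def device_satisfies_request(device, request):
    """Return True if the device matches every (k, v) of at least one spec dict."""
    return any(
        all(device.get(key) == value for key, value in spec.items())
        for spec in request["spec"]
    )


def select_devices(devices, request):
    """Return request['count'] matching devices, or None if too few match."""
    matching = [d for d in devices if device_satisfies_request(d, request)]
    if len(matching) < request["count"]:
        return None
    return matching[: request["count"]]


# Hypothetical data shaped like the request example above.
request = {
    "count": 1,
    "spec": [{"vendor_id": "8086", "phynetwork": "phy1"}],
    "alias_name": "Intel.NIC",
}
devices = [
    {"address": "0000:01:00.1", "vendor_id": "8086", "phynetwork": "phy2"},
    {"address": "0000:01:00.2", "vendor_id": "8086", "phynetwork": "phy1"},
]
chosen = select_devices(devices, request)
```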

| − | + | ====Extra information of PCI device ==== | |

| − | + | The compute nodes offer available PCI devices for pass-through. Since the list of devices doesn't usually change unless someone tinkers with the hardware, the matching expression used to create this list of offered devices is stored in the compute node config. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | * | + | *The device information (device ID, vendor ID, BDF etc.) is discovered from the device and stored as part of the PCI device, the same as in the current implementation. |

| − | + | *On the compute node, additional arbitrary extra information, in the form of key-value pairs, can be added to the config and is included in the PCI device. | |

| − | |||

| − | * | ||

| − | |||

| − | + | This is achieved by extending the pci white-list to: | |

| − | |||

| − | |||

| − | = | + | *pci_information = [ { pci-regex } ,{pci-extra-attrs } ] |

| + | *pci-regex is a dict of { string-key: string-value } pairs. It can only match device properties, like vendor_id, address, product_id, etc. | ||

| + | *pci-extra-attrs is a dict of { string-key: string-value } pairs. The values can be arbitrary. The total size of the extra attrs may be restricted. All of these extra attrs will be stored in the PCI device table's extra info field, and their names should follow the naming schema e.attrname. | ||
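The filtering and tagging described above can be sketched as follows. This is a minimal illustration, assuming that pci_information is handled as a list of (pci-regex, pci-extra-attrs) pairs, that each regex must match the whole property value, and that the first matching pair wins. `apply_pci_information` is a hypothetical name, not a nova function.

```python
import re


def apply_pci_information(devices, pci_information):
    """Return the offered devices, each tagged with its extra attrs.

    pci_information is assumed to be a list of (pci_regex, extra_attrs)
    pairs; a device is offered if every regex of one pair matches the
    whole value of the corresponding device property.
    """
    offered = []
    for device in devices:
        for pci_regex, extra_attrs in pci_information:
            if all(
                key in device and re.fullmatch(pattern, str(device[key]))
                for key, pattern in pci_regex.items()
            ):
                tagged = dict(device)
                tagged.update(extra_attrs)  # keys follow the e.attrname schema
                offered.append(tagged)
                break  # first matching entry wins (an assumption)
    return offered


devices = [
    {"address": "0000:01:00.0", "vendor_id": "8086", "device_id": "0001"},
    {"address": "0000:02:00.0", "vendor_id": "8086", "device_id": "0002"},
    {"address": "0000:03:00.0", "vendor_id": "10de", "device_id": "0001"},
]
pci_information = [
    ({"vendor_id": "8086", "device_id": "000[1-2]"}, {"e.group": "gpu"}),
]
offered = apply_pci_information(devices, pci_information)
```

Only the two 8086 devices are offered here, and both carry the extra attribute `e.group`.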

| − | + | ====PCI stats grouping devices based on pci_flavor_attrs ==== | |

| − | + | ||

| − | + | The PCI stats pools summarise the devices of a compute node, and the scheduler uses the flavor's matching specs to select an available host for the VM. The stats pools must contain the PCI properties used by PCI flavors. | |

| − | + | * current grouping is based on [vendor_id, product_id, extra_info] | |

| + | * the new design groups by the keys specified by pci_flavor_attrs. | ||

| + | |||

| + | The stats-reporting algorithm should meet this requirement: each device must be in exactly one PCI stats pool, meaning that pools cannot overlap. This simplifies the scheduler design. | ||

| + | |||

| + | On the compute node, pci_flavor_attrs provides the specs by which PCI stats group devices into pools. On the controller node, pci_flavor_attrs collects the attrs that can be used to define a pci_flavor. The definition of pci_flavor_attrs on the controller should contain the pci_flavor_attrs content of every compute node. | ||

| + | |||

| + | *However, it is acceptable for a compute node to report stats pools grouped by a subset of the controller's pci_flavor_attrs. In that scenario the compute node can only provide devices described by those properties.* | ||
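The non-overlapping grouping requirement can be illustrated with a short sketch: keying each device by its tuple of pci_flavor_attrs values places it in exactly one pool. `build_pci_stats` is a hypothetical name used for illustration only.

```python
from collections import Counter


def build_pci_stats(devices, pci_flavor_attrs):
    """Group devices into pools keyed by their pci_flavor_attrs values.

    Every device yields exactly one key tuple, so pools cannot overlap.
    """
    counts = Counter(
        tuple(device.get(attr) for attr in pci_flavor_attrs) for device in devices
    )
    pools = []
    for values, count in counts.items():
        pool = dict(zip(pci_flavor_attrs, values))
        pool["count"] = count
        pools.append(pool)
    return pools


devices = [
    {"vendor_id": "8086", "device_id": "0001", "e.group": "gpu"},
    {"vendor_id": "8086", "device_id": "0002", "e.group": "gpu"},
]
by_ids = build_pci_stats(devices, ["device_id", "vendor_id"])
by_group = build_pci_stats(devices, ["e.group"])
```

Grouping the same two devices by ('device_id', 'vendor_id') gives two pools of one device each, while grouping by ('e.group') gives a single pool of two.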

===Use cases === | ===Use cases === | ||

| − | ==== | + | ==== General PCI pass through ==== |

| − | + | Given compute nodes that contain 1 GPU with vendor:device 8086:0001: | |

| + | |||

| + | *on the compute nodes, configure pci_information | ||

| + | pci_information = [ { 'vendor_id': "8086", 'device_id': "0001" }, {} ] | ||

| + | |||

| + | pci_flavor_attrs ='device_id','vendor_id' | ||

| + | |||

| + | the compute node would report PCI stats grouped by ('device_id', 'vendor_id'). | ||

| + | pci stats will report one pool: | ||

| + | {'device_id':'0001', 'vendor_id':'8086', 'count': 1 } | ||

| + | |||

| + | * create PCI flavor | ||

| + | |||

| + | nova pci-flavor-create name 'bigGPU' description "passthrough Intel's on-die GPU" | ||

| + | nova pci-flavor-update name 'bigGPU' set 'vendor_id'='8086' 'device_id'='0001' | ||

| + | |||

| + | * create flavor and boot with it ( same as current PCI passthrough) | ||

| + | |||

| + | nova flavor-key m1.small set pci_passthrough:pci_flavor= 1:bigGPU; | ||

| + | nova boot mytest --flavor m1.small --image=cirros-0.3.1-x86_64-uec | ||

| + | |||

| + | ==== General PCI pass through with multi PCI flavor candidate ==== | ||

| + | |||

| + | Given compute nodes that contain 2 types of GPU, with vendor:device 8086:0001 or 8086:0002: | ||

| + | |||

| + | *on the compute nodes, configure pci_information | ||

| + | pci_information = [ { 'vendor_id': "8086", 'device_id': "000[1-2]" }, {} ] | ||

| + | |||

| + | * on controller | ||

| + | pci_flavor_attrs = ['device_id', 'vendor_id'] | ||

| − | + | the compute node would report PCI stats grouped by ('device_id', 'vendor_id'). | |

| − | + | pci stats will report 2 pools: | |

| − | + | {'device_id':'0001', 'vendor_id':'8086', 'count': 1 } | |

| + | {'device_id':'0002', 'vendor_id':'8086', 'count': 1 } | ||

| − | + | * create PCI flavor | |

| + | nova pci-flavor-create name 'bigGPU' description "passthrough Intel's on-die GPU" | ||

| + | nova pci-flavor-update name 'bigGPU' set 'vendor_id'='8086' 'device_id'='0001' | ||

| + | nova pci-flavor-create name 'bigGPU2' description "passthrough Intel's on-die GPU" | ||

| + | nova pci-flavor-update name 'bigGPU2' set 'vendor_id'='8086' 'device_id'='0002' | ||

| − | + | * create flavor and boot with it | |

| + | nova flavor-key m1.small set pci_passthrough:pci_flavor= '1:bigGPU,bigGPU2;' | ||

| + | nova boot mytest --flavor m1.small --image=cirros-0.3.1-x86_64-uec | ||

| − | + | ==== General PCI pass through wildcard PCI flavor ==== | |

| − | + | Given compute nodes that contain 2 types of GPU, with vendor:device 8086:0001 or 8086:0002: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| + | *on the compute nodes, configure pci_information | ||

| + | pci_information = [ { 'vendor_id': "8086", 'device_id': "000[1-2]" }, {} ] | ||

| + | pci_flavor_attrs = ['device_id', 'vendor_id'] | ||

| − | + | the compute node would report PCI stats grouped by ('device_id', 'vendor_id'). | |

| + | pci stats will report 2 pools: | ||

| − | + | {'device_id':'0001', 'vendor_id':'8086', 'count': 1 } | |

| − | + | {'device_id':'0002', 'vendor_id':'8086', 'count': 1 } | |

| − | + | ||

| − | + | * create PCI flavor | |

| − | + | ||

| − | + | nova pci-flavor-create name 'bigGPU' description "passthrough Intel's on-die GPU" | |

| + | nova pci-flavor-update name 'bigGPU' set 'vendor_id'='8086' 'device_id'='000[1-2]' | ||

| + | |||

| + | * create flavor and boot with it | ||

| + | |||

| + | nova flavor-key m1.small set pci_passthrough:pci_flavor= '1:bigGPU;' | ||

| + | nova boot mytest --flavor m1.small --image=cirros-0.3.1-x86_64-uec | ||
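How a scheduler might match a wildcard flavor against the reported pools can be sketched as below. The function names are hypothetical, the flavor keys are assumed to be the same property names that appear in the pools, and each flavor value is treated as a regular expression that must match the whole pool value.

```python
import re


def pool_matches_flavor(pool, flavor_spec):
    """True if every (k, v) of the flavor matches the pool; v is a regex."""
    return all(
        key in pool and re.fullmatch(pattern, str(pool[key]))
        for key, pattern in flavor_spec.items()
    )


def available_count(pools, flavor_spec):
    """Sum the device counts of all pools matched by the flavor."""
    return sum(
        pool["count"] for pool in pools if pool_matches_flavor(pool, flavor_spec)
    )


# Pools as reported in the use case above.
pools = [
    {"device_id": "0001", "vendor_id": "8086", "count": 1},
    {"device_id": "0002", "vendor_id": "8086", "count": 1},
]
big_gpu = {"vendor_id": "8086", "device_id": "000[1-2]"}  # wildcard flavor
only_first = {"vendor_id": "8086", "device_id": "0001"}
total_wildcard = available_count(pools, big_gpu)
total_first = available_count(pools, only_first)
```

The wildcard flavor covers both pools, so a host with these pools can satisfy a request for up to two such devices. The two flavors also overlap on device 0001, which the design explicitly permits for flavors even though pools themselves never overlap.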

| − | + | ==== PCI pass through support grouping tag ==== | |

| − | |||

| − | + | Given compute nodes that contain 2 types of GPU, with vendor:device 8086:0001 or 8086:0002: | |

| − | |||

| − | |||

| − | * | + | *on the compute nodes, configure pci_information |

| − | + | pci_information = [ { 'vendor_id': "8086", 'device_id': "000[1-2]" }, { 'e.group':'gpu' } ] | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | = | + | pci_flavor_attrs = ['e.group'] |

| − | + | the compute node would report PCI stats group by ('e.group'). | |

| − | + | pci stats will report 1 pool: | |

| + | {'e.group':'gpu', 'count': 2 } | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * create PCI flavor | |

| − | + | ||

| − | + | nova pci-flavor-create name 'bigGPU' description "passthrough Intel's on-die GPU" | |

| − | + | nova pci-flavor-update name 'bigGPU' set 'e.group'='gpu' | |

| − | |||

| − | |||

| − | |||

| + | * create flavor and boot with it | ||

| + | nova flavor-key m1.small set pci_passthrough:pci_flavor= '1:bigGPU;' | ||

| + | nova boot mytest --flavor m1.small --image=cirros-0.3.1-x86_64-uec | ||

| − | + | ==== PCI SRIOV with tagged flavor ==== | |

| − | + | Given compute nodes that contain 5 PCI NICs with vendor:device 8086:0022, connected to physical network "X": | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | *on the compute nodes, config the pci_information | |

| − | |||

| − | + | pci_information = [ { 'vendor_id': "8086", 'device_id': "0022" }, { 'e.physical_network': 'X' } ] | |

| − | |||

| − | |||

| − | |||

| − | + | pci_flavor_attrs = 'e.physical_network' | |

| − | |||

| − | + | the compute node would report PCI stats grouped by ('e.physical_network'). | |

| − | + | pci stats will report 1 pool: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | {'e.physical_network':'X', 'count': 5 } | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * create PCI flavor | |

| + | nova pci-flavor-create name 'phyX_NIC' description 'passthrough NIC connect to physical network X' | ||

| + | nova pci-flavor-update name 'phyX_NIC' set 'e.physical_network'='X' | ||

| − | |||

| − | + | * create flavor and boot with it | |

| + | nova boot mytest --flavor m1.tiny --image=cirros-0.3.1-x86_64-uec --nic net-id=network_X pci_flavor= '1:phyX_NIC;' | ||

| − | + | ====encryption card use case ==== | |

| − | |||

| − | + | There are 3 encryption cards [V1-3 here stand for vendor_id numbers]: | |

| − | |||

| − | + | card 1 (vendor_id is V1, device_id =0xa) | |

| − | + | card 2 (vendor_id is V1, device_id=0xb) | |

| + | card 3 (vendor_id is V2, device_id=0xb) | ||

| + | Suppose there are two images. One image supports only card 1 and the other supports cards 1 and 3 (or any other combination of the 3 card types). | ||

| − | + | *on the compute nodes, config the pci_information | |

| − | + | pci_information = { { 'device_id': "0xa", 'vendor_id': "v1" }, { 'e.QAclass':'1' } } | |

| − | + | pci_information = { { 'device_id': "0xb", 'vendor_id': "v1" }, { 'e.QAclass':'2' } } | |

| − | + | pci_information = { { 'device_id': "0xb", 'vendor_id': "v2" }, { 'e.QAclass':'3' }} | |

| + | pci_flavor_attrs = ['e.QAclass'] | ||

| − | + | the compute node would report PCI stats group by (['e.QAclass']). | |

| − | + | pci stats will report 3 pool: | |

| − | |||

| − | |||

| − | |||

| − | + | { 'e.QAclass':'1' , 'count': 1 } | |

| − | + | { 'e.QAclass':'2' , 'count': 1 } | |

| + | { 'e.QAclass':'3' , 'count': 1 } | ||

| − | + | * create PCI flavor | |

| − | |||

| − | + | nova pci-flavor-create name 'QA1' description 'QuickAssist card version 1' | |

| − | + | nova pci-flavor-update name 'QA1' set 'e.QAclass'='1' | |

| + | nova pci-flavor-create name 'QA13' description 'QuickAssist card version 1 and version 3' | ||

| + | nova pci-flavor-update name 'QA13' set 'e.QAclass'='(1|3)' | ||

| − | + | * create flavor and boot with it | |

| − | * | + | nova boot mytest --flavor m1.tiny --image=QA1_image --nic net-id=network_X pci_flavor= '1:QA1;' |

| − | + | nova boot mytest --flavor m1.tiny --image=QA13_image --nic net-id=network_X pci_flavor= '3:QA13;' | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | === | + | ===Common PCI SRIOV Configuration detail === |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | ====Compute host==== | |

| + | pci_information = [ {pci-regex},{pci-extra-attrs} ] | ||

| + | pci_flavor_attrs=attr,attr,attr | ||

| − | = | + | For instance, when using device and vendor ID this would read: |

| − | + | pci_flavor_attrs=device_id,vendor_id | |

| + | When the back end adds an arbitrary ‘group’ attribute to all PCI devices: | ||

| + | pci_flavor_attrs=e.group | ||

| + | When you wish to find an appropriate device and perhaps also filter by the connection tagged on that device, which you specify with an extra-info attribute in the compute node config: | ||

| + | pci_flavor_attrs=device_id,vendor_id,e.connection | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | === | + | ====flavor API==== |

| − | |||

| − | |||

| − | |||

| − | == | ||

| − | * | + | * overall |

| − | + | nova pci-flavor-list | |

| − | + | nova pci-flavor-show name|UUID <name|UUID> | |

| − | + | nova pci-flavor-create name|UUID <name|UUID> description <desc> | |

| − | + | nova pci-flavor-update name|UUID <name|UUID> set 'description'='xxxx' 'e.group'= 'A' | |

| + | nova pci-flavor-delete name|UUID <name|UUID> | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | * list | + | * list available pci flavor (white list) |

| − | + | nova pci-flavor-list | |

| − | + | GET v2/{tenant_id}/os-pci-flavors | |

data: | data: | ||

| − | + | os-pci-flavors{ | |

| + | [ | ||

| + | { | ||

| + | 'UUID':'xxxx-xx-xx' , | ||

| + | 'description':'xxxx', | ||

| + | 'vendor_id':'8086', | ||

| + | .... | ||

| + | 'name':'xxx', | ||

| + | } , | ||

| + | ] | ||

| + | } | ||

| − | * get detailed | + | * get detailed information about one pci-flavor: |

| − | + | nova pci-flavor-show <UUID|name> | |

| − | + | GET v2/{tenant_id}/os-pci-flavor/<UUID|name> | |

| − | + | data: | |

| − | + | os-pci-flavor: { | |

| + | 'UUID':'xxxx-xx-xx' , | ||

| + | 'description':'xxxx', | ||

| + | .... | ||

| + | 'name':'xxx', | ||

| + | } | ||

* create pci flavor | * create pci flavor | ||

nova pci-flavor-create name 'GetMePowerfulldevice' description "xxxxx" | nova pci-flavor-create name 'GetMePowerfulldevice' description "xxxxx" | ||

| + | API: | ||

POST v2/{tenant_id}/os-pci-flavors | POST v2/{tenant_id}/os-pci-flavors | ||

data: | data: | ||

| − | + | pci-flavor: { | |

| + | 'name':'GetMePowerfulldevice', | ||

| + | 'description': "xxxxx" | ||

| + | } | ||

| + | action: create database entry for this flavor. | ||

| + | |||

*update the pci flavor | *update the pci flavor | ||

| − | + | nova pci-flavor-update UUID set 'description'='xxxx' 'e.group'= 'A' | |

| − | + | PUT v2/{tenant_id}/os-pci-flavors/<UUID> | |

| − | + | with data : | |

| − | { 'description':'xxxx', | + | { 'action': "update", |

| + | 'pci-flavor': | ||

| + | { | ||

| + | 'description':'xxxx', | ||

| + | 'vendor': '8086', | ||

| + | 'e.group': 'A', | ||

| + | .... | ||

| + | } | ||

| + | } | ||

| + | action: set this as the new definition of the pci flavor. | ||

* delete a pci flavor | * delete a pci flavor | ||

| Line 279: | Line 367: | ||

DELETE v2/{tenant_id}/os-pci-flavor/<UUID> | DELETE v2/{tenant_id}/os-pci-flavor/<UUID> | ||

| + | === Current PCI implementation gaps === | ||

| + | |||

| + | Concepts introduced here: | ||

| + | spec: a filter defined by (k,v) pairs whose keys are PCI object fields, i.e. PCI device properties like vendor_id, address, pci-type etc. | ||

| + | extra_spec: a filter defined by (k,v) pairs whose keys are not PCI object fields. | ||

| + | |||

| + | |||

| + | ====pci utils support extra property ==== | ||

| + | * pci utils (k,v) matching supports reduced regular expressions on the address | ||

| + | * utils provides a global extract interface to extract the base properties and extra properties of a PCI device. | ||

| + | * extra information should also use the schema 'e.name' | ||

| + | |||

| + | ====PCI information(extended the white-list) support extra tag==== | ||

| + | * PCI information supports reduced regular expression comparison to match PCI devices | ||

| + | * PCI information supports storing any other (k,v) pairs in the PCI device's extra info | ||

| + | * the k and v of any extra tag are strings. | ||

| + | |||

| + | ====pci_flavor_attrs==== | ||

| + | * implement the attrs parser and upload the result to the flavor database | ||

| + | |||

| + | ====support pci-flavor ==== | ||

| + | * pci-flavor is stored in the DB | ||

| + | * pci-flavor is configured via the API | ||

| + | * the PCI manager uses the extract method to extract the specs and extra_specs, and matches them against the PCI object and object.extra_info. | ||

| + | |||

| + | ====PCI scheduler: PCI filter ==== | ||

| + | When scheduling, the matcher should apply the regular expressions stored in the named flavor, which is read from the DB. | ||

| + | |||

| + | |||

| + | ==== convert pci flavor from SRIOV ==== | ||

| + | At the API stage, the network parser should convert the PCI flavor in the --nic option to a PCI request and save it into the instance metadata. | ||

| + | |||

| + | 1. translate pci flavor spec to request | ||

| + | *translate_pci_flavor_to_requests(flavor_spec) | ||

| + | * input flavor_spec is a list of PCI flavors and requested numbers: "flavor_name:number, flavor_name2:number2,...". If the number is 1, it can be omitted. | ||

| + | * output is a pci_request, an internal data structure | ||

| + | |||

| + | 2. save request to instance meta data: | ||

| + | *update_pci_request_to_metadata(metadata, new_requests, prefix='') | ||

| + | |||

| + | ==== find specific device of a instance based on request ==== | ||

| + | |||

| + | To boot a VM with PCI SRIOV devices, more configuration may be needed on the PCI device than for a plain PCI host device. To achieve this, common SRIOV needs an interface to query the devices allocated to a specific usage, such as the SRIOV network. | ||

| − | + | A 3-step facility achieves this: | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | * Mark each PCI request with a UUID, so that the PCI request is distinguishable. | |

| − | + | * Remember the devices allocated to this request. | |

| − | + | * Provide an interface function to get these devices from an instance. | |
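The three steps can be sketched in a few lines. Everything here is hypothetical: the `request_id` key, the `InstancePciAllocations` class and its methods are illustrative names, not part of the design or of nova.

```python
import uuid


def tag_request(request):
    """Step 1: return a copy of the request marked with a fresh UUID."""
    tagged = dict(request)
    tagged["request_id"] = str(uuid.uuid4())
    return tagged


class InstancePciAllocations:
    """Per-instance record of which devices were allocated to which request."""

    def __init__(self):
        self._by_request = {}

    def record(self, request_id, device_addresses):
        """Step 2: remember the devices allocated to this request."""
        self._by_request.setdefault(request_id, []).extend(device_addresses)

    def devices_for_request(self, request_id):
        """Step 3: return the devices allocated to the given request."""
        return list(self._by_request.get(request_id, []))


nic_request = tag_request({"count": 1, "spec": [{"e.physical_network": "X"}]})
allocations = InstancePciAllocations()
allocations.record(nic_request["request_id"], ["0000:05:10.1"])
nic_devices = allocations.devices_for_request(nic_request["request_id"])
```

A caller such as the SRIOV network code could then look up its own devices by the request UUID it was given, without inspecting every device attached to the instance.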

| − | ==== | + | ====DB for PCI flavor ==== |

| − | + | Each PCI flavor is a set of (k,v) pairs, which are stored in the DB. Both k and v are strings, and the value can be a simple regular expression supporting wildcards and address range operators. | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | Table: pci_flavor | |

| − | |||

| − | |||

| − | |||

| − | + | { | |

| + | UUID: which pci-flavor the k,v belong to | ||

| + | name: the pci flavor's name, we need this field to index the DB with flavor's name | ||

| + | key | ||

| + | value (might be a simple string value or a reduced regular expression) | ||

| + | } | ||

| − | + | DB interface: | |

| − | + | get_pci_flavor_by_name | |

| − | + | get_pci_flavor_by_UUID | |

| + | get_pci_flavor_all | ||

| + | update_pci_flavor_by_name | ||

| + | update_pci_flavor_by_UUID | ||

| + | delete_pci_flavor_by_name | ||

| + | delete_pci_flavor_by_UUID | ||
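To illustrate the one-row-per-(k,v) table layout, the sketch below rebuilds flavor definitions from rows shaped like the pci_flavor table. The row dicts and the function name `flavors_from_rows` are assumptions for illustration; the real DB layer would use its own model objects.

```python
def flavors_from_rows(rows):
    """Assemble {UUID: flavor} from rows holding one (key, value) pair each."""
    flavors = {}
    for row in rows:
        flavor = flavors.setdefault(
            row["UUID"], {"UUID": row["UUID"], "name": row["name"], "spec": {}}
        )
        flavor["spec"][row["key"]] = row["value"]
    return flavors


# Hypothetical rows; the UUID values are placeholders.
rows = [
    {"UUID": "u-1", "name": "QA13", "key": "e.QAclass", "value": "(1|3)"},
    {"UUID": "u-2", "name": "bigGPU", "key": "vendor_id", "value": "8086"},
    {"UUID": "u-2", "name": "bigGPU", "key": "device_id", "value": "000[1-2]"},
]
flavors = flavors_from_rows(rows)
```

A lookup such as get_pci_flavor_by_name would then amount to filtering these rows by name before assembling them.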

| − | the | + | ===Transition from config file to API === |

| + | *the config file entries for the alias and white-list definitions are going to be deprecated. | ||

| + | *the new config item pci_information will replace the white-list | ||

| + | *PCI flavors will replace aliases | ||

| + | *the white-list/alias schema still works but emits a deprecation notice; it will be phased out and removed starting from the next release. | ||

| + | *if pci_flavor_attrs is not defined, it defaults to vendor_id, product_id and extra_info, which keeps compatibility with the old system. | ||

Latest revision as of 03:21, 27 July 2016

!!!This is a design discussion document, not for end user reference!!!

Contents

Related Resource

This design is based on the PCI pass-through IRC meetings, provide common support for PCI SRIOV:

This document was used to finalise the design:

link back to bp:

Common PCI SRIOV design

PCI devices have PCI standard properties like address (BDF), vendor_id, product_id, etc, Virtual functions also have a property referring to the function's physical address. Application specific or installation specific extra information can be attached to PCI device, like physical network connectivity using by Neutron SRIOV.

This bp focus on functionality to provide the common PCI SRIOV support.

- on compute node the pci_information/white-list define a set of filter. PCI device passed filter will be available for allocation. at same time extra information attached to the PCI device.

- PCI compute report the PCI stats information to scheduler. PCI stats contain several pools. each pool defined by several PCI property, control by the local configuration item: pci_flavor_attrs , default value is vendor_id, product_id, extra_info.

- PCI flavor define the user point of view PCI device selector: PCI flavor provide a set of (k,v) to form specs to select the available device(filtered by pci_information/white-list).

the new PCI design based on 2 key change: the PCI flavor and the extra information attache to pci device. the following diagram is a summary of the design:

Design Choice

PCI flavor: User don't want to know details of a pci device and all of it's attrs and extra information attached to it.

(pci_flavor_attr: admin need to know all PCI attrs to define flavor for user/tenant.)

PCI Stats: compute node might have many devices and most of device properties are same, summary a stats to scheduler can reduce DB load, simply schedule.

PCI extra info: a pci device might attach to a specific network, a specific resource, with can be attach to device and schedule base on it.

PCI flavor

For OS users, PCI flavor is a reasonable name like 'oldGPU', 'FastGPU', '10GNIC', 'SSD', describe one kind of PCI device. User use the PCI flavor to select available pci devices. Internally the PCI flavor created by a set of API and saved in a DB table, keep PCI flavor available for all cloud.

Administrator define the PCI flavors via matching expression that selects available(offer by white list) devices, and a reasonable name. PCI flavor matching expression is a set of (k,v), the k is the PCI property, v is its value. not every PCI property is available to PCI flavor, only a selected set of PCI property can used to define the PCI flavor, the selected property should be global to cloud like vendor/product_id, can not be BDF or host of a PCI device. these selected PCI property is defined via compute local configuration :

pci_flavor_attrs = vendor_id, product_id, ...

a important behavior is the PCI flavors could overlap - that is, the same device on the same machine may be matched by multiple flavors.

Use PCI flavor in instance flavor extra info

user set pci flavor into instance flavor's extra info to specify how many PCI device/and what type PCI flavor the VM want to boot with.

nova flavor-key m1.small set pci_passthrough:pci_flavor= <pci flavor spec list>

pci flavor spec:

num1:flavor1,flavor2

mean: want <number>'s pci devices from flavor or flavor

pci flavor spec list:

<pci flavor spec1>; <pci flavor spec2>

for example:

nova flavor-key m1.small set pci_passthrough:pci_flavor= 1:IntelGPU,NvGPU;1:intelQuickAssist;

which define requirements:

boot with 1 of IntelGPU or NvGPU, and 1 IntelQuickAssist card.

PCI pci_flavor_attrs

this configuration is keep local to every compute node, this will make deploy process can locally decide what PCI properties this node will exposed.

pci_flavor_attrs = vendor_id, product_id, ...

compute node update local pci extra properties to PCI flavor Database, which is accessible by flavor API, provide PCI properties to define flavor.

pci_flavor_attrs store in flavor DB as a normal flavor, it's name "_flavor_attrs", it's UUID use "0":

{"vendor_id":"Ture", "product_id":"True", ... }

this flavor contain all available attrs can be used to define pci flavor, list it's content use:

nova pci-flavor-show 0

GET v2/{tenant_id}/os-pci-flavor/<0>

data:

os-pci-flavor: {

'UUID':'0' ,

'description':'Available flavor attrs '

'name':'_flavor_attrs',

'vendor_id": "True",

'product_id": "True",

....

}

PCI request

PCI request is a internal structure to represent all PCI devices a VM want to have.

request = {'count': int(count),

'spec': [{"vendor_id":"8086", "phynetwork":"phy1"}, ...],

'alias_name': "Intel.NIC"}

Extra information of PCI device

the compute nodes offer available PCI devices for pass-through, since the list of devices doesn't usually change unless someone tinkers with the hardware, this matching expression used to create this list of offered devices is stored in compute node config.

*the device information (device ID, vendor ID, BDF etc.) is discovered from the device and stored as part of the PCI device, same as current implement. *on the compute node, additional arbitrary extra information, in the form of key-value pairs, can be added to the config and is included in the PCI device

this is achieved by extend the pci white-list to:

*pci_information = [ { pci-regex } ,{pci-extra-attrs } ]

*pci-regex is a dict of { string-key: string-value } pairs , it can only match device properties, like vendor_id, address, product_id,etc.

*pci-extra-attrs is a dict of { string-key: string-value } pairs. The values can be arbitrary The total size of the extra attrs may be restricted. all this extra attrs will be store in the pci device table's extra info field. and the extra attrs should use this naming schema: e.attrname

PCI stats grouping device base on pci_flavor_attrs

PCI stats pool summary the devices of a compute node, and the scheduler use flavor's matching specs select the available host for VM. The stats pool must contain the PCI properties used by PCI flavor.

* current grouping is based on [vendor_id, product_id, extra_info] * going to group by keys specified by pci_flavor_attrs.

The algorithm for stats report should meet this request: the one device should only be in one pci stats pool, this mean pci stats can not overlap. this simplifies the scheduler design.

on computer node the pci_flavor_attrs provide the specs for pci stats to group its pool. and the pci_flavor_attrs on control node collection the attrs which can be used to define the pci_flavor. the definition of pci_flavor_attrs on controller should contain all the pci_flavor_attrs's content on every compute node.

- but compute node report stats pool by a subset of controller's pci_flavor_attrs is acceptable, in such scenario, this means the compute node can only provide the devices with these propertes.*

Use cases

General PCI pass through

Given compute nodes that each contain 1 GPU with vendor:device 8086:0001.

- on the compute nodes, configure the pci_information

pci_information = [ { 'device_id': "0001", 'vendor_id': "8086" }, {} ]

pci_flavor_attrs = ['device_id', 'vendor_id']

The compute node reports PCI stats grouped by ('device_id', 'vendor_id'). The PCI stats will report one pool:

{'device_id':'0001', 'vendor_id':'8086', 'count': 1 }

- create PCI flavor

nova pci-flavor-create name 'bigGPU' description "passthrough Intel's on-die GPU"

nova pci-flavor-update name 'bigGPU' set 'vendor_id'='8086' 'product_id'='0001'

- create flavor and boot with it (same as current PCI passthrough)

nova flavor-key m1.small set pci_passthrough:pci_flavor='1:bigGPU;'

nova boot mytest --flavor m1.small --image=cirros-0.3.1-x86_64-uec

General PCI pass through with multiple PCI flavor candidates

Given compute nodes that contain 2 types of GPU, with vendor:device 8086:0001 or vendor:device 8086:0002.

- on the compute nodes, configure the pci_information

pci_information = [ { 'device_id': "000[1-2]", 'vendor_id': "8086" }, {} ]

- on the controller

pci_flavor_attrs = ['device_id', 'vendor_id']

The compute node reports PCI stats grouped by ('device_id', 'vendor_id'). The PCI stats will report 2 pools:

{'device_id':'0001', 'vendor_id':'8086', 'count': 1 }

{'device_id':'0002', 'vendor_id':'8086', 'count': 1 }

- create PCI flavor

nova pci-flavor-create name 'bigGPU' description "passthrough Intel's on-die GPU"

nova pci-flavor-update name 'bigGPU' set 'vendor_id'='8086' 'product_id'='0001'

nova pci-flavor-create name 'bigGPU2' description "passthrough Intel's on-die GPU"

nova pci-flavor-update name 'bigGPU2' set 'vendor_id'='8086' 'product_id'='0002'

- create flavor and boot with it

nova flavor-key m1.small set pci_passthrough:pci_flavor='1:bigGPU,bigGPU2;'

nova boot mytest --flavor m1.small --image=cirros-0.3.1-x86_64-uec

General PCI pass through with a wildcard PCI flavor

Given compute nodes that contain 2 types of GPU, with vendor:device 8086:0001 or vendor:device 8086:0002.

- on the compute nodes, configure the pci_information

pci_information = [ { 'device_id': "000[1-2]", 'vendor_id': "8086" }, {} ]

pci_flavor_attrs = ['device_id', 'vendor_id']

The compute node reports PCI stats grouped by ('device_id', 'vendor_id'). The PCI stats will report 2 pools:

{'device_id':'0001', 'vendor_id':'8086', 'count': 1 }

{'device_id':'0002', 'vendor_id':'8086', 'count': 1 }

- create PCI flavor

nova pci-flavor-create name 'bigGPU' description "passthrough Intel's on-die GPU"

nova pci-flavor-update name 'bigGPU' set 'vendor_id'='8086' 'product_id'='000[1-2]'

- create flavor and boot with it

nova flavor-key m1.small set pci_passthrough:pci_flavor='1:bigGPU;'

nova boot mytest --flavor m1.small --image=cirros-0.3.1-x86_64-uec
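To illustrate how a flavor whose value is a pattern could select several stats pools at scheduling time, here is a hedged sketch. It assumes the flavor and the pools use the same key names (device_id here), and that Python's `re.fullmatch` approximates the simple regular expressions of this design; `pool_matches` and `available_count` are hypothetical names.

```python
import re


def pool_matches(flavor_spec, pool):
    """True if every flavor (key, pattern) pair fully matches the pool's value."""
    return all(
        key in pool and re.fullmatch(pattern, str(pool[key])) is not None
        for key, pattern in flavor_spec.items()
    )


def available_count(flavor_spec, pools):
    """Total number of devices on a host that satisfy the flavor."""
    return sum(pool["count"] for pool in pools if pool_matches(flavor_spec, pool))


# Pools as reported in this use case.
pools = [
    {"device_id": "0001", "vendor_id": "8086", "count": 1},
    {"device_id": "0002", "vendor_id": "8086", "count": 1},
]
wildcard_flavor = {"vendor_id": "8086", "device_id": "000[1-2]"}
print(available_count(wildcard_flavor, pools))
```

A host passes the PCI filter for a request when `available_count` is at least the requested number of devices.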

PCI pass through with a grouping tag

Given compute nodes that contain 2 types of GPU, with vendor:device 8086:0001 or vendor:device 8086:0002.

- on the compute nodes, configure the pci_information

pci_information = [ { 'device_id': "000[1-2]", 'vendor_id': "8086" }, { 'e.group':'gpu' } ]

pci_flavor_attrs = ['e.group']

The compute node reports PCI stats grouped by ('e.group'). The PCI stats will report 1 pool:

{'e.group':'gpu', 'count': 2 }

- create PCI flavor

nova pci-flavor-create name 'bigGPU' description "passthrough Intel's on-die GPU"

nova pci-flavor-update name 'bigGPU' set 'e.group'='gpu'

- create flavor and boot with it

nova flavor-key m1.small set pci_passthrough:pci_flavor='1:bigGPU;'

nova boot mytest --flavor m1.small --image=cirros-0.3.1-x86_64-uec

PCI SRIOV with tagged flavor

Given compute nodes that contain 5 PCI NICs with vendor:device 8086:0022, all connected to physical network "X".

- on the compute nodes, configure the pci_information

pci_information = [ { 'device_id': "0022", 'vendor_id': "8086" }, { 'e.physical_network': 'X' } ]

pci_flavor_attrs = ['e.physical_network']

The compute node reports PCI stats grouped by ('e.physical_network'). The PCI stats will report 1 pool:

{'e.physical_network':'X', 'count': 5 }

- create PCI flavor

nova pci-flavor-create name 'phyX_NIC' description 'passthrough NIC connect to physical network X'

nova pci-flavor-update name 'phyX_NIC' set 'e.physical_network'='X'

- create flavor and boot with it

nova boot mytest --flavor m1.tiny --image=cirros-0.3.1-x86_64-uec --nic net-id=network_X pci_flavor='1:phyX_NIC;'

Encryption card use case

There are 3 encryption cards (V1 and V2 here stand for vendor_id numbers):

* card 1 (vendor_id is V1, device_id=0xa)

* card 2 (vendor_id is V1, device_id=0xb)

* card 3 (vendor_id is V2, device_id=0xb)

Suppose there are two images. One image only supports card 1 and the other supports cards 1 and 3 (or any other combination of the 3 card types).

- on the compute nodes, config the pci_information

pci_information = [ { 'device_id': "0xa", 'vendor_id': "V1" }, { 'e.QAclass':'1' } ]

pci_information = [ { 'device_id': "0xb", 'vendor_id': "V1" }, { 'e.QAclass':'2' } ]

pci_information = [ { 'device_id': "0xb", 'vendor_id': "V2" }, { 'e.QAclass':'3' } ]

pci_flavor_attrs = ['e.QAclass']

The compute node reports PCI stats grouped by (['e.QAclass']). The PCI stats will report 3 pools:

{ 'e.QAclass':'1', 'count': 1 }

{ 'e.QAclass':'2', 'count': 1 }

{ 'e.QAclass':'3', 'count': 1 }

- create PCI flavor

nova pci-flavor-create name 'QA1' description 'QuickAssist card version 1'

nova pci-flavor-update name 'QA1' set 'e.QAclass'='1'

nova pci-flavor-create name 'QA13' description 'QuickAssist card version 1 and version 3'

nova pci-flavor-update name 'QA13' set 'e.QAclass'='(1|3)'

- create flavor and boot with it

nova boot mytest --flavor m1.tiny --image=QA1_image --nic net-id=network_X pci_flavor='1:QA1;'

nova boot mytest --flavor m1.tiny --image=QA13_image --nic net-id=network_X pci_flavor='3:QA13;'

Common PCI SRIOV Configuration detail

Compute host

pci_information = [ {pci-regex},{pci-extra-attrs} ]

pci_flavor_attrs=attr,attr,attr

For instance, when using device and vendor ID this would read:

pci_flavor_attrs=device_id,vendor_id

When the back end adds an arbitrary ‘group’ attribute to all PCI devices:

pci_flavor_attrs=e.group

When you wish to find an appropriate device and perhaps also filter by the connection tagged on that device, which you specify with an extra-info attribute in the compute node config:

pci_flavor_attrs=device_id,vendor_id,e.connection

flavor API

- overall

nova pci-flavor-list

nova pci-flavor-show name|UUID <name|UUID>

nova pci-flavor-create name|UUID <name|UUID> description <desc>

nova pci-flavor-update name|UUID <name|UUID> set 'description'='xxxx' 'e.group'='A'

nova pci-flavor-delete name|UUID <name|UUID>

- list the available PCI flavors (white list)

nova pci-flavor-list

GET v2/{tenant_id}/os-pci-flavors

data:

os-pci-flavors: [
    {
        'UUID': 'xxxx-xx-xx',
        'description': 'xxxx',
        'vendor_id': '8086',
        ....
        'name': 'xxx',
    },
]

- get detailed information about one pci-flavor:

nova pci-flavor-show <UUID|name>

GET v2/{tenant_id}/os-pci-flavors/<UUID|name>

data:

os-pci-flavor: {
    'UUID': 'xxxx-xx-xx',
    'description': 'xxxx',
    ....
    'name': 'xxx',
}

- create pci flavor

nova pci-flavor-create name 'GetMePowerfulldevice' description "xxxxx"

API:

POST v2/{tenant_id}/os-pci-flavors

data:

pci-flavor: {
    'name': 'GetMePowerfulldevice',
    'description': "xxxxx"
}

action: create database entry for this flavor.

- update the pci flavor

nova pci-flavor-update UUID set 'description'='xxxx' 'e.group'= 'A'

PUT v2/{tenant_id}/os-pci-flavors/<UUID>

with data :

{
    'action': "update",
    'pci-flavor': {
        'description': 'xxxx',
        'vendor_id': '8086',
        'e.group': 'A',
        ....
    }
}

action: set this as the new definition of the pci flavor.

- delete a pci flavor

nova pci-flavor-delete <UUID>

DELETE v2/{tenant_id}/os-pci-flavors/<UUID>

Current PCI implementation gaps

Concepts introduced here:

* spec: a filter defined by (k,v) pairs where k is one of the PCI object fields. These (k,v) pairs are PCI device properties such as vendor_id, address, pci-type, etc.

* extra_spec: a filter defined by (k,v) pairs where k is not one of the PCI object fields.
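The distinction between the two kinds of filter can be sketched as a simple split on key membership. The field set below is an assumed subset chosen for illustration, not the authoritative list of PCI object fields, and `split_flavor` is a hypothetical helper.

```python
# Assumed subset of the PCI device object's fields, for illustration only.
PCI_OBJECT_FIELDS = {"vendor_id", "product_id", "address", "dev_type"}


def split_flavor(flavor):
    """Split a flavor's (k, v) pairs into (specs, extra_specs)."""
    specs = {k: v for k, v in flavor.items() if k in PCI_OBJECT_FIELDS}
    extra_specs = {k: v for k, v in flavor.items() if k not in PCI_OBJECT_FIELDS}
    return specs, extra_specs
```

Specs would then be matched against the PCI object itself and extra_specs against its extra info.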

pci utils support extra property

* the pci utils (k,v) matching supports a reduced regular expression for the address

* the utils provide a global extract interface to extract the base properties and extra properties of a PCI device.

* extra information should also use the schema 'e.name'

PCI information (extending the white-list) supports extra tags

* PCI information supports reduced regular expression comparison to match the PCI device

* PCI information supports storing any other (k,v) pairs in the PCI device's extra info

* the k and v of any extra tag are strings.

pci_flavor_attrs

* implement the attrs parser and update the flavor database accordingly

support pci-flavor

* pci-flavor is stored in the DB

* pci-flavor is configured via the API

* the pci manager uses the extract method to extract the specs and extra_specs, and matches them against the pci object and object.extra_info.

PCI scheduler: PCI filter

When scheduling, the matcher should apply the regular expressions stored in the named flavor, which is read from the DB.

convert pci flavor from SRIOV

In the API stage, the network parser should convert the PCI flavor in the --nic option into a PCI request and save it into the instance metadata.

1. translate pci flavor spec to request

*translate_pci_flavor_to_requests(flavor_spec)

* input flavor_spec is a list of PCI flavors and requested numbers: "flavor_name:number, flavor_name2:number2,..."; if the number is 1, it can be omitted.

* output is pci_request, an internal data structure

2. save request to instance meta data:

*update_pci_request_to_metadata(metadata, new_requests, prefix=)
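Step 1 above could be sketched as follows. The sketch follows the "flavor_name:number" form given for the input in this section; the returned list of dicts is only a stand-in for the internal pci_request structure, whose real shape is not specified here.

```python
def translate_pci_flavor_to_requests(flavor_spec):
    """Parse "flavor_name:number, flavor_name2, ..." into a list of requests.

    The dict layout of each request is an assumption made for illustration.
    """
    requests = []
    for item in flavor_spec.split(","):
        item = item.strip()
        if not item:
            continue
        name, sep, number = item.partition(":")
        # The number may be omitted, in which case it defaults to 1.
        count = int(number) if sep else 1
        requests.append({"flavor": name.strip(), "count": count})
    return requests
```

A malformed number (for example `"bigGPU:x"`) raises `ValueError`, which the API layer could turn into a bad-request response.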

Find the specific devices of an instance based on a request

To boot a VM with PCI SRIOV devices, more configuration of the PCI device may be needed than for a plain PCI host device. To achieve this, common SRIOV needs an interface to query the devices allocated to a specific usage, such as the SRIOV network.

A 3-step facility achieves this:

* Mark each PCI request with a UUID, so that PCI requests are distinguishable.

* Remember the devices allocated to each request.

* Provide an interface function to get these devices from an instance.
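The three steps could look roughly like this. `PciRequest`, `Instance`, `allocate` and `get_devices_by_request` are hypothetical names used only to illustrate the bookkeeping; they are not existing Nova classes or methods.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class PciRequest:
    flavor: str
    count: int = 1
    # Step 1: every request carries its own UUID so it can be told apart.
    request_id: str = field(default_factory=lambda: str(uuid.uuid4()))


@dataclass
class Instance:
    # Step 2: request_id -> addresses of the devices allocated for that request.
    pci_allocations: dict = field(default_factory=dict)

    def allocate(self, request, device_addresses):
        self.pci_allocations[request.request_id] = list(device_addresses)

    # Step 3: interface to get the devices that served a given request.
    def get_devices_by_request(self, request_id):
        return self.pci_allocations.get(request_id, [])
```

With this in place, the SRIOV network code could keep the request UUID it created for a --nic option and later ask the instance which devices were allocated for it.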

DB for PCI flavor

Each PCI flavor is a set of (k,v) pairs stored in the DB. Both k and v are strings, and the value can be a simple regular expression that supports wildcard and address range operators.

Table: pci_flavor

{
    UUID: which pci-flavor the (k,v) pair belongs to
    name: the PCI flavor's name; this field is needed to index the DB by flavor name
    key
    value (might be a simple string value or a reduced regular expression)
}

DB interface:

get_pci_flavor_by_name

get_pci_flavor_by_UUID

get_pci_flavor_all

update_pci_flavor_by_name

update_pci_flavor_by_UUID

delete_pci_flavor_by_name

delete_pci_flavor_by_UUID
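As an illustration of the one-row-per-(k,v) layout, the following sketch implements a few of these functions on an in-memory SQLite database. The column types, the primary key, the explicit `conn` argument and the `create_pci_flavor` helper are assumptions made for the example; Nova's real DB layer would differ.

```python
import sqlite3
import uuid


def init_db(conn):
    """Create the pci_flavor table with one row per (flavor, key) pair."""
    conn.execute(
        "CREATE TABLE pci_flavor ("
        " uuid TEXT NOT NULL, name TEXT NOT NULL,"
        " key TEXT NOT NULL, value TEXT NOT NULL,"
        " PRIMARY KEY (uuid, key))"
    )
    # Index on name so flavors can be looked up by name as well as by UUID.
    conn.execute("CREATE INDEX pci_flavor_name ON pci_flavor (name)")


def create_pci_flavor(conn, name, specs):
    """Hypothetical helper: store every (k, v) of a new flavor, return its UUID."""
    flavor_uuid = str(uuid.uuid4())
    conn.executemany(
        "INSERT INTO pci_flavor (uuid, name, key, value) VALUES (?, ?, ?, ?)",
        [(flavor_uuid, name, k, v) for k, v in specs.items()],
    )
    return flavor_uuid


def get_pci_flavor_by_name(conn, name):
    rows = conn.execute(
        "SELECT key, value FROM pci_flavor WHERE name = ?", (name,)
    ).fetchall()
    return dict(rows)


def get_pci_flavor_by_UUID(conn, flavor_uuid):
    rows = conn.execute(
        "SELECT key, value FROM pci_flavor WHERE uuid = ?", (flavor_uuid,)
    ).fetchall()
    return dict(rows)


def delete_pci_flavor_by_name(conn, name):
    conn.execute("DELETE FROM pci_flavor WHERE name = ?", (name,))
```

One consequence of this layout is that a flavor with no (k,v) pairs has no rows, so a real schema would probably need a separate place for the name and description.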

Transition from config file to API

*the config file for alias and white-list definition is going to be deprecated.

*the new config pci_information will replace white-list

*pci flavor will replace alias

*the white-list/alias schema still works but gives a deprecation notice; it will be removed starting from the next release.

*if pci_flavor_attrs is not defined it defaults to vendor_id, product_id and extra_info, which keeps compatibility with the old system.