Ovs-flow-logic

Revision as of 09:39, 14 August 2013

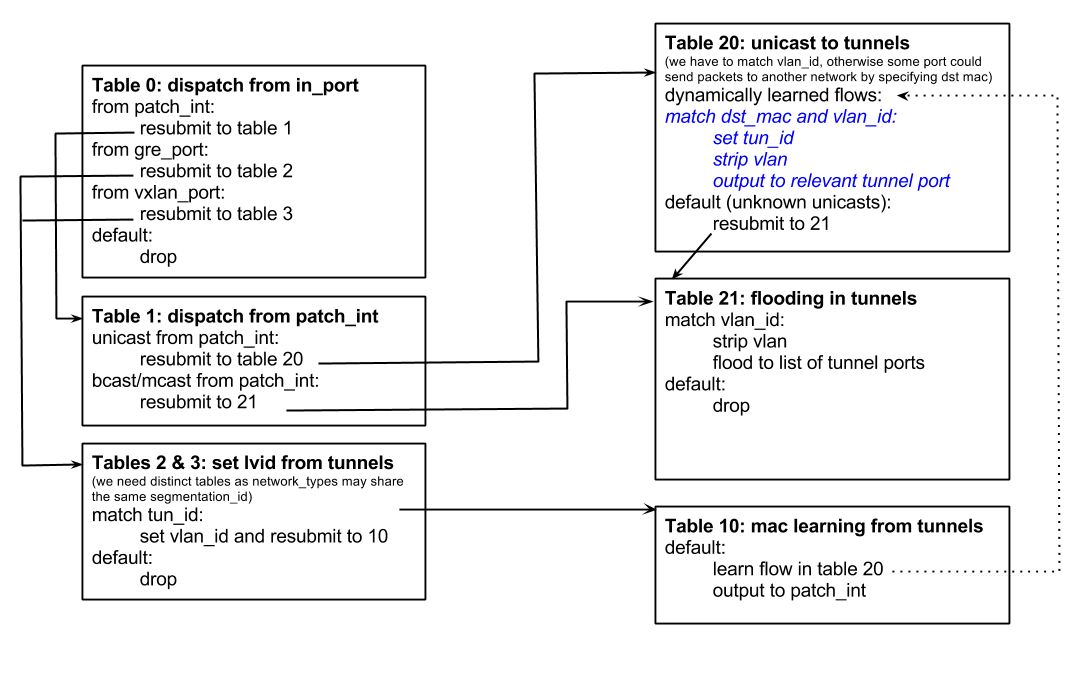

Currently, packet processing by the openvswitch-agent in br-tun is based on the tun-id. As a consequence, two networks using different tunnel types but sharing the same tun-id would not be properly isolated.
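The isolation problem can be illustrated with a toy model. It assumes a network is identified by whatever key is used to demultiplex incoming tunnel traffic. The function names and the GRE/VXLAN values below are hypothetical. Taking the tunnel type into account alongside the tun-id is shown only as one way to keep such networks apart, not as the agent's actual code.

```python
# Toy model: the key used to map an incoming tunneled packet to a network.
# Function names and the values below are hypothetical, for illustration only.


def key_by_tun_id(tunnel_type: str, tun_id: int) -> tuple:
    """Demultiplex on the tun-id alone (the behaviour described above)."""
    return (tun_id,)


def key_by_type_and_tun_id(tunnel_type: str, tun_id: int) -> tuple:
    """Demultiplex on both the tunnel type and the tun-id."""
    return (tunnel_type, tun_id)


# Two distinct networks that happen to reuse the same segmentation id.
net_a = ("gre", 100)
net_b = ("vxlan", 100)

# With the tun-id only, both networks collapse onto the same key: no isolation.
collide = key_by_tun_id(*net_a) == key_by_tun_id(*net_b)
# With (tunnel type, tun-id), the two keys stay distinct.
isolated = key_by_type_and_tun_id(*net_a) != key_by_type_and_tun_id(*net_b)
print(collide, isolated)
```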

To ensure proper isolation within a single bridge, the NORMAL action can no longer be used, as it floods unknown unicasts to all of the bridge's ports. It is replaced by a learn action that dynamically sets up flows when packets are received from tunnel ports. Because MAC addresses are learned in explicit flows (in table 20), a default action in that table can flood unknown unicasts to the right set of ports, just like broadcast and multicast packets.
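The forwarding decision in table 20 can be modelled in a few lines. This is a minimal sketch and not the agent's OpenFlow code. It assumes a hypothetical local network id (here "net-a" and "net-b") and a per-network set of flood ports. Learned entries play the role of the flows installed by the learn action. A miss, or a broadcast/multicast destination, falls through to the default flood restricted to the packet's own network.

```python
from dataclasses import dataclass, field

BROADCAST = "ff:ff:ff:ff:ff:ff"


def is_multicast(mac: str) -> bool:
    """True for group addresses (broadcast and multicast): the least
    significant bit of the first octet is set."""
    return int(mac.split(":")[0], 16) & 1 == 1


@dataclass
class LearnTable:
    """Toy model of table 20 (hypothetical, not the agent's code).

    flood_ports maps a hypothetical local network id to the set of ports of
    that network; learned holds the explicit (network, MAC) -> port entries
    that the learn action would install.
    """
    flood_ports: dict
    learned: dict = field(default_factory=dict)

    def learn(self, network: str, src_mac: str, in_port: int) -> None:
        # Called for packets received from a tunnel port: later traffic to
        # src_mac on this network is sent back out of in_port.
        self.learned[(network, src_mac)] = in_port

    def output_ports(self, network: str, dst_mac: str, in_port: int) -> set:
        if not is_multicast(dst_mac):
            port = self.learned.get((network, dst_mac))
            if port is not None:
                return {port}
        # Default action: unknown unicasts are flooded like broadcasts and
        # multicasts, but only to this network's ports, never back to in_port.
        return self.flood_ports.get(network, set()) - {in_port}


table = LearnTable(flood_ports={"net-a": {1, 2, 3}, "net-b": {4, 5}})
table.learn("net-a", "fa:16:3e:00:00:01", in_port=2)
print(table.output_ports("net-a", "fa:16:3e:00:00:01", in_port=1))  # learned entry
print(table.output_ports("net-a", "fa:16:3e:00:00:99", in_port=1))  # flooded in net-a
print(table.output_ports("net-b", "fa:16:3e:00:00:01", in_port=4))  # not leaked from net-a
```

Keying learned entries on the network as well as the MAC is what keeps a MAC learned in one network from steering traffic in another. That is the property NORMAL could not provide inside a single bridge.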

Because it changes how packets are handled in tunnels, the proposed change was benchmarked to check its impact on tunneling performance. The test was run between two VMs on distinct hosts, measuring iperf throughput:

Before the proposed change (using the NORMAL action and set_tunnel in br-tun):

    root@test:~# iperf -t 50 -i 10 -c 192.168.1.105
    Client connecting to 192.168.1.105, TCP port 5001
    TCP window size: 22.9 KByte (default)
    [  3] local 192.168.1.104 port 59027 connected with 192.168.1.105 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec  1.60 GBytes  1.37 Gbits/sec
    [  3] 10.0-20.0 sec  1.58 GBytes  1.36 Gbits/sec
    [  3] 20.0-30.0 sec  1.95 GBytes  1.68 Gbits/sec
    [  3] 30.0-40.0 sec  1.75 GBytes  1.51 Gbits/sec
    [  3] 40.0-50.0 sec  1.94 GBytes  1.67 Gbits/sec
    [  3]  0.0-50.0 sec  8.82 GBytes  1.52 Gbits/sec

With the proposed change (using the logic described above):

    root@test:~# iperf -t 50 -i 10 -c 192.168.1.105
    Client connecting to 192.168.1.105, TCP port 5001
    TCP window size: 22.9 KByte (default)
    [  3] local 192.168.1.104 port 59026 connected with 192.168.1.105 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec  1.58 GBytes  1.36 Gbits/sec
    [  3] 10.0-20.0 sec  1.80 GBytes  1.55 Gbits/sec
    [  3] 20.0-30.0 sec  1.70 GBytes  1.46 Gbits/sec
    [  3] 30.0-40.0 sec  1.92 GBytes  1.65 Gbits/sec
    [  3] 40.0-50.0 sec  1.82 GBytes  1.56 Gbits/sec
    [  3]  0.0-50.0 sec  8.83 GBytes  1.52 Gbits/sec
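One way to read the two runs is to compare the gap between their means with the variation between 10-second intervals inside each run. The sketch below uses only the per-interval bandwidth figures printed by iperf above. With a single run per configuration, this is a rough indication rather than a statistical test.

```python
from statistics import mean

# Per-interval bandwidth (Gbits/sec) copied from the two iperf runs above.
before = [1.37, 1.36, 1.68, 1.51, 1.67]  # NORMAL action + set_tunnel
after = [1.36, 1.55, 1.46, 1.65, 1.56]   # learn action + per-network flooding


def summarize(samples):
    """Mean and min-max spread of per-interval throughput."""
    return mean(samples), max(samples) - min(samples)


mean_before, spread_before = summarize(before)
mean_after, spread_after = summarize(after)
print(f"before: mean {mean_before:.3f}, spread {spread_before:.2f} Gbits/sec")
print(f"after:  mean {mean_after:.3f}, spread {spread_after:.2f} Gbits/sec")
print(f"difference of means: {mean_before - mean_after:.3f} Gbits/sec")
```

The difference of the means is about 0.002 Gbits/sec. The interval-to-interval spread within each run is roughly 0.3 Gbits/sec.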

As discussed on the mailing list, the same testbed was also used to measure the impact of using distinct bridges for tunneling: a VXLAN tunnel port was added to br-int, and two flows were set up on each host to hard-wire the VM's port to the tunnel port:

    ovs-ofctl add-flow br-int "in_port=vm_ofport,actions=output:tunnel_ofport"
    ovs-ofctl add-flow br-int "in_port=tunnel_ofport,actions=output:vm_ofport"
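The two hard-wiring flows are symmetric: one forwards VM traffic into the tunnel and the other forwards tunnel traffic back to the VM. The sketch below builds both `ovs-ofctl add-flow` command lines for given OpenFlow port numbers. The helper names `hardwire_flows` and `apply` and the port numbers 5 and 7 are hypothetical placeholders. By default it only prints the commands; running them needs Open vSwitch and sufficient privileges.

```python
import shlex
import subprocess


def hardwire_flows(bridge: str, vm_ofport: int, tunnel_ofport: int) -> list:
    """Return the two ovs-ofctl commands that wire a VM port to a tunnel port
    in both directions: VM -> tunnel and tunnel -> VM."""
    return [
        ["ovs-ofctl", "add-flow", bridge,
         f"in_port={vm_ofport},actions=output:{tunnel_ofport}"],
        ["ovs-ofctl", "add-flow", bridge,
         f"in_port={tunnel_ofport},actions=output:{vm_ofport}"],
    ]


def apply(commands: list, dry_run: bool = True) -> None:
    for cmd in commands:
        if dry_run:
            print(shlex.join(cmd))  # only show the command line
        else:
            # Needs Open vSwitch installed and sufficient privileges.
            subprocess.run(cmd, check=True)


# Port numbers 5 and 7 are placeholders; the real OpenFlow port numbers can be
# listed with `ovs-ofctl show br-int`.
apply(hardwire_flows("br-int", vm_ofport=5, tunnel_ofport=7))
```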

The results tend to show that the current bridge separation logic does not introduce a significant performance penalty:

    root@test:~# iperf -t 50 -i 10 -c 192.168.1.105
    Client connecting to 192.168.1.105, TCP port 5001
    TCP window size: 22.9 KByte (default)
    [  3] local 192.168.1.104 port 59037 connected with 192.168.1.105 port 5001
    [ ID] Interval       Transfer     Bandwidth
    [  3]  0.0-10.0 sec  1.78 GBytes  1.53 Gbits/sec
    [  3] 10.0-20.0 sec  1.79 GBytes  1.54 Gbits/sec
    [  3] 20.0-30.0 sec  1.70 GBytes  1.46 Gbits/sec
    [  3] 30.0-40.0 sec  1.70 GBytes  1.46 Gbits/sec
    [  3] 40.0-50.0 sec  1.70 GBytes  1.51 Gbits/sec
    [  3]  0.0-50.0 sec  8.73 GBytes  1.50 Gbits/sec
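The three configurations can be compared using the 0.0-50.0 sec transfer totals reported by iperf. The sketch below takes the hard-wired br-int run as the baseline and computes the percentage difference of each run. It uses only the totals printed above. The setup labels are descriptive names chosen for this example.

```python
# 0.0-50.0 sec transfer totals (GBytes) reported by iperf in the three runs.
TOTALS = {
    "br-tun, NORMAL + set_tunnel": 8.82,
    "br-tun, learn + flood default": 8.83,
    "br-int, hard-wired VXLAN port": 8.73,
}


def relative_to(baseline_key: str, totals: dict) -> dict:
    """Percentage difference of each run versus the chosen baseline run."""
    base = totals[baseline_key]
    return {k: (v - base) / base * 100 for k, v in totals.items()}


deltas = relative_to("br-int, hard-wired VXLAN port", TOTALS)
for setup, pct in deltas.items():
    print(f"{setup:32s} {TOTALS[setup]:.2f} GBytes  {pct:+.2f}%")
```

Both br-tun runs come out about 1% above the hard-wired br-int run. In this testbed, then, the extra bridge did not make the tunnel path slower. With one run per setup, differences of this size are smaller than the interval-to-interval variation seen inside each run.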

These results are not that surprising. Linux networking performance seems to depend more on how often packets cross the networking stack (and thus how many interrupts and context switches the processors must handle) than on the number of operations performed on each packet.