Obsolete:Swift-Improved-Object-Replicator

- Launchpad Cinder blueprint: improved-object-replicator

- Created: 2 October 2012

- Last updated: 2 October 2012

- Drafter: Alex Yang Hui Cheng

- Contributor: Alex Yang Hui Cheng


Abstract

The goal of this blueprint is to improve the efficiency of the object-replicator. In Swift, the object-replicator plays an important role in maintaining data consistency and durability. In a production environment with billions of objects, however, its performance is poor because the data synchronization phase is so time-consuming. Moreover, the time spent synchronizing data affects durability and consistency when failures occur. Optimizing the object-replicator mechanism is therefore necessary.

This blueprint discusses the current implementation and performance issues of the object-replicator, and how they could be solved in a new object-replicator.

Current implementation of object-replicator

As in any Dynamo-like storage system, the main tasks of the replicator are the following:

- Replica synchronization: an anti-entropy protocol that keeps replicas consistent and recovers lost replicas.

- Hinted handoff recovery: to ensure that read and write operations do not fail because of temporary node or network failures, a temporary node becomes the handoff node when the origin node is unavailable. Once the origin node is available again, the replica should be moved from the handoff node back to the origin node.

In Swift, the object-replicator is responsible for replica synchronization, hinted handoff recovery, and directory reclamation. Its main logic is shown below:

    partitions = collect_partitions()
    partitions_to_move = set()
    partitions_to_check = set()
    # collect jobs
    for partition in partitions:
        if partition not in local:
            partitions_to_move.add(partition)
        else:
            partitions_to_check.add(partition)
    # hinted handoff recovery
    for partition in partitions_to_move:
        move_to_origin_node(partition)
    # replica synchronization
    for partition in partitions_to_check:
        local_hash_of_suffix_dirs = get_hashes_and_reclaim(partition)
        remote_hash_of_suffix_dirs = get_remote_hashes(partition)
        different_suffix_dirs = compare(local_hash_of_suffix_dirs, remote_hash_of_suffix_dirs)
        rsync(different_suffix_dirs)
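The comparison step of the replica synchronization loop could look roughly like the sketch below. It assumes that both sides expose their suffix hashes as plain dicts keyed by suffix directory; the function name `changed_suffixes` is hypothetical and not taken from the Swift code base.

```python
def changed_suffixes(local_hashes, remote_hashes):
    """Return suffix dirs whose local hash differs from, or is missing on, the remote side."""
    return sorted(
        suffix
        for suffix, local_hash in local_hashes.items()
        if remote_hashes.get(suffix) != local_hash
    )


local = {"a83": "h1", "b0c": "h2", "fff": "h3"}
remote = {"a83": "h1", "b0c": "stale"}
print(changed_suffixes(local, remote))  # ['b0c', 'fff']
```

Only the suffix directories returned here would need to be handed to rsync; matching suffixes are skipped.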

Shortcomings of the current object-replicator

A round of replication takes a very long time

In my Swift deployment, there are about 150,000 partitions and 30,000,000 suffix directories on one server. Disk usage is 12% (about 2 TB). Under normal conditions, a round of replication takes 15 hours, and it takes longer when there are node failures. The replication time will keep growing as the number of objects increases. Such a long replication time is terrible for data consistency and durability. Although adding more nodes would reduce the time, the return on investment (ROI) must also be considered.

The object-replicator is not very flexible

A round of replication loops over all partitions on the disks and syncs them in random order. So, if some replicas urgently need to be synced, the current mechanism cannot sync them as soon as possible.

Principles of design

- Split the synchronous process into asynchronous steps;

- Calculate the hashes for replication in real time;

- Stay compatible with the current object-replicator;

- Keep the replication process controllable.

Implementation

The design is described in two parts. First, a figure shows the overall architecture; second, the components of this architecture are discussed in detail.

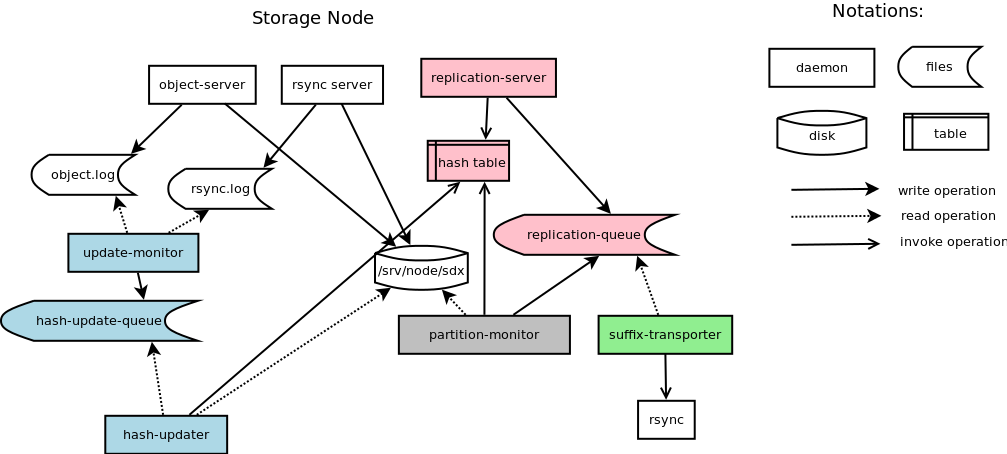

As the figure shows, the colored icons are the main components of this design. All of them are independent of the current object-replicator. The original logic of the object-replicator is split into four parts, shown in different colors. The cyan components are in charge of calculating hashes in real time. The pink components are in charge of indexing the hashes of suffix and partition directories, receiving and sending requests to compare partition or suffix hashes, and generating jobs in the replication-queue to replicate suffix directories. The partition-monitor is in charge of checking at intervals whether partitions need to be moved. The suffix-transporter is in charge of monitoring the replication-queue and invoking rsync to sync suffix directories.

Calculating hash in real-time

update-monitor

The update-monitor is in charge of monitoring the log files of the object-server and the rsync server. It picks out the log entries for PUT and DELETE operations and puts them into the hash-update-queue.
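A minimal sketch of this filtering step follows. The log line layout, the regular expression, and the function name `filter_updates` are assumptions made for illustration; real object-server log lines carry more fields and would need a pattern matched to the deployed log format.

```python
import queue
import re

# Assumed, simplified request pattern:
#   "PUT /<device>/<partition>/<account>/<container>/<object>"
REQUEST_RE = re.compile(r'"(PUT|DELETE) /([^/\s]+)/(\d+)/(\S+?)"')


def filter_updates(log_lines, update_queue):
    """Put one (method, device, partition, path) tuple on the queue per PUT/DELETE line."""
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match:
            method, device, partition, path = match.groups()
            update_queue.put((method, device, int(partition), path))


hash_update_queue = queue.Queue()
sample_log = [
    'object-server: 10.0.0.5 - - "PUT /sdb1/1024/AUTH_demo/photos/cat.jpg" 201 -',
    'object-server: 10.0.0.5 - - "GET /sdb1/1024/AUTH_demo/photos/cat.jpg" 200 -',
    'object-server: 10.0.0.7 - - "DELETE /sdc1/77/AUTH_demo/docs/old.txt" 204 -',
]
filter_updates(sample_log, hash_update_queue)
print(hash_update_queue.qsize())  # 2
```

The GET line is ignored because reads do not change any suffix directory, so no hash needs to be recalculated for it.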

hash-update-queue

A queue that stores the entries produced by the update-monitor.

hash-updater

A daemon that calculates the hashes of the suffix directories listed in the hash-update-queue and writes them to the hash table.
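The sketch below illustrates one way the hash-updater could recompute a suffix hash. It assumes the hash of a suffix directory is an MD5 over the names of the files stored in its object directories, which is loosely modelled on how hashes.pkl entries are commonly described; the function names and the directory layout in the demo are hypothetical.

```python
import hashlib
import os
import tempfile


def hash_suffix_dir(suffix_path):
    """MD5 over the sorted file names found under every object dir of a suffix dir."""
    md5 = hashlib.md5()
    for object_dir in sorted(os.listdir(suffix_path)):
        object_path = os.path.join(suffix_path, object_dir)
        if not os.path.isdir(object_path):
            continue
        for filename in sorted(os.listdir(object_path)):
            md5.update(filename.encode("utf-8"))
    return md5.hexdigest()


def drain_updates(pending_suffix_paths, hash_table):
    """Recompute the hash of each queued suffix dir and store it in the hash table."""
    for suffix_path in pending_suffix_paths:
        hash_table[suffix_path] = hash_suffix_dir(suffix_path)


# Demo on a throwaway directory tree with one suffix dir and one object file.
with tempfile.TemporaryDirectory() as root:
    suffix = os.path.join(root, "a83")
    object_dir = os.path.join(suffix, "d41d8cd98f00b204e9800998ecf84a83")
    os.makedirs(object_dir)
    open(os.path.join(object_dir, "1349164800.00000.data"), "w").close()
    table = {}
    drain_updates([suffix], table)
    print(table[suffix])
```

Because only the suffix directories named in the queue are rehashed, the cost of an update is proportional to the number of changed suffixes rather than to the size of the whole partition.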

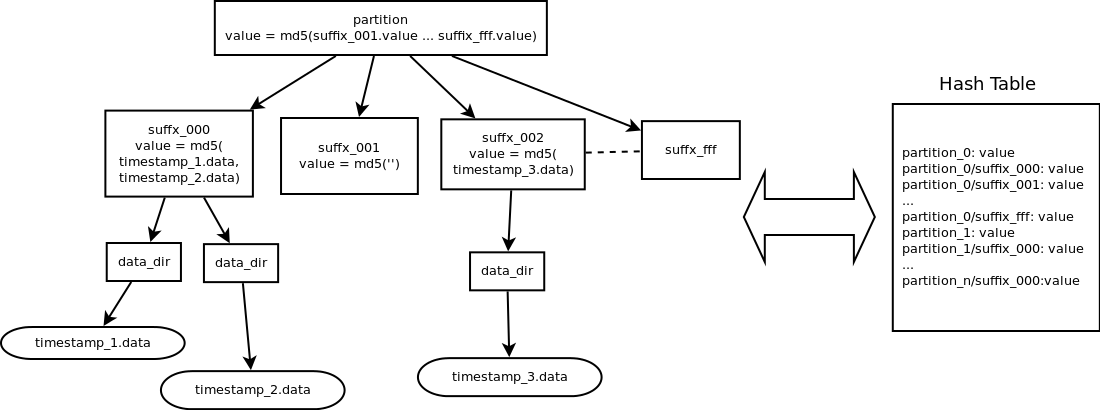

Hash Table

The hash table is a key-value store holding the {partition/suffix: hash} and {partition: hash} items of one disk. The {partition/suffix: hash} items are the same as the contents of the hashes.pkl file under the partition directory. The {partition: hash} item is the MD5 checksum of all {partition/suffix: hash} items of that partition. The structure is shown below.

The total number of items in a hash table can be on the order of one hundred million. We can calculate the approximate number of items using the formulas shown below.
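As an illustration of how a {partition: hash} item could be derived from the {partition/suffix: hash} items, here is a small sketch using a plain dict in place of the real key-value store. Hashing the suffix hashes in sorted key order is an assumption made so that both peers compute the same value; the function name `partition_hash` is hypothetical.

```python
import hashlib


def partition_hash(hash_table, partition):
    """MD5 over the suffix hashes of one partition, taken in sorted suffix order."""
    prefix = f"{partition}/"
    md5 = hashlib.md5()
    for key in sorted(k for k in hash_table if k.startswith(prefix)):
        md5.update(hash_table[key].encode("ascii"))
    return md5.hexdigest()


hash_table = {
    "1024/a83": "0cc175b9c0f1b6a831c399e269772661",
    "1024/b0c": "92eb5ffee6ae2fec3ad71c777531578f",
    "77/fff": "4a8a08f09d37b73795649038408b5f33",
}
hash_table["1024"] = partition_hash(hash_table, "1024")
print(hash_table["1024"])
```

The linear scan over all keys is only acceptable in a sketch; an ordered on-disk store would serve the same lookup as a range scan over the `1024/` prefix.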

    item_num ~= (partition_num * replica_num * suffix_num + partition_num * replica_num) / disk_num

    item_num ~= partition_num * replica_num * (1 + suffix_num) / disk_num

Since the maximum suffix_num is 16^3 = 4096:

    item_num ~= partition_num * replica_num * 4097 / disk_num

This formula assumes that all disks have the same weight. The item_num can be very large or quite small, depending on the configured partition_num, replica_num, and disk_num. For example, if partition_num is 2^18, replica_num is 3, and disk_num is 50, then item_num ~= 2^18 * 3 * 4097 / 50 ~= 64440238.
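The estimate can be checked with a few lines of Python. The partition count is read here as 2^18 = 262144, which is the value that reproduces the quoted result; the function name `estimate_item_num` is hypothetical.

```python
def estimate_item_num(partition_num, replica_num, disk_num, suffix_num=16 ** 3):
    """Approximate number of hash table items per disk, assuming equal disk weights."""
    return partition_num * replica_num * (1 + suffix_num) // disk_num


print(estimate_item_num(2 ** 18, 3, 50))  # 64440238
```

Since suffix_num is set to its maximum of 4096, this is an upper bound; a sparsely filled cluster would hold fewer suffix directories per partition.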

Given the size of the hash table, we cannot keep all of it in memory. We must store the hash table on disk and cache part of the data in memory, so we use an LSM-Tree and a Bloom filter to achieve this goal. The LSM-Tree (Log-Structured Merge-Tree) is a disk-based data structure designed to provide low-cost indexing for a file experiencing a high rate of record inserts (and deletes) over an extended period. The Bloom filter is a space-efficient probabilistic data structure used to test whether an element is a member of a set. Combining an LSM-Tree with a Bloom filter provides high performance for both write and read operations. Some open source projects, such as leveldb and nessDB, implement an LSM-Tree with a Bloom filter. We can use one of them to store the hash table.
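To make the Bloom filter idea concrete, here is a toy implementation. It is not the filter used by leveldb or nessDB; the class name, the bit array size, and the use of seeded MD5 digests as hash functions are all choices made for this illustration.

```python
import hashlib


class BloomFilter:
    """Toy Bloom filter: no false negatives, a small chance of false positives."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, key):
        # Derive num_hashes bit positions by salting the key with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.md5(f"{seed}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key):
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key))


index = BloomFilter()
index.add("1024/a83")
print(index.might_contain("1024/a83"))  # True
```

A negative answer from `might_contain` is definitive, so a lookup for a key that was never written can skip the disk read entirely; a positive answer still has to be confirmed against the on-disk data.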

Replication-Server

The replication-server is a REST API server that receives requests from peer nodes and from the control node. A request from a peer node asks to compare the hash of a partition; if the local hash of the partition differs from the remote hash, the replication-server returns all the hashes of its suffix directories in the response. A request from the control node asks to sync specific partitions or suffix directories, so the replication-server should handle this kind of request and generate jobs in the replication-queue. For example, after rebalancing the ring, some partitions need to be transported. A job that transports a partition can be split into several suffix transport jobs that are executed by different nodes.
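The peer comparison request could be handled along the lines of the sketch below. It leaves out the HTTP layer and uses a plain dict for the hash table; the function name `handle_compare` and the choice of an empty dict as the "hashes match" response are assumptions for illustration.

```python
def handle_compare(hash_table, partition, remote_partition_hash):
    """Return {} when the partition hashes match, else all local suffix hashes."""
    if hash_table.get(partition) == remote_partition_hash:
        return {}
    prefix = f"{partition}/"
    return {
        key[len(prefix):]: value
        for key, value in hash_table.items()
        if key.startswith(prefix)
    }


table = {"1024": "p1", "1024/a83": "h1", "1024/b0c": "h2", "77/fff": "h3"}
print(handle_compare(table, "1024", "p1"))     # {}
print(handle_compare(table, "1024", "other"))  # {'a83': 'h1', 'b0c': 'h2'}
```

In the common case where replicas agree, a single partition hash is exchanged and no suffix hashes need to travel over the network.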

Partition-Monitor

The partition-monitor is a daemon that runs at intervals. Its main tasks are listed below.

- Collect the partitions that need to be moved and put them into the replication-queue.

The partition-monitor compares all the partitions on disk with the ring. If a partition is a handoff partition or needs to be transported, the partition-monitor adds it to the replication-queue.

- Send requests to the replication-server of each peer node to compare hashes; if the hashes differ, put the affected partitions into the replication-queue.

A priority queue may be used here, so that the partition-monitor compares the most active partitions first.
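The priority queue mentioned above could be built on Python's `heapq` module, as in the following sketch. The class name `ReplicationQueue` and the use of a negated update count as the priority are assumptions; any measure of partition activity would work the same way.

```python
import heapq
import itertools


class ReplicationQueue:
    """Priority queue of partitions; lower priority values are served first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker that keeps insertion order

    def put(self, partition, priority):
        heapq.heappush(self._heap, (priority, next(self._counter), partition))

    def get(self):
        _priority, _order, partition = heapq.heappop(self._heap)
        return partition

    def __len__(self):
        return len(self._heap)


jobs = ReplicationQueue()
# Negate the recent update count so that the busiest partition comes out first.
jobs.put("1024", priority=-250)
jobs.put("77", priority=-3)
jobs.put("4096", priority=-90)
print([jobs.get() for _ in range(len(jobs))])  # ['1024', '4096', '77']
```

An urgent sync requested by the control node could be given a priority below every activity-based value so that it jumps ahead of routine comparisons.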

Suffix-Transporter

A daemon that executes the jobs in the replication-queue.
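A rough sketch of such a worker is given below. The job layout, the function names, and the rsync target string are hypothetical, and the rsync options shown are only a small illustrative subset. The actual transfer is injected as a callable so that the loop can be exercised without running rsync.

```python
import queue


def build_rsync_args(device_path, partition, suffixes, rsync_target):
    """Build an rsync command line for the given suffix dirs (illustrative flags only)."""
    sources = [f"{device_path}/objects/{partition}/{suffix}" for suffix in suffixes]
    return ["rsync", "--recursive", "--whole-file", *sources, rsync_target]


def run_transporter(replication_queue, sync):
    """Drain the queue, passing each job to sync(); return how many jobs succeeded."""
    done = 0
    while True:
        try:
            job = replication_queue.get_nowait()
        except queue.Empty:
            return done
        if sync(job):
            done += 1


replication_queue = queue.Queue()
replication_queue.put({"partition": "1024", "suffixes": ["a83", "b0c"]})
replication_queue.put({"partition": "77", "suffixes": []})

# Stand-in for the real transfer: a job with no suffixes counts as a failure here.
completed = run_transporter(replication_queue, sync=lambda job: bool(job["suffixes"]))
print(completed)  # 1
print(build_rsync_args("/srv/node/sdb1", "1024", ["a83"], "peer::object/sdb1/objects/1024/"))
```

A long-running daemon would block on the queue instead of returning when it is empty, and would probably requeue failed jobs; both are left out to keep the sketch short.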

Summary

The principle of this blueprint is to split the synchronous process of the object-replicator into asynchronous steps. With this change, replication becomes more flexible and controllable. The dedicated replication-server can take the REPLICATE requests off the object-server and can also receive requests from the control node. Different strategies can be applied to the hash-update-queue and the replication-queue to control replication. All of this is based on the Dynamo paper and is compatible with the current object-replicator.