Difference between revisions of "Obsolete:Overview"

m (Fifieldt moved page Overview to Obsolete:Overview: outdated)

Latest revision as of 19:05, 25 July 2013

Clustering, Globalization, and Scale-out Architecture Overview

This page was used to discuss the overall architecture at the first OpenStack Design Summit in August 2010, so the discussion here is likely to be outdated. More accurate information can be found on the Nova developer site, service architecture (http://nova.openstack.org/service.architecture.html) and the Swift developer site, architectural overview (http://swift.openstack.org/overview_architecture.html).

Live notes were taken for this topic at: http://etherpad.openstack.org/Clustering

The sections below describe two proposed distributed architectures. The first uses a hierarchical approach and the second uses a peer-to-peer approach.

Glossary

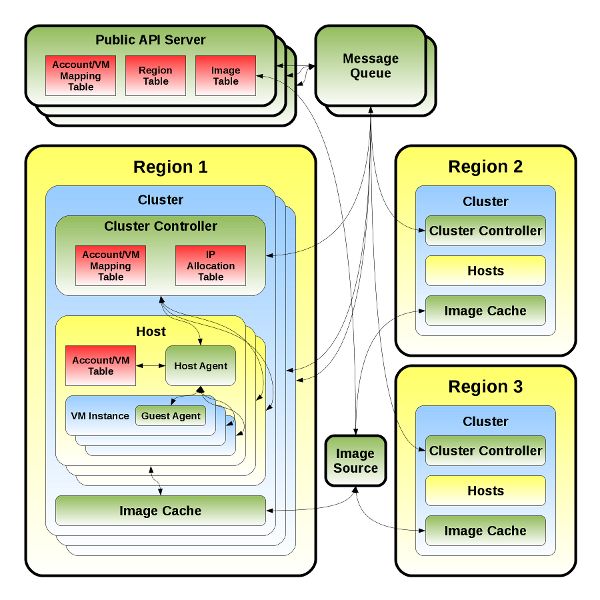

- Public API Servers - Known as the "Nucleus" in Cloud Servers v1 or "Cloud Controller" in other systems.

- Cluster - A group of physical host nodes. Known as a "Huddle" in Cloud Servers v1.

- Cluster Controller - Software running on each cluster to control the hosts within it. Known as the "QB" in Cloud Servers v1.

- Host - Individual physical host in a Cluster.

- Guest - VM instance running on a host.

Common Components

All green boxes represent pieces of software we must either write or use off the shelf. For example, the Message Queue may be RabbitMQ, but we'll most likely need to write our own host and guest agents. All red boxes represent some form of data storage, whether it is a simple in-memory key/value cache or a relational database. The data stored in the Cluster Controller and Public API Servers will most likely be caches of a subset of the Host table data, holding what is needed to make informed decisions such as where to route API requests or schedule new operations.
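As a rough illustration of caching a subset of the Host table for scheduling decisions, here is a minimal sketch. The column names (`host`, `cluster`, `free_ram_mb`, `kernel`), the sample rows, and the "most free RAM" policy are all assumptions made for the example; the page does not define a schema or a scheduling policy.

```python
# Hypothetical Host table rows; the real schema is not defined on this page.
HOST_TABLE = [
    {"host": "h1", "cluster": "c1", "free_ram_mb": 2048, "kernel": "2.6.32"},
    {"host": "h2", "cluster": "c1", "free_ram_mb": 8192, "kernel": "2.6.32"},
    {"host": "h3", "cluster": "c2", "free_ram_mb": 4096, "kernel": "2.6.35"},
]

# Only the fields a scheduler needs are kept in the cache (assumed set).
CACHED_FIELDS = ("host", "cluster", "free_ram_mb")


def build_cache(rows):
    """Project each Host row down to the cached subset of fields."""
    return [{key: row[key] for key in CACHED_FIELDS} for row in rows]


def pick_host(cache, ram_mb):
    """Return the cached entry with the most free RAM that fits, or None."""
    candidates = [row for row in cache if row["free_ram_mb"] >= ram_mb]
    if not candidates:
        return None
    return max(candidates, key=lambda row: row["free_ram_mb"])


cache = build_cache(HOST_TABLE)
choice = pick_host(cache, 3000)
```

With the sample rows above, a request for 3000 MB would be placed on `h2` in cluster `c1`, and the decision is made from the cache alone without querying the Hosts.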

Note that only Cluster Controllers talk to the Public API Servers (via the message queue). Host and Guest agents should never talk directly to the API servers. Connection aggregation such as this should be used wherever possible (Guests->Host, Hosts->Cluster Controller, Cluster Controllers->Public API Servers).
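The aggregation chain can be sketched as follows. This is only an illustration under assumptions: the class names, the `summary()` message shapes, and the "running guests" count are hypothetical, and direct method calls stand in for the message queue.

```python
from dataclasses import dataclass, field


@dataclass
class HostAgent:
    """Hypothetical host agent that collects reports from its guests."""
    name: str
    guest_status: dict = field(default_factory=dict)

    def report_guest(self, guest_id, status):
        # Guests talk only to their own host.
        self.guest_status[guest_id] = status

    def summary(self):
        # One aggregated message per host instead of one per guest.
        return {"host": self.name, "guests": dict(self.guest_status)}


@dataclass
class ClusterController:
    """Hypothetical controller that aggregates all hosts in a cluster."""
    name: str
    hosts: dict = field(default_factory=dict)

    def receive(self, host_summary):
        # Hosts talk only to their cluster controller.
        self.hosts[host_summary["host"]] = host_summary["guests"]

    def summary(self):
        # One aggregated message per cluster for the Public API Servers.
        running = sum(
            1
            for guests in self.hosts.values()
            for status in guests.values()
            if status == "running"
        )
        return {"cluster": self.name, "hosts": len(self.hosts),
                "running_guests": running}


host = HostAgent("h1")
host.report_guest("g1", "running")
host.report_guest("g2", "stopped")
controller = ClusterController("c1")
controller.receive(host.summary())
cluster_summary = controller.summary()
```

Each layer sees one connection per child rather than one per leaf, which is the point of the aggregation rule.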

The Image Cache and Source will most likely leverage existing technology such as the ibacks and Cloud Files, but they should be kept generic so that other (possibly external) image sources are supported. For example, a user may wish to specify an arbitrary URI from which to pull a custom image.
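One way to keep the image source generic is to dispatch on the URI scheme. The sketch below assumes a hypothetical registry (`register_image_source`, `fetch_image`) and placeholder fetchers that return strings instead of downloading anything; the `cloudfiles` scheme name and the example URI are invented for illustration.

```python
from urllib.parse import urlparse

# Hypothetical registry mapping a URI scheme to a fetcher callable.
_IMAGE_SOURCES = {}


def register_image_source(scheme, fetcher):
    """Register a callable that retrieves an image for the given scheme."""
    _IMAGE_SOURCES[scheme.lower()] = fetcher


def fetch_image(uri):
    """Look up the fetcher for the URI's scheme and call it."""
    scheme = urlparse(uri).scheme
    fetcher = _IMAGE_SOURCES.get(scheme)
    if fetcher is None:
        raise ValueError(f"no image source registered for scheme {scheme!r}")
    return fetcher(uri)


# Placeholder fetchers: a real one would stream the image into the cache.
register_image_source("cloudfiles", lambda uri: f"cloudfiles:{uri}")
register_image_source("https", lambda uri: f"http-download:{uri}")

result = fetch_image("https://images.example.com/custom.img")
```

New sources (internal or external) can then be added by registering another scheme, without changing the callers.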

Hierarchical

At a high level, this approach is similar to how DNS works: there is a set of Public API Servers that the Cluster Controllers register with, and requests coming into these API servers are routed to the appropriate Cluster (or multicast to many Clusters) to answer queries. Depending on how much data we can effectively cache from the Clusters at this layer, we should be able to answer some subset of queries directly without forwarding them to the Clusters. The Cluster Controllers act as an aggregation layer for all Hosts within a Cluster, so only 2-3 Cluster Controllers need to register with the Public API Servers (not every Host). This architecture will require at least four logical APIs, but they may share code in implementation. These are:

- External users to Public API Servers

- Public API Servers to Cluster Controllers

- Cluster Controllers to Hosts

- Hosts to Guest Agents

The requests may be answered at any layer if the information is available and up to date. In the case of a simple one-cluster setup, you could start Public API Servers alongside the Cluster Controllers.
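The registration, routing, and caching behaviour described in this section can be sketched roughly as below. Everything here is an assumption made for illustration: the class and method names, the time-based cache expiry, and the "ask every registered Cluster" fallback that stands in for a multicast. Direct calls replace the message queue.

```python
import time


class ClusterController:
    """Hypothetical controller that answers queries about its own guests."""

    def __init__(self, name, guests):
        self.name = name
        self._guests = guests  # guest_id -> status
        self.queries = 0       # how many requests reached this cluster

    def owns(self, guest_id):
        return guest_id in self._guests

    def guest_status(self, guest_id):
        self.queries += 1
        return self._guests[guest_id]


class PublicAPIServer:
    """Hypothetical API server that answers from cache when it can."""

    def __init__(self, cache_ttl=30.0, clock=time.monotonic):
        self._clusters = {}
        self._cache = {}  # guest_id -> (status, fetched_at)
        self._ttl = cache_ttl
        self._clock = clock

    def register(self, controller):
        # Cluster Controllers register themselves, much like DNS delegation.
        self._clusters[controller.name] = controller

    def guest_status(self, guest_id):
        now = self._clock()
        cached = self._cache.get(guest_id)
        if cached is not None and now - cached[1] < self._ttl:
            return cached[0]  # answered at this layer, nothing forwarded
        # Stand-in for a multicast: ask each registered Cluster in turn.
        for controller in self._clusters.values():
            if controller.owns(guest_id):
                status = controller.guest_status(guest_id)
                self._cache[guest_id] = (status, now)
                return status
        raise KeyError(guest_id)


now = [0.0]  # fake clock so the example is deterministic
api = PublicAPIServer(cache_ttl=10.0, clock=lambda: now[0])
c1 = ClusterController("c1", {"g1": "running"})
c2 = ClusterController("c2", {"g2": "stopped"})
api.register(c1)
api.register(c2)
first = api.guest_status("g2")   # forwarded to c2
second = api.guest_status("g2")  # served from the cache
```

In a one-cluster setup the same two classes could simply run in one process, which matches the note about starting Public API Servers alongside the Cluster Controllers.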

The original Open Office Draw file can be found at: attachment:cloud_servers_v2.odg


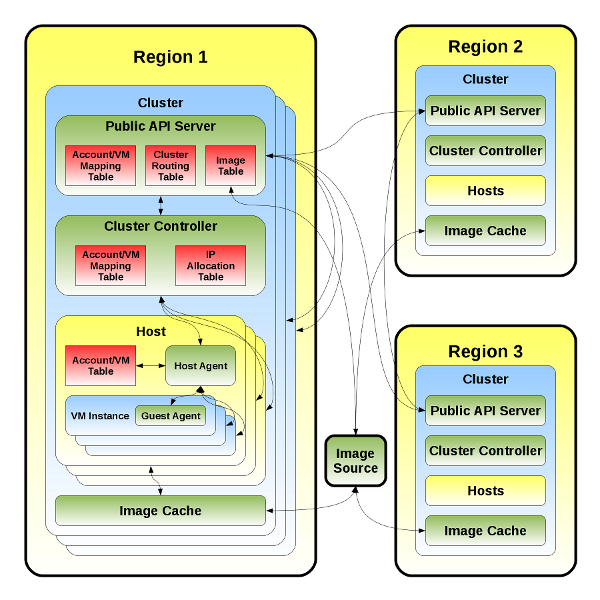

Peer-to-peer

A peer-to-peer model would look more like SMTP, IRC, or even some network routing protocols (BGP, RIP, ...). Cluster Controllers would peer with one another and advertise, through aggregated resource notifications, what resources are available locally or via their other peers. The graph of Clusters would not need to be complete (fully connected); it could be loosely connected. The Public API Servers and Cluster Controller are logically separate in their function, but could be combined into the same piece of software. This combination would speak both the Public API and the peering API and could either answer the request directly or route it to the cluster that can answer it. This may take the form of a proxied request or a redirect. For example, if the request came in as an HTTP request, a 301 or 302 Redirect response could be sent with the appropriate cluster to contact.
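A minimal sketch of the redirect variant follows. It simplifies heavily: instead of exchanging aggregated resource notifications, each controller walks the peer graph breadth-first to find a cluster with enough capacity. The class, the `free_slots` field, the status codes other than 302, and the `example.com` URLs are all assumptions for illustration.

```python
from collections import deque


class ClusterController:
    """Hypothetical controller speaking both the public and peering APIs."""

    def __init__(self, name, url, free_slots):
        self.name = name
        self.url = url
        self.free_slots = free_slots
        self.peers = []

    def peer_with(self, other):
        # Peering is symmetric; the graph need not be fully connected.
        self.peers.append(other)
        other.peers.append(self)

    def find_cluster(self, needed):
        """Breadth-first search over peers for a cluster with capacity."""
        seen = {self.name}
        queue = deque([self])
        while queue:
            node = queue.popleft()
            if node.free_slots >= needed:
                return node
            for peer in node.peers:
                if peer.name not in seen:
                    seen.add(peer.name)
                    queue.append(peer)
        return None

    def handle_create(self, needed):
        """Answer locally, redirect to a peer, or report no capacity."""
        target = self.find_cluster(needed)
        if target is None:
            return 503, {}
        if target is self:
            return 200, {}
        return 302, {"Location": target.url}


a = ClusterController("a", "https://a.example.com/servers", 0)
b = ClusterController("b", "https://b.example.com/servers", 0)
c = ClusterController("c", "https://c.example.com/servers", 4)
a.peer_with(b)  # a and c are connected only through b
b.peer_with(c)
response = a.handle_create(2)
```

Here `a` has no direct link to `c`, yet it can still redirect the client there, which illustrates why a loosely connected graph is sufficient. A proxied variant would forward the request to `target` and relay the answer instead of returning the `Location` header.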

The original Open Office Draw file can be found at: attachment:cloud_servers_v2_peer.odg
