Nova/Process

If you are new to Nova, please read this first: Nova/Mentoring

Contents

- 1 Dates overview

- 2 How do I get my code merged?

- 3 Nova Process Mission

- 4 FAQs

- 4.1 Why bother with all this process?

- 4.2 Why don't you remove old process?

- 4.3 Why are specs useful?

- 4.4 If we have specs, why still have blueprints?

- 4.5 Why do we have priorities?

- 4.6 Why is there a Feature Freeze (and String Freeze) in Nova?

- 4.7 Why is there a non-priority Feature Freeze in Nova?

- 4.8 Why do you still use Launchpad?

- 4.9 When should I submit my spec?

- 4.10 How can I get my code merged faster?

- 5 Process Evolution Ideas

Dates overview

For Mitaka, please see: Nova/Mitaka_Release_Schedule

For Liberty, please see: Nova/Liberty_Release_Schedule

Feature Freeze

This effort is primarily to help the horizontal teams prepare their items for release, while at the same time giving developers time to focus on stabilising what is currently in master, and encouraging users and packagers to perform tests (automated and manual) on the release, to spot any major bugs.

As such we have the following processes:

- https://wiki.openstack.org/wiki/FeatureProposalFreeze

- make sure all code is up for review

- so we can optimise for completed features, not lots of half completed features

- https://wiki.openstack.org/wiki/FeatureFreeze

- make sure all feature code is merged

- https://wiki.openstack.org/wiki/StringFreeze

- give translators time to translate all our strings

- Note: debug logs are no longer translated

- https://wiki.openstack.org/wiki/DepFreeze

- time to co-ordinate the final list of deps, and give packagers time to package them

- generally it is also quite destabilising to take upgrades (beyond bug fixes) this late

We align with this in Nova and the dates for this release are stated above.

As with all processes here, there are exceptions. But the exceptions at this stage need to be discussed with the horizontal teams that might be affected by changes beyond this point, and as such are discussed with one of the OpenStack release managers.

Spec and Blueprint Approval Freeze

This is a (mostly) Nova specific process.

Why do we have a Spec Freeze:

- specs take a long time to review, keeping it open distracts from code reviews

- keeping them "open" and being slow at reviewing the specs (or just ignoring them) really annoys the spec submitters

- we generally have more code submitted than we can review; this time bounding is a way to limit the number of submissions

By the freeze date, we expect this to also be the complete list of approved blueprints for liberty:

https://blueprints.launchpad.net/nova/liberty

The date listed above is when we expect all specifications for Liberty to be merged and displayed here: http://specs.openstack.org/openstack/nova-specs/specs/liberty/approved/

New in Liberty, we will keep the backlog open for submission at all times. Note: the focus is on accepting and agreeing problem statements as being in scope, rather than queueing up work items for the next release. We are still working on a new lightweight process for getting specs out of the backlog and approved for a particular release. For more details on backlog specs, please see: http://specs.openstack.org/openstack/nova-specs/specs/backlog/index.html

Also new in Liberty, we will allow people to submit Mitaka specs from liberty-2 (rather than liberty-3 as normal).

There can be exceptions, usually for an urgent feature request that comes up after the initial deadline. These will generally be discussed at the weekly Nova meeting, by adding the spec or blueprint to the appropriate place in the meeting agenda here (ideally make yourself available to discuss the blueprint, or alternatively make your case on the ML before the meeting): https://wiki.openstack.org/wiki/Meetings/Nova

Non-priority Feature Freeze

This is a Nova specific process.

This only applies to low priority blueprints in this list: https://blueprints.launchpad.net/nova/liberty

We currently have a very finite amount of review bandwidth. In order to make code review time for the agreed community wide priorities, we have to not do some other things. To this end, we are reserving liberty-3 for priority features and bug fixes. As such, we intend not to merge any non-priority things during liberty-3, so around liberty-2 is the "Feature Freeze" for blueprints that are not a priority for liberty.

For liberty, we are not aligning the Non-priority Feature Freeze with the tagging of liberty-2. That means the liberty-2 tag will not include some features that merge later in the week. This means, we only require the code to be approved before the end of July 30th, we don't require it to be merged by that date. This should help stop any gate issues disrupting our ability to merge all the code that we have managed to get reviewed in time. Ideally all code should be merged by the end of July 31st, but the state of the gate will determine how possible that is.

You can see the list of priorities for each release: http://specs.openstack.org/openstack/nova-specs/#priorities

For things that are very close to merging, it's possible they might get an exception for one week after the freeze date, provided the patches get enough +2s from the core team to get the code merged. But we expect this list to be zero, if everything goes to plan (no massive gate failures, etc). For details of the process, see: http://lists.openstack.org/pipermail/openstack-dev/2015-July/070920.html

Exception process:

- Please add your request here: https://etherpad.openstack.org/p/liberty-nova-non-priority-feature-freeze (ideally with core reviewers to sponsor your patch, normally the folks who have already viewed those patches)

- make sure you make your request before the end of Wednesday 5th August

- nova-drivers will meet to decide what gets an exception (just like they did last release: http://lists.openstack.org/pipermail/openstack-dev/2015-February/056208.html)

- an initial list of exceptions (probably just a PTL compiled list at that point) will be available for discussion during the Nova meeting on Thursday 6th August

- the aim is to merge the code for all exceptions by the end of Monday 10th August

Alternatives:

- It was hoped to make this a continuous process using "slots" to control what gets reviewed, but this was rejected by the community when it was last discussed. There is hope this can be resurrected to avoid the "lumpy" nature of this process.

- Currently the runways/kanban ideas are blocked on us adopting something like phabricator that could support such workflows

String Freeze

NOTE: this is still a provisional idea

There are general guidelines here: https://wiki.openstack.org/wiki/StringFreeze

But below is an experiment for Nova during liberty, to trial a new process. There are four views onto this process.

First, the user point of view:

- Would like to see untranslated strings, rather than hiding error/info/warn log messages as debug

Second, the translators:

- Translators will start translation without string freeze, just after feature freeze.

- Then we have a strict string freeze date (around RC1 date)

- After that, at least 10 days to finish up the translations before the final release

Third, the docs team:

- Config string updates often mean there is a DocImpact and docs need updating

- best to avoid those during feature freeze, where possible

Fourth, the developer point of view:

- Add any translated strings before Feature Freeze

- Post Feature Freeze, allow string changes where an untranslated string is better than no string

- i.e. allow new log message strings, until the hard freeze

- Post Feature Freeze, have a soft string freeze, try not to change existing strings, where possible

- Note: moving a string and re-using an existing string is fine, as the tooling deals with that automatically

- Post Hard String Freeze, there should be no extra strings to translate

- Assume any added strings will not be translated

- Send email about the string freeze exception in this case only, but there should be zero of these

So, what has changed from https://wiki.openstack.org/wiki/StringFreeze? Well:

- no need to block new strings until much later in the cycle

- should stop the need to rework bug fixes to remove useful log messages

- instead, just accept the idea of untranslated strings being better than no strings in those cases

For liberty, we will call 21st September the hard freeze date, as we expect RC1 to be cut sometime after 21st September. Note the date is fixed; it is not aligned with the cutting of RC1.

This means we must cut another tarball (RC2 or higher) at some point after 5th October to include new translations, even if there are no more bug fixes, to give time before the final release on 13th-16th October.
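The date arithmetic behind this schedule can be checked with a short sketch. The year 2015 is an assumption (the text gives only day and month, although the mailing list threads linked elsewhere on this page are from 2015), and the constant names are illustrative only.

```python
from datetime import date

# Assumption: the Liberty dates fall in 2015; the text gives day and month only.
HARD_STRING_FREEZE = date(2015, 9, 21)
EARLIEST_TRANSLATION_TARBALL = date(2015, 10, 5)
EARLIEST_FINAL_RELEASE = date(2015, 10, 13)

# Translators are promised at least 10 days after the strict string freeze.
MIN_TRANSLATION_DAYS = 10

# Days translators have between the hard freeze and the translation tarball.
translation_window = (EARLIEST_TRANSLATION_TARBALL - HARD_STRING_FREEZE).days

# Days left between that tarball and the earliest final release date.
days_before_release = (EARLIEST_FINAL_RELEASE - EARLIEST_TRANSLATION_TARBALL).days

print(translation_window >= MIN_TRANSLATION_DAYS)
print(translation_window, days_before_release)
```

Under these assumptions the window between the hard freeze and 5th October comfortably covers the 10 days the translators need.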

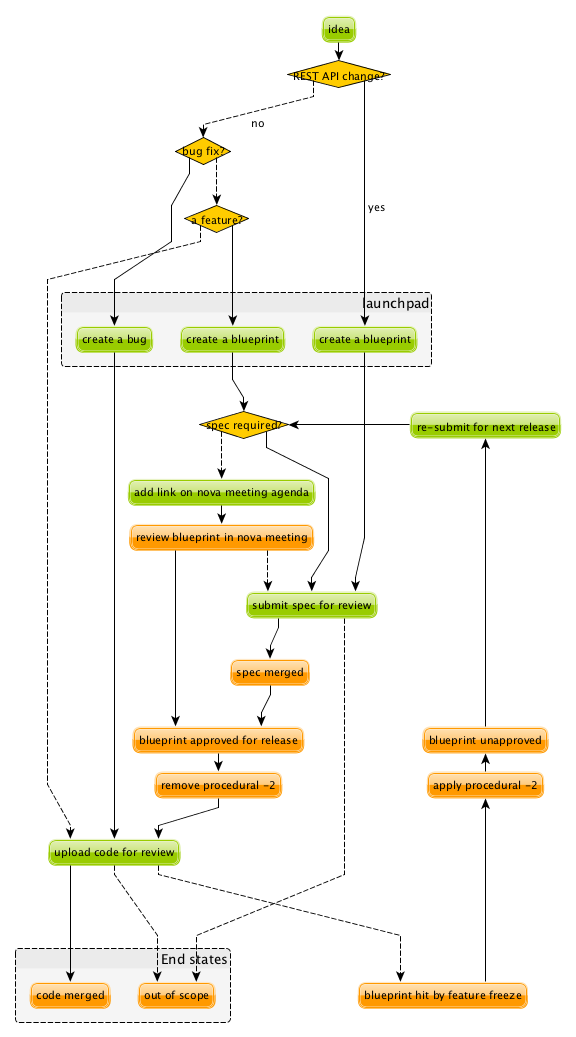

How do I get my code merged?

OK, so you are new to Nova, and you have been given a feature to implement. How do you make that happen?

You can get most of your questions answered here:

But let's put a Nova specific twist on things...

Overview

Where do you track bugs?

We track bugs here:

If you fix an issue, please raise a bug so others who spot that issue can find the fix you kindly created for them.

Also, before submitting your patch it's worth checking to see if someone has already fixed it for you (Launchpad helps you with that, a little, when you create the bug report).

When do I need a blueprint vs a spec?

For more details see:

To understand this question, we need to understand why blueprints and specs are useful.

But here is the rough idea:

- if it needs a spec, it will need a blueprint.

- if it's an API change, it needs a spec.

- if it's a single small patch that touches a small amount of code, with limited deployer and doc impact, it probably doesn't need a spec.

If you are unsure, please ask johnthetubaguy on IRC, or one of the other nova-drivers.
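The rough rules above can be written down as a small decision helper. This is only a sketch: the function, its parameters and the enum are hypothetical (they are not part of any Nova tooling), and it assumes the change is a feature rather than a bug fix.

```python
from enum import Enum


class Paperwork(Enum):
    BLUEPRINT_AND_SPEC = "blueprint and spec"
    BLUEPRINT_ONLY = "blueprint only"
    ASK_NOVA_DRIVERS = "ask nova-drivers"


def required_paperwork(api_change: bool,
                       single_small_patch: bool,
                       limited_deployer_and_doc_impact: bool) -> Paperwork:
    """Apply the rough rules from the list above (hypothetical helper)."""
    if api_change:
        # An API change needs a spec, and anything with a spec needs a blueprint.
        return Paperwork.BLUEPRINT_AND_SPEC
    if single_small_patch and limited_deployer_and_doc_impact:
        # A small, low-impact patch probably does not need a spec.
        return Paperwork.BLUEPRINT_ONLY
    # Everything else is a judgement call, so ask.
    return Paperwork.ASK_NOVA_DRIVERS


print(required_paperwork(api_change=True,
                         single_small_patch=True,
                         limited_deployer_and_doc_impact=True).value)
```

The fallback deliberately returns "ask" rather than guessing, since the rules above are only a rough guide.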

How do I get my blueprint approved?

So you need your blueprint approved? Here is how:

- if you don't need a spec, please add a link to your blueprint to the agenda for the next nova meeting: https://wiki.openstack.org/wiki/Meetings/Nova

- be sure your blueprint description has enough context for the review in that meeting.

- if you need a spec, then please submit a nova-spec for review, see: http://docs.openstack.org/infra/manual/developers.html

Got any more questions? Contact johnthetubaguy or one of the other nova-specs-core who are awake at the same time as you. IRC is best as you will often get an immediate response; if they are too busy, send them an email.

Why are the reviewers being mean to me?

They don't mean to be mean, honest! They are just trying to make sure that you are solving the problem you intend, you are not creating problems for other users and that the code is maintainable.

TODO - need more details

My code review seems to have got stuck, what can I do?

So you have a -1 or a -2 but the reviewer is not responding (or the patch author is not responding) or you are failing to reach common ground, what can be done?

You can raise it on IRC or via email. If that doesn't work, add it into the agenda for the next nova meeting you are able to attend: https://wiki.openstack.org/wiki/Meetings/Nova

Please note: if you have not had any reviews at all, please don't ping core reviewers on IRC or via email. This is about reviews that have not been revisited after a previous negative review. Instead please read: https://wiki.openstack.org/wiki/Nova/Liberty_Release_Schedule#How_can_I_get_my_code_merged_faster.3F

If your code is not getting any attention, check the following:

- nova is a big project, be aware of the average wait times

- put your blueprint in the commit message

- ensure your blueprint is marked as NeedsCodeReview if you are finished

- ensure the whiteboard on the blueprint is easy to understand in terms of what patches need reviewing (ideally all in a single gerrit topic)

- or put a bug in your commit message

- if it's a bug, share the fact you have fixed an issue by raising a bug in Launchpad

- make sure it has the correct priority set and bug tag set

- for more details see:

- Is your patch for a priority, or could it get recommended by a subteam, or is it a trivial fix?

- if it does, consider adding your patch in here: https://etherpad.openstack.org/p/liberty-nova-priorities-tracking

- if none of the above apply, reach out on IRC for advice on how you get more attention for your patch
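As a quick self-check before asking for attention, the sketch below looks for a blueprint or bug reference in a commit message. It assumes the usual OpenStack commit message footers (`Implements: blueprint <name>` and `Closes-Bug:`, `Partial-Bug:` or `Related-Bug:` followed by a bug number); the helper name, the blueprint name and the bug number in the example are made up.

```python
import re

# Assumption: footers follow the common OpenStack commit message conventions.
BLUEPRINT_RE = re.compile(
    r"^(?:Implements:[ \t]*)?blueprint[ \t]+([a-z0-9][a-z0-9-]*)[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)
BUG_RE = re.compile(
    r"^(Closes|Partial|Related)-Bug:[ \t]*#?(\d+)[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def tracking_references(commit_message: str) -> dict:
    """Return the blueprint names and bug numbers referenced in footer lines."""
    return {
        "blueprints": BLUEPRINT_RE.findall(commit_message),
        "bugs": [number for _kind, number in BUG_RE.findall(commit_message)],
    }


# Hypothetical commit message, for illustration only.
example = """Add example feature

Longer description of the change.

Implements: blueprint example-feature
Closes-Bug: #1234567
"""

print(tracking_references(example))
```

A commit message that yields two empty lists is a hint that the patch is not linked to anything reviewers track.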

Nova Process Mission

This section takes a high level look at the guiding principles behind the Nova process.

Open

Our mission is to have:

- Open Source

- Open Design

- Open Development

- Open Community

We have to work out how to keep communication open in all areas. We need to be welcoming and mentor new people, and make it easy for them to pick up the knowledge they need to get involved with OpenStack. For more info on Open, please see: https://wiki.openstack.org/wiki/Open

Interoperable API, supporting a vibrant ecosystem

An interoperable API that gives users on-demand access to compute resources is at the heart of Nova's mission: http://docs.openstack.org/developer/nova/project_scope.html#mission

Nova has a vibrant ecosystem of tools built on top of the current Nova API. All features should be designed to work with all technology combinations, so the feature can be adopted by our ecosystem. If a new feature is not adopted by the ecosystem, it will make it hard for your users to make use of those features, defeating most of the reason to add the feature in the first place. The microversion system allows users to isolate themselves

This is a very different aim to being "pluggable" or wanting to expose all capabilities to end users. At the same time, it is not just a "lowest common denominator" set of APIs. It should be discoverable which features are available, and while no implementation details should leak to the end users, purely admin concepts may need to understand technology specific details that back the interoperable and more abstract concepts that are exposed to the end user. This is a hard goal, and one area we currently don't do well is isolating image creators from these technology specific details.

Smooth Upgrades

As part of our mission for a vibrant ecosystem around our APIs, we want to make it easy for those deploying Nova to upgrade with minimal impact to their users. Here is the scope of Nova's upgrade support:

- upgrade from any commit, to any future commit, within the same major release

- only support upgrades between N and N+1 major versions, to reduce technical debt relating to upgrades
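The upgrade scope above can be expressed as a one-line check. This is a minimal sketch that assumes releases are identified by an integer major version (a hypothetical representation), and it ignores commit ordering within a release.

```python
def upgrade_supported(from_major: int, to_major: int) -> bool:
    """True if the upgrade falls within the stated scope (hypothetical helper)."""
    # Same major release: any commit to any later commit is in scope.
    # Different major release: only N to N+1 is in scope, so skipping a
    # release or going backwards is not supported.
    return to_major in (from_major, from_major + 1)


print(upgrade_supported(13, 14))  # adjacent releases
print(upgrade_supported(13, 15))  # skips a release
```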

Here are some of the things we require developers to do, to help with upgrades:

- when replacing an existing feature or configuration option, make it clear how to transition to any replacement

- deprecate configuration options and features before removing them

- i.e. continue to support and test features for at least one release before they are removed

- this gives time for operator feedback on any removals

- End User API will always be kept backwards compatible

Interaction goals

When thinking about the importance of process, we should take a look at: http://agilemanifesto.org

With that in mind, let's look at how we want different members of the community to interact. Let's start with looking at issues we have tried to resolve in the past (currently in no particular order). We must:

- have a way for everyone to review blueprints and designs, including allowing for input from operators and all types of users (keep it open)

- take care to not expand Nova's scope any more than absolutely necessary

- ensure we get sufficient focus on the core of Nova so that we can maintain or improve the stability and flexibility of the overall codebase

- support any API we release approximately for ever. We currently release every commit, so we're motivated to get the API right first time

- avoid low priority blueprints slowing work on high priority work, without blocking those forever

- focus on a consistent experience for our users, rather than ease of development

- optimise for completed blueprints, rather than more half completed blueprints, so we get maximum value for our users out of our review bandwidth

- focus efforts on a subset of patches to allow our core reviewers to be more productive

- set realistic expectations on what can be reviewed in a particular cycle, to avoid sitting in an expensive rebase loop

- be aware of users that do not work on the project full time

- be aware of users that are only able to work on the project at certain times that may not align with the overall community cadence

- discuss designs for non-trivial work before implementing it, to avoid the expense of late-breaking design issues

FAQs

Why bother with all this process?

We are a large community, spread across multiple timezones, working with several horizontal teams. Good communication is a challenge and the processes we have are mostly there to try and help fix some communication challenges.

If you have a problem with a process, please engage with the community, discover the reasons behind our current process, and help fix the issues you are experiencing.

Why don't you remove old process?

We do! For example, in Liberty we stopped trying to predict the milestones when a feature will land.

As we evolve, it is important to unlearn old habits and explore if things get better if we choose to optimise for a different set of issues.

Why are specs useful?

Spec reviews allow anyone to step up and contribute to reviews, just like with code. Before we used gerrit, it was a very messy review process, that felt very "closed" to most people involved in that process.

As Nova has grown in size, it can be hard to work out how to modify Nova to meet your needs. Specs are a great way of having that discussion with the wider Nova community.

For Nova to be a success, we need to ensure we don't break our existing users. The spec template helps focus the mind on the impact your change might have on existing users and gives an opportunity to discuss the best way to deal with those issues.

However, there are some pitfalls with the process. Here are some top tips to avoid them:

- keep it simple. Shorter, simpler, more decomposed specs are much quicker to review and merge (just like code patches).

- specs can help with documentation but they are only intended to document the design discussion rather than document the final code.

- don't add details that are best reviewed in code, it's better to leave those things for the code review.

If we have specs, why still have blueprints?

We use specs to record the design agreement, we use blueprints to track progress on the implementation of the spec.

Currently, in Nova, specs are only approved for one release, and must be re-submitted for each release you want to merge the spec, although that is currently under review.

Why do we have priorities?

To be clear, there is no "nova dev team manager", we are an open team of professional software developers, that all work for a variety of (mostly competing) companies that collaborate to ensure the Nova project is a success.

Over time, a lot of technical debt has accumulated, because there was a lack of collective ownership to solve those cross-cutting concerns. Before the Kilo release, it was noted that progress felt much slower, because we were unable to get appropriate attention on the architectural evolution of Nova. This was important, partly for major concerns like upgrades and stability. We agreed it's something we all care about and it needs to be given priority to ensure that these things get fixed.

Since Kilo, priorities have been discussed at the summit. This turns into a spec review which eventually means we get a list of priorities here: http://specs.openstack.org/openstack/nova-specs/#priorities

Allocating our finite review bandwidth to these efforts means we have to limit the reviews we do on non-priority items. This is mostly why we now have the non-priority Feature Freeze. For more on this, see below.

Blocking a priority effort is one of the few widely acceptable reasons to block someone adding a feature. One of the great advantages of being more explicit about that relationship is that people can step up to help review and/or implement the work that is needed to unblock the feature they want to get landed. This is a key part of being an Open community.

Why is there a Feature Freeze (and String Freeze) in Nova?

The main reason Nova has a feature freeze is that it gives people working on docs and translations time to sync up with the latest code. Traditionally this happens at the same time across multiple projects, so the docs are synced across what used to be called the "integrated release".

We also use this time period as an excuse to focus our development efforts on bug fixes, ideally lower risk bug fixes, and improving test coverage.

In theory, with a waterfall hat on, this would be a time for testing and stabilisation of the product. In Nova we have a much stronger focus on keeping every commit stable, by making use of extensive continuous testing. In reality, we frequently see the biggest influx of fixes in the few weeks after the release, as distributions do final testing of the released code.

It is hoped that the work on Feature Classification will lead us to better understand the levels of testing of different Nova features, so we will be able to reduce any dependency between Feature Freeze and regression testing. It is also likely that the move away from "integrated" releases will help find a more developer friendly approach to keep the docs and translations in sync.

Why is there a non-priority Feature Freeze in Nova?

We have already discussed why we have priority features.

The rate at which code can be merged to Nova is primarily constrained by the amount of time able to be spent reviewing code. Given this, earmarking review time for priority items means depriving it from non-priority items.

The simplest way to make space for the priority features is to stop reviewing and merging non-priority features for a whole milestone. The idea being developers should focus on bug fixes and priority features during that milestone, rather than working on non-priority features.

A known limitation of this approach is developer frustration. Many developers are not being given permission to review code, work on bug fixes or work on priority features, and so feel very unproductive upstream. An alternative approach of "slots" or "runways" has been considered, that uses a kanban style approach to regulate the influx of work onto the review queue. We are yet to get agreement on a more balanced approach, so the existing system is being continued to ensure priority items are more likely to get the attention they require.

Why do you still use Launchpad?

We are actively looking for an alternative to Launchpad's bugs and blueprints.

Originally the idea was to create Storyboard. However the development has stalled. A more likely front runner is this: http://phabricator.org/applications/projects/

When should I submit my spec?

Ideally we want to get all specs for a release merged before the summit. For things that we can't get agreement on, we can then discuss those at the summit. There will always be ideas that come up at the summit and need to be finalised after the summit. This causes a rush which is best avoided.

How can I get my code merged faster?

So no-one is coming to review your code, how do you speed up that process?

Firstly, make sure you are following the above process. If it's a feature, make sure you have an approved blueprint. If it's a bug, make sure it is triaged, has its priority set correctly, has the correct bug tag and is marked as in progress. If the blueprint has all the code up for review, change it from Started into NeedsCodeReview so people know only reviews are blocking you, and make sure it hasn't accidentally got marked as implemented.

Secondly, if you have a negative review (-1 or -2) and you responded to that in a comment or uploading a new change with some updates, but that reviewer hasn't come back for over a week, it's probably a good time to reach out to the reviewer on IRC (or via email) to see if they could look again now you have addressed their comments. If you can't get agreement, and your review gets stuck (i.e. requires mediation), you can raise your patch during the Nova meeting and we will try to resolve any disagreement.

Thirdly, is it in merge conflict with master or are any of the CI tests failing? Particularly any third-party CI tests that are relevant to the code you are changing. If you're fixing something that only occasionally failed before, maybe recheck a few times to prove the tests stay passing. Without green tests, reviewers tend to move on and look at the other patches that have the tests passing.

OK, so you have followed all the process (i.e. your patches are getting advertised via the project's tracking mechanisms), and your patches either have no reviews, or only positive reviews. Now what?

Have you considered reviewing other people's patches? Firstly, participating in the review process is the best way for you to understand what reviewers are wanting to see in the code you are submitting. As you get more practiced at reviewing it will help you to write "merge-ready" code. Secondly, if you help review other people's code and help get their patches ready for the core reviewers to add a +2, it will free up a lot of non-core and core reviewer time, so they are more likely to get time to review your code. For more details, please see: Nova/Mentoring#Why_do_code_reviews_if_I_am_not_in_nova-core.3F

Please note, I am not recommending you go to ask people on IRC or via email for reviews. Please try to get your code reviewed using the above process first. In many cases multiple direct pings generate frustration on both sides and that tends to be counter productive.

Now you have got your code merged, let's make sure you don't need to fix this bug again. The fact the bug exists means there is a gap in our testing. Your patch should have included some good unit tests to stop the bug coming back. But don't stop there, maybe it's time to add tempest tests, to make sure your use case keeps working? Maybe you need to set up a third party CI so your combination of drivers will keep working? Getting that extra testing in place should stop a whole heap of bugs, again giving reviewers more time to get to the issues or features you want to add in the future.

Process Evolution Ideas

We are always evolving our process as we try to improve and adapt to the changing shape of the community. Here we discuss some of the ideas, along with their pros and cons.

Splitting out the virt drivers (or other bits of code)

Currently, Nova doesn't have strong enough interfaces to split out the virt drivers, scheduler or REST API. This is seen as the key blocker. Let's look at both sides of the debate here.

Reasons for the split:

- can have separate core teams for each repo

- this leads to quicker turn around times, largely due to focused teams

- splitting out things from core means less knowledge required to become core in a specific area

Reasons against the split:

- loss of interoperability between drivers

- this is a core part of Nova's mission, to have a single API across all deployments, and a strong ecosystem of tools and apps built on that

- we can overcome some of this with stronger interfaces and functional tests

- new features often need changes in the API and virt driver anyway

- the new "depends-on" can make these cross-repo dependencies easier

- loss of code style consistency across the code base

- fear of fragmenting the nova community, leaving few to work on the core of the project

- could work in subteams within the main tree

TODO - need to complete analysis

Subteam recommendation as a +2

There are groups of people with great knowledge of particular bits of the code base. It may be a good idea to give their recommendation of a merge more weight. In addition, having the subteam focus review efforts on a subset of patches should help concentrate the nova-core reviews they get, and increase the velocity of getting code merged.

The first part is for subgroups to show they can do a great job of recommending patches. This is starting in here: https://etherpad.openstack.org/p/liberty-nova-priorities-tracking

Ideally this would be done with gerrit user "tags" rather than an etherpad. There are some investigations by sdague in how feasible it would be to add tags to gerrit.

Stop having to submit a spec for each release

As mentioned above, we use blueprints for tracking, and specs to record design decisions. Targeting specs to a specific release is a heavyweight solution and blurs the lines between specs and blueprints. At the same time, we don't want to lose the opportunity to revise existing blueprints. Maybe there is a better balance?

What about this kind of process:

- backlog has these folders:

- backlog/incomplete - merge a partial spec

- backlog/complete - merge complete specs (remove tracking details, such as assignee part of the template)

- ?? backlog/expired - specs are moved here from incomplete or complete when they no longer seem to be given attention (after 1 year, by default)

- <release>/implemented - when a spec is complete it gets moved into the release directory and possibly updated to reflect what actually happened

- there will no longer be a per-release approved spec list

To get your blueprint approved:

- add it to the next nova meeting

- if a spec is required, update the URL in the blueprint to point to the merged spec

- ensure there is an assignee in the blueprint

- a day before the meeting, a note is sent to the ML to review the list before the meeting

- discuss any final objections in the nova-meeting

- this may result in a request to refine the spec, if things have changed since it was merged

- trivial cases can be approved in advance by a nova-driver, so not all folks need to go through the meeting

This still needs more thought, but should decouple the spec review from the release process. It is also more compatible with a runway style system, that might be less focused on milestones.

Runways

Runways are a form of Kanban, where we look at optimising the flow through the system, by ensuring we focus our efforts on reviewing a specific subset of patches.

The idea goes something like this:

- define some states, such as: design backlog, design review, code backlog, code review, test+doc backlog, complete

- blueprints must be in one of the above states

- large or high priority bugs may also occupy a code review slot

- a core reviewer moves items between the slots

- must not violate the rules on the number of items in each state

- states have a limited number of slots, to ensure focus

- certain percentage of slots are dedicated to priorities, depending on point in the cycle, and the type of the cycle, etc
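To make the slot idea concrete, here is a toy model of such a board. It is only a sketch under stated assumptions: the class, its method names and the slot limits are hypothetical, a limit of `None` is used to mean "unlimited", and the share of slots reserved for priorities is not modelled.

```python
class RunwayBoard:
    """Toy kanban board with a slot limit per state (not real Nova tooling)."""

    def __init__(self, slot_limits):
        # Maps state name -> maximum number of items, or None for no limit.
        self.slot_limits = dict(slot_limits)
        # Maps blueprint name -> the state it currently occupies.
        self.items = {}

    def count(self, state):
        """Number of blueprints currently in the given state."""
        return sum(1 for current in self.items.values() if current == state)

    def move(self, blueprint, state):
        """Place or move a blueprint, refusing to exceed the state's limit."""
        if state not in self.slot_limits:
            raise ValueError(f"unknown state: {state}")
        if self.items.get(blueprint) == state:
            return  # already there, nothing to do
        limit = self.slot_limits[state]
        if limit is not None and self.count(state) >= limit:
            raise RuntimeError(f"no free slot in {state!r}")
        self.items[blueprint] = state


# Made-up limits: backlogs are unbounded, review states are kept small.
board = RunwayBoard({
    "design backlog": None,
    "design review": 2,
    "code backlog": None,
    "code review": 3,
    "test+doc backlog": None,
    "complete": None,
})
board.move("example-blueprint", "code review")
```

Keeping the limit check inside `move` means the rule on the number of items in each state cannot be bypassed by whoever moves the item.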

Reasons for:

- more focused review effort, get more things merged more quickly

- more upfront about when your code is likely to get reviewed

- smooth out current "lumpy" non-priority feature freeze system

Reasons against:

- feels like more process overhead

- control is too centralised

Replacing Milestones with SemVer Releases

You can deploy any commit of Nova and upgrade to a later commit in that same release. Making our milestones versioned more like an official release would help signal to our users that people can use the milestones in production, and get a level of upgrade support.

It could go something like this:

- 14.0.0 is milestone 1

- 14.0.1 is milestone 2 (maybe, because we add features, it should be 14.1.0?)

- 14.0.2 is milestone 3

- we might do other releases (once a critical bug is fixed?), as it makes sense, but we will always do the time bound ones

- 14.0.3 two weeks after milestone 3, adds only bug fixes (and updates to RPC versions?)

- maybe a stable branch is created at this point?

- 14.1.0 adds updated translations and co-ordinated docs

- this is released from the stable branch?

- 15.0.0 is the next milestone, in the following cycle

- note the bump of the major version to signal an upgrade incompatibility with 13.x

We are currently watching Ironic to see how their use of semver goes, and see what lessons need to be learnt before we look to maybe apply this technique during M.
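The version numbers from the list above can be ordered and compared with a small sketch. The helper names are hypothetical, the labels are paraphrased from the list, and plain `MAJOR.MINOR.PATCH` strings are assumed (no pre-release suffixes).

```python
def parse_version(text):
    """Parse 'MAJOR.MINOR.PATCH' into a tuple of ints that sorts in release order."""
    major, minor, patch = (int(part) for part in text.split("."))
    return (major, minor, patch)


def is_major_bump(old, new):
    """True if moving from old to new crosses a major version boundary."""
    return parse_version(new)[0] > parse_version(old)[0]


# Version numbers from the list above; labels paraphrased.
proposed = {
    "15.0.0": "first milestone of the following cycle",
    "14.1.0": "updated translations and co-ordinated docs",
    "14.0.3": "bug fixes two weeks after milestone 3",
    "14.0.2": "milestone 3",
    "14.0.1": "milestone 2",
    "14.0.0": "milestone 1",
}

for version in sorted(proposed, key=parse_version):
    print(version, "-", proposed[version])
```

Comparing integer tuples rather than raw strings matters once any component reaches two digits: as strings, "14.10.0" would sort before "14.9.0".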

Feature Classification

This is a look at moving forward this effort:

The things we need to cover:

- note what is tested, and how often that test passes (via 3rd party CI, or otherwise)

- link to current test results for stable and master (time since last pass, recent pass rate, etc)

- TODO - sync with jogo on his third party CI audit and getting trends, ask infra

- include experimental features (untested feature)

- get better at the impact of volume drivers and network drivers on available features (not just hypervisor drivers)

Main benefits:

- users get a clear picture of what is known to work

- be clear about when experimental features are removed, if no tests are added

- allows a way to add experimental things into Nova, and track either their removal or maturation

TODO fill out other details...