Neutron Trunk API Performance and Scaling


WORK IN PROGRESS


Testbed

underlying hardware

| component | value |
|---|---|
| CPU | Intel(R) Core(TM) i7-4600M CPU @ 2.90GHz |
| RAM | DDR3 Synchronous 1600 MHz |

VM running devstack

| resource | value |
|---|---|
| vCPU count | 2 |
| RAM | 6 GiB |

software versions

Late November 2016, about 6 weeks after the Newton release.

| software | version or git commit |
|---|---|
| ubuntu | 16.04.1 |
| openvswitch | 2.5.0-0ubuntu1 |
| devstack | a3bb131 |
| neutron | b8347e1 |
| nova | 2249898 |
| python-neutronclient | 36ad0b7 |
| python-novaclient | 6.0.0 |
| python-openstackclient | 3.3.0 |

local.conf

Only the relevant parts are shown:

```ini
[[post-config|/etc/neutron/neutron.conf]]
[DEFAULT]
service_plugins = trunk,...
rpc_response_timeout = 600

[quotas]
default_quota = -1
quota_network = -1
quota_subnet = -1
quota_port = -1

[[post-config|/etc/neutron/plugins/ml2/ml2_conf.ini]]
[ml2]
mechanism_drivers = openvswitch,linuxbridge
tenant_network_types = vxlan,vlan

[ovs]
ovsdb_interface = native
of_interface = native
datapath_type = system

[securitygroup]
firewall_driver = openvswitch

[[post-config|/etc/neutron/dhcp_agent.ini]]
[DEFAULT]
enable_isolated_metadata = false

[[post-config|/etc/neutron/l3_agent.ini]]
[DEFAULT]
enable_metadata_proxy = false

[[post-config|/etc/nova/nova.conf]]
[DEFAULT]
# NOTE this could probably have stayed at the default without making a difference
vif_plugging_timeout = 300
```

workflow variations

pseudocode of test scenarios

NOTE: The metadata and DHCP services are all turned off to avoid being memory-bound.

add subports before boot, all at once

```
for 1 parent port:
    network create
    subnet create
    port create
for N subports:
    network create
    subnet create
    port create
trunk create with N subports
server create with parent port
```

N = 1, 2, 4, 8, 16, 32, 64, 128, 256, 512
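The first scenario can be sketched as the list of openstack CLI calls it issues. This is a hypothetical reconstruction, not the script used for the measurements: the resource names, the 10.x.y.0/24 subnet ranges, the `m1.tiny`/`cirros` flavor and image, the parent port UUID placeholder and the VLAN segmentation IDs 1..N are all assumptions. The `openstack network trunk` commands are assumed to be available through the python-neutronclient OSC plugin.

```python
"""Build the CLI command sequence for "add subports before boot, all at once".

Hypothetical sketch: names, CIDRs, flavor/image, parent port ID and VLAN IDs
are assumptions, not the values used in the measurements.
"""
from typing import List


def network_subnet_port(name: str, index: int) -> List[List[str]]:
    """Commands creating one network, one /24 subnet and one port for `name`."""
    # Hypothetical addressing scheme 10.<hi>.<lo>.0/24, unique for index < 65536.
    cidr = f"10.{index // 256}.{index % 256}.0/24"
    return [
        ["openstack", "network", "create", f"net-{name}"],
        ["openstack", "subnet", "create", "--network", f"net-{name}",
         "--subnet-range", cidr, f"subnet-{name}"],
        ["openstack", "port", "create", "--network", f"net-{name}", f"port-{name}"],
    ]


def subports_before_boot(n: int, parent_port_id: str = "PARENT-PORT-UUID",
                         flavor: str = "m1.tiny",
                         image: str = "cirros") -> List[List[str]]:
    """Return the commands for a trunk with `n` subports, booted afterwards."""
    cmds = network_subnet_port("parent", 0)
    trunk_create = ["openstack", "network", "trunk", "create",
                    "--parent-port", "port-parent"]
    for i in range(1, n + 1):
        cmds += network_subnet_port(f"sub{i}", i)
        # One --subport option per subport; VLAN IDs 1..n are an assumption.
        trunk_create += ["--subport",
                         f"port=port-sub{i},segmentation-type=vlan,segmentation-id={i}"]
    cmds.append(trunk_create + ["trunk0"])
    # The server only gets the parent port; subports reach it through the trunk.
    cmds.append(["openstack", "server", "create", "--flavor", flavor,
                 "--image", image, "--nic", f"port-id={parent_port_id}", "vm0"])
    return cmds
```

All subports are passed to a single `trunk create`, which is what makes this the "all at once" variant.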

add subports after boot, one by one

```
for 1 parent port:
    network create
    subnet create
    port create
trunk create with 0 subports
server create with parent port
for N subports:
    network create
    subnet create
    port create
    trunk set (add one subport)
    port list
    port list --device-id server-uuid
    server show
```

N = 1, 2, 4, 8, 16, 32, 64, 128, 256, 512
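A minimal driver for the one-by-one scenario might look like the sketch below. It is hypothetical: the trunk name `trunk0`, the subnet ranges and VLAN IDs are assumptions, the trunk and server are assumed to exist already, and `port list --device-id` from the pseudocode is assumed to mean the neutron CLI filter. The command runner is injectable so the loop can be dry-run.

```python
"""Drive the "add subports after boot, one by one" loop, timing each trunk set.

Hypothetical sketch: trunk name, CIDRs, VLAN IDs and the runner are assumptions.
"""
import subprocess
import time
from typing import Callable, List, Sequence, Tuple

Runner = Callable[[Sequence[str]], None]


def run_cli(argv: Sequence[str]) -> None:
    """Default runner: execute the command and raise if it fails."""
    subprocess.run(list(argv), check=True, stdout=subprocess.DEVNULL)


def add_subports_one_by_one(n: int, server: str,
                            run: Runner = run_cli) -> List[Tuple[int, float]]:
    """Add `n` subports one at a time; return (subport count, trunk set seconds)."""
    timings = []
    for i in range(1, n + 1):
        net = f"net-sub{i}"
        run(["openstack", "network", "create", net])
        run(["openstack", "subnet", "create", "--network", net,
             "--subnet-range", f"10.{i // 256}.{i % 256}.0/24", f"subnet-sub{i}"])
        run(["openstack", "port", "create", "--network", net, f"port-sub{i}"])
        # Only the trunk set call is timed; it adds exactly one subport.
        start = time.perf_counter()
        run(["openstack", "network", "trunk", "set", "--subport",
             f"port=port-sub{i},segmentation-type=vlan,segmentation-id={i}",
             "trunk0"])
        timings.append((i, time.perf_counter() - start))
        # The listings from the pseudocode, repeated after every addition.
        run(["openstack", "port", "list"])
        run(["neutron", "port-list", "--device-id", server])  # assumed CLI form
        run(["openstack", "server", "show", server])
    return timings
```

Passing a recording function such as `calls.append` instead of `run_cli` prints nothing to the cloud and only collects the argv lists.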

add subports after boot, in batches

```
for 1 parent port:
    network create
    subnet create
    port create
trunk create with 0 subports
server create with parent port
for N batches of subports:
    for M subports in a batch:
        network create
        subnet create
        port create
    trunk set (add M subports)
    port list
    port list --device-id server-uuid
    server show
```

N*M = 8*64 (=512), 8*128 (=1024)
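The batching step can be sketched as grouping already created subport names into `openstack network trunk set` calls of M `--subport` options each. The trunk name and the VLAN ID assignment (global position 1, 2, 3, ...) are assumptions made for illustration.

```python
"""Group subport names into "trunk set" calls of M subports each.

Hypothetical sketch: the trunk name and VLAN ID assignment are assumptions.
"""
from typing import List, Sequence


def trunk_set_batches(ports: Sequence[str], m: int,
                      trunk: str = "trunk0") -> List[List[str]]:
    """Return one `openstack network trunk set` argv per batch of `m` ports."""
    if m <= 0:
        raise ValueError("batch size must be positive")
    commands = []
    for start in range(0, len(ports), m):
        argv = ["openstack", "network", "trunk", "set"]
        # VLAN IDs follow the global position of the port: 1, 2, 3, ...
        for vlan_id, port in enumerate(ports[start:start + m], start=start + 1):
            argv += ["--subport",
                     f"port={port},segmentation-type=vlan,segmentation-id={vlan_id}"]
        commands.append(argv + [trunk])
    return commands
```

With `m=1` this degenerates to the one-by-one scenario; with 512 ports and `m=64` it yields the 8 batches of the first N*M configuration.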

test results

add subports before boot, all at once

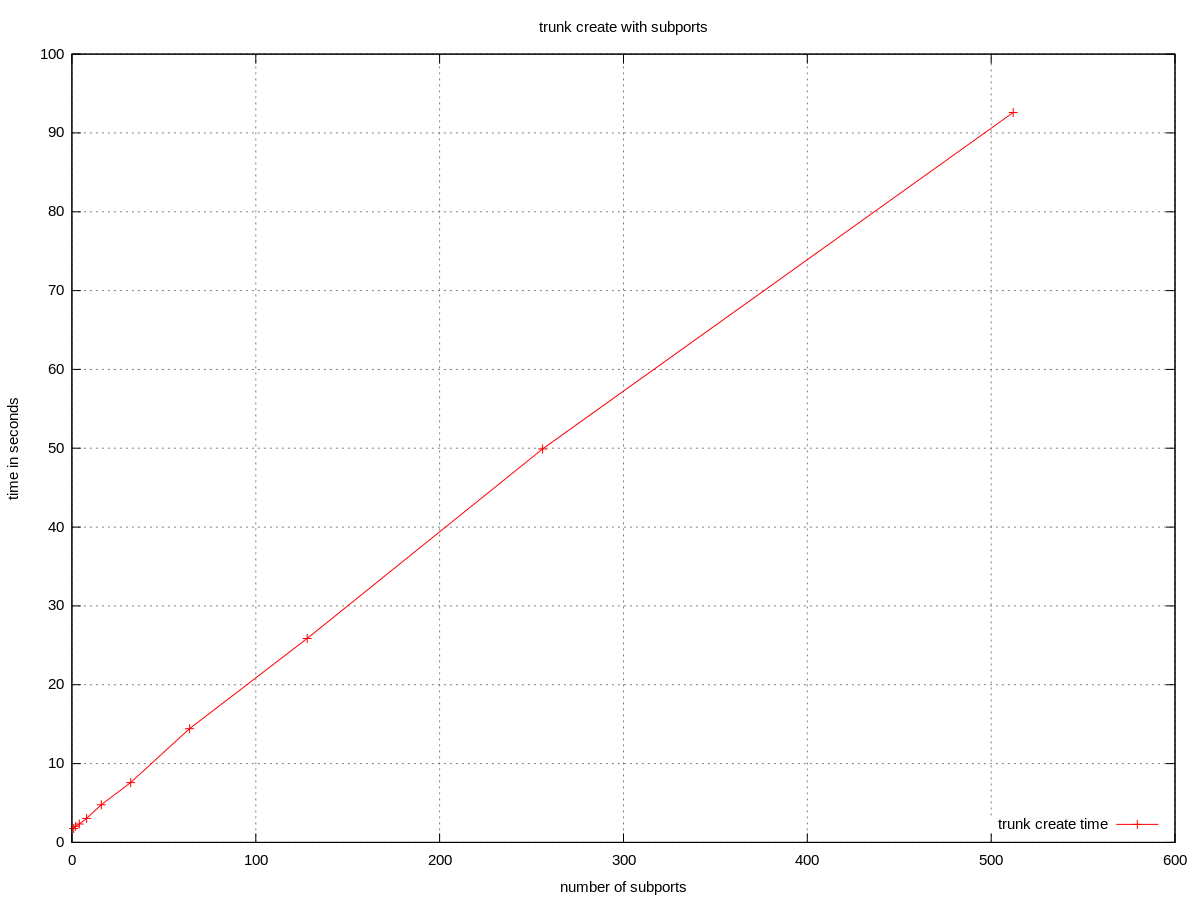

trunk create with subports

The time to create a trunk with N subports is clearly a linear function of N. No errors occurred in the measured range.

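One way to back the "linear in N" reading with a number is an ordinary least-squares fit of t = a + b·N together with its R². The sketch below is a generic helper, not part of the original measurements; the measured (N, seconds) pairs would be its input.

```python
"""Ordinary least-squares fit t = a + b*N and its coefficient of determination.

Generic sketch for checking linearity of measured (N, seconds) pairs.
"""
from typing import Sequence, Tuple


def linear_fit(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float, float]:
    """Return (intercept, slope, r_squared) of the least-squares line."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are equal; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    r_squared = 1.0 - ss_res / ss_tot if ss_tot else 1.0
    return intercept, slope, r_squared
```

Because N doubles between runs, the few largest N values have high leverage on such a fit, so it is worth also looking at the residuals of the small-N points.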

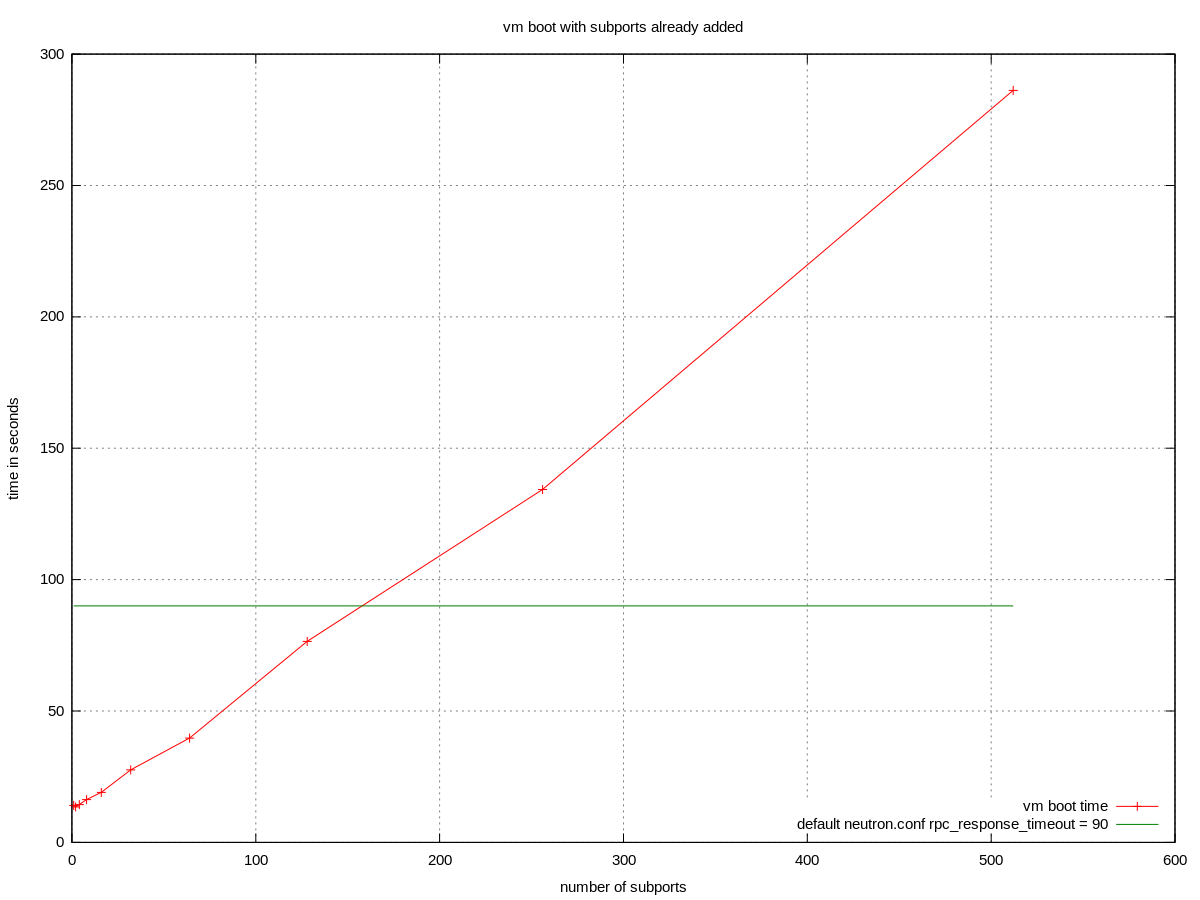

server boot with subports

The time to boot a server with a trunk with N subports is also a linear function of N. Errors appear above approximately 150 subports when using the default configuration.


Error symptoms:

- trunk remains in BUILD state

- subports remain in DOWN state

Example q-agt.log:

```
2016-11-25 14:42:56.571 ERROR neutron.agent.linux.openvswitch_firewall.firewall [req-75355670-e83b-42ac-8e99-08e50a352609 None None] Initializing port 30c12b8f-cfae-4090-bfb9-8257b4278300 that was already initialized.
2016-11-25 14:43:09.765 ERROR neutron.services.trunk.drivers.openvswitch.agent.ovsdb_handler [-] Got messaging error while processing trunk bridge tbr-fd003eb7-3: Timed out waiting for a reply to message ID 781bd8f7778941ddaf3e3b7feb166011
```

Example neutron-api.log:

```
2016-11-25 14:41:43.900 DEBUG oslo_messaging._drivers.amqpdriver [-] received message msg_id: 781bd8f7778941ddaf3e3b7feb166011 reply to reply_d020591a17164210af4973e8432edbef from (pid=7717) __call__ /usr/local/lib/python2.7/dist-packages/oslo_messaging/_drivers/amqpdriver.py:194
2016-11-25 14:43:15.977 DEBUG oslo_messaging._drivers.amqpdriver [req-75355670-e83b-42ac-8e99-08e50a352609 None None] sending reply msg_id: 781bd8f7778941ddaf3e3b7feb166011 reply queue: reply_d020591a17164210af4973e8432edbef time elapsed: 92.0763713859s from (pid=7717) _send_reply /usr/local/lib/python2.7/dist-packages/oslo_messaging/_drivers/amqpdriver.py:73
```

Here the elapsed time (92 s) is longer than the default of 90 s set in:

```ini
# /etc/neutron/neutron.conf
[DEFAULT]
rpc_response_timeout = 90
```
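The 92 s figure can be cross-checked from the two neutron-api.log timestamps above: the difference between "received message" and "sending reply" agrees with the logged "time elapsed" to within a millisecond. The constant name below is illustrative.

```python
"""Compute the RPC round trip from the two neutron-api.log timestamps above."""
from datetime import datetime

FMT = "%Y-%m-%d %H:%M:%S.%f"

received = datetime.strptime("2016-11-25 14:41:43.900", FMT)
replied = datetime.strptime("2016-11-25 14:43:15.977", FMT)
elapsed = (replied - received).total_seconds()

DEFAULT_RPC_RESPONSE_TIMEOUT = 90  # seconds, the neutron.conf default quoted above
print(f"elapsed {elapsed:.3f}s, exceeds timeout: {elapsed > DEFAULT_RPC_RESPONSE_TIMEOUT}")
# prints: elapsed 92.077s, exceeds timeout: True
```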

Workarounds:

- After a restart, neutron-ovs-agent seems to bring up all subports and the trunk correctly, without an RPC timeout.

- rpc_response_timeout can be raised. The rest of the tests were performed with rpc_response_timeout = 600.
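To confirm which timeout a node actually runs with, the option can be read back from neutron.conf. This sketch uses the standard-library `configparser` instead of oslo.config, so it only approximates how neutron parses the file; the default path and the 90 s fallback are taken from the text above.

```python
"""Read rpc_response_timeout from neutron.conf, falling back to the default.

Approximation with configparser rather than oslo.config.
"""
import configparser

DEFAULT_RPC_RESPONSE_TIMEOUT = 90  # seconds, the default quoted above


def rpc_response_timeout(path: str = "/etc/neutron/neutron.conf") -> int:
    # interpolation=None: oslo-style values may contain '%' characters.
    # strict=False: tolerate duplicate options, keeping the last one.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read(path)  # a missing file is silently ignored
    return parser.getint("DEFAULT", "rpc_response_timeout",
                         fallback=DEFAULT_RPC_RESPONSE_TIMEOUT)
```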

The RPC method that times out:

```
2016-11-25 15:55:42.370 DEBUG neutron.services.trunk.rpc.server [req-5a3498ad-efcb-400d-a2e7-cfe19db946a9 None None] neutron.services.trunk.rpc.server.TrunkSkeleton method update_subport_bindings called with arguments ...
```

Of course, rpc_response_timeout does not apply directly to VM boot time, so the chart is slightly misleading.

Relevant code: https://github.com/openstack/neutron/blob/b8347e16d1db62bf79b125d89e1c7863246fcd0d/neutron/services/trunk/rpc/server.py

```
def update_subport_bindings
    _process_trunk_subport_bindings()

def _process_trunk_subport_bindings
    for port_id in port_ids:
        _handle_port_binding()

def _handle_port_binding
    core_plugin.update_port()
```

In this case that amounts to a cascading update of all subports, which has to complete within a single update_subport_bindings RPC call.
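A simplified model makes the consequence explicit: since the subports are updated sequentially inside one RPC handler, the handler's duration grows linearly with the subport count and eventually crosses rpc_response_timeout. This is not neutron code. `FakeCorePlugin`, the fixed per-port cost and the single updated attribute are assumptions; only the loop structure mirrors the server.py call chain linked above.

```python
"""Simplified model of why update_subport_bindings grows with the subport count.

Not neutron code: FakeCorePlugin, the fixed per-call cost and the updated
attribute are assumptions; only the call structure mirrors server.py.
"""
from typing import Dict, List


class FakeCorePlugin:
    """Stand-in for the core plugin; each update_port costs `cost` seconds."""

    def __init__(self, cost: float) -> None:
        self.cost = cost
        self.updated: List[str] = []

    def update_port(self, port_id: str, host: str) -> Dict[str, str]:
        self.updated.append(port_id)
        return {"id": port_id, "binding:host_id": host}


def update_subport_bindings(plugin: FakeCorePlugin, port_ids: List[str],
                            host: str) -> float:
    """Bind every subport sequentially; return the modeled duration in seconds."""
    duration = 0.0
    for port_id in port_ids:  # mirrors _process_trunk_subport_bindings
        plugin.update_port(port_id, host)  # mirrors _handle_port_binding
        duration += plugin.cost
    return duration


def times_out(n_subports: int, cost: float, timeout: float) -> bool:
    """True if one RPC binding `n_subports` ports would exceed `timeout`."""
    plugin = FakeCorePlugin(cost)
    ports = [f"port-{i}" for i in range(n_subports)]
    return update_subport_bindings(plugin, ports, "host-1") > timeout
```

Under this model, raising the timeout only moves the threshold; the total work in the single call is unchanged.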

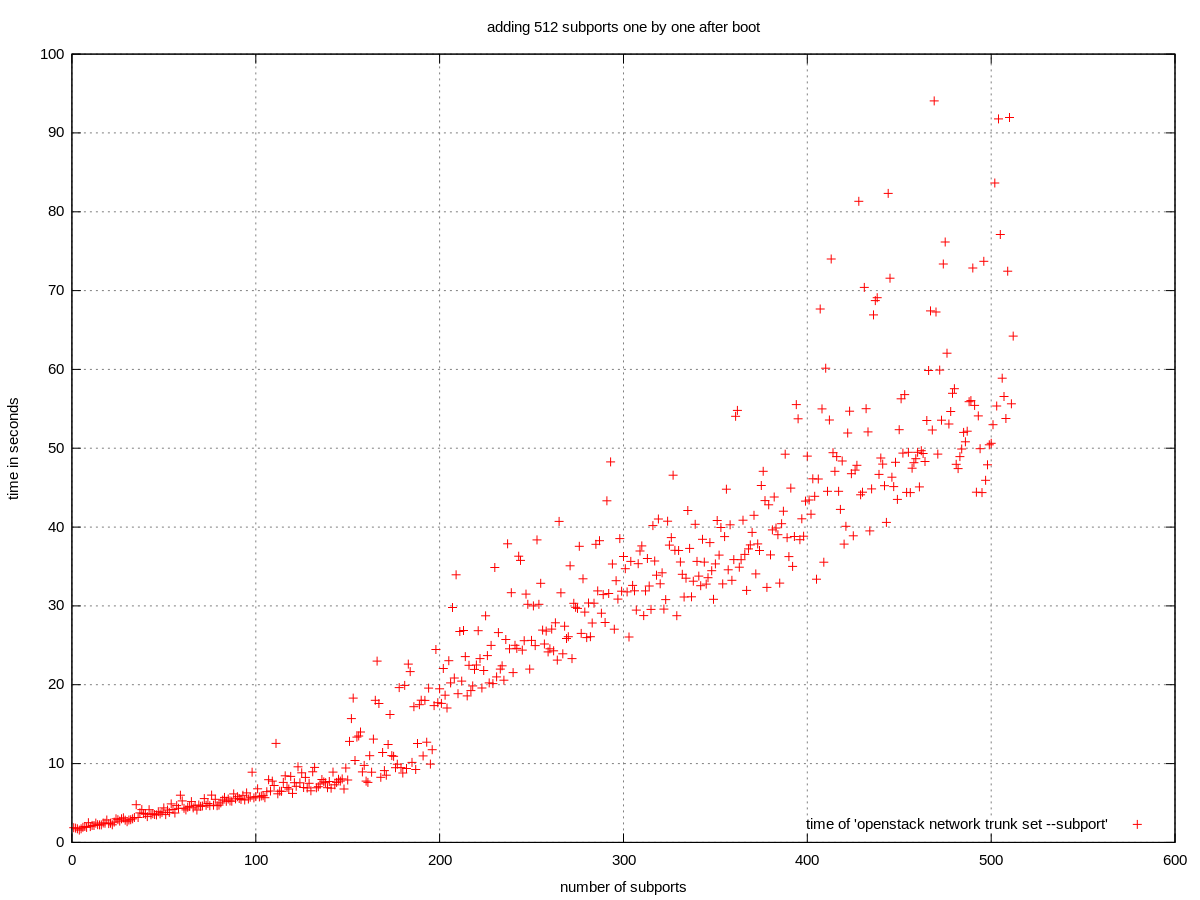

add subports after boot, one by one

add subports to trunk, one by one

The chart is clearly bimodal. The first section is linear with low deviation. The second section is also linear, but with a higher slope and much higher deviation. The second section starts around 150 subports; however, the reappearance of 150 is just a coincidence.

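Where exactly the second section starts can be estimated with a two-segment least-squares fit: try every split point, fit a line to each side independently and keep the split with the smallest total squared error. This is a generic segmented-regression sketch; it locates the switch but says nothing about its cause, and it assumes the x values are sorted ascending.

```python
"""Locate the switch between two linear regimes by two-segment least squares.

Tries every split point and keeps the one whose two independent line fits
have the smallest total squared error. xs must be sorted ascending.
"""
from typing import Sequence, Tuple


def _sse_of_line(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Sum of squared residuals of the least-squares line through the points."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx if sxx else 0.0
    icpt = my - slope * mx
    return sum((y - (icpt + slope * x)) ** 2 for x, y in zip(xs, ys))


def find_breakpoint(xs: Sequence[float], ys: Sequence[float],
                    min_points: int = 3) -> Tuple[float, float]:
    """Return (x of the first point of the second regime, total SSE)."""
    if len(xs) < 2 * min_points:
        raise ValueError("not enough points for two segments")
    best = None
    for k in range(min_points, len(xs) - min_points + 1):
        sse = _sse_of_line(xs[:k], ys[:k]) + _sse_of_line(xs[k:], ys[k:])
        if best is None or sse < best[1]:
            best = (xs[k], sse)
    return best
```

The search is O(n²) in the number of points, which is negligible for a few hundred measurements.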

TODO What changes between the two sections?

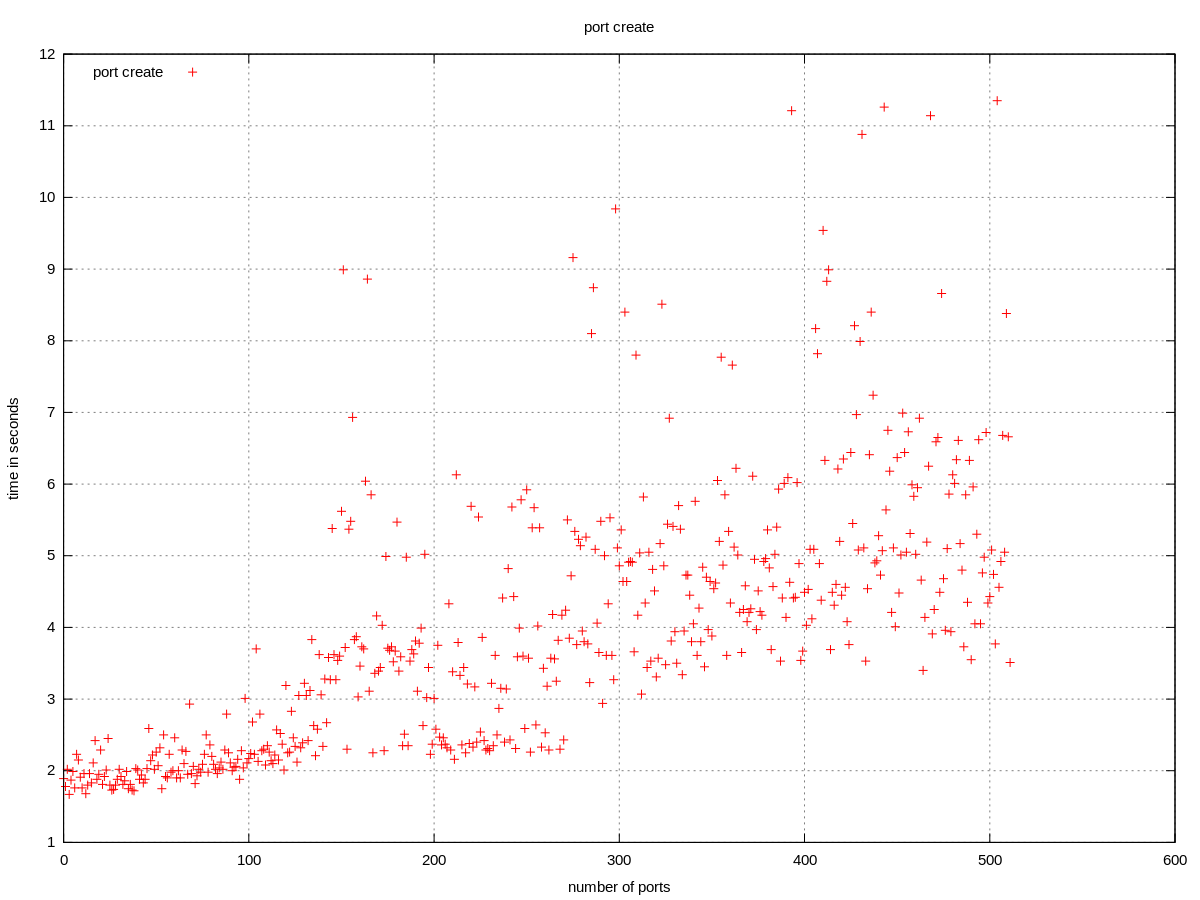

The bimodality may not be related to the trunk feature at all, because the same pattern can be observed in a sequence of port creations:

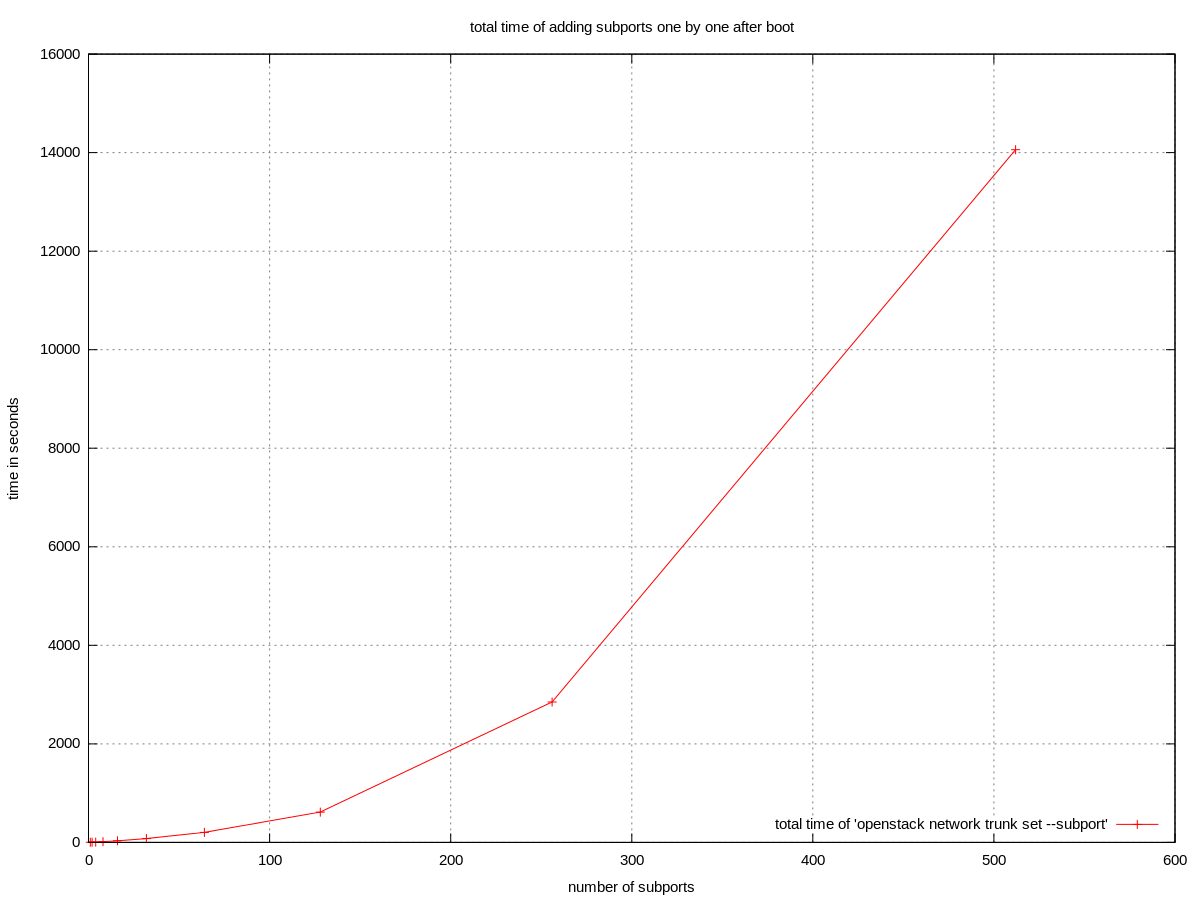

cumulative time of adding subports to trunk, one by one

For the end user, the total time spent adding subports is more significant. Since the time to add a single subport grows linearly with the number of subports already on the trunk (see the previous chart), we can expect the cumulative time to be roughly quadratic. No errors were observed in the measured range; however, the cumulative time quickly becomes too long to wait for.
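The quadratic expectation follows from summing a linear per-addition cost. Assume the k-th addition takes t_k ≈ a + b·k seconds, where a and b would be fitted from the per-addition chart (they are not values reported here):

```latex
t_k \approx a + b\,k, \qquad
T(N) = \sum_{k=1}^{N} t_k = aN + b\,\frac{N(N+1)}{2}
     = \frac{b}{2}\,N^2 + \left(a + \frac{b}{2}\right) N
```

Because the per-addition chart has two regimes, a and b differ below and above the switch, so T(N) is piecewise quadratic, with the steeper second regime dominating at large N.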

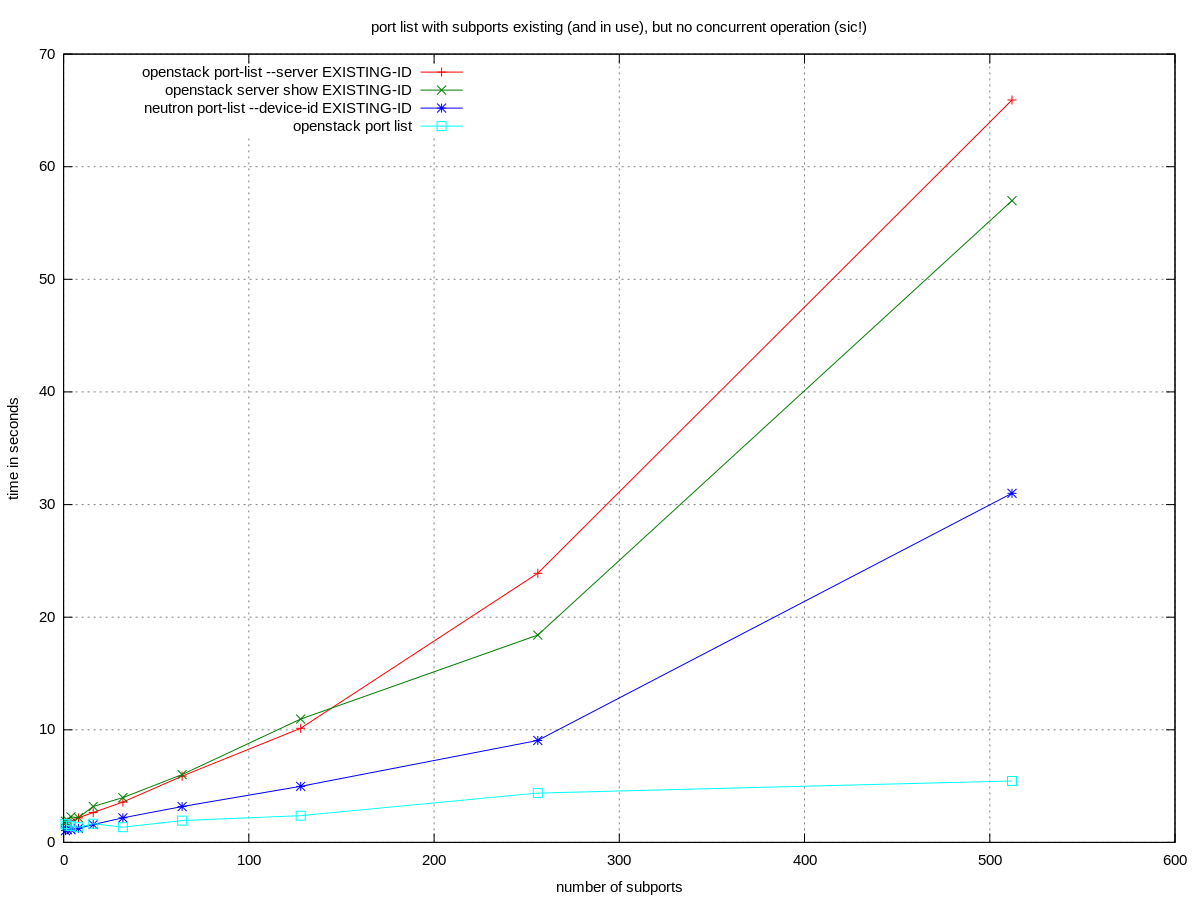

surprise effect on filtered port listings

An unexpected slowdown can be observed on filtered port listings, such as:

- neutron port-list --device-id ID, or

- openstack port list --server ID

It only occurs if:

- the ports are used as subports

- the port listing is filtered (listing all ports is fast)

- the VM ID used in the filter is an existing ID (filtering for non-existent IDs is fast)
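The three conditions can be compared side by side by timing each listing variant a few times and taking the median. This is a hypothetical sketch: the server ID must be that of a VM whose trunk has subports, a random UUID stands in for the non-existent ID, the repeat count is arbitrary, and only the `neutron port-list --device-id` form from the list above is used.

```python
"""Time unfiltered vs. filtered port listings to reproduce the slowdown.

Hypothetical sketch: server ID, repeat count and the random UUID used for the
non-existent case are assumptions.
"""
import statistics
import subprocess
import time
import uuid
from typing import Dict, List, Sequence


def median_seconds(argv: Sequence[str], repeats: int = 5) -> float:
    """Run the command `repeats` times and return the median wall-clock time."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        subprocess.run(list(argv), check=True, stdout=subprocess.DEVNULL)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def listing_variants(server_id: str) -> Dict[str, List[str]]:
    """The three cases from the list above, using the neutron CLI filter."""
    return {
        "unfiltered": ["neutron", "port-list"],
        "existing device_id": ["neutron", "port-list", "--device-id", server_id],
        "non-existent device_id": ["neutron", "port-list",
                                   "--device-id", str(uuid.uuid4())],
    }
```

Usage: for each `label, argv` in `listing_variants(SERVER_ID).items()`, print `label` and `median_seconds(argv)`; only the "existing device_id" case is expected to be slow.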

Nova makes similar calls to neutron in various places (e.g. openstack server show), so some nova operations become slow as well.

TODO What happens if we boot a VM with no trunk and subports and we filter for its ID?

TODO What's the difference on the wire between the neutron and openstack CLIs?