Neutron/BGP MPLS VPN


Scope:

This BP implements partial support for RFC 4364 BGP/MPLS IP Virtual Private Networks (VPNs), for interconnecting an existing network with an OpenStack cloud or interconnecting OpenStack clouds.

Use Cases:

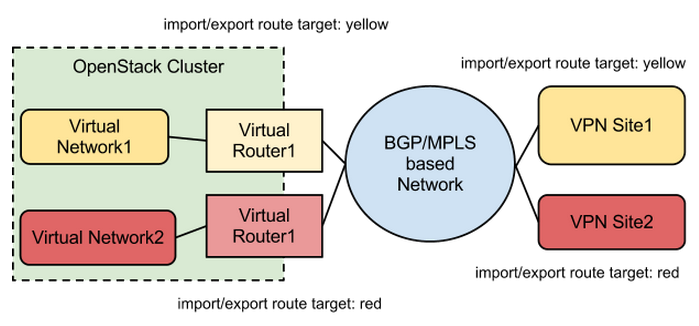

Use case 1: Connect a Neutron virtual network with an existing VPN site via a virtual router

In this case, virtual networks in Neutron are connected to the existing VPN site via a virtual router. Routes are exchanged dynamically using BGP.

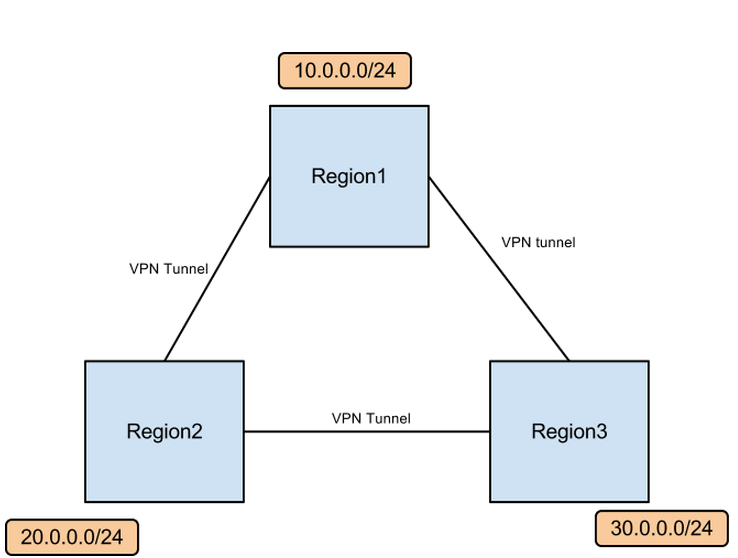

Use case 2: Interconnect multiple OpenStack regions

The diagram shows three regions interconnected via some VPN method (e.g., IPsec). All regions are managed by a single operator. 10.0.0.0/24 is in Region1, and 20.0.0.0/24 and 30.0.0.0/24 are in the other regions. Using iBGP, virtual networks on any cluster can be connected efficiently.

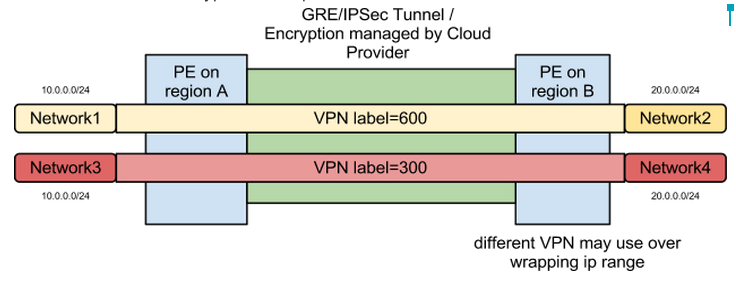

The diagram shows packet encapsulation for this use case. The regions are connected by encrypted IPsec tunnels, and each packet is labeled with MPLS.

Data Model Changes:

BGPMPLSVPN (new resource)

Based on the service insertion model.

Admin-only parameter

- route_distinguisher = type:administrator:assigned

- type=0: administrator = 16 bits, assigned = 32 bits

- type=1: administrator = IP address, assigned = 16 bits

- type=2: administrator = 4-octet AS number, assigned = 16 bits

Note: the default value is assigned automatically in the type=0 format.
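The three route distinguisher formats above can be checked with a small parser. This is a minimal sketch, assuming the three-field textual form `type:administrator:assigned` exactly as written above; the function name `parse_route_distinguisher` is hypothetical and not part of this BP.

```python
import ipaddress


def parse_route_distinguisher(text):
    """Parse 'type:administrator:assigned' and range-check both fields.

    Hypothetical helper for illustration; returns a tuple of three ints.
    """
    rd_type, administrator, assigned = text.split(":")
    rd_type = int(rd_type)
    assigned = int(assigned)
    if rd_type == 0:
        # 16-bit administrator, 32-bit assigned number
        administrator = int(administrator)
        admin_max, assigned_max = 0xFFFF, 0xFFFFFFFF
    elif rd_type == 1:
        # IPv4 address administrator, 16-bit assigned number
        administrator = int(ipaddress.IPv4Address(administrator))
        admin_max, assigned_max = 0xFFFFFFFF, 0xFFFF
    elif rd_type == 2:
        # 4-octet AS number administrator, 16-bit assigned number
        administrator = int(administrator)
        admin_max, assigned_max = 0xFFFFFFFF, 0xFFFF
    else:
        raise ValueError("unknown route distinguisher type: %d" % rd_type)
    if not 0 <= administrator <= admin_max:
        raise ValueError("administrator field out of range")
    if not 0 <= assigned <= assigned_max:
        raise ValueError("assigned field out of range")
    return rd_type, administrator, assigned
```

For example, `parse_route_distinguisher("0:6800:1")` accepts a type 0 value, while `"0:70000:1"` is rejected because 70000 does not fit in 16 bits.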

Tenant parameter

- subnet_id: ID of the subnet that connects the virtual router and the PE router.

- routes: routes of the PE. If router_id is specified, the VPN service also configures the routes in the virtual router automatically.

The format of routes is as follows:

[ {destination: [cidr, ...],

nexthop: IP (optional),

router_id: XXX (optional)},

... ]

- remote_prefixes = [20.0.0.0/24]: list of imported prefixes (if this value is null, the l3-agent sets up all received routes)

- connect_from = [VPNG_ID1, VPNG_ID2, VPNG_ID3] (list of VPN groups)

- connect_to = [VPNG_ID1, VPNG_ID2] (list of VPN groups)

Note: on create, the connect_from/connect_to route targets default to all VPN groups in the list.
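The routes format described above can be validated before it is passed on. This is a minimal sketch using Python's standard `ipaddress` module; the function name `validate_routes` is hypothetical, and treating `destination` as required is an assumption based on the format shown.

```python
import ipaddress

ALLOWED_KEYS = {"destination", "nexthop", "router_id"}


def validate_routes(routes):
    """Return a normalized copy of a 'routes' list, or raise ValueError."""
    normalized = []
    for route in routes:
        unknown = set(route) - ALLOWED_KEYS
        if unknown:
            raise ValueError("unknown keys: %s" % sorted(unknown))
        if "destination" not in route:
            raise ValueError("destination is required")
        # Each destination must be a well-formed CIDR with no host bits set.
        entry = {
            "destination": [
                str(ipaddress.ip_network(cidr)) for cidr in route["destination"]
            ]
        }
        if "nexthop" in route:
            entry["nexthop"] = str(ipaddress.ip_address(route["nexthop"]))
        if "router_id" in route:
            entry["router_id"] = route["router_id"]
        normalized.append(entry)
    return normalized
```

A value such as `[{"destination": ["10.0.0.0/24", "20.0.0.0/24"], "router_id": "router1"}]` passes unchanged, while a destination like `10.0.0.1/24` is rejected because it has host bits set.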

BGPMPLSVPNGroup (new resource)

Lists the route targets assigned to each tenant.

- id:

- name:

- tenant_id:

- route_target (*): 16-bit ASN:32-bit integer, or IPv4 address:16-bit integer (admin only)

(*) Only an admin user can specify the route target; otherwise it is assigned automatically.

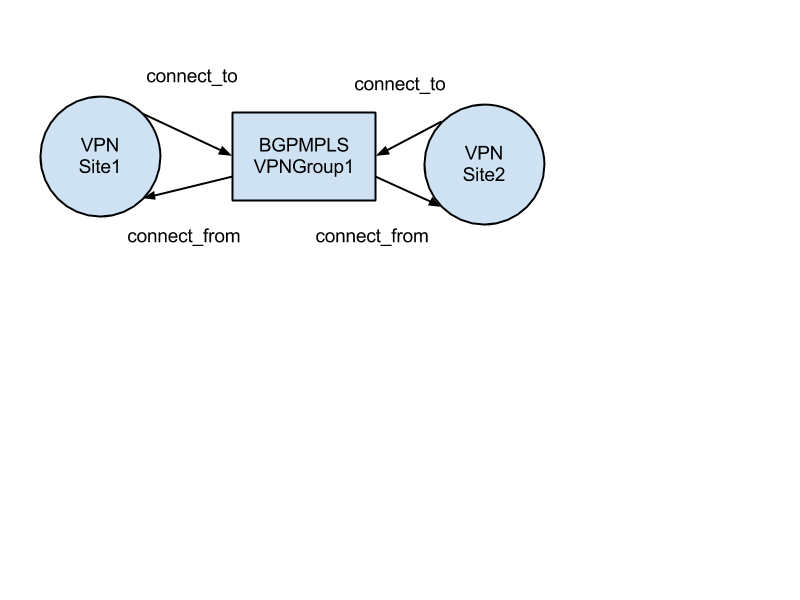

Model of BGPMPLSVPNGroup

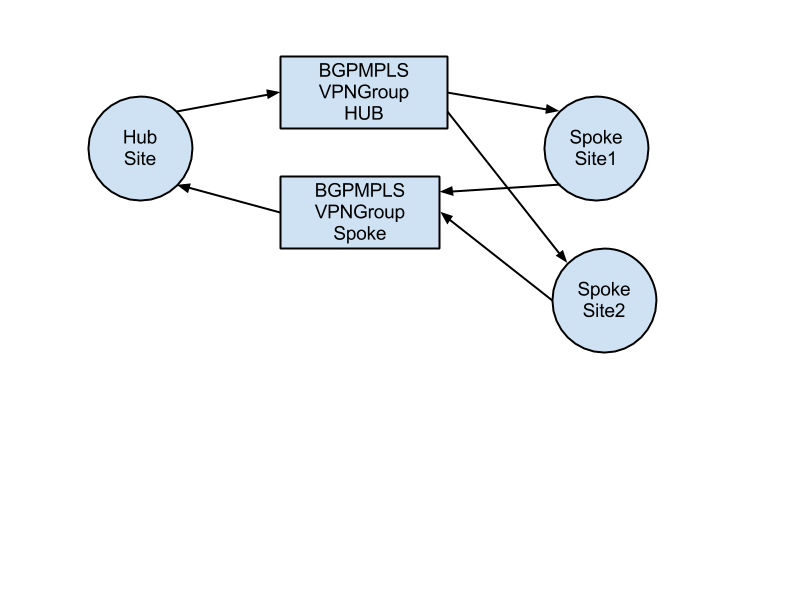

Internally, a BGPMPLSVPNGroup corresponds to a route target (see RFC 4364, Section 4.3.5, "Building VPNs Using Route Targets"). In this diagram, VPN Site1 and Site2 can talk to each other.

The hub-and-spoke model shown in the diagram is also supported. In this case, the Hub Site can reach Spoke Site1 and Spoke Site2 and vice versa, but Spoke Site1 and Spoke Site2 cannot reach each other.
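The reachability rules for the full-mesh and hub-and-spoke cases can be sketched as set intersections. This example assumes that connect_to plays the role of the RFC 4364 export targets and connect_from the role of the import targets; that mapping, the function names, and the group names "hub" and "spoke" are assumptions for illustration only.

```python
def learns_routes_from(receiver, sender):
    """True if receiver imports at least one group that sender exports."""
    return bool(set(sender["connect_to"]) & set(receiver["connect_from"]))


def can_talk(site_a, site_b):
    """Two sites can talk only if routes flow in both directions."""
    return learns_routes_from(site_a, site_b) and learns_routes_from(site_b, site_a)


# Hub-and-spoke: the hub exports "hub" and imports "spoke";
# each spoke exports "spoke" and imports only "hub".
hub = {"connect_to": ["hub"], "connect_from": ["spoke"]}
spoke1 = {"connect_to": ["spoke"], "connect_from": ["hub"]}
spoke2 = {"connect_to": ["spoke"], "connect_from": ["hub"]}

# Full mesh: both sites export and import the same group.
site1 = {"connect_to": ["vpn"], "connect_from": ["vpn"]}
site2 = {"connect_to": ["vpn"], "connect_from": ["vpn"]}
```

With these definitions, `can_talk(hub, spoke1)` and `can_talk(site1, site2)` hold, while `can_talk(spoke1, spoke2)` does not, because neither spoke imports the group the other exports.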

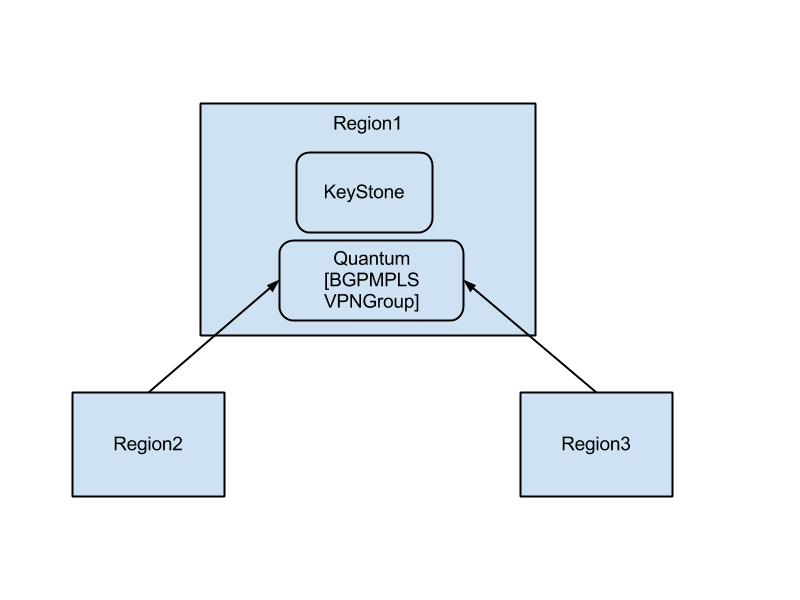

BGPMPLSVPNGroup is managed by the network provider. One BGPMPLSVPNGroup server is shared across data centers. In the diagram, Region2 and Region3 use the BGPMPLSVPNGroup DB in Region1, so BGPMPLSVPNGroups are assigned consistently across regions.

Note that BGPMPLSVPNGroup assignment has a driver architecture. In some cases, the network operator already manages the mapping between BGPMPLSVPNGroup (RT) and tenant. In that case, the network operator can implement a driver that provides their own BGPMPLSVPNGroup (RT) and tenant mappings.
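One way to picture the driver architecture is an abstract interface with an operator-supplied implementation. This is only a sketch: the class names `VPNGroupDriver` and `StaticMappingDriver` and the method `get_route_target` are hypothetical and do not come from this BP, which does not define the driver interface.

```python
import abc


class VPNGroupDriver(abc.ABC):
    """Hypothetical interface: resolves a tenant's VPN group to a route target."""

    @abc.abstractmethod
    def get_route_target(self, tenant_id, group_name):
        """Return the route target string for the given tenant and group."""


class StaticMappingDriver(VPNGroupDriver):
    """Operator-side driver backed by a mapping the operator already manages."""

    def __init__(self, mapping):
        # mapping: {(tenant_id, group_name): route_target}
        self._mapping = dict(mapping)

    def get_route_target(self, tenant_id, group_name):
        try:
            return self._mapping[(tenant_id, group_name)]
        except KeyError:
            raise LookupError(
                "no route target for tenant %r, group %r" % (tenant_id, group_name)
            )
```

An operator with an existing RT plan would construct the driver from that plan, for example `StaticMappingDriver({("tenant-a", "test"): "6800:1"})`, instead of relying on automatic assignment.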

Implementation Overview:

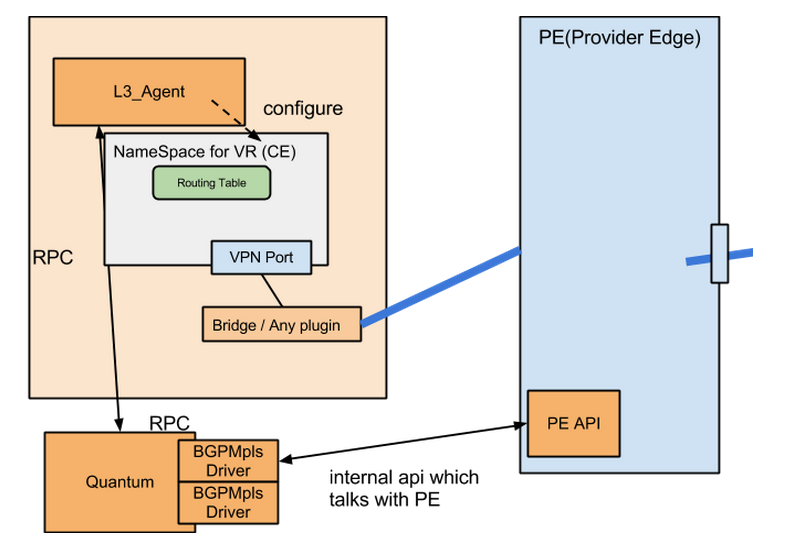

The diagram shows the implementation overview. The Neutron server and the BGP peer are connected via a BGP speaker process. Neutron calls BGPMplsVPNDriver to realize BGP/MPLS services. Each driver provider can implement this in its own way.

Configuration variables:

neutron server configuration

- my_as

- bgp_speaker ip and port: specifies the BGP speaker to connect to

- route_target_range: route target range for RT assignment

- route_distinguisher_range: RD range for RD assignment

- bgpvpngroup_driver: driver for managing route targets

- bgpspeaker_driver (*)

- driver to configure bgp speaker

The value of bgpvpngroup_driver can be either db or neutron:

- bgpvpngroup_driver=db: use the local DB

- bgpvpngroup_driver=neutron: use another Neutron server
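The options above could be read and checked as in the following sketch. The option names `my_as` and `bgpvpngroup_driver` come from the list above; the INI layout, the section name `bgpmplsvpn`, the sample values, and the function `load_settings` are assumptions made for illustration.

```python
import configparser

# Illustrative values only; the section name "bgpmplsvpn" is an assumption.
SAMPLE = """
[bgpmplsvpn]
my_as = 6800
bgpvpngroup_driver = db
"""

VALID_GROUP_DRIVERS = ("db", "neutron")


def load_settings(text):
    """Parse the sample options and reject an unknown bgpvpngroup_driver."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    section = parser["bgpmplsvpn"]
    driver = section.get("bgpvpngroup_driver")
    if driver not in VALID_GROUP_DRIVERS:
        raise ValueError("bgpvpngroup_driver must be one of %s" % (VALID_GROUP_DRIVERS,))
    return {"my_as": section.getint("my_as"), "bgpvpngroup_driver": driver}
```

Calling `load_settings(SAMPLE)` yields the AS number as an integer together with the selected group driver.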

bgpspeaker configuration

- my_as

- bgp_identifier

- neighbors

APIs:

CRUD REST API for each new resource

Plugin Interface: Implement above data model

Required Plugin support: yes

Dependencies: BGPSpeaker

CLI Requirements:

Create bgpmplsvpngroup

neutron bgpmplsvpngroup-create --name test --route-target 6800:1

List bgpmplsvpngroup

neutron bgpmplsvpngroup-list -c id -c name -c route_target

Store the bgpmplsvpngroup ID in a shell variable

GROUP=`neutron bgpmplsvpngroup-list -c id -c name -c route_target | awk '/test/{print $2}'`

Delete bgpmplsvpngroup

neutron bgpmplsvpngroup-delete <id>

Create virtual router

neutron router-create router1

Create network which will connect virtual router and PE router

neutron net-create net-connect

Create subnet in the above network

neutron subnet-create net-connect 50.0.0.0/24 --name=subnet-connect

Create BGP MPLS VPN

neutron bgpmplsvpn-create --name=vpn1 --route_distinguisher=6800:1 --connected_subnet=subnet-connect --routes '[{"destination": ["10.0.0.0/24", "20.0.0.0/24"], "router_id": "router1"}]' --remote_prefixes list=true 30.0.0.0/24 40.0.0.0/24 --connect_to list=true $GROUP --connect_from list=true $GROUP

List BGP MPLS VPN

neutron bgpmplsvpn-list

Delete BGP MPLS VPN

neutron bgpmplsvpn-delete vpn1

Insert the VPN service into the router

neutron router-service-insert router1 vpn1

Horizon Requirements:

- Extended attribute configuration page

- show dynamic routes

Usage Example:

TBD

Test Cases:

Connect two different OpenStack clusters (devstack) with a BGP/MPLS VPN