Manila/Replication Internals Design


Intro

Overview

Replicated shares will be implemented in Manila without adding any new services or any new drivers. The code for creating replicated shares and for adding/removing replicas and other replication-related operations will all go into the existing share drivers. The most significant change required to allow this will be that share drivers will be responsible for communicating with ALL storage controllers necessary to achieve any replication tasks, even if that involves sending commands to other storage controllers in other AZs.

While we can't know how every storage controller works or how each vendor will implement replication, this approach should give driver writers the flexibility they need with minimal added complexity in the Manila core. There are already examples of drivers that read the config sections of other backends when an operation requires communicating with two backends.
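Here is a minimal sketch of that pattern. It assumes a manila.conf-style file with one section per backend and uses Python's standard configparser as a stand-in for oslo.config, which Manila itself uses. The section names and the controller_address option are hypothetical and are included only for illustration.

```python
import configparser

# Hypothetical manila.conf fragment with two backends in different AZs.
# Section names and controller_address are illustrative assumptions.
SAMPLE_CONF = """
[backend_a]
share_backend_name = BACKEND_A
controller_address = 10.0.1.10

[backend_b]
share_backend_name = BACKEND_B
controller_address = 10.0.2.10
"""


def peer_controller_address(conf_text: str, peer_section: str) -> str:
    """Return the controller address configured for another backend.

    A driver serving one backend can look up a peer backend's connection
    details when a replication task must reach that peer's controller.
    """
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    if not parser.has_section(peer_section):
        raise LookupError(f"no config section for backend {peer_section!r}")
    return parser.get(peer_section, "controller_address")


print(peer_controller_address(SAMPLE_CONF, "backend_b"))  # prints 10.0.2.10
```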

Driver Changes

Existing driver methods will need to change to support replicated shares. If the share_type of a new share specifies replication, create_share() will need to perform any additional configuration that the replicated share requires. Operations such as creating and deleting snapshots will be responsible for creating and deleting snapshot instances on every replica. Expanding or shrinking a share will need to expand or shrink all of its replicas, and so on. For any share with multiple replicas, the API service will send RPCs to the manager responsible for the active replica.
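The following Python sketch illustrates this fan-out by applying a snapshot operation and an extend operation to every replica of a share. The Replica class, the method signatures, and _send_to_controller() are hypothetical stand-ins and are not the Manila driver interface. Handling the active replica first is an assumption of the sketch, not a requirement stated in this design.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """Hypothetical stand-in for one share instance (replica)."""
    instance_id: str
    controller: str  # storage controller hosting this replica, possibly in another AZ
    is_active: bool = False
    size_gb: int = 10
    snapshots: list = field(default_factory=list)


class ReplicatedShareDriverSketch:
    """Illustrative only: one share-level operation is applied to every replica."""

    def create_snapshot(self, replicas, snapshot_name):
        # Handle the active replica first (an assumption of this sketch),
        # then create a snapshot instance on each remaining replica.
        ordered = sorted(replicas, key=lambda r: not r.is_active)
        for replica in ordered:
            self._send_to_controller(replica, "snapshot", snapshot_name)
        return [(r.instance_id, snapshot_name) for r in ordered]

    def extend_share(self, replicas, new_size_gb):
        # Every replica must be grown, not just the active one.
        for replica in replicas:
            self._send_to_controller(replica, "extend", new_size_gb)

    def _send_to_controller(self, replica, command, arg):
        # Placeholder for a vendor-specific call to replica.controller;
        # here it just records the effect in memory.
        if command == "snapshot":
            replica.snapshots.append(arg)
        elif command == "extend":
            replica.size_gb = arg
        else:
            raise ValueError(f"unsupported command {command!r}")
```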

New Driver Entry Points

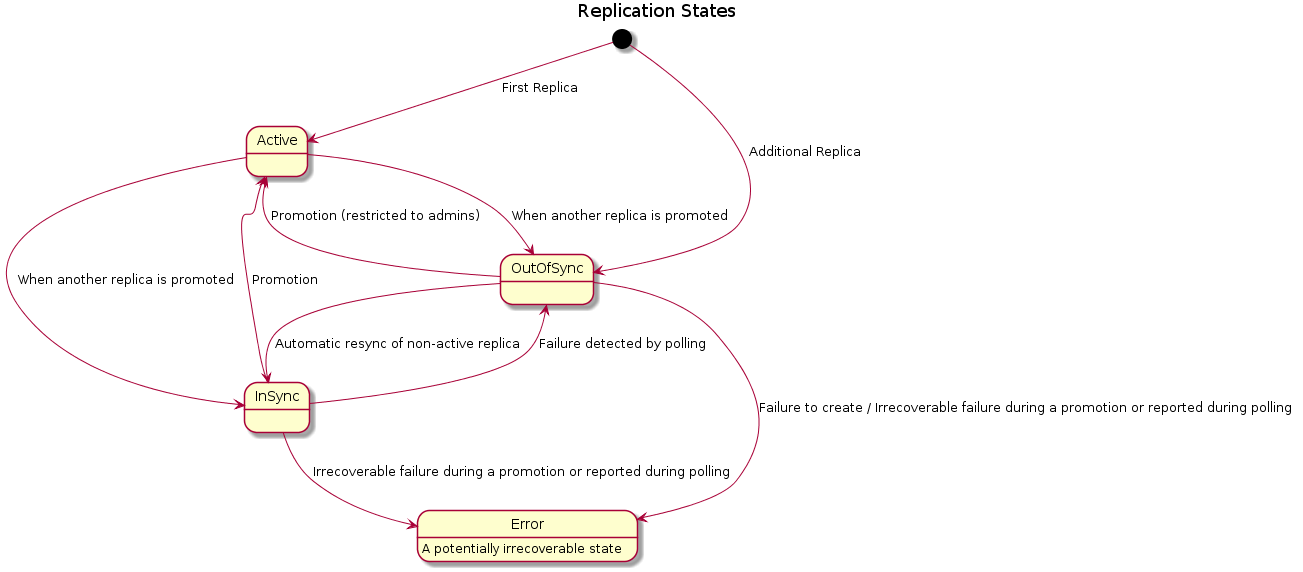

add_replica(active_share_instance, new_share_instance) - The driver should establish a new replica on a different pool, in a different backend, in a different AZ. The active_share_instance already exists, and the new_share_instance is the one to be created. Drivers will typically need to communicate with a different storage controller to implement this method.

remove_replica(share_instance) - The driver should remove the given share_instance, cleaning up the storage and any replication relationships. This method should fail if the replica cannot be cleaned up.

set_active_replica(share_instance) - The driver should change the active replica. This will be called on the backend that will manage the NEW active replica, not the backend managing the current active replica. This method should succeed if the new active replica was able to be set, even if the old active replica is broken or unreachable.
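The Python sketch below shows how the failure contracts of remove_replica() and set_active_replica() differ. FakeController, ControllerUnreachable, ReplicaCleanupError and the function signatures are all hypothetical, and a real driver would make vendor-specific calls in their place. In the sketch, removal raises an error when cleanup fails. Promotion only requires the new side to succeed, and demoting the old active replica is a best-effort step.

```python
class ControllerUnreachable(Exception):
    """Hypothetical error: a storage controller cannot be contacted."""


class ReplicaCleanupError(Exception):
    """Hypothetical error: the replica could not be fully removed."""


class FakeController:
    """Toy in-memory controller so the sketch runs without real hardware."""

    def __init__(self, name, reachable=True):
        self.name = name
        self.reachable = reachable
        self.roles = {}  # instance_id -> "active" or "replica"

    def call(self, op, instance_id):
        if not self.reachable:
            raise ControllerUnreachable(self.name)
        if op == "delete":
            self.roles.pop(instance_id, None)
        else:
            self.roles[instance_id] = op


def remove_replica(controller, instance_id):
    # Surface any cleanup failure so the replica is not reported as
    # deleted while its storage or replication relationship still exists.
    try:
        controller.call("delete", instance_id)
    except ControllerUnreachable as exc:
        raise ReplicaCleanupError(instance_id) from exc


def set_active_replica(new_controller, new_id, old_controller, old_id):
    """Runs on the backend managing the NEW active replica.

    Returns True if the old active replica was also demoted, False if it
    was unreachable; either way the promotion itself has succeeded.
    """
    new_controller.call("active", new_id)
    try:
        old_controller.call("replica", old_id)  # best-effort demotion
        return True
    except ControllerUnreachable:
        return False
```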

update_replica_status(share_instance) - The driver should check the status of the replica. This method is called periodically and is used to detect replicas that have gone out of sync. Drivers should also use it to repair broken replication relationships where possible.
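Here is one way update_replica_status() might work, assuming the driver can query the health and lag of a replication relationship. The replica_state values, the 300-second lag threshold, and the backend methods (relationship_healthy(), repair_relationship(), lag_seconds()) are assumptions made for illustration. The sketch runs against a toy in-memory backend.

```python
from enum import Enum


class ReplicaState(str, Enum):
    # Hypothetical state names; the design only speaks of replicas
    # being in or out of sync.
    IN_SYNC = "in_sync"
    OUT_OF_SYNC = "out_of_sync"
    ERROR = "error"


class FakeReplicationBackend:
    """Toy backend used only to exercise the sketch."""

    def __init__(self, healthy=True, repairable=True, lag=0, max_lag_seconds=300):
        self.healthy = healthy
        self.repairable = repairable
        self.lag = lag
        self.max_lag_seconds = max_lag_seconds  # assumed sync threshold

    def relationship_healthy(self, instance_id):
        return self.healthy

    def repair_relationship(self, instance_id):
        if self.repairable:
            self.healthy = True
        return self.repairable

    def lag_seconds(self, instance_id):
        return self.lag


def update_replica_status(share_instance, backend):
    """Inspect one replica, try to repair it, and return a model update."""
    instance_id = share_instance["id"]
    if not backend.relationship_healthy(instance_id):
        # Try to fix a broken replication relationship, if possible.
        if not backend.repair_relationship(instance_id):
            return {"id": instance_id, "replica_state": ReplicaState.ERROR.value}
    in_sync = backend.lag_seconds(instance_id) <= backend.max_lag_seconds
    state = ReplicaState.IN_SYNC if in_sync else ReplicaState.OUT_OF_SYNC
    return {"id": instance_id, "replica_state": state.value}
```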

Changes

- Add replica scheduler logic - The API service should send an RPC to the scheduler to schedule the replica. The scheduler should pick a location for the new share_instance in the same way as for existing share scheduling, but it should send the add_replica RPC request to the backend that owns the currently active replica.

- Remove replica - The API service should send an RPC directly to the manager responsible for the currently active replica.

- Set active replica - The API service should send an RPC directly to the manager responsible for the new active replica.

- Updating replica status - The manager should run a looping call on an admin-configurable interval (default 5 minutes). On each run, the manager should enumerate all replicated shares whose active instance is owned by its backend and call update_replica_status() once per instance. The method should return a model update.
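This Python sketch combines the routing rules and the periodic status task described above. ShareInstance, rpc_target(), poll_replicas_once() and run_periodic() are hypothetical names. A real manager would use Manila's RPC layer and periodic-task machinery instead of a bare threading.Event loop.

```python
import threading
from dataclasses import dataclass

# The design's default polling interval of 5 minutes, in seconds.
DEFAULT_REPLICA_STATUS_INTERVAL = 300


@dataclass
class ShareInstance:
    """Hypothetical record for one instance (replica) of a share."""
    id: str
    host: str  # backend whose manager owns this instance
    active: bool


def rpc_target(operation, instances, new_active_id=None):
    """Return the host whose manager should receive the RPC."""
    active = next(i for i in instances if i.active)
    if operation in ("add_replica", "remove_replica"):
        # The scheduler picks where the new instance goes, but add_replica
        # (like remove_replica) is sent to the current active replica's backend.
        return active.host
    if operation == "set_active_replica":
        # Sent to the backend that will manage the NEW active replica.
        return next(i.host for i in instances if i.id == new_active_id)
    raise ValueError(f"unknown operation {operation!r}")


def poll_replicas_once(my_host, shares, update_replica_status, apply_update):
    """One pass of the periodic task for the manager on `my_host`.

    `shares` maps a share ID to its list of ShareInstance objects.
    """
    for instances in shares.values():
        if len(instances) < 2:
            continue  # not a replicated share
        active = next(i for i in instances if i.active)
        if active.host != my_host:
            continue  # another backend's manager owns this share
        for inst in instances:
            # The driver returns a model update; the manager persists it.
            apply_update(update_replica_status(inst))


def run_periodic(stop_event, interval, task):
    """Stand-in for the manager's looping call: run `task` every `interval` s."""
    while not stop_event.is_set():
        task()
        stop_event.wait(interval)
```

In practice, run_periodic() would be started with DEFAULT_REPLICA_STATUS_INTERVAL (or the admin's configured value), and its task would call poll_replicas_once() for the manager's own host.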