MagnetoDB


Status

- Initial draft --isviridov (talk) 17:40, 16 October 2013 (UTC)

- Added more details isviridov (talk) 01:10, 30 October 2013 (UTC)

Scope

This document describes the idea and technical concept of a database service for OpenStack similar to Amazon DynamoDB. There is no implementation yet.

What is MagnetoDB?

MagnetoDB is an implementation of the Amazon DynamoDB API for the OpenStack world.

What is DynamoDB?

DynamoDB is a fast, fully managed NoSQL database service that makes it simple and cost-effective to store and retrieve any amount of data, and serve any level of request traffic. With DynamoDB, you can offload the administrative burden of operating and scaling a highly available distributed database cluster.

DynamoDB tables do not have fixed schemas, and each item may have a different number of attributes. Multiple data types add richness to the data model. Local secondary indexes add flexibility to the queries you can perform, without impacting performance. [1]

Why is it needed?

MagnetoDB provides OpenStack users with a high-level database service exposed through the DynamoDB API.

Simply create a table and start working with your data. There is no VM provisioning, database software setup, configuration, patching, monitoring, or other administrative routine.

Integration with Trove?

Initially suggested as a blueprint, MagnetoDB was separated out as a project of its own because its mission differs from that of the Trove program.

MagnetoDB is not one more database that can be provisioned and managed by Trove. It is one more OpenStack service with its own API, Keystone-based authentication, and quota management.

However, the underlying database will be provisioned and managed by Trove, in order to reuse existing OpenStack components and avoid duplicating functionality.

Overall architecture

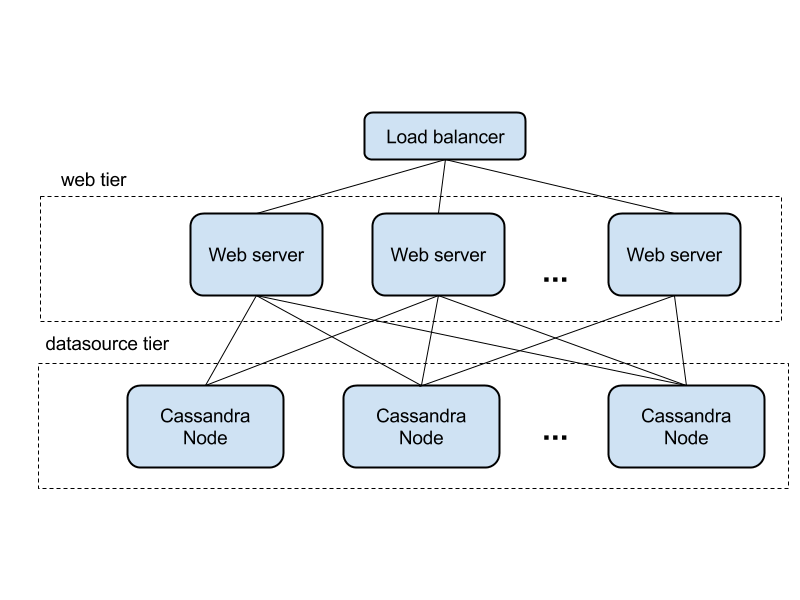

The shape of the architecture based on Cassandra storage is below. It is a classic two-layer web application in which each layer is horizontally scalable. Requests are routed by a load balancer to the web application layer and processed there.

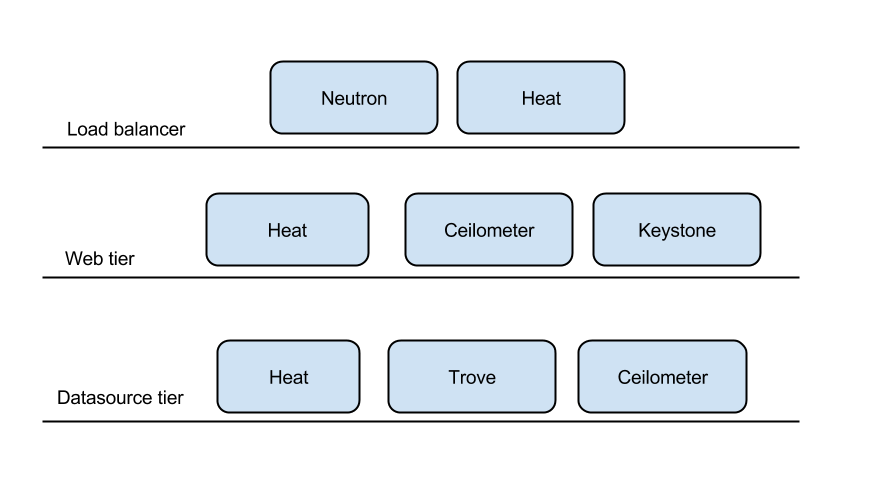

Projection onto existing OpenStack components

API

The DynamoDB API is documented very well in "Operations in Amazon DynamoDB". The key aspects are summarized below.

The API is based on HTTP requests and responses, with JSON as the data transfer format. The request body contains the payload, and HTTP headers carry the metadata.

Example of such a request:

    POST / HTTP/1.1
    host: dynamodb.us-west-2.amazonaws.com
    x-amz-date: 20130112T092034Z
    x-amz-target: DynamoDB_20120810.CreateTable
    Authorization: AWS4-HMAC-SHA256 Credential=AccessKeyID/20130112/us-west-2/dynamodb/aws4_request,SignedHeaders=host;x-amz-date;x-amz-target,Signature=145b1567ab3c50d929412f28f52c45dbf1e63ec5c66023d232a539a4afd11fd9
    content-type: application/x-amz-json-1.0
    content-length: 23
    connection: Keep-Alive

As you can see, the operation name and authorization data are sent in the HTTP request headers.
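Because the operation name travels in a header rather than in the URL, a server has to read `x-amz-target` before it can dispatch the request. The following is a minimal sketch of that step. The function name `parse_target` is hypothetical and is not taken from any MagnetoDB code; only the header name and the value format come from the example request.

```python
def parse_target(headers):
    """Split an x-amz-target value such as 'DynamoDB_20120810.CreateTable'."""
    # HTTP header names are case-insensitive, so normalise them before lookup.
    normalised = {name.lower(): value for name, value in headers.items()}
    target = normalised["x-amz-target"]
    api_version, _, operation = target.partition(".")
    if not operation:
        raise ValueError("malformed x-amz-target: %r" % target)
    return api_version, operation


example_headers = {
    "host": "dynamodb.us-west-2.amazonaws.com",
    "x-amz-date": "20130112T092034Z",
    "x-amz-target": "DynamoDB_20120810.CreateTable",
    "content-type": "application/x-amz-json-1.0",
}

print(parse_target(example_headers))  # ('DynamoDB_20120810', 'CreateTable')
```

The returned operation name could then be used as a key into a table of handlers, one per supported API call.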

Authorization

Authorization uses an HMAC signature based on EC2 credentials, which is sent in an HTTP request header as in the example above.
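The `AWS4-HMAC-SHA256` scheme named in the example header derives a signing key by chaining HMAC-SHA256 over the date, region, and service taken from the credential scope. The sketch below shows only that derivation and the final signing step. It assumes the string to sign has already been built from the canonical request, which is omitted here, and the secret key and helper names are placeholders chosen for illustration.

```python
import hashlib
import hmac


def _hmac_sha256(key, message):
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_key, date, region, service):
    """Derive the Signature Version 4 signing key from the credential scope."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(secret_key, date, region, service, string_to_sign):
    """Return the hex signature that goes into the Authorization header."""
    key = derive_signing_key(secret_key, date, region, service)
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


def signatures_match(expected, received):
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, received)


# Placeholder inputs; a real string to sign is built from the canonical request.
signature = sign("EXAMPLE-SECRET-KEY", "20130112", "us-west-2", "dynamodb",
                 "placeholder string to sign")
print(len(signature))  # 64
```

A service verifying a request would look up the secret for the access key ID in the `Credential` field, recompute the signature, and compare it with the one received. How MagnetoDB would obtain that secret from Keystone is not specified in this document.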

Table CRUD operations

The table operations let you create, list, and delete tables. In the first implementation the throughput configuration parameters can be ignored, so the UpdateTable operation has no effect.
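To make the table operations concrete, here is a minimal sketch of a CreateTable body and of handlers that ignore the throughput parameters. The body fields follow the DynamoDB 2012-08-10 API; the handler functions, the in-memory `tables` dictionary, and the table and attribute names are hypothetical and serve only as illustration.

```python
import json


def build_create_table_body(table_name, hash_key, hash_key_type="S"):
    """Build a CreateTable body for a table with a single hash key."""
    return json.dumps({
        "TableName": table_name,
        "AttributeDefinitions": [
            {"AttributeName": hash_key, "AttributeType": hash_key_type},
        ],
        "KeySchema": [{"AttributeName": hash_key, "KeyType": "HASH"}],
        # Sent for API compatibility; the hypothetical handlers below ignore it.
        "ProvisionedThroughput": {"ReadCapacityUnits": 5, "WriteCapacityUnits": 5},
    })


def create_table(tables, body):
    """Hypothetical handler: register the table and drop throughput settings."""
    request = json.loads(body)
    name = request["TableName"]
    if name in tables:
        raise ValueError("table already exists: %s" % name)
    tables[name] = {
        "AttributeDefinitions": request["AttributeDefinitions"],
        "KeySchema": request["KeySchema"],
    }
    return tables[name]


def update_table(tables, body):
    """Hypothetical handler: only throughput is sent here, so nothing changes."""
    request = json.loads(body)
    return tables[request["TableName"]]


tables = {}
create_table(tables, build_create_table_body("Thread", "ForumName"))
print(sorted(tables))  # ['Thread']
```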

Item CRUD operations

The item operations let you apply CRUD operations to your data. In addition, a request can be conditional, meaning that it is executed only if the item is in the state you defined in the request.
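A conditional write can be pictured as "check the current item, then write only if the check passes". The sketch below models that on a plain dictionary. The `expected` argument and the `ConditionFailed` exception are hypothetical simplifications and do not reproduce the exact DynamoDB request format, and a real backend would have to perform the check and the write atomically.

```python
class ConditionFailed(Exception):
    """Raised when the stored item is not in the expected state."""


def conditional_put(store, key, new_item, expected=None):
    """Write new_item under key only if the current item matches expected.

    expected maps an attribute name to the value it must currently have,
    or to None if the attribute must not exist yet.
    """
    current = store.get(key, {})
    for attribute, wanted in (expected or {}).items():
        if wanted is None:
            if attribute in current:
                raise ConditionFailed("%s already exists" % attribute)
        elif current.get(attribute) != wanted:
            raise ConditionFailed("%s is not %r" % (attribute, wanted))
    store[key] = dict(new_item)


store = {}
# Create the item only if it does not exist yet.
conditional_put(store, "user-1", {"id": "user-1", "visits": 1}, expected={"id": None})
# Update it only if nobody changed the counter in the meantime.
conditional_put(store, "user-1", {"id": "user-1", "visits": 2}, expected={"visits": 1})
print(store["user-1"]["visits"])  # 2
```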

The consistency of a GetItem operation can be chosen per request: eventual or strong.

More details can be found below.

Data querying

There are two ways to search the data: the Query and Scan operations.

Query operation searches only primary key attribute values and supports a subset of comparison operators on key attribute values to refine the search process. A Query operation always returns results, but can return empty results.

Scan operation examines every item in the table. You can specify filters to apply to the results to refine the values returned to you, after the scan has finished. [2]
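The difference between the two operations can be illustrated with a small in-memory model: a query goes straight to the items stored under one hash key, while a scan reads every item and filters afterwards. The `Table` class and its method names are hypothetical, and the sketch does not enforce primary key uniqueness.

```python
from collections import defaultdict


class Table:
    """Toy table keyed by a hash attribute and ordered by a range attribute."""

    def __init__(self, hash_key, range_key):
        self.hash_key = hash_key
        self.range_key = range_key
        self._by_hash = defaultdict(list)

    def put(self, item):
        self._by_hash[item[self.hash_key]].append(item)

    def query(self, hash_value, range_condition=None):
        """Touch only items with the given hash key; refine on the range key."""
        items = self._by_hash.get(hash_value, [])
        return [item for item in items
                if range_condition is None or range_condition(item[self.range_key])]

    def scan(self, item_filter=None):
        """Read every item in the table, then apply the filter to the results."""
        everything = [item for items in self._by_hash.values() for item in items]
        return [item for item in everything
                if item_filter is None or item_filter(item)]


posts = Table("author", "posted")
posts.put({"author": "alice", "posted": 1, "title": "intro"})
posts.put({"author": "alice", "posted": 3, "title": "update"})
posts.put({"author": "bob", "posted": 2, "title": "intro"})

print(len(posts.query("alice", lambda posted: posted >= 2)))  # 1
print(len(posts.scan(lambda item: item["title"] == "intro")))  # 2
print(posts.query("carol"))  # []
```

The query can only restrict key attributes, but it never looks at bob's items. The scan can filter on any attribute at the cost of reading the whole table.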

Batch operations

The batch operations are also supported.

Implementation

The technical concept is still in progress, but its shape is fairly clear, and the key points are described below.

Datasource pluggability

For backend storage provisioning and management, OpenStack DBaaS Trove will be used. The backend database should be pluggable, so that operators can choose the solution that best matches the technology stack of an existing or planned OpenStack installation. MySQL is one of the obvious options, being the de facto standard database for OpenStack and already supported by Trove.

Apache Cassandra looks very suitable for this case because of its tunable consistency, easy scalability, key-value data model, ring topology, and other features that give predictable high performance and fault tolerance. The Cassandra data model fits MagnetoDB's needs well.
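One common way to keep a datasource pluggable is to hide it behind a small abstract interface and select the implementation by name from configuration. The sketch below illustrates that idea only. Every class, method, and registry name is hypothetical, and the in-memory backend stands in for a real Cassandra or MySQL driver.

```python
from abc import ABC, abstractmethod


class StorageBackend(ABC):
    """Hypothetical contract every datasource plugin would implement."""

    @abstractmethod
    def create_table(self, name):
        """Create an empty table."""

    @abstractmethod
    def put_item(self, table, key, item):
        """Store an item under its primary key."""

    @abstractmethod
    def get_item(self, table, key, consistent=False):
        """Return the item or None; consistent asks for a strong read."""


class InMemoryBackend(StorageBackend):
    """Stand-in backend; a single process is always strongly consistent."""

    def __init__(self):
        self._tables = {}

    def create_table(self, name):
        self._tables.setdefault(name, {})

    def put_item(self, table, key, item):
        self._tables[table][key] = dict(item)

    def get_item(self, table, key, consistent=False):
        return self._tables[table].get(key)


# Registry that a configuration option could index into.
BACKENDS = {"memory": InMemoryBackend}


def load_backend(name):
    try:
        return BACKENDS[name]()
    except KeyError:
        raise ValueError("unknown storage backend: %s" % name) from None


backend = load_backend("memory")
backend.create_table("Thread")
backend.put_item("Thread", "t-1", {"Subject": "hello"})
print(backend.get_item("Thread", "t-1", consistent=True))  # {'Subject': 'hello'}
```

With Cassandra as the backend, the `consistent` flag could plausibly be mapped onto its tunable consistency levels, although this document does not define that mapping.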

OpenStack component reuse

As an OpenStack service, MagnetoDB must and will use the OpenStack infrastructure wherever possible. For most of the core modules, the corresponding OpenStack components are listed in the table below.

| Purpose | OpenStack component |
|---|---|
| Provisioning of web tier | Heat, Mistral (Convection) |
| Load balancer | LBaaS, Neutron |
| Autoscaling of web tier | Heat autoscaling, Ceilometer |
| Data Source provisioning and management | Trove |
| Monitoring | Ceilometer |
| Authentication | Keystone |

Autoscaling

MagnetoDB should scale according to the load and the amount of data it handles. A Heat autoscaling group will be used to achieve this.

Autoscaling of the datasource is expected to be implemented in Trove.

CI/CD

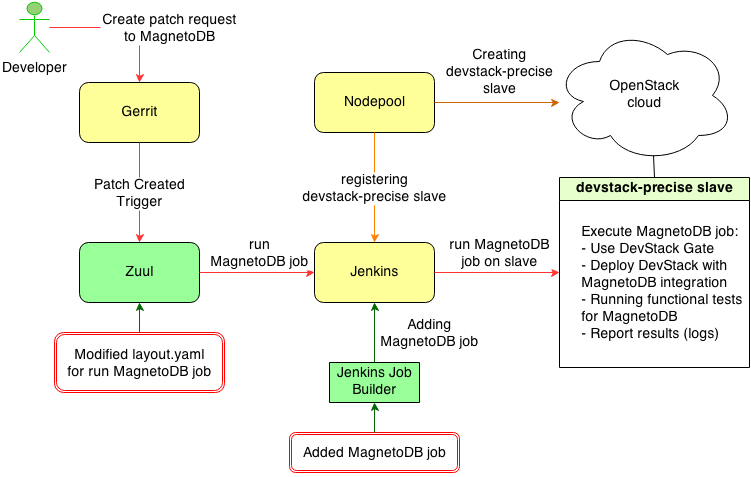

CI/CD concept

There are several ways to integrate with OpenStack Infra:

- Add our own public cloud to Infra's Nodepool as a new provider, which will supply the specific environment (Jenkins slaves) for the MagnetoDB project, and add our own Jenkins job and script to Infra for this. Use the OpenStack Infra infrastructure (Jenkins, Zuul, etc.) for all tasks.

- Add our own Jenkins to Infra's Gearman (Zuul) via the Jenkins Gearman plugin. This Jenkins will contain the jobs and slaves for the MagnetoDB project. Infra's Zuul will be used for orchestration.

- Gerrit layer integration. Use the Jenkins Gerrit Trigger plugin or Zuul to integrate with OpenStack Gerrit, and our own environment for CI. This is described at http://ci.openstack.org/third_party.html.

- Full integration. Add our own job to Jenkins Job Builder and make some changes to layout.yaml (Zuul). Use Infra's slaves to run the job.

The diagram describes the full integration method. It shows the standard OpenStack Infra workflow for the main components (Jenkins, Zuul, Nodepool). The red rectangles mark the steps required for Infra integration:

- Prepare a new Jenkins job in .yaml format and add it to Jenkins Job Builder.

- Modify layout.yaml (Zuul) to add the new job to a pipeline.

Useful links

- Source code

- Project space

- Blueprints

- Bugs

- Patches on review

- IRC logs

- IRC server: irc.freenode.net, channel: #magnetodb

Improve MagnetoDB

Where can I discuss & propose changes?

- Our IRC channel: #magnetodb on irc.freenode.net;

- OpenStack mailing list: openstack-dev@lists.openstack.org (see subscription and usage instructions);

- MagnetoDB team on Launchpad: Questions & Answers/Bugs/Blueprints.

How can I start?

It is extremely simple to participate in the different MagnetoDB development lines. The MagnetoDB Launchpad page contains a wide range of tasks well suited for starting to contribute to MagnetoDB. You can choose any unassigned bug or blueprint. As soon as you have chosen a bug, just assign it to yourself and start fixing it. If you would like to choose a blueprint, please contact the core team on the #magnetodb IRC channel on irc.freenode.net.

Most bugs and blueprints contain a basic description of what is to be done; if you have questions or want to share your ideas, be sure to contact us on IRC.

How to contribute?

- First of all, you need a Launchpad account. Make sure Launchpad has your SSH key, because Gerrit (the code review system) uses it;

- Sign the Contributors License Agreement as outlined in section 3 of the How To Contribute wiki page;

- Tell git your details:

git config --global user.name "Firstname Lastname"

git config --global user.email "your_email@youremail.com"

- Install git-review. This tool takes a lot of the pain out of remembering commands to push code up to Gerrit for review and to pull it back down to edit it. It is installed using:

pip install git-review

NOTE: Several Linux distributions (notably Fedora 16 and Ubuntu 12.04) are also starting to include git-review in their repositories, so it can also be installed using the standard package manager;

- Grab the MagnetoDB repository:

git clone git@github.com:stackforge/magnetodb.git

- Check out a new branch to hack on:

git checkout -b TOPIC-BRANCH

- Start coding;

- Run the test suite locally to make sure nothing broke, e.g.:

./run_tests.sh

or you can use the tox test command line tool (install it with pip install tox).

NOTE: If you extend MagnetoDB with new functionality, make sure you have also provided unit tests for it;

- Commit your work using:

git commit -a

or you can use the following to edit a previous commit:

git commit -a --amend

NOTE: Make sure you have supplied your commit with a neat commit message, containing a link to the corresponding blueprint/bug, if appropriate;

- Push the commit up for code review using:

git review

That is the awesome tool you installed earlier that does a lot of hard work for you;

- Watch your email or the review site. Your code will automatically be sent through a battery of tests on our Jenkins setup, and the core team for the project will review it. If there are any changes that should be made, they will let you know;

- When all is good the review site will automatically merge your code.

(This tutorial is based on: http://www.linuxjedi.co.uk/2012/03/real-way-to-start-hacking-on-openstack.html)

What are Code Review Guidelines?

Sub pages

- MagnetoDB/Code Review Guidelines

- MagnetoDB/DeveloperGuide

- MagnetoDB/FAQ

- MagnetoDB/How To Contribute

- MagnetoDB/How to release

- MagnetoDB/ListOfContributors

- MagnetoDB/Logging configs

- MagnetoDB/PTL Elections Juno

- MagnetoDB/PTL Elections Kilo

- MagnetoDB/QA/Master test plan

- MagnetoDB/QA/Organization of tests in CI

- MagnetoDB/QA/Test cases

- MagnetoDB/QA/Test process

- MagnetoDB/QA/Test statistics

- MagnetoDB/QA/Tests on env with devstack

- MagnetoDB/QA/Tests on local env workflow

- MagnetoDB/Roadmap

- MagnetoDB/Tools/DevStackIntegration

- MagnetoDB/WeeklyMeetingAgenda

- MagnetoDB/WeeklyMeetingArchive

- MagnetoDB/specs/

- MagnetoDB/specs/all old for put item

- MagnetoDB/specs/async-schema-operations

- MagnetoDB/specs/bulkload-api

- MagnetoDB/specs/data-api

- MagnetoDB/specs/monitoring-api

- MagnetoDB/specs/monitoring-health-check

- MagnetoDB/specs/notification

- MagnetoDB/specs/rbac

- MagnetoDB/specs/requestmetrics

- MagnetoDB/specs/streamingbulkload

- MagnetoDB/specs/template

- MagnetoDB/specs/uuid-for-a-table