Image handling in edge environment

Revision as of 11:43, 7 August 2018

This page contains a summary of the Vancouver Forum discussions about the topic. Full notes of the discussion are here. The features and requirements for edge cloud infrastructure are described in OpenStack_Edge_Discussions_Dublin_PTG.

Source of the figures is here.

Synchronisation strategies

- Copy every image to every edge cloud instance: the simplest and least optimal solution

- Copy images only to those edge cloud instances where they are needed

- Provide a synchronisation policy together with the image

- Rely on the pulling of the images
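The four strategies differ mainly in which edge cloud instances receive an image ahead of first use. The sketch below illustrates that difference. The strategy names, the `Image` class and its `sync_policy` field are hypothetical and are not part of Glance.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Image:
    name: str
    # Hypothetical per-image policy: the sites that should receive this image.
    sync_policy: Set[str] = field(default_factory=set)


def push_targets(strategy: str, image: Image, all_sites: Set[str],
                 sites_needing: Set[str]) -> Set[str]:
    """Return the edge sites an image is pushed to under a given strategy."""
    if strategy == "copy_everywhere":
        return set(all_sites)
    if strategy == "copy_where_needed":
        return all_sites & sites_needing
    if strategy == "policy":
        return all_sites & image.sync_policy
    if strategy == "pull":
        return set()  # nothing is pushed; each site fetches on first use
    raise ValueError(f"unknown strategy: {strategy}")


sites = {"edge-a", "edge-b", "edge-c"}
img = Image("cirros", sync_policy={"edge-b"})
for name in ("copy_everywhere", "copy_where_needed", "policy", "pull"):
    print(name, sorted(push_targets(name, img, sites, {"edge-a"})))
```

With the pull strategy the target set is empty, which is why the first use of an image at a site always requires a working connection to the source.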

Architecture options for Glance

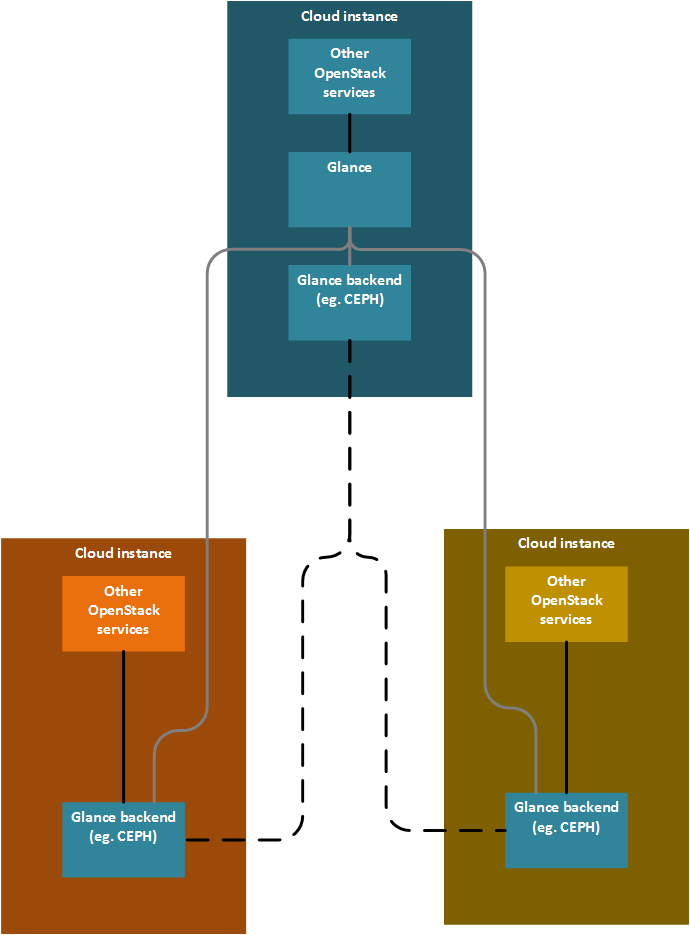

One Glance with multiple backends

There is one central Glance that is capable of handling multiple backends. Every edge cloud instance is represented by a Glance backend in the central Glance. Every edge cloud instance runs a Glance API server that is configured to pull images from the central Glance and to use a local cache. All Glance API servers use a shared database; clustering the database is outside Glance's responsibility. Each Glance API server can access the others' images, but uses its own by default. When accessing a remote image, the Glance API server streams the image from the remote location (in this sense this is pull mode). To achieve a push strategy with appropriate backup, it is possible to configure replication of the images to every location, but in this case the distribution is not location aware. OpenStack services in the edge cloud instances use the images in the Glance backend via a direct URL.

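Work on multiple backends has already started with an etherpad (https://etherpad.openstack.org/p/glance-multi-store-ceph-backend-support) and a spec (https://review.openstack.org/#/c/562467/).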

Cascading (one edge cloud instance is both the receiver and the source of images at the same time) is possible if the central Glance is able to orchestrate the synchronisation of images.
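The read path described above (own backend by default, otherwise stream from a remote backend and keep a local copy) can be sketched with in-memory stand-ins. This is only an illustration under stated assumptions: `Backend` and `EdgeGlanceAPI` are hypothetical classes, not Glance code, and the shared database is reduced to a dictionary of backends that every API server can see.

```python
from typing import Dict, Iterator


class Backend:
    """In-memory stand-in for the image store of one edge cloud instance."""

    def __init__(self, site: str) -> None:
        self.site = site
        self.images: Dict[str, bytes] = {}

    def stream(self, image_id: str, chunk_size: int = 4) -> Iterator[bytes]:
        """Yield the image data in chunks, as a remote read would."""
        data = self.images[image_id]
        for start in range(0, len(data), chunk_size):
            yield data[start:start + chunk_size]


class EdgeGlanceAPI:
    """API server at one site: prefers its own backend, caches remote reads."""

    def __init__(self, site: str, backends: Dict[str, Backend]) -> None:
        self.site = site
        self.backends = backends          # every backend known centrally
        self.cache: Dict[str, bytes] = {}  # never expired or invalidated
        self.remote_reads = 0

    def get(self, image_id: str) -> bytes:
        local = self.backends[self.site]
        if image_id in local.images:       # own backend is the default
            return local.images[image_id]
        if image_id in self.cache:         # earlier remote read
            return self.cache[image_id]
        for backend in self.backends.values():
            if backend.site != self.site and image_id in backend.images:
                self.remote_reads += 1
                data = b"".join(backend.stream(image_id))
                self.cache[image_id] = data
                return data
        raise KeyError(image_id)


backends = {name: Backend(name) for name in ("edge-a", "edge-b")}
backends["edge-a"].images["img-1"] = b"0123456789"
api_b = EdgeGlanceAPI("edge-b", backends)
api_b.get("img-1")   # streamed from edge-a
api_b.get("img-1")   # served from the local cache
print(api_b.remote_reads)  # → 1
```

The cache in this sketch has no expiry and no invalidation hook, which mirrors two of the cons listed for this option.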

- Concerns/Questions

  - Network partitioning tolerance?

    - A network connection is required when a given image is started for the first time.

  - Is it safe to store database credentials in the far edge? (It is not possible to provide image access without a network connection and not store the database credentials in the edge cloud instance.)

    - It is unavoidable in this case.

  - Can the OpenStack services running in the edge cloud instances use the images from the local Glance? There is a worry that OpenStack services (e.g. nova) still need to get the direct URL via the Glance API, which is ONLY available at the central site.

    - This can be avoided by allowing Glance to access all the CEPH instances as backends.

  - Is the CEPH backend in the figure CEPH block or CEPH RGW?

    - Glance talks directly to the CEPH block. As an alternative, it is possible to use Swift from Glance and use CEPH RGW as a Swift backend.

  - Did I get it correctly that the CEPH backends are in a replication configuration?

    - Not always.

- Pros

  - Relatively easy to implement based on the current Glance architecture

- Cons

  - Requires the same Glance backend in every edge cloud instance

  - Requires the same OpenStack version in every edge cloud instance (apart from during upgrade)

  - Sensitivity to network connection loss is not clear

  - There is no explicit control over how long an image stays cached

  - It is not possible to invalidate an image in the cache

  - It is mandatory to store the Glance database credentials in every edge cloud instance

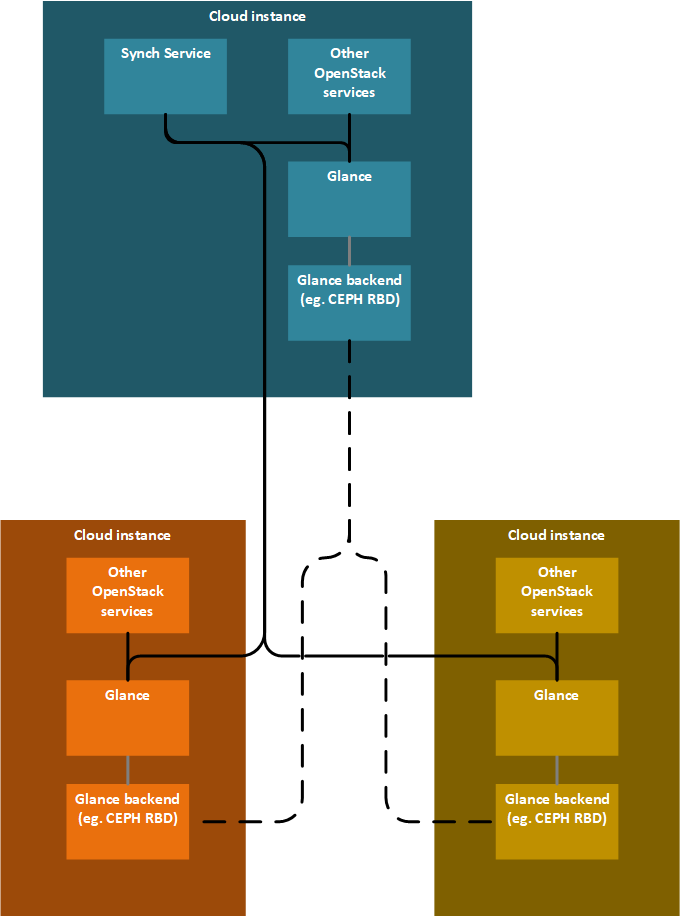

Several Glances with an independent synchronisation service, sync via Glance API

Every edge cloud instance has its own Glance instance. There is a synchronisation service that is able to instruct the Glances to do the synchronisation. The synchronisation of the image data is done using Glance APIs.

Cascading is visible to the synchronisation service only, not to Glance.
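A minimal sketch of such a synchronisation service follows, assuming each site's Glance can be reduced to four operations: show metadata, download data, create a record and upload data. `FakeGlance` and its method names are hypothetical in-memory stand-ins. In a real deployment they would presumably map onto the corresponding Image service API calls.

```python
from typing import Dict, List


class FakeGlance:
    """In-memory stand-in for the Glance of one edge cloud instance."""

    def __init__(self, site: str) -> None:
        self.site = site
        self.records: Dict[str, dict] = {}  # image_id -> metadata
        self.data: Dict[str, bytes] = {}    # image_id -> image payload

    def show(self, image_id: str) -> dict:
        return dict(self.records[image_id])

    def download(self, image_id: str) -> bytes:
        return self.data[image_id]

    def create(self, image_id: str, metadata: dict) -> None:
        self.records[image_id] = dict(metadata)

    def upload(self, image_id: str, payload: bytes) -> None:
        self.data[image_id] = payload


def sync_image(image_id: str, source: FakeGlance,
               targets: List[FakeGlance]) -> List[str]:
    """Copy one image from source to each target; return the sites updated."""
    metadata = source.show(image_id)
    payload = source.download(image_id)
    updated = []
    for target in targets:
        if image_id in target.data:
            continue  # already present at this site
        target.create(image_id, metadata)
        target.upload(image_id, payload)
        updated.append(target.site)
    return updated


central = FakeGlance("central")
central.create("img-1", {"name": "cirros", "disk_format": "qcow2"})
central.upload("img-1", b"image-bytes")
regional, far_edge = FakeGlance("regional"), FakeGlance("far-edge")

# Cascading: the regional site is first a receiver, then a source.
sync_image("img-1", central, [regional])
sync_image("img-1", regional, [far_edge])
print(far_edge.show("img-1")["name"])  # → cirros
```

Each Glance only ever sees ordinary read and write calls. The knowledge that the regional site acts as an intermediate hop lives entirely in the order of the `sync_image` calls, that is, in the synchronisation service.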

- Pros

  - Every edge cloud instance can have a different Glance backend

  - Can support multiple OpenStack versions in the different edge cloud instances

  - Can be extended to support multiple VIM types

- Cons

  - Needs a new synchronisation service

Several Glances with an independent synchronisation service, sync using the backend

Every edge cloud instance has its own Glance instance. There is a synchronisation service that is able to instruct the Glances to do the synchronisation. The synchronisation of the image data is the responsibility of the backend (e.g. CEPH).

Cascading is visible to the synchronisation service only, not to Glance.

- Concerns/Questions

  - Is the CEPH backend in the figure CEPH block or CEPH RGW?

- Pros

- Cons

  - Needs a new synchronisation service

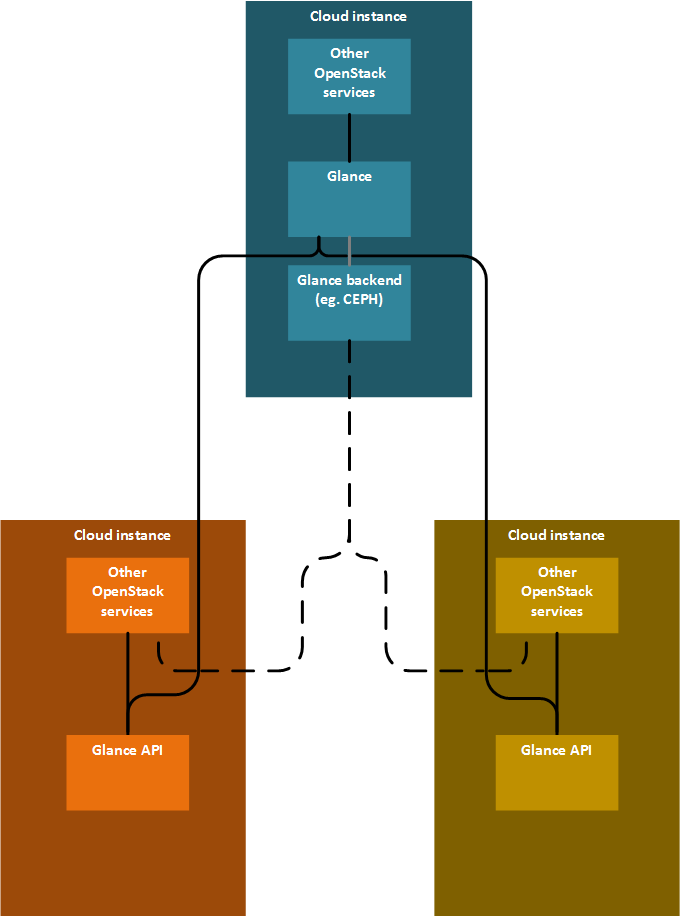

One Glance and multiple Glance API servers

There is one central Glance and every edge cloud instance runs a separate Glance API server. These Glance API servers communicate with the central Glance. The backend is accessed centrally; there is only caching in the edge cloud instances. Nova image caching caches at the compute node, which would work well for all-in-one or small edge clouds. glance-api caching caches at the edge cloud level, so it works better for large edge clouds with many compute nodes.

Cascading is not possible, as only the pull strategy works in this case.
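The difference between the two caching levels can be illustrated by counting downloads from the central site. The sketch below is a simplified model, not Nova or Glance code: `Central` and `PullThroughCache` are hypothetical classes, and the edge cloud is assumed to have ten compute nodes that all boot the same image.

```python
from typing import Dict


class Central:
    """Stand-in for the central Glance; counts how often data is pulled."""

    def __init__(self, images: Dict[str, bytes]) -> None:
        self.images = images
        self.downloads = 0

    def fetch(self, image_id: str) -> bytes:
        self.downloads += 1
        return self.images[image_id]


class PullThroughCache:
    """Fetch from upstream on first use, then serve the stored copy."""

    def __init__(self, upstream) -> None:
        self.upstream = upstream
        self.store: Dict[str, bytes] = {}

    def fetch(self, image_id: str) -> bytes:
        if image_id not in self.store:
            self.store[image_id] = self.upstream.fetch(image_id)
        return self.store[image_id]


NODES = 10

# Caching at the compute nodes only: every node pulls from the central site.
central_a = Central({"img-1": b"payload"})
for node in [PullThroughCache(central_a) for _ in range(NODES)]:
    node.fetch("img-1")

# Caching at the edge cloud level in front of the same nodes.
central_b = Central({"img-1": b"payload"})
edge_cache = PullThroughCache(central_b)
for node in [PullThroughCache(edge_cache) for _ in range(NODES)]:
    node.fetch("img-1")

print(central_a.downloads, central_b.downloads)  # → 10 1
```

In both variants the very first fetch still goes to the central site, which matches the con that the first usage of an image always takes a long time.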

- Concerns/Questions

  - Do we plan to use Nova image caching or caching in the Glance API server?

  - Are the image metadata also cached in the Glance API server, or only the images?

- Pros

  - Implicitly location aware

- Cons

  - First usage of an image always takes a long time

  - If the network connection to the central Glance fails, Nova will have access to the images, but will not be able to determine whether the user has the right to use an image and will not have a path to the image data