Heat/AutoScaling


Revision as of 21:53, 2 July 2013

Heat Autoscaling now and beyond

AS == AutoScaling

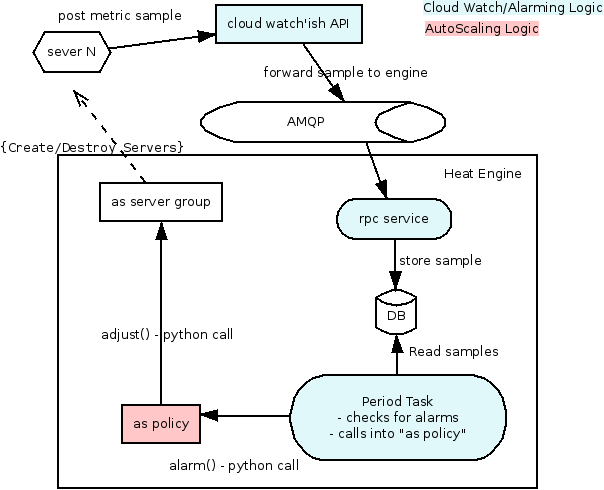

Now

The AWS-compatible AS implementation is broken into a number of logical objects:

- AS group (heat/engine/resources/autoscaling.py)

- AS policy (heat/engine/resources/autoscaling.py)

- AS Launch Config (heat/engine/resources/autoscaling.py)

- Cloud Watch Alarms (heat/engine/resources/cloud_watch.py, heat/engine/watchrule.py)

Dependencies

Note that the in-template resource dependencies are:

- Alarm

- Group

- Policy

- Group

- Launch Config

- [Load Balancer] - optional

- Group

This means the creation order should be [LB, LC, Group, Policy, Alarm].
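The creation order is a topological sort of the dependency graph. A minimal sketch using Python's standard `graphlib` module (Python 3.9+), assuming the edges implied by the creation order above (the Group depends on the Launch Config and the optional Load Balancer, the Policy on the Group, the Alarm on the Policy and the Group); the names are illustrative labels, not Heat resource types:

```python
from graphlib import TopologicalSorter

# Each key maps to the resources it depends on (which must be created first).
# These edges are an assumption reconstructed from the creation order above.
DEPENDENCIES = {
    "Alarm": {"Policy", "Group"},
    "Policy": {"Group"},
    "Group": {"LaunchConfig", "LoadBalancer"},  # LoadBalancer is optional
    "LaunchConfig": set(),
    "LoadBalancer": set(),
}


def creation_order(deps):
    """Return the resources in an order where every dependency comes first."""
    return list(TopologicalSorter(deps).static_order())


if __name__ == "__main__":
    print(creation_order(DEPENDENCIES))
```

The relative order of independent resources (LB and LC here) is not fixed; any order that respects the edges is valid, and deleting the stack would naturally walk the same order in reverse.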

When a stack is created with these resources the following happens:

- Alarm: the alarm rule is written into the DB

- Policy: nothing interesting happens

- LaunchConfig: it is just stored

- Group: the launch config is used to create the initial number of servers.

- the new servers start posting samples back to the CloudWatch API

When an alarm is triggered in watchrule.py the following happens:

- the periodic task runs the watch rule

- when the alarm is triggered, it calls the policy resource directly in Python (policy.alarm())

- the policy figures out whether it needs to adjust the group size; if it does, it calls group.adjust() (again a direct Python call)
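The in-process call chain can be sketched as below. The classes are simplified, hypothetical stand-ins for the resources in autoscaling.py and watchrule.py, not Heat's actual code, and only a "add N servers" style adjustment is modelled:

```python
class Group:
    """Hypothetical stand-in for the AS group resource."""

    def __init__(self, size, min_size, max_size):
        self.size = size
        self.min_size = min_size
        self.max_size = max_size

    def adjust(self, delta):
        # Apply the change, clamped to the group's bounds.
        self.size = max(self.min_size, min(self.max_size, self.size + delta))


class Policy:
    """Hypothetical stand-in for the AS policy resource."""

    def __init__(self, group, scaling_adjustment):
        self.group = group
        self.scaling_adjustment = scaling_adjustment

    def alarm(self):
        # Decide whether an adjustment is needed, then call the group directly.
        if self.scaling_adjustment != 0:
            self.group.adjust(self.scaling_adjustment)


class WatchRule:
    """Hypothetical stand-in for a watch rule run by a periodic task."""

    def __init__(self, threshold, policy):
        self.threshold = threshold
        self.policy = policy

    def run(self, latest_sample):
        # When the alarm fires, invoke the policy with a plain Python call.
        if latest_sample > self.threshold:
            self.policy.alarm()
            return "ALARM"
        return "NORMAL"
```

Everything here happens inside one process; in the proposed design each of these direct calls becomes a REST call between services.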

Beyond

The following blueprint and its dependents *should* accurately reflect the design laid out in this document: https://blueprints.launchpad.net/heat/+spec/heat-autoscaling

Use Cases

- Administrators want to manually add or remove (*specific*) instances from an instance group (e.g. because one node was compromised or had some critical error).

- External automated tools may want to adjust the number of instances in an instance group (e.g. AutoScaling)

- Developers want to integrate with AutoScale without using Heat templates

General Ideas

- Make Heat responsible for "Scaling" without the "Auto"

- Make the AS policy a separate service

- Use ceilometer (pluggable monitoring)

Basically the same steps happen, except that the Python calls become REST calls.

Scaling

"Scaling" (without the "Auto") will be a part of Heat. OS::Heat::InstanceGroup will maintain a sub-stack that contains all of the individual Instances so that they can be addressed for orchestration purposes.

External agents or services will be able to interact with the InstanceGroup to implement various use cases like autoscaling and manual administrator intervention (to manually remove or add instances for example). Tools can use the normal resource API to look at the instances in the sub-stack, but adding/removing instances should only be done on the InstanceGroup directly (either via a webhook to adjust the size or via other property manipulation through the API). (This addresses use cases #1 and #2 above).
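A minimal sketch of the two operations the InstanceGroup would expose: resizing, and removing a *specific* instance. The hypothetical class models the sub-stack as an insertion-ordered dict of instance names; the naming scheme, the oldest-first removal rule and the method names are assumptions, not Heat's API:

```python
import itertools


class InstanceGroup:
    """Hypothetical model of an InstanceGroup owning a sub-stack of Instances."""

    def __init__(self, name, size):
        self.name = name
        self._counter = itertools.count()
        self.substack = {}  # instance name -> properties, oldest first
        self.resize(size)

    def _add_instance(self):
        name = f"{self.name}-{next(self._counter)}"
        self.substack[name] = {"group": self.name}  # record the owning group

    def resize(self, size):
        """Grow or shrink the sub-stack to exactly `size` instances (use case #2)."""
        if size < 0:
            raise ValueError("size must be non-negative")
        while len(self.substack) < size:
            self._add_instance()
        while len(self.substack) > size:
            # Drop the oldest instance first; this is one possible choice.
            del self.substack[next(iter(self.substack))]

    def remove_instance(self, name, replace=True):
        """Remove one specific instance, e.g. a compromised node (use case #1).

        With replace=True a fresh instance is added so the group size is kept.
        """
        del self.substack[name]
        if replace:
            self._add_instance()
```

Tools can inspect `substack`, but membership only changes through the group's own methods, mirroring the rule that adding and removing instances goes through the InstanceGroup.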

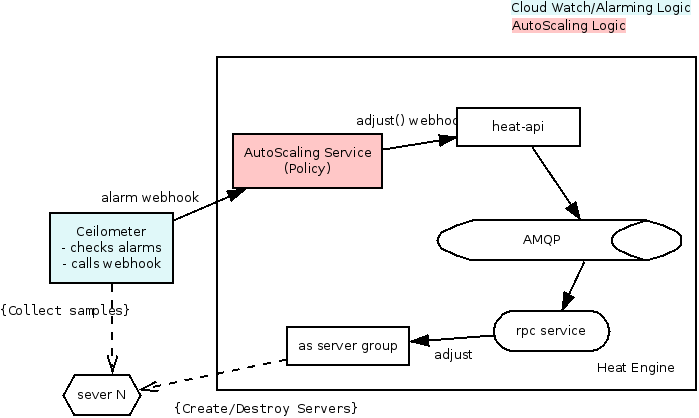

AutoScaling

AutoScaling will be delegated to a service external to Heat (but implemented inside the Heat project/codebase). The AutoScaling service is simple. It knows only about AutoScalingGroups and ScalingPolicies. Monitoring services (e.g. Ceilometer) will tell the AutoScaling service to execute policies, and the AutoScaling service will execute those policies by talking to the Heat API.

The communication is as follows:

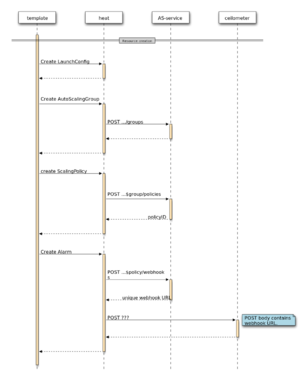

- When AutoScaling resources are created in Heat, they will register the data with the AutoScaling service via POSTs to its API. This includes the AutoScalingGroup and the ScalingPolicy.

- When Ceilometer (or any other monitoring service) hits an AutoScaling webhook, the AutoScaling service will execute the associated policy (unless it's on cooldown).

- During policy execution, the AutoScaling service will talk to Heat to manipulate the AutoScalingGroup that lives within Heat.
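The AS service side of this flow can be sketched as an in-memory registry. This is a hypothetical sketch: the method names, the webhook-ID scheme and the `heat_adjust` callable (standing in for the REST call to the Heat API) are assumptions, and persistence and authentication are left out:

```python
import time
import uuid


class AutoScalingService:
    """Hypothetical in-memory model of the external AS service."""

    def __init__(self, heat_adjust, clock=time.monotonic):
        # heat_adjust(group_id, adjustment) stands in for the REST call to Heat.
        self.heat_adjust = heat_adjust
        self.clock = clock
        self.groups = set()
        self.policies = {}  # webhook id -> policy record

    def register_group(self, group_id):
        """Called (via POST) when Heat creates an AutoScalingGroup."""
        self.groups.add(group_id)

    def register_policy(self, group_id, adjustment, cooldown):
        """Called (via POST) when Heat creates a ScalingPolicy; returns a webhook id."""
        if group_id not in self.groups:
            raise KeyError(f"unknown group {group_id!r}")
        webhook_id = uuid.uuid4().hex
        self.policies[webhook_id] = {
            "group": group_id,
            "adjustment": adjustment,
            "cooldown": cooldown,
            "last_run": None,
        }
        return webhook_id

    def hit_webhook(self, webhook_id):
        """Execute the policy behind a webhook unless it is on cooldown."""
        policy = self.policies[webhook_id]
        now = self.clock()
        last = policy["last_run"]
        if last is not None and now - last < policy["cooldown"]:
            return False  # still cooling down: ignore this trigger
        # During execution the AS service talks to Heat to resize the group.
        self.heat_adjust(policy["group"], policy["adjustment"])
        policy["last_run"] = now
        return True
```

Passing Heat in as a callable keeps the service ignorant of Heat internals: it only knows about groups, policies and the one call it makes back.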

We would like to implement very basic support in the AS-Service daemon for running launch configurations that are specified directly through the API, without Heat resources (use case #3 above). Ideally, though, the common case will be the one where Heat resources are used.

When a stack is created with these resources the following happens:

- LaunchConfig: stored locally in Heat.

- AutoScalingGroup (subclass of InstanceGroup):

  - Base InstanceGroup behavior:

    - A substack is created to represent all of the servers.

    - The launch config is used to create the initial number of servers in the substack.

    - The servers will be tagged with the group name so that metrics from Ceilometer can be aggregated.

  - AutoScalingGroup-specific behavior:

    - The scaling group will be registered (POST) with the AS service.

- Policy: the policy is registered (POST) with the AS service,

  - including a webhook pointing back to the Heat API, at the specific AutoScalingGroup, to adjust its size as required.

- Alarm: the alarm is created in Ceilometer via a POST (https://github.com/openstack/python-ceilometerclient/blob/master/ceilometerclient/v2/alarms.py). We pass it the above webhook. (Should this be done *via* the AS service, or straight from Heat?)

  - Ceilometer then starts collecting samples and calculating the thresholds.

When an alarm is triggered in Ceilometer the following happens:

- Ceilometer will POST to whatever webhook we supply (I'd guess a URL in the policy API)

- the policy figures out whether it needs to adjust the group size; if it does, it calls a heat-api webhook (something like PUT.../resources/<as-group>/adjust)
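The "does it need to adjust the group size" step could look like the function below. It borrows the three adjustment types used by AWS::AutoScaling::ScalingPolicy (`ChangeInCapacity`, `ExactCapacity`, `PercentChangeInCapacity`); the function itself, its percentage rounding rule and the min/max clamping are illustrative assumptions, not defined AS-service behavior. A `None` result would mean no heat-api call is made:

```python
import math


def new_capacity(current, adjustment_type, adjustment, min_size, max_size):
    """Return the new group size, or None if no change is needed.

    Hypothetical helper; the percentage rounding rule is an assumption.
    """
    if adjustment_type == "ChangeInCapacity":
        target = current + adjustment
    elif adjustment_type == "ExactCapacity":
        target = adjustment
    elif adjustment_type == "PercentChangeInCapacity":
        delta = current * adjustment / 100.0
        # Truncate toward zero, but move at least one instance if asked to move.
        step = math.trunc(delta)
        if step == 0 and delta != 0:
            step = 1 if delta > 0 else -1
        target = current + step
    else:
        raise ValueError(f"unknown adjustment type {adjustment_type!r}")

    # Keep the group within its configured bounds.
    target = max(min_size, min(max_size, target))
    return None if target == current else target
```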

Authentication

- how do we authenticate the request from ceilometer to AS?

- is this a special unprivileged user "ceilometer-alarmer" that we trust?

- at some point we need to get the correct user credentials to scale in the AS group.

Securing Webhooks

Many systems just treat the webhook URL as a secret (with a big random UUID in it, generated *per client*). I think this is actually fine, but it has two problems we can easily solve:

- there are lots of places other than the actual SSL stream where URLs can be seen, for example the logs of the Autoscale HTTP server.

- it's susceptible to replay attacks (if you sniff one request, you can resend it to keep repeating the same operation, like scaling up or down)

The first one is easy to solve by putting some important data into the POST body. The second one can be solved with a nonce that has a timestamp component.

The API for creating a webhook in the autoscale server should return two things: the webhook URL and a random signing secret. When Ceilometer (or any client) hits the webhook URL, it should do the following:

- include a "timestamp" argument with the current timestamp

- include a random nonce

- sign the request with the signing secret

(to solve the first problem from above, the timestamp and nonce should be in the POST request body instead of the URL)
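A client-side sketch of these steps, using HMAC-SHA256 from the Python standard library. The body field names (`timestamp`, `nonce`), the JSON serialisation and returning the signature separately from the body are assumptions; the proposal does not fix a wire format:

```python
import hashlib
import hmac
import json
import secrets
import time


def sign_webhook_request(signing_secret, payload=None, now=None):
    """Build a signed webhook POST body.

    Returns (body_bytes, signature_hex). The timestamp and nonce travel in the
    body rather than the URL, as the proposal suggests.
    """
    body = dict(payload or {})
    body["timestamp"] = int(time.time() if now is None else now)
    body["nonce"] = secrets.token_hex(16)
    # Serialise deterministically so the signature covers exactly these bytes.
    body_bytes = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
    signature = hmac.new(signing_secret, body_bytes, hashlib.sha256).hexdigest()
    return body_bytes, signature
```

The client would then POST `body_bytes` to the webhook URL with the signature attached, for example in a request header whose name would be up to the API design.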

Any time the AS service receives a webhook, it should:

- verify the signature

- ensure that the timestamp is reasonably recent (no more than minutes old, and no more than minutes into the future)

- check to see if the timestamp+nonce has been used recently (we only need to store the nonces used within that "reasonable" time window)
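The matching server-side checks, again as a sketch: it assumes a JSON body carrying `timestamp` and `nonce` signed with HMAC-SHA256, `max_skew` is an illustrative parameter (the proposal leaves the window size open), and the nonce cache lives in memory, whereas a real service would need it shared between API workers:

```python
import hashlib
import hmac
import json
import time


class WebhookVerifier:
    """Hypothetical verifier for signed webhook requests."""

    def __init__(self, signing_secret, max_skew=300, clock=time.time):
        self.signing_secret = signing_secret
        self.max_skew = max_skew  # seconds allowed into the past or future
        self.clock = clock
        self._seen = {}  # (timestamp, nonce) -> timestamp

    def verify(self, body_bytes, signature):
        # 1. Verify the signature with a constant-time comparison.
        expected = hmac.new(self.signing_secret, body_bytes, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, signature):
            return False
        body = json.loads(body_bytes)
        ts, nonce = body.get("timestamp"), body.get("nonce")
        if not isinstance(ts, int) or not isinstance(nonce, str):
            return False
        # 2. Reject timestamps outside the allowed window.
        now = self.clock()
        if abs(now - ts) > self.max_skew:
            return False
        # 3. Forget nonces older than the window, then reject replays.
        self._seen = {k: t for k, t in self._seen.items() if now - t <= self.max_skew}
        key = (ts, nonce)
        if key in self._seen:
            return False
        self._seen[key] = ts
        return True
```

Because step 2 already rejects anything outside the window, the nonce cache only ever has to hold entries from inside it.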

On top of all of this, of course, webhooks should be revocable.

[Qu] What if we do this in the context of Heat (where the DB is not accessible from the API daemon)?

- We are going to have to send all webhooks to the heat-engine for verification.

- This is because we can't check the UUID in the API, which makes a DoS attack very easy. Any idea how to solve this?

[An] This doesn't sound like a unique problem; it should be solved with rate limiting, as other parts of OpenStack do.
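Rate limiting of this kind is commonly done with a token bucket per client (for example per source address or per webhook ID), checked in the API daemon before anything is forwarded to the heat-engine. A minimal sketch; the rate and burst values in the test are arbitrary, and this is not the middleware any OpenStack project actually ships:

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One bucket per client key, checked before any engine/DB round trip."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets = {}

    def allow(self, key):
        bucket = self.buckets.get(key)
        if bucket is None:
            bucket = self.buckets[key] = TokenBucket(self.rate, self.capacity, self.clock)
        return bucket.allow()
```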

[Qu] Why make Autoscale a separate service?

[An] To clarify, service == REST server (to me)

Initially, because someone wanted it separate (Rackers). But I think it is the right approach in the long term.

Heat should not be in the business of implementing too many services internally, but rather having resources to orchestrate them.

monitoring <> Xaas.policy <> heat.resource.action()

Some cool things we could do with this:

- better instance HA (restarting servers when they are ill) - and smarter logic defining what is "ill"

- autoscaling

- energy saving (could be linked to autoscaling)

- automated backup (calling snapshots at regular time periods)

- autoscaling using shelving? (maybe for faster response)

I guess we could put all this into one service (an all-purpose policy service)?
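One way to read the `monitoring <> Xaas.policy <> heat.resource.action()` idea as an all-purpose policy service: a trigger (from monitoring, or a timer for periodic backups) names a policy, and each policy maps to an action on a Heat resource. The sketch is hypothetical; the policy kinds and the `act` callable standing in for `heat.resource.action()` are illustrative only:

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class PolicySpec:
    kind: str      # e.g. "autoscale", "ha-restart", "backup" (illustrative)
    resource: str  # the Heat resource the action targets
    action: str    # the action to invoke on that resource
    params: dict


class PolicyService:
    """Hypothetical all-purpose policy service: trigger -> policy -> resource action."""

    def __init__(self, act: Callable[[str, str, dict], None]):
        self.act = act  # stands in for calling heat.resource.action()
        self.policies: Dict[str, PolicySpec] = {}

    def register(self, policy_id: str, policy: PolicySpec) -> None:
        self.policies[policy_id] = policy

    def trigger(self, policy_id: str) -> None:
        """Called by a monitoring service (or a scheduler) to run a policy."""
        p = self.policies[policy_id]
        self.act(p.resource, p.action, p.params)
```

Autoscaling, HA restarts and scheduled snapshots then differ only in what triggers the policy and which resource action it calls.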