Celery

Celery Homepage http://celeryproject.org/

Used in Distributed Task Management https://wiki.openstack.org/wiki/DistributedTaskManagement

OVERVIEW

Celery is a Distributed Task Queue.

"Celery is an asynchronous task queue/job queue based on distributed message passing. It is focused on real-time operation, but supports scheduling as well. The execution units, called tasks, are executed concurrently on a single or more worker servers using multiprocessing, Eventlet, or gevent. Tasks can execute asynchronously (in the background) or synchronously (wait until ready)."

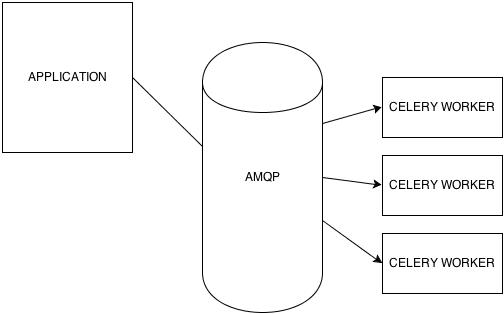

ARCHITECTURE

Celery works by posting task messages, asynchronously (or synchronously if designated), to any AMQP-compliant message queue. (A list of suggested implementations can be found here: http://docs.celeryproject.org/en/latest/getting-started/brokers/index.html). The queue does not have to be dedicated to your application, so it can be hosted locally, on another box, in a different project, etc. The tasks now sitting on the queue are picked up by the next available listening celery worker. These workers, like the queue, can be hosted locally, on an external host, or on multiple hosts.

If a worker goes down in the middle of task processing, the task message is never acknowledged, so the queue eventually redelivers it and another worker picks up and executes the task. This relies on the message being acknowledged after execution (the acks_late option); by default a worker acknowledges a message just before it starts executing the task. Assuming no errors, the worker will process and execute the task, then return the results up through the celery client (which is initialized inside your application) and back into the application. If a task does not get posted back (such as in the event of a connection error), the task can set its own individual retry settings, such as timeout, what action to take on failure, how many times to retry, etc.
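The acknowledgement behaviour described above can be illustrated with a small in-memory model. This is only a sketch in plain Python, not Celery or broker code: `ToyBroker`, `run_worker`, and the `crash` flag are hypothetical names, and the model assumes the worker acknowledges a message only after the work is done.

```python
from collections import deque


class ToyBroker:
    """In-memory stand-in for a message queue with acknowledgements."""

    def __init__(self):
        self.ready = deque()   # messages waiting for a worker
        self.unacked = {}      # delivery tag -> message currently held by a worker
        self._next_tag = 0

    def publish(self, message):
        self.ready.append(message)

    def deliver(self):
        """Hand the next message to a worker; it stays tracked until acked."""
        message = self.ready.popleft()
        self._next_tag += 1
        self.unacked[self._next_tag] = message
        return self._next_tag, message

    def ack(self, tag):
        """The worker finished: forget the message."""
        del self.unacked[tag]

    def connection_lost(self, tag):
        """The worker died before acking: put the message back on the queue."""
        self.ready.appendleft(self.unacked.pop(tag))


def run_worker(broker, crash=False):
    """Process one message, acknowledging only after the work is done."""
    tag, (func, args) = broker.deliver()
    if crash:
        broker.connection_lost(tag)   # simulate the worker going down mid-task
        return None
    result = func(*args)
    broker.ack(tag)
    return result


broker = ToyBroker()
broker.publish((sum, ([1, 2],)))
first = run_worker(broker, crash=True)   # first worker crashes, nothing is acked
second = run_worker(broker)              # another worker picks the same task up
```

Because the crashed worker never acknowledged the message, it returns to the queue and the second worker completes it.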

USAGE

GENERAL

Celery tasks can be run as individual units, or chained up into workflows. Individual tasks are simply designated as follows:

    from celery import Celery

    app = Celery("tasks")  # broker and result backend configuration omitted

    @app.task
    def add(x, y):
        return x + y

You can either run a task immediately, or designate it as a subtask (a task to be run at a later time, either signaled by a user or an event). To run your task immediately, simply apply an async call to your task:

    task = add.delay(1, 2)
    task.get()  # waits for the worker to finish, then returns 3

Alternatively, you can designate a task as a subtask (called a signature in current Celery documentation), so that you can wire tasks together before execution. By default, celery passes the return value of the previous task as the first argument of the following task:

    from celery import chain

    task1 = add.s(1, 1)
    task2 = add.s(2)
    task3 = add.s(3)
    flow = chain(task1, task2, task3)
    results = flow.delay()
    results.get()  # 7
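To make the argument passing concrete, here is a plain-Python sketch of what the chain computes. It does not use Celery; `run_chain` is a hypothetical helper, and `functools.partial` stands in for a subtask that is still missing its first argument.

```python
from functools import partial, reduce


def add(x, y):
    return x + y


def run_chain(first, *rest):
    """Call `first`, then feed each result in as the next step's first argument."""
    return reduce(lambda previous, step: step(previous), rest, first())


# Mirrors add.s(1, 1), add.s(2), add.s(3): the later steps lack their first argument.
steps = [partial(add, 1, 1), partial(add, y=2), partial(add, y=3)]
result = run_chain(*steps)  # add(1, 1) -> 2, add(2, 2) -> 4, add(4, 3) -> 7
```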

Celery also allows for groups (a set of tasks to be run in parallel, which can itself be chained after a single task) and chords (a group of tasks running in parallel whose results are passed to a single task once all of them have finished).
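In Celery these primitives are importable as `group` and `chord`. The sketch below only models their semantics in plain Python with a thread pool, so it can be run without a broker; `run_group` and `run_chord` are hypothetical helpers, not Celery functions.

```python
from concurrent.futures import ThreadPoolExecutor


def add(x, y):
    return x + y


def run_group(calls):
    """Run independent (func, args) calls in parallel; results keep input order."""
    with ThreadPoolExecutor() as pool:
        futures = [pool.submit(func, *args) for func, args in calls]
        return [future.result() for future in futures]


def run_chord(calls, callback):
    """Run a group, then pass the list of its results to a single callback."""
    return callback(run_group(calls))


calls = [(add, (i, i)) for i in range(4)]
group_results = run_group(calls)        # [0, 2, 4, 6]
chord_result = run_chord(calls, sum)    # 12
```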

SIGNALS

Another celery feature worth mentioning is signals. Celery dispatches a signal when certain events occur (task_success, task_received, task_failure, etc.), and as a user you can define a handler for each of these signals. For instance, the distributed task project (https://wiki.openstack.org/wiki/DistributedTaskManagement) defines handlers for task success and task failure. The success handler takes the successful task and notifies all tasks connected to it in the workflow that it has completed successfully.
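A minimal sketch of such handlers follows. `celery.signals.task_success` and `celery.signals.task_failure` are Celery's signal objects; the handler names and the `completed` and `failed` lists are illustrative, and this is not the distributed task project's actual code. The import is placed inside `connect_handlers` purely so the handlers can be read and exercised on their own.

```python
completed = []   # (task name, result) pairs for tasks that succeeded
failed = []      # (task name, task id, exception) triples for tasks that failed


def on_task_success(sender=None, result=None, **kwargs):
    """Handler for task_success; `sender` is the task that ran."""
    completed.append((sender.name, result))


def on_task_failure(sender=None, task_id=None, exception=None, **kwargs):
    """Handler for task_failure; Celery passes the task id and the exception."""
    failed.append((sender.name, task_id, exception))


def connect_handlers():
    """Register the handlers; call this in the process that runs the tasks."""
    # Imported lazily so the handlers above can be used without Celery installed.
    from celery.signals import task_failure, task_success

    task_success.connect(on_task_success)
    task_failure.connect(on_task_failure)
```

Handlers accept `**kwargs` so that they keep working if a signal supplies additional arguments.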

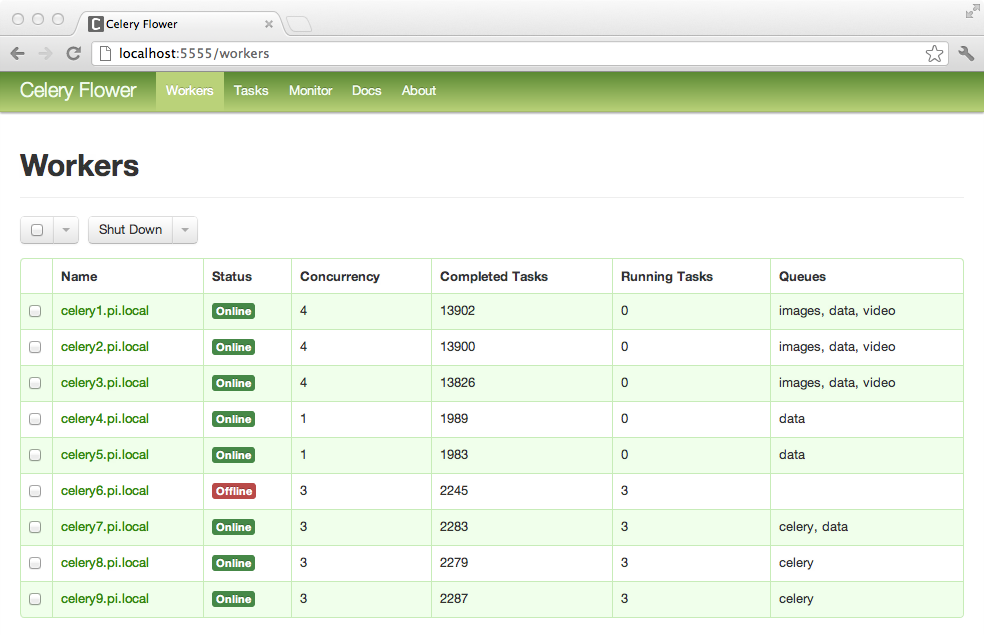

WORKERS

http://docs.celeryproject.org/en/latest/userguide/workers.html

Celery workers are efficient and highly customizable. Workers can enforce time limits on tasks (configured at start-up or changed at run time), set their concurrency level (the number of processes being run), and can even be set to autoscale. Workers can be assigned to specific queues and can be added to queues at run time. Although noted previously in ARCHITECTURE, it merits reiterating that a worker suffering a catastrophic failure need not prevent a task from finishing. If a worker is halfway through executing a task and crashes, the task message goes unacknowledged, and another functioning worker will come along and pick it up. This relies on late acknowledgement (the acks_late option); by default a worker acknowledges a message just before it starts executing the task.
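As a rough sketch of how these knobs might be set from Python: the keys below are Celery's lowercase configuration names, while the values, the `configure` and `consume_from` helpers, and the queue and worker names are illustrative assumptions. `app` is expected to be a Celery application object.

```python
# Setting names are Celery configuration keys; the values are only examples.
WORKER_SETTINGS = {
    "worker_concurrency": 4,      # number of pool processes per worker
    "task_soft_time_limit": 30,   # seconds before SoftTimeLimitExceeded is raised in the task
    "task_time_limit": 60,        # seconds before the process running the task is killed
    "task_acks_late": True,       # acknowledge after the task runs rather than before
}


def configure(app):
    """Apply the settings to a Celery app (any object with a dict-like `conf`)."""
    app.conf.update(WORKER_SETTINGS)
    return app


def consume_from(app, queue, worker_name):
    """Ask one running worker to start consuming from an additional queue."""
    return app.control.add_consumer(queue, reply=True, destination=[worker_name])
```

Autoscaling is requested when the worker is started, using the `--autoscale=max,min` command-line option.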

DEBUGGING

http://docs.celeryproject.org/en/latest/userguide/monitoring.html

Celery provides monitoring and inspection utilities at the queue, worker, and cluster level.

QUEUES

As celery sends tasks through AMQP, you can use whatever tools your AMQP queue provides to examine its contents.

WORKERS

Per individual worker, you can retrieve a dump of registered, currently executing, scheduled, and reserved tasks.

CLUSTERS

For each celery cluster, you can examine the status of all nodes, find results of individual tasks, inspect active/registered/scheduled/reserved/revoked tasks, and see worker stats.
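The inspections listed above are available programmatically through `app.control.inspect()`. The helpers below are a sketch, assuming `app` is a configured Celery application; `cluster_snapshot` and `task_outcome` are hypothetical names.

```python
def cluster_snapshot(app, timeout=1.0):
    """Collect per-worker task listings; each value maps worker name to its reply."""
    inspector = app.control.inspect(timeout=timeout)
    return {
        "registered": inspector.registered(),
        "active": inspector.active(),
        "scheduled": inspector.scheduled(),
        "reserved": inspector.reserved(),
        "revoked": inspector.revoked(),
        "stats": inspector.stats(),
    }


def task_outcome(app, task_id):
    """Look up one task's state and result (requires a configured result backend)."""
    result = app.AsyncResult(task_id)
    return result.state, result.result
```

Each inspect call broadcasts to the workers and waits up to `timeout` seconds, so an entry can be `None` when no worker replies in time.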

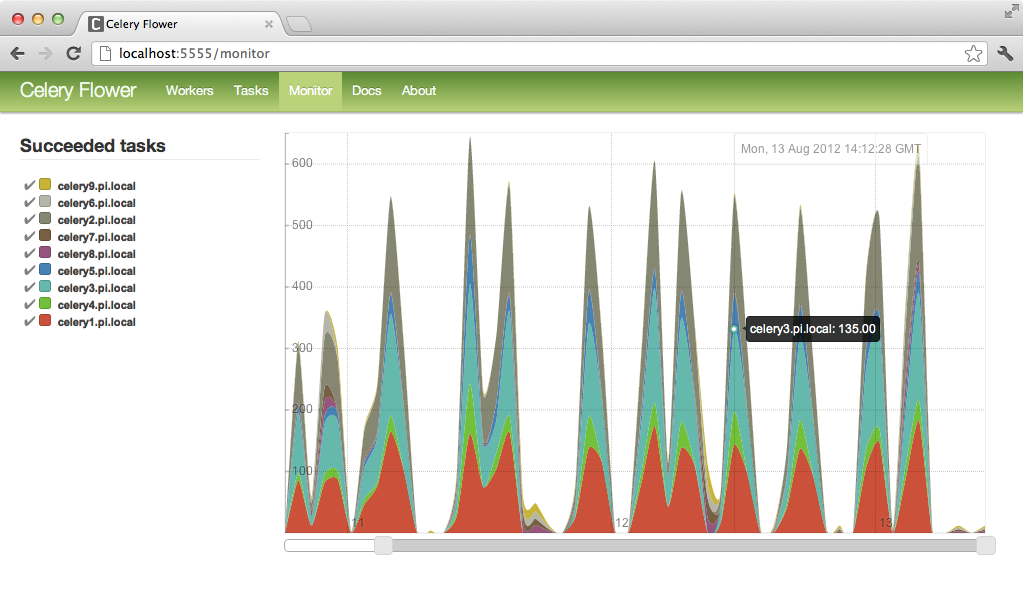

FLOWER

As this is a lot of information to process through logs (although completely doable), Celery has a real-time, web-based monitor called Flower. Not only can you actively monitor tasks and their current status, but you can also modify workers and tasks at run time (or before/after).

Features

Real-time monitoring using Celery Events

- Task progress and history

- Ability to show task details (arguments, start time, runtime, and more)

- Graphs and statistics

Remote Control

- View worker status and statistics

- Shutdown and restart worker instances

- Control worker pool size and autoscale settings

- View and modify the queues a worker instance consumes from.

- View currently running tasks

- View scheduled tasks (ETA/countdown)

- View reserved and revoked tasks

- Apply time and rate limits

- Configuration viewer

- Revoke or terminate tasks

HTTP API