RabbitmqHA

- Launchpad Entry: NovaSpec:rabbitmq-ha

- Created: 19 October 2010

- Last updated: 19 October 2010

- Contributors: Armando Migliaccio


Summary

This specification covers how Nova can support RabbitMQ configurations such as clustering and active/passive replication.

Release Note

In the Austin release, the Nova RPC mappings deal only with intermittent network connectivity. To support RabbitMQ clusters and active/passive brokers, Nova needs more advanced RPC mappings. These include strategies for handling the failure of a cluster node that holds queues, and master/slave failover for active/passive replication.

Rationale

Currently, the message queue configuration variables in nova/flags.py are tied to RabbitMQ. In particular, only one RabbitMQ host can be configured. For simplicity of deployment, Nova assumes that a single instance is up and running. If the RabbitMQ host fails (e.g. because of a disk or power failure), Nova components cannot send or receive messages until it recovers. To improve resiliency, RabbitMQ can run in an active/passive setup. In that setup, persistent messages written to disk on the active node can be recovered by the passive node if the active node fails. If high availability is required, active/passive HA can be achieved with shared disk storage, heartbeat/pacemaker, and possibly a TCP load-balancer in front of the service replicas. This solution is largely transparent to clients such as Nova API, Scheduler, and Compute, so few or no fail-over strategies are required in the Nova RPC mappings. However, it remains a bottleneck in the overall architecture and may require expensive hardware to run. It is therefore far from ideal.

Another option is RabbitMQ clustering. A RabbitMQ cluster (or broker) is a logical grouping of one or several Erlang nodes. Each node runs the RabbitMQ application, and all nodes share users, virtual hosts, queues, exchanges, bindings, etc. A RabbitMQ cluster becomes appealing in the context of virtual appliances, where each appliance is dedicated to a single Nova task (e.g. compute, volume, network, scheduler, api, ...) and also runs an instance of the RabbitMQ server. Clustering all these instances together would produce a single cluster spanning the whole deployment, which provides the following benefits:

- no single point of failure

- no requirement of expensive hardware

- no requirement of separate appliances/hosts to run RabbitMQ

- RabbitMQ becomes 'hidden' in the deployment

However, one problem may prevent this scenario from working. All data and state required for the operation of a RabbitMQ broker are replicated across all nodes, with one exception: message queues, which currently reside only on the node that created them. Queues are still visible and reachable from all nodes. If the node holding a queue fails, however, the queue becomes unavailable to the rest of the cluster, and messages published to it may be silently dropped (see the user story below). This is the main reason why clusters are discouraged for high availability and are used primarily to improve scalability. Nonetheless, clusters remain appealing if suitable client-side fail-over strategies are implemented.
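A client-side fail-over strategy could start with connection handling: the client tries each cluster node in turn instead of a single configured host. The following is a minimal sketch under stated assumptions, not Nova's implementation. `connect_with_failover`, `AllNodesDown` and the injected `connect` callable are hypothetical names. A real version would wrap an AMQP client library and read the host list from configuration, which nova/flags.py does not currently provide. Reconnecting alone is not sufficient: queues that lived on the failed node still have to be re-declared, and the user story below shows why this fails for durable queues.

```python
"""Sketch of client-side fail-over across RabbitMQ cluster nodes.

Hypothetical: the host list and the `connect` callable stand in for
whatever AMQP client and configuration a real implementation would use.
"""
import time
from typing import Callable, Iterable, List, Tuple, TypeVar

Conn = TypeVar("Conn")


class AllNodesDown(Exception):
    """Raised when no node in the cluster accepted a connection."""


def connect_with_failover(
    hosts: Iterable[str],
    connect: Callable[[str], Conn],
    max_rounds: int = 3,
    backoff: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> Tuple[str, Conn]:
    """Try each host in order; after a fully failed round, back off and retry.

    Returns (host, connection) for the first host whose `connect` succeeds.
    """
    host_list = list(hosts)
    if not host_list:
        raise ValueError("at least one host is required")
    errors: List[Tuple[str, Exception]] = []
    for round_no in range(max_rounds):
        for host in host_list:
            try:
                return host, connect(host)
            except ConnectionError as exc:  # node unreachable: try the next one
                errors.append((host, exc))
        if round_no < max_rounds - 1:
            # Exponential back-off between rounds (no sleep after the last one).
            sleep(backoff * 2 ** round_no)
    raise AllNodesDown(errors)


if __name__ == "__main__":
    def fake_connect(host: str) -> str:
        # Simulate R1 and R2 being down; only R3 accepts connections.
        if host in ("R1", "R2"):
            raise ConnectionError(f"{host} unreachable")
        return f"connection-to-{host}"

    print(connect_with_failover(["R1", "R2", "R3"], fake_connect))  # ('R3', 'connection-to-R3')
```

The `sleep` parameter is injected so that the retry policy can be tested without waiting. A deployment might also randomise the host order so that clients do not all pile onto the first node in the list.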

The user story below explains why RabbitMQ clusters cannot be used with the current Nova RPC mappings, and how the failure of a single node can have catastrophic consequences.

User stories

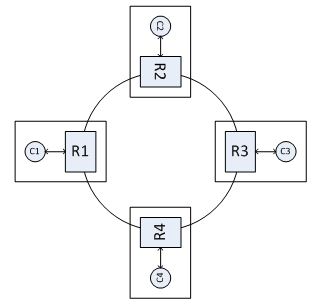

Nova uses topic-based queues, which are shared amongst components of the same type (e.g. ‘compute’ or ‘scheduler’). In a cluster, each queue is created on a single node only: the node on which it is first declared. Every later attempt to re-declare the queue is a no-op.

Consider the cluster configuration depicted in the figure above. R1, R2, R3 and R4 are part of a RabbitMQ broker, and each client Ci connects to the broker via its local instance Ri. Assume that C1 is an API component, that C2, C3 and C4 are Scheduler components, and that the shared queue is created on the RabbitMQ node R2. With this configuration and the current Nova codebase, C3 and C4 still consume from the queue created on R2.

However, a failure of R2 is catastrophic. Messages published by C1 will be dropped, because AMQP allows messages without a route to be discarded, so publishers may see their messages silently disappear. In addition, C3 and C4 will not detect any failure. Moreover, if the queues are durable, C3 or C4 cannot recreate them on another node, because the RabbitMQ implementation forbids this.
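The failure scenario in the user story can be replayed with the small model below. This is a toy model, not RabbitMQ's implementation. It encodes only the behaviour described in the text: a queue lives on the node that first declared it, re-declaration is a no-op, publishing to a queue on a failed node drops the message, and a durable queue cannot be recreated elsewhere. One part is an assumption inferred from the text rather than stated in it: a non-durable queue on a failed node may be recreated on the declaring node. `Cluster`, `Queue` and their methods are hypothetical names.

```python
"""Toy model of queue placement in a RabbitMQ cluster (user story above).

Not RabbitMQ's implementation: it only encodes the behaviour the
specification describes, so the R2 failure can be replayed.
"""
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Queue:
    name: str
    home: str      # node on which the queue was first declared
    durable: bool
    messages: List[str] = field(default_factory=list)


@dataclass
class Cluster:
    nodes: Set[str]
    down: Set[str] = field(default_factory=set)
    queues: Dict[str, Queue] = field(default_factory=dict)

    def declare(self, via: str, name: str, durable: bool = False) -> Queue:
        q = self.queues.get(name)
        if q is None:
            # First declaration: the queue lives on the node the client uses.
            q = self.queues[name] = Queue(name, via, durable)
        elif q.home in self.down:
            if q.durable:
                # Durable queue metadata still points at the failed node.
                raise RuntimeError(f"durable queue {name!r} is on failed node {q.home}")
            # Assumption: a non-durable queue may be recreated (empty) elsewhere.
            q = self.queues[name] = Queue(name, via, durable)
        # Otherwise re-declaring an existing, reachable queue is a no-op.
        return q

    def publish(self, name: str, body: str) -> bool:
        """Return True if the message was enqueued, False if silently dropped."""
        q = self.queues.get(name)
        if q is None or q.home in self.down:
            return False  # no route: AMQP allows the message to be discarded
        q.messages.append(body)
        return True

    def fail(self, node: str) -> None:
        self.down.add(node)


if __name__ == "__main__":
    cluster = Cluster(nodes={"R1", "R2", "R3", "R4"})
    cluster.declare("R2", "scheduler", durable=True)  # C2 declares first: lives on R2
    cluster.declare("R3", "scheduler", durable=True)  # C3 re-declares: no-op
    cluster.fail("R2")
    print(cluster.publish("scheduler", "msg from C1"))  # False: dropped silently
    try:
        cluster.declare("R3", "scheduler", durable=True)  # C3 tries to recover
    except RuntimeError as exc:
        print(exc)  # durable queue 'scheduler' is on failed node R2
```

In this model the publisher receives nothing more than a `False` return value. In the scenario described in the text, C1 is not told even that much.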

Assumptions

TBD

Design

TBD

Implementation

TBD

Code Changes

TBD